Abstract

The past 20 years have seen dramatic advances in cosmology, mostly driven by observations from new telescopes and detectors. These instruments have allowed astronomers to map out the large-scale structure of the Universe and probe the very early stages of its evolution. We seem to have established the basic parameters describing the behaviour of our expanding Universe, thereby putting cosmology on a firm empirical footing. But the emerging ‘standard’ model leaves many details of galaxy formation still to be worked out, and new ideas are emerging that challenge the theoretical framework on which the structure of the Big Bang is based. There is still a great deal left to explore in cosmology.

Main

The idea of the Big Bang has been with us for more than half a century. During that time, its character has changed dramatically in several ways. Until the early years of the twentieth century, cosmology was primarily a metaphysical subject. With the arrival of Einstein's general theory of relativity1, a sensible mathematical description2,3 of the space-time structure of the entire Universe emerged in the 1920s (Box 1). Spurred on by Hubble's discovery of the cosmic expansion4, theorists in the 1930s began to speculate about how the Universe got to be in its present state, what physical processes might have been involved in its evolution, and what it might have been like in the past. These lines of enquiry gradually brought new areas of science, such as nuclear and particle physics, into play. The resulting confrontation between theoretical physics and observational astronomy, within a context provided by Einstein's theory, has been essential to progress in this field. The latest phase of this story has been dominated by stunning observational developments that have strengthened a growing belief that answers have been obtained to many of the basic questions that cosmologists have been asking for decades. But this is nowhere near the end of the road for cosmology. Many important issues within the standard model remain to be resolved, especially in the area of galaxy formation. Moreover, the newest physics of string theory has thrown up a number of theoretical ideas that may initiate a revolution in our understanding of the early Universe and overthrow some of the central concepts of the Big Bang.

The Friedmann models (Box 1) and their associated interpretational framework have allowed the construction of an astonishingly successful broad-brush description of the evolution of the Universe that accounts for most available observational data. Besides the Hubble expansion4, the main evidence in favour of the Big Bang theory has been the discovery5, by Penzias and Wilson in 1965, of the cosmic microwave background (CMB) radiation6. Although the spectrum of this radiation was not well determined at first, the COBE satellite7 revealed an astonishingly accurate black-body behaviour with a temperature around 2.73 K. Using the Friedmann framework allows us to turn the clock back to an epoch when the Universe was about one-thousandth of its present size, at which point the radiation temperature was a thousand times higher. This would be hot enough to ionize atomic matter, which would then have scattered radiation well enough to be effectively opaque. Although it is very difficult to imagine how such an accurate black-body spectrum could have been made naturally at the low temperature at which it exists now, dense plasmas can maintain sufficient thermal contact with radiation to establish a thermal spectrum. The microwave background was last scattered by such material about 300,000 years after the Big Bang. Turning the clock back further, to an epoch less than a minute after the Big Bang, the matter's temperature is measured in billions of degrees. At this time, the Universe resembles a thermonuclear explosion during which protons and neutrons are rapidly baked into light nuclei in a heat bath provided by the background radiation. Gamow8, and Alpher and Herman9 showed, for example, that in such conditions helium can be created much more easily than is accomplished by stellar hydrogen burning and with a much lower contamination of heavier elements. Deuterium, helium-3 and lithium-7 are also made in trace quantities during the Big Bang.
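The temperature scaling used in this argument can be written out as a short worked equation. This is a sketch that assumes the scale factor a is normalized to 1 today and that the radiation temperature simply scales inversely with a; the symbol T_0 for the present-day temperature is introduced here for illustration.

```latex
T(a) = \frac{T_0}{a}, \qquad T_0 \approx 2.73\,\mathrm{K}
\quad\Longrightarrow\quad
T\!\left(a = 10^{-3}\right) \approx 2.73 \times 10^{3}\,\mathrm{K}
```

A temperature of a few thousand kelvin is the regime in which atomic hydrogen is ionized, which is why the Universe is expected to have been opaque at that epoch.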

The production of the CMB and the synthesis of helium take place under conditions that are achievable in laboratory experiments. To go further into the early Universe, one needs to adopt more speculative theoretical considerations. Earlier than about a microsecond after the Big Bang, hadrons are split up into their constituent quarks and gluons. At about 10⁻¹¹ seconds, the distinction between electromagnetic and weak nuclear reactions disappears with the restoration of electroweak symmetry, which is broken at low energies. Earlier still, the matter contents of the Universe are expected to be described by more speculative particle physics, such as grand unified theories (GUTs), so cosmologists sought ways of incorporating ideas from quantum field theory into the framework furnished by general relativity. These forays into particle cosmology have scored numerous successes, such as the idea of supersymmetric dark matter and (perhaps the most influential theoretical idea10,11,12,13 of the past 25 years) cosmic inflation (Box 2). Successful though inflation has been, it does represent a kind of half-way house on the road to a fully consistent description of the very early Universe. The marriage of low-energy effective quantum field theory with classical general relativity is an uncomfortable one, and in any case no fundamental scalar has yet been identified as a compelling candidate for the inflaton field or the form of its interaction potential.

More recent attempts to fuse quantum theory with gravity have focused on string theory, which is framed in spaces of higher dimensionality than the four used in the standard framework. The extra dimensions may be wrapped up on a very small scale so as to be unobservable, an old idea dating back to Kaluza14 and Klein15. On the other hand, in the latest ‘braneworld’ models, the extra dimensions can be large but we are confined to a four-dimensional subset of them (a ‘brane’). In one version of this idea, dubbed the ‘ekpyrotic universe’16,17, the origin of our observable Universe lies in the collision between two branes floating around in a higher-dimensional ‘bulk’. Other models are possible that result in the modification of the Friedmann equations at early times18. These steps towards string cosmology are controversial, but they do motivate a radically different interpretation of early Universe physics. In these models, the low-energy Universe is described by an effective quantum field theory with the configuration of the vacuum state being controlled by the extra dimensions. In these theories there is a myriad of possible vacua, each with its own set of fundamental constants, different gauge hierarchies, and so on. It is possible that these ideas may also lead to cosmological models that avoid the initial singularity that occurs in the standard Big Bang, but this also remains an open question.

One of the concrete things that inflation has achieved is to bring us closer to an explanation of how galaxies and large-scale structure came into being. So far we have focused on a broad-brush description of the cosmos in terms of models obeying the cosmological principle. In reality, our Universe is not at all smooth and featureless. It contains stars, galaxies, clusters and superclusters of galaxies, and a vast amount of complexity. The basic idea for the origin of cosmic structure is simple. Whereas a perfectly smooth, self-gravitating fluid will remain homogeneous for ever, any irregularities — however slight — will be amplified by the action of gravity. This process is exponentially fast if the starting configuration is static19, but in an expanding universe20 it must overcome the expansion in order to initiate collapse. This means that cosmological structure formation is a relatively difficult process, which requires significant initial fluctuations to get it going. In inflationary models, quantum fluctuations in the scalar field driving the expansion generate adiabatic density perturbations with particularly simple statistical properties21,22 that ‘seed’ cosmic structure formation. They also generate an as-yet undetected background of primordial gravitational waves23. Whereas the early stages of structure formation seem to be comprehensible, the hydrodynamical and radiative processes by which collapsed lumps turn into stars and galaxies are so complicated that even the sustained efforts of the most powerful supercomputers have left many questions open.

Cosmology by numbers

The Big Bang ‘theory’ is seriously incomplete. For the usual dust or radiation equations of state, the Universe is always decelerating. As it is expanding now, it must have been expanding more quickly in the past. There is therefore a stage, at a finite time in the past, at which the scale factor must shrink to zero and the energy density becomes infinite. This is the Big Bang singularity, at which the whole framework falls apart. We therefore have no way of setting the initial conditions that would allow us to obtain a unique solution to the system of equations (4)–(6) in Box 1. There is an infinitely large family of possible universes, so to identify which (if any) is correct we have to use observations rather than pure reason.

The simplest way to see how this is done is to rewrite the Friedmann equation (equation (5)) in dimensionless form:

$$\Omega_m + \Omega_\Lambda + \Omega_k = 1 \qquad (1)$$

The different terms in this equation relate to the different terms in equation (5). The ordinary matter density is expressed via Ωm=8πGρ/3H², the vacuum energy is ΩΛ=Λc²/3H² and the curvature term is Ωk=−kc²/a²H². (Here G is the gravitational constant, c is the velocity of light, Λ is the cosmological constant, and H is the expansion rate given in terms of the scale factor a by H=(1/a)(da/dt); the density ρ, curvature k and scale factor a are defined in Box 1.) Note that Hubble's constant is involved in these definitions, so in what follows I will take H0=100h km s⁻¹ Mpc⁻¹ so that h is also dimensionless. These dimensionless parameters can in principle (and, now, in practice) be measured. Relativistic particles (principally photons and light neutrinos) also contribute to equation (1), but their effect is negligible at late times. Cosmological nucleosynthesis provides a stringent constraint on the contribution of baryonic material (protons and neutrons), Ωb, to the overall density of non-relativistic matter, Ωm. For example, a high baryon abundance would result in more helium than observed, and vice versa for a low abundance. A value of Ωbh²≈0.02 is required to match the observations.
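As a rough illustration of how these dimensionless parameters are used, the sketch below evaluates the expansion rate relative to its present value. It assumes the closure relation Ωm + ΩΛ + Ωk = 1 that follows from the definitions above, the standard dilution of matter as a⁻³ and curvature as a⁻², and a redshift z defined by 1 + z = 1/a. The function names are hypothetical, and radiation is neglected, as the text notes is reasonable at late times.

```python
import math


def omega_k(omega_m, omega_lambda):
    """Curvature term fixed by the closure relation Om + OL + Ok = 1 (assumed)."""
    return 1.0 - omega_m - omega_lambda


def expansion_rate(z, omega_m, omega_lambda):
    """Return H(z)/H0 for matter, curvature and a constant Lambda.

    Assumes matter dilutes as (1+z)^3 and curvature as (1+z)^2;
    radiation is ignored.
    """
    ok = omega_k(omega_m, omega_lambda)
    return math.sqrt(
        omega_m * (1.0 + z) ** 3 + ok * (1.0 + z) ** 2 + omega_lambda
    )


if __name__ == "__main__":
    for z in (0.0, 0.5, 1.0, 3.0):
        print(z, expansion_rate(z, omega_m=0.3, omega_lambda=0.7))
```

By construction the function returns 1 at z = 0 for any parameter choice, which is just the closure relation restated.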

In 1994, G. Ellis and I wrote a review24 in which we focused on all the available evidence about Ωm. It is possible to estimate the mean density of the Universe (which is basically what this parameter measures) in many ways, including galaxy and cluster dynamics, galaxy clustering, large-scale galaxy motions, gravitational lensing, and so on. At the time, many of these ‘weighing’ techniques were in their infancy but, on the basis of what seemed to be the most robust evidence, we concluded that the evidence favoured a value 0.2<Ωm<0.4. This conclusion still requires that most of the matter in the Universe be non-baryonic, perhaps in the form of some relict of the early Universe produced at such high energies that it has been impossible to identify in accelerator experiments. But whatever it is, there is not enough of it to close the Universe (that is, to make Ωm=1). Our conclusion was somewhat controversial at the time: most theorists, but not all25, then preferred a universe with Ωm=1 because inflation suggested that the spatial sections should be flat (or very close to it; Box 2). In the absence of a cosmological constant, or dark energy, Ωm=1 is needed to make a flat universe. Our conclusion about the value of Ωm remains valid, but the past 10 years have seen two spectacular advances that we did not foresee at all, because there was no direct evidence at the time.

In our 1994 paper24, we devoted only a very small space to the field of ‘classical cosmology’. The idea of this approach is to use observations of distant objects (such as sources seen at appreciable redshifts) to directly probe the expansion rate and geometry of the Universe. For example, owing to the focussing effect of spatial curvature, a rod of fixed physical length would subtend a smaller angle when seen through an open universe (with k<0) than in a closed universe (with k>0; see Box 1). A standard light source would likewise appear fainter in a universe that is undergoing accelerated expansion than in a decelerating universe. The difficulty is that standard sources are difficult to come by. The Big Bang universe is an evolving system, so that sources seen at high redshift are probes of an earlier cosmic epoch. Young galaxies are probably very different to mature ones, so they cannot be used as standard sources. Classical cosmology consequently fell into disrepute until, just a few years ago, it underwent a spectacular renaissance.

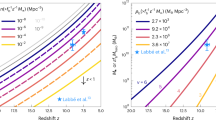

Dark energy

Two major programmes, the Supernova Cosmology Project26 and The High-z Supernova Search27, exploited the behaviour of a particular kind of exploding star, type Ia supernovae, as ‘standard candles’. The special thing about this kind of supernova is that it is thought to result from the thermonuclear detonation of a carbon-oxygen white dwarf. These events are themselves roughly standard explosions, because the mass involved is always close to the Chandrasekhar mass, but they are not perfectly regular. The breakthrough was the discovery of an empirical correlation between the peak luminosity of the event and the temporal evolution of its subsequent fading. This correlation can be used to reduce the scatter from event to event. The results from both teams seem very conclusive. The measurements indicate that high-redshift supernovae are indeed systematically fainter than one would expect on the basis of extrapolation from similar low-redshift sources using decelerating world models. The results are sensitive to a complicated combination of Ωm and ΩΛ through the integral along the line of sight ds²=0 from the source to the observer through the expanding space-time, as described in Box 1. These observations strongly favour accelerating world models. This, in turn, strongly suggests the presence of a non-zero vacuum energy. More recently, evidence has emerged that the highest-redshift supernovae are probing an epoch during which the Universe was decelerating, showing that the onset of cosmic acceleration was relatively recent28,29 (Fig. 1). This is what one would expect, as the cosmological constant is constant, whereas the contribution of matter and radiation to the total energy budget should have been higher in the past.

The vertical axis represents distance modulus (m-M), which is a measure of the dimming of the source due to its distance and cosmological expansion. The horizontal axis is redshift, z. The results are plotted in terms of residuals (Δ) obtained by subtracting off the expected behaviour for an empty, undecelerated universe. If this were the correct cosmological model, the results should lie on the straight dashed line. The most recent data (Autumn, 99) are plotted as filled circles with their standard errors; older data are shown as open circles. The diagram also shows the behaviour for three different cosmological models (solid lines): from top to bottom these are the ‘concordance model’, a model with Ωm=0.3 but Λ=0, and a model with Ωm=1. The data seem to be closest to the concordance model, in which the Universe accelerates at low redshifts but was decelerating at earlier times.
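The residuals described in the caption can be sketched numerically. The code below is a minimal illustration, not the analysis used by either supernova team: it assumes the standard luminosity-distance relation for Friedmann models with matter, curvature and a constant Λ, works in units of c/H0 (which cancel in the residual), and uses a simple Simpson's-rule integration. All function names are hypothetical.

```python
import math


def e_of_z(z, omega_m, omega_lambda):
    """H(z)/H0 with curvature fixed by Om + OL + Ok = 1 (radiation neglected)."""
    omega_k = 1.0 - omega_m - omega_lambda
    return math.sqrt(
        omega_m * (1 + z) ** 3 + omega_k * (1 + z) ** 2 + omega_lambda
    )


def comoving_distance(z, omega_m, omega_lambda, steps=1000):
    """Line-of-sight comoving distance in units of c/H0 (Simpson's rule).

    `steps` must be even.
    """
    width = z / steps
    total = 1.0 / e_of_z(0.0, omega_m, omega_lambda)
    total += 1.0 / e_of_z(z, omega_m, omega_lambda)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        total += weight / e_of_z(i * width, omega_m, omega_lambda)
    return total * width / 3.0


def luminosity_distance(z, omega_m, omega_lambda):
    """Luminosity distance in units of c/H0, including spatial curvature."""
    omega_k = 1.0 - omega_m - omega_lambda
    d_c = comoving_distance(z, omega_m, omega_lambda)
    if omega_k > 1e-8:        # open universe
        root = math.sqrt(omega_k)
        d_m = math.sinh(root * d_c) / root
    elif omega_k < -1e-8:     # closed universe
        root = math.sqrt(-omega_k)
        d_m = math.sin(root * d_c) / root
    else:                     # flat universe
        d_m = d_c
    return (1 + z) * d_m


def modulus_residual(z, omega_m, omega_lambda):
    """Distance-modulus difference (magnitudes) relative to an empty universe."""
    model = luminosity_distance(z, omega_m, omega_lambda)
    empty = luminosity_distance(z, 0.0, 0.0)
    return 5.0 * math.log10(model / empty)


if __name__ == "__main__":
    models = {"concordance": (0.3, 0.7), "open": (0.3, 0.0), "Om=1": (1.0, 0.0)}
    for name, (om, ol) in models.items():
        print(name, [round(modulus_residual(z, om, ol), 3) for z in (0.2, 0.5, 1.0)])
```

A positive residual means the source appears fainter than it would in an empty universe. At z = 0.5 the three models come out in the same top-to-bottom order as the curves described in the caption.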

In 1994 there was no direct evidence either for flat space or for Λ. One major concern, which at the time was clouded by large observational uncertainties, was whether the age of the oldest stars (in globular clusters) was consistent with the age of the Universe based on the cosmic dynamics (Box 1) and the measured value of the Hubble constant. This was a major problem for cosmologies with Ωm=1, because such models have ages of 6.5h⁻¹ Gyr, requiring h=0.5 to be compatible even with a conservative lower limit on the ages of globular cluster stars. The evidence for Λ from supernova observations has also relaxed the pressure on this issue; accelerating world models are older than decelerating ones for the same value of h. Refinements of the cosmic distance scale30 and globular cluster ages31 have subsequently brought observation and theory into good agreement, and provided further evidence for the Big Bang framework.
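The age argument can be checked with a few lines of arithmetic. The sketch below assumes the standard closed-form ages for spatially flat models: t0 = 2/(3H0) for Ωm = 1, and t0 = [2/(3H0√ΩΛ)] asinh(√(ΩΛ/Ωm)) when a cosmological constant makes up the balance. The constant for the Hubble time and the function names are introduced here for illustration.

```python
import math

# 1/H0 in Gyr for H0 = 100 km/s/Mpc (i.e. h = 1); approximate value.
HUBBLE_TIME_GYR = 9.778


def age_flat_matter_only(h):
    """Age in Gyr of a flat universe with Omega_m = 1 and no Lambda."""
    return (2.0 / 3.0) * HUBBLE_TIME_GYR / h


def age_flat_lambda(h, omega_m):
    """Age in Gyr of a flat universe with Omega_Lambda = 1 - Omega_m > 0."""
    omega_lambda = 1.0 - omega_m
    factor = (2.0 / (3.0 * math.sqrt(omega_lambda))) * math.asinh(
        math.sqrt(omega_lambda / omega_m)
    )
    return factor * HUBBLE_TIME_GYR / h


if __name__ == "__main__":
    print("Omega_m = 1, h = 1:    ", age_flat_matter_only(1.0))
    print("Omega_m = 1, h = 0.72: ", age_flat_matter_only(0.72))
    print("Omega_m = 0.3, h = 0.72:", age_flat_lambda(0.72, 0.3))
```

For the same h, the model with Λ comes out several gigayears older than the Ωm = 1 model, which is the easing of the age problem that the paragraph describes.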

In the language of the Friedmann models, accelerating universes require the existence of a cosmological constant, as the density and pressure terms on the right-hand side of equation (6) are positive for ‘normal’ forms of matter. These terms arise because Einstein modified the left-hand side of equation (4) by the simple addition of a term involving Λ. Like the zero-point vacuum fluctuations discovered by Casimir32, it was realized that one-loop quantum fluctuations in ‘empty’ space should behave exactly in this way, so the natural description of the cosmological constant was as ‘vacuum energy’ or ‘dark energy’; that is, as a sort of quantum correction to the energy-momentum tensor.

This exciting connection between microscopic quantum physics and large-scale cosmic dynamics is one of the biggest unexplained mysteries in modern science33. The problem is that the vacuum energy is divergent, so should utterly dominate the curvature and expansion rate of the Universe. If the divergences are cut off at the Planck energy (hc⁵/G)^(1/2)≈10¹⁹ GeV, the resulting energy density is 123 orders of magnitude larger than observations allow. A cut-off at the quantum chromodynamics scale would result in a discrepancy of 40 orders of magnitude, which is still a long way off. It seems reasonable to suppose that such a drastic problem has a very fundamental answer. Perhaps there is some reason why the vacuum energy should be exactly zero. Supersymmetric particle theories suggest that this might be possible owing to a cancellation of fermionic and bosonic contributions. However, the cosmological observations suggest this is not correct: there is a non-zero cosmological constant, but it is very small by particle physics standards. In order to get a vacuum energy consistent with the latest data, the cut-off would have to be at the meV scale. Could the right explanation be a supersymmetric theory involving a fundamental energy scale this small?
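The size of the mismatch can be estimated to order of magnitude. The sketch below assumes that a cut-off at energy E gives a vacuum energy density of order E⁴ in natural units, with all numerical prefactors ignored, and compares it with the observed dark-energy density ΩΛρ_crit. The constants are approximate textbook values and the function names are hypothetical; the exact number of orders depends on the prefactors and on the parameter values adopted.

```python
import math

HBAR_C_EV_CM = 1.973e-5        # hbar*c in eV cm (approximate)
RHO_CRIT_EV_CM3 = 1.054e4      # critical energy density in eV/cm^3 for h = 1
PLANCK_ENERGY_EV = 1.22e28     # about 1.22e19 GeV


def vacuum_energy_scale_ev(omega_lambda, h):
    """Energy scale E (in eV) such that E^4 equals the observed vacuum density."""
    rho_ev_cm3 = omega_lambda * h ** 2 * RHO_CRIT_EV_CM3
    rho_ev4 = rho_ev_cm3 * HBAR_C_EV_CM ** 3   # convert to natural units, eV^4
    return rho_ev4 ** 0.25


def discrepancy_orders(cutoff_ev, omega_lambda=0.7, h=0.72):
    """log10 of (cutoff^4 / observed vacuum energy density)."""
    scale = vacuum_energy_scale_ev(omega_lambda, h)
    return 4.0 * math.log10(cutoff_ev / scale)


if __name__ == "__main__":
    print("vacuum scale (eV):", vacuum_energy_scale_ev(0.7, 0.72))
    print("orders of magnitude for a Planck cut-off:",
          discrepancy_orders(PLANCK_ENERGY_EV))
```

With these inputs the observed vacuum energy corresponds to a scale of a few meV, and the Planck cut-off overshoots by more than 120 orders of magnitude, in line with the figures quoted in the text.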

The realization that our Universe may be accelerating even in its present old age has resulted in a different manifestation of this idea, generically known as quintessence34,35,36. In these models, the cosmological constant (or vacuum energy) is not constant but dynamical, perhaps produced by an evolving scalar field at much lower energies than the GUT scales probably involved in inflation (Box 2). In such models, one can parametrize the behaviour of the field by an effective time-dependent equation of state relating the pressure p and the energy density ρc² in the form p=w(t)ρc². The case w=−1 corresponds to a classical Λ-term, but any value w<−1/3 would allow acceleration. Current observations do not allow discrimination between the various possibilities, but that may change in the future. As in the case of primordial inflation, this field may emerge as part of the low-energy effective theory arising from superstring theory, although in this case too the energy scale needs to be explained.
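The threshold w<−1/3 can be seen from a short derivation. This sketch assumes the acceleration equation takes its standard form for a single fluid with no separate Λ term (the form of equation (6) in Box 1 is not reproduced here, so this is an assumption), and it treats w as constant for the density scaling in the last line.

```latex
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\left(\rho + \frac{3p}{c^{2}}\right),
\qquad p = w\rho c^{2}
\;\;\Longrightarrow\;\;
\frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\,\rho\,(1 + 3w)

\ddot{a} > 0 \iff 1 + 3w < 0 \iff w < -\tfrac{1}{3}

\rho \propto a^{-3(1+w)} \quad (\text{constant } w), \qquad
w = -1 \;\Rightarrow\; \rho = \text{constant}
```

The last line shows why w=−1 behaves like a classical Λ-term: the energy density does not dilute as the Universe expands.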

The world after WMAP

The supernova searches were a fitting prelude to the stunning results that emerged from CMB studies that provided definitive evidence for spatial flatness, culminating in the Wilkinson Microwave Anisotropy Probe37 (WMAP) measurements in 2003. The CMB is perhaps the ultimate vehicle for classical cosmology. In looking back to a period when the Universe was only a few hundred thousand years old, one is looking across most of the observable Universe. This enormous baseline makes it possible to carry out exquisitely accurate surveying. Although the microwave background is very smooth, the COBE satellite did detect small variations in temperature across the sky. These ripples are caused by acoustic oscillations in the primordial plasma. Whereas COBE was only sensitive to long-wavelength waves, WMAP, with its much higher resolution, could probe the higher-frequency content of the primordial roar. The pattern of fluctuations across the sky displays information about characteristic frequencies of the modes of oscillation of the cosmic fireball. The spectrum of the temperature variations displays peaks and troughs38 that contain fantastically detailed data about the basic cosmological parameters described above (and much more); see Fig. 2.

The fluctuations are described in terms of fluctuation amplitude Cl (y-axis) as a function of spherical harmonic multipole l (x-axis). The upper panel shows the WMAP data as well as complementary results from the Cosmic Background Interferometer (CBI) and the Arcminute Cosmology Bolometer Array (ACBAR), as well as the best-fitting concordance model (ΛCDM).
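For reference, the quantities named in the caption are conventionally defined by expanding the temperature pattern in spherical harmonics. The expressions below are the standard definitions rather than anything specific to the WMAP analysis; the rough conversion between multipole and angular scale is an order-of-magnitude guide only, and plots of this kind often show l(l+1)C_l/2π rather than C_l itself.

```latex
\frac{\Delta T}{T}(\theta,\phi) = \sum_{l}\sum_{m=-l}^{l} a_{lm}\,Y_{lm}(\theta,\phi),
\qquad
C_l = \left\langle |a_{lm}|^{2} \right\rangle,
\qquad
\theta \sim \frac{180^{\circ}}{l}
```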

The WMAP data have been greeted with a kind of euphoria by cosmologists as marking the dawn of a new era of ‘precision cosmology’, but perhaps the most striking thing about these results is that they agreed so well with a plethora of previous observations. The process started with COBE39 but there were, for example, strong indications of what WMAP would subsequently confirm in the earlier balloon-based BOOMERANG40,41 and MAXIMA42,43,44 experiments, both separately and in combination with each other and with COBE45. It is also important to acknowledge the continuing role of smaller-scale interferometric observations, such as CBI46 and DASI47 (especially in the detection of CMB polarization48). These CMB-only constraints were also strengthened by combination with galaxy clustering information49,50. However, because WMAP was able to map the entire sky rather than small patches, the statistical accuracy it achieved over a large range of angular scales brought into the light what had previously been lurking in a thicket of error bars.

Even the preliminary first-year data yield stringent constraints51 on allowed world models, such as Ωmh²=0.14±0.02, Ωbh²=0.024±0.001, h=0.72±0.05 and Ωk=−0.02±0.02. These can be strengthened still further by combining the latest WMAP data with supernovae and galaxy clustering measurements52. These results are clearly consistent with flat space, and thus fit naturally within the inflationary paradigm, although there is a strange 30%–70% split in the overall energy budget of the Universe, which has yet to be explained by fundamental theory.
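The quoted central values can be converted into the density parameters themselves with a few lines of arithmetic. The sketch below uses only the central values given above, ignores the quoted uncertainties and their correlations, and obtains ΩΛ from the closure relation Ωm + ΩΛ + Ωk = 1; the function name is hypothetical.

```python
def density_parameters(omega_m_h2, omega_b_h2, h, omega_k):
    """Convert physical densities (Omega * h^2) into density parameters.

    Omega_Lambda is inferred from Om + OL + Ok = 1 (radiation neglected).
    """
    omega_m = omega_m_h2 / h ** 2
    omega_b = omega_b_h2 / h ** 2
    return {
        "omega_m": omega_m,
        "omega_b": omega_b,
        "omega_lambda": 1.0 - omega_m - omega_k,
        "baryon_fraction_of_matter": omega_b_h2 / omega_m_h2,
    }


if __name__ == "__main__":
    # Central values quoted in the text; uncertainties are ignored here.
    result = density_parameters(0.14, 0.024, 0.72, -0.02)
    for name, value in result.items():
        print(f"{name}: {value:.3f}")
```

The central values give a matter fraction a little under 30% with the remainder in vacuum energy, and baryons make up roughly a sixth of the matter.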

The data WMAP has yielded are indeed spectacular, but there are some issues that remain to be resolved. For example, there is a growing realization that the WMAP data contain some strange features53,54,55, perhaps resulting from some form of foreground contamination56. Our own galaxy pollutes the CMB as it is itself a source of dust, synchrotron and free–free emission, some of which appears at microwave frequencies. These foregrounds must be modelled and corrected for before one can see the primordial radiation. This is a difficult task, and it remains to be seen how accurately foreground removal can be accomplished in practice with the relatively simple techniques being used to date.

It seems unlikely that the residual foreground is sufficiently important to affect the determination of the basic cosmological parameters, but it may disguise more subtle signatures of early Universe physics. One suggestion, for example, has been that the relative lack of large-scale power in the WMAP spectrum may be a consequence of a non-trivial topology for our Universe57. This suggestion has been challenged58, but it is nevertheless a timely reminder that Einstein's theory (Box 1) is essentially local and provides no prescription for the large-scale connectivity of space. We could, in principle, be living in a flat universe which is not infinite, but rather finite with a topology like that of a torus.

Future space- and ground-based CMB experiments will focus increasingly not on its temperature variations but on its polarization. The microwave sky is partially polarized, as expected from basic scattering theory. WMAP detected a significant cross-correlation between temperature and polarization fluctuations at a surprisingly high level59. This has been interpreted as providing evidence for additional scattering of CMB light owing to the recent reionization of matter, although it must be stressed it is a preliminary finding. If a relatively weak but polarized source of foreground contamination were in the CMB temperature maps, it might generate a spurious cross-correlation. If clean, detailed maps of the polarization pattern can be made, however, it should prove possible to identify the primordial gravitational waves expected to be produced by inflation60.

Structure formation

So far the story has focused on the basic parameters describing an idealized homogeneous and isotropic universe. Of course, our Universe is not really like that. It contains stars, galaxies, clusters and superclusters of galaxies, and a vast amount of messy complexity. Where did this structure come from? The basic idea dates back as far as Jeans19 and, in a cosmological context, Lifshitz20. A perfectly smooth, self-gravitating fluid with the same density everywhere will stay homogeneous for all time. But any irregularities, however slight, will be amplified by the action of gravity. A small blob with higher than average density will tend to attract material from its surroundings, getting denser still. As it gets denser it attracts yet more matter. The situation is therefore unstable, and the result of this instability is that the blob will turn into a highly concentrated clump held together by its own internal gravitational forces.

Gravitational instability is exponentially fast if the starting configuration is static, but in an expanding universe local gravitational effects must first work to overcome the tendency of the lump to expand before they can make it contract. The effect of this is that the Universe displays a slower growth of structure, like a power-law function of cosmic time rather than an exponential. The rate of clustering also depends on the overall density of matter, that is, on Ωm, but not on Λ as the dark energy is assumed not to cluster. The more matter there is, therefore, the faster inhomogeneities grow. Besides inflation, this provided an additional motivation for some cosmologists of the 1980s to lean towards a critical density, that is, Ωm=1. Given the tight limits on the allowed baryon abundance, this could only be achieved with the aid of an overwhelmingly predominant distribution of dark non-baryonic material. After pioneering work in numerical simulations of clustering growth, it became clear that the best kind of matter to assist gravitational clustering was cold dark matter (CDM), the required property being that it is slow-moving and easy to collect into structures61,62. The precise nature of this stuff is still unclear, although just about any massive weakly interacting relict of the primordial fireball would do. Supersymmetric theories include fermionic counterparts of the gauge bosons (and the Higgs). If one such particle has a lifetime larger than the age of the Universe, then that could be the dark matter.
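The contrast between exponential and power-law growth can be made explicit with the standard linear perturbation equation. This is a sketch under stated assumptions: a pressureless fluid, small density contrast δ on scales well inside the horizon, and, for the expanding case, a critical-density universe with Ωm=1 in which a ∝ t^{2/3}. The symbols δ and ρ̄ (mean density) are introduced here for illustration.

```latex
\ddot{\delta} + 2H\dot{\delta} = 4\pi G\bar{\rho}\,\delta

\text{Static background } (H = 0):\quad
\delta \propto \exp\!\left(\pm\sqrt{4\pi G\bar{\rho}}\;t\right)

\text{Expanding, } \Omega_m = 1:\quad
H = \frac{2}{3t},\;\; 4\pi G\bar{\rho} = \frac{2}{3t^{2}}
\;\;\Longrightarrow\;\;
\delta \propto t^{2/3} \propto a
\quad\text{or}\quad
\delta \propto t^{-1}
```

The 2Hδ̇ term acts as a friction contributed by the expansion, which is what turns the exponential instability of the static case into slow power-law growth.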

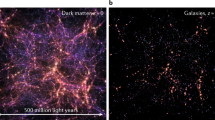

For a while, the ‘standard’ CDM model enjoyed great success, but it is now known to be at odds not just with the basic parameter determinations (which require Ωm<1) but also with the statistics of large-scale galaxy clustering. Adoption of the ‘concordance’ parameter set furnished by WMAP and other measurements gives a ‘ΛCDM’ model63 that seems to reproduce the large-scale features of the Universe very well (Fig. 3). In this model, the dark energy does not play a major role in the gravitational instability because it does not cluster. The Λ component is important, however, because it causes acceleration that prevents the extremely rapid evolution expected at very late times in models with Ωm=1.

The figure shows the redshift evolution of four variants on the theme of CDM; the concordance ΛCDM model is at the top, followed by the 1980s vintage standard CDM (SCDM; Ωm=1); the τCDM and OCDM models are variants of the basic theory with, respectively, a high density but modified initial power spectrum, and a low density without a cosmological constant. The only one of these models that fits available galaxy clustering measurements and the other cosmological constraints is ΛCDM. The colour scale indicates the density of dark matter, with dark regions representing low density.

There are good theoretical reasons for thinking that galaxies, in statistical terms, roughly trace the mass distribution on large scales up to a bias factor64, which can be estimated from the surveys. This is largely borne out by comparison with the latest large redshift surveys, such as the 2dF Galaxy Redshift Survey65,66,67,68 (2dFGRS; Fig. 4) and the Sloan Digital Sky Survey (SDSS)69,70, whose statistical properties are in good general accord with the gravitational instability picture. The power spectrum of galaxy clustering contains a relict of the acoustic oscillations seen in the CMB angular fluctuations, so comparing galaxy clustering measurements with, for example, the WMAP data, provides a powerful additional consistency check on these models. An independent probe of the bias is also furnished by gravitational lensing measurements, especially using a technique called weak lensing71, which provides a direct handle on the underlying mass distribution.

The angular extent of the map is given in right ascension (h), with 24h being 360°. Each piece of the map spans about 75° across the sky in a narrow strip of declination. This survey contains approximately 250,000 galaxies, selected in blue light. Detailed statistical analysis finds good agreement with the ΛCDM model, at least on large scales.

On the scale of individual galaxies, however, things are not so clear. In a nutshell, the idea is that the CDM component begins to cluster in the early Universe, even before recombination (when the baryonic matter is still coupled strongly to radiation). Pools of CDM collect, generating gravitational potential wells into which the gas is subsequently drawn. These pools take the form of extended invisible haloes within which galaxies subsequently form. But the processes involved are complex, and it is hard to predict with certainty what happens inside individual haloes. The gas first heats up, so that it is in gravitational equilibrium with the halo. It then cools through atomic processes and collapses into the centre, fragmenting and forming stars61. These stars may heat the gas up again, perhaps even expelling some of it from the halo. While this is happening, haloes are continually merging and disrupting each other as gravity generates a hierarchy of structures from the bottom up. While there are also computer codes that can follow the behaviour of baryonic gas alongside the dark matter, there is no available technique for handling star formation, magnetic fields, dust and all the paraphernalia of star formation with similar precision. The best that has been done so far involves so-called semi-analytic models, which use a set of simple ad hoc rules to specify when star formation occurs within haloes during this hierarchical process72,73,74,75. This approach has achieved many successes, but also has many shortcomings.

One of the basic problems concerns the distribution of dark matter in galaxies. High-resolution numerical experiments76 suggest that the density profile ρ(r) of dark matter haloes, as a function of distance r from the halo centre, is expected to follow the universal form:
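
A form consistent with the symbols defined in the text, and with the profile of ref. 76 in the case γ = 1, is the generalized Navarro-Frenk-White profile. It is given here as an assumed reconstruction of equation (2):

$$
\rho(r) = \frac{A}{(r/r_{s})^{\gamma}\,(1 + r/r_{s})^{3-\gamma}} \qquad (2)
$$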

where A is a normalization parameter and rs is a characteristic scale related to the mass and degree of concentration of the halo. The slope γ is measured in simulations to be γ≈1.2±0.3. These experiments involve only gravity and cold collisionless matter, but even these results are somewhat controversial77,78,79,80,81,82.

Observations seem to be in disagreement with the predictions of equation (2), although this too is controversial83,84,85. The concentration of CDM seems to be low in galaxies with a low surface brightness of stellar emission. The steep ‘cusp’ in the centre is not usually seen in such galaxies: the core seems to have a flatter slope. There also seems to be a puzzling dearth of dark matter in some elliptical galaxies86. Moreover, the dark matter in galaxy haloes is expected to be highly clumped, leading to large numbers of dwarf haloes. If these objects formed stars as efficiently as ‘normal’ galaxies, then they would exceed the observed number of dwarf galaxies by a large factor. The CDM clumpiness is also expected to cause baryons to lose angular momentum by dynamical friction as they collapse. Galaxy disks are then predicted to be about a factor of five smaller than observed. All these conclusions may be affected to a greater or lesser extent by problems with numerical resolution.
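
The contrast between a central cusp and a flatter core can be illustrated with a short numerical sketch. It assumes the generalized Navarro-Frenk-White form ρ(r) = A / [(r/r_s)^γ (1 + r/r_s)^(3−γ)], a common parametrization that is consistent with the symbols A, r_s and γ used above but is not confirmed here as the exact form of equation (2). The function names and default values are illustrative.

```python
import math


def halo_density(r, A=1.0, r_s=1.0, gamma=1.2):
    """Assumed generalized NFW profile: A / [(r/r_s)^gamma * (1 + r/r_s)^(3 - gamma)]."""
    x = r / r_s
    return A / (x ** gamma * (1.0 + x) ** (3.0 - gamma))


def log_slope(r, r_s=1.0, gamma=1.2):
    """Analytic logarithmic slope d ln(rho) / d ln(r) of the assumed profile."""
    x = r / r_s
    return -gamma - (3.0 - gamma) * x / (1.0 + x)


if __name__ == "__main__":
    # gamma = 1.2 is the central simulation value quoted in the text;
    # gamma = 0.0 stands in for a flat observed core (illustrative only).
    for gamma in (1.2, 0.0):
        for r in (0.001, 0.01, 0.1, 1.0, 10.0, 100.0):
            slope = log_slope(r, gamma=gamma)
            print(f"gamma={gamma:.1f}  r/r_s={r:8.3f}  slope={slope:6.3f}")
```

For γ = 1.2 the slope tends to −1.2 as r approaches zero (a cusp, with the density diverging at the centre), whereas γ = 0 gives a slope approaching zero (a core). In both cases the profile steepens to a slope of −3 at large radii.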

Overall, the semi-analytic models struggle to explain the observed luminosity function of galaxies87: gas cooling (and consequent star formation) seems to be so efficient that the models predict too many very luminous galaxies. Some form of feedback, from active galactic nuclei, supernovae or stellar winds, may regulate the supply of cold gas by heating or ejecting the material falling into a galaxy, but the details of how this happens remain obscure. More dramatically, the role of a central supermassive black hole in the evolution of galaxies is yet to be fully elucidated. Perhaps accretion onto such objects causes violent outflows that expel at least some of the cold gas. The problem of galaxy formation is so complex that it is unlikely to be solved from first principles. What is more likely is that observations will pin down the range of possibilities to enable better models to be constructed.

A key question on the route to a fuller picture is how the whole process of galaxy formation got under way. After the production of the CMB radiation, baryonic matter is thought to have recombined into a neutral gas which cooled as the Universe expanded. At some point, something happened to inject sufficient energy into this gas to heat it up again to the point of ionization. Circumstantial evidence for when this happened is furnished by the WMAP polarization measurements59, as well as by more direct measurements of the intergalactic medium through lines-of-sight to distant quasars88,89. We do not know what kind of object — primordial stars, active galaxies or quasars — produces the first flash of ionizing radiation90,91, and we do not know the extent to which the reionization occurs smoothly or in lumps. And how does the epoch of reionization of the Universe relate to the onset of star formation in galaxies92,93? Answers to these questions will require observing facilities across a whole range of wavelengths, because light produced in the early Universe is seen at much longer wavelengths by present-day observers. Moreover, indications are that as much as half the stellar light94 produced as galaxies form and evolve has been obscured by dust, to be re-radiated in the infrared and submillimetre regions of the spectrum95.
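
The stretching of wavelengths mentioned above follows from the standard redshift relation λ_obs = (1 + z) λ_rest. The sketch below applies it to two illustrative cases that are assumptions, not figures from this article: hydrogen Lyman-α light (rest wavelength about 121.6 nm) from a source at z = 6.28, the quasar redshift quoted in the title of ref. 88, and dust emission at a rest wavelength of 100 μm from a hypothetical galaxy at z = 3.

```python
LYMAN_ALPHA_REST_NM = 121.567  # rest wavelength of hydrogen Lyman-alpha, in nm


def observed_wavelength(rest_wavelength, z):
    """Return the observed wavelength (same units as the input) at redshift z."""
    if z <= -1.0:
        raise ValueError("redshift must be greater than -1")
    return rest_wavelength * (1.0 + z)


if __name__ == "__main__":
    # Ultraviolet line emitted at z = 6.28 (redshift taken from the title of ref. 88).
    lya_obs = observed_wavelength(LYMAN_ALPHA_REST_NM, 6.28)
    print(f"Lyman-alpha at z = 6.28 is observed near {lya_obs:.0f} nm")

    # Hypothetical dusty galaxy: 100 micron rest-frame emission at z = 3.
    dust_obs = observed_wavelength(100.0, 3.0)
    print(f"100 micron dust emission at z = 3 is observed near {dust_obs:.0f} microns")
```

In the first case ultraviolet light arrives in the near-infrared; in the second, far-infrared dust emission arrives in the submillimetre band. This is why facilities spanning many wavelengths are needed.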

The undiscovered cosmos

So this is the current state of the Universe, or at least of our understanding of parts of it. We seem to have established a ‘standard’ cosmological model dominated by dark energy and dark matter, with a tiny flavouring of the baryonic matter from which stars, planets and ourselves are made. This standard model accounts for many precise observations and has been hailed as a spectacular triumph. And so it is. But this progress should not distract us from the fact that modern cosmology also has a number of serious shortcomings that may take a long time to remedy. These difficulties can be summarized in a series of interrelated questions, most of them very basic, to which we still do not have answers, but where a number of upcoming observational programmes96 may yield the crucial clues.

Is general relativity right? In a sense we already know that the answer to this question is ‘no’. At sufficiently high energies (near the Planck scale), we expect classical relativity to be replaced by a quantum theory of gravity. But it is not just in the early Universe that departures from general relativity are possible. The different estimates of Ωk≈0 (for example, from WMAP), ΩΛ≈0.7 (from supernovae) and Ωm≈0.3 (from galaxy clustering) do seem to satisfy equation (1). This, together with the agreement between stellar ages and the dynamical age of the Big Bang, shows that we at least have a consistent picture based on general relativity. Whether this consistency will survive the test of time remains to be seen.
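
The consistency argument can be checked with simple arithmetic. The sketch below assumes that equation (1) is the usual sum rule Ωm + ΩΛ + Ωk = 1 (the equation itself is not reproduced in this section), and it uses the standard closed-form age of a spatially flat universe containing only matter and a cosmological constant. The Hubble constant of 72 km/s/Mpc is an assumed input of the kind reported by the Hubble Space Telescope key project (ref. 30), and the function names are illustrative.

```python
import math

KM_PER_MPC = 3.0857e19        # kilometres in one megaparsec
SECONDS_PER_GYR = 3.15576e16  # seconds in 10^9 Julian years


def closure_sum(omega_m, omega_lambda, omega_k):
    """Left-hand side of the assumed sum rule; equals 1 if the estimates are consistent."""
    return omega_m + omega_lambda + omega_k


def flat_lcdm_age_gyr(h0_km_s_mpc, omega_m, omega_lambda):
    """Age in Gyr of a flat matter + Lambda universe (radiation neglected)."""
    if abs(omega_m + omega_lambda - 1.0) > 1e-6:
        raise ValueError("this closed form assumes spatial flatness")
    hubble_time_gyr = KM_PER_MPC / h0_km_s_mpc / SECONDS_PER_GYR
    factor = (2.0 / (3.0 * math.sqrt(omega_lambda))
              * math.asinh(math.sqrt(omega_lambda / omega_m)))
    return factor * hubble_time_gyr


if __name__ == "__main__":
    print("sum of density parameters:", closure_sum(0.3, 0.7, 0.0))
    print(f"dynamical age: {flat_lcdm_age_gyr(72.0, 0.3, 0.7):.1f} Gyr")
```

With these inputs the density parameters sum to unity and the age comes out at roughly 13 Gyr. This is the dynamical age to be compared with independent stellar ages, such as those of globular clusters (ref. 31).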

Is the Universe finite or infinite? If we accept the Big Bang theory then our observable Universe began a finite time in the past. The finite speed of light means that the part of it we can expect to see must be finite. But what happens beyond our horizon is not known, even if our observable patch is well described by the concordance model. With a cosmological constant, our accelerating Universe might continue speeding up for the indefinite future, but if the dark energy were dynamical the far future depends on its eventual ground state, which is unknown. Our Universe may not be infinite in duration, even if it is currently accelerating.

What is the dark energy? The question here is twofold. One part is whether the dark energy takes the form of an evolving scalar field, such as quintessence or its variants, or whether it really is constant, as in Einstein's original version. This may well be answered by future observational studies, such as the SNAP satellite (currently awaiting budget approval in the USA), which will observe not dozens but thousands of supernovae, and may be able to detect changes in the parameter w with redshift. The second part is whether dark energy can be understood in terms of fundamental theory. This is a more open question. At the least, it would require an understanding of the field(s) involved from a particle physics viewpoint. But it would also require an understanding of why the expected divergence of one-loop fluctuations is suppressed. I think it is safe to say that we are still far from such an understanding. Perhaps string theory holds the key.
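
What a measurement of w would mean for the expansion can be made explicit. Assuming the usual definition of w as the ratio of pressure to energy density, w = p/(ρc²), and treating w as constant (a simplifying assumption), energy conservation in an expanding universe with scale factor a gives:

$$
\dot{\rho} = -3\,\frac{\dot{a}}{a}\left(\rho + \frac{p}{c^{2}}\right)
\quad\Longrightarrow\quad
\rho \propto a^{-3(1+w)} = (1+z)^{3(1+w)}
$$

A cosmological constant corresponds to w = −1 and a density that does not change with redshift. Any other value of w, or any variation of w with redshift, would point to dynamical dark energy such as quintessence.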

What is the dark matter? Around 30% of the mass in the Universe is thought to be in the form of dark matter, but we do not know what it is, other than that it is meant to be cold. As far as gravity is concerned, one cold particle is much the same as another. There is no prospect of learning about the nature of the CDM particles through astronomical means, unless they decay into radiation or some other identifiable particles. Experimental attempts to detect the dark matter directly are pushing back the limits of technology, but success must be regarded as a long shot given such a large parameter space.

Did inflation really happen? The success of concordance cosmology is largely founded on the appearance of ‘Doppler peaks’ in the CMB fluctuation spectrum. These arise from acoustic oscillations in the primordial plasma that have particular statistical properties consistent with them having been generated by quantum fluctuations in the scalar field driving inflation. This is circumstantial evidence in favour of inflation happening, but it is perhaps not strong enough to give a definitive answer. The definitive proof of inflation is probably the existence of a stochastic gravitational wave background; in the ekpyrotic universe, for example, the predicted background is very small. The identification and extraction of this may be possible using future polarization-sensitive CMB studies, such as the Planck Surveyor, even before direct experimental gravitational wave detectors of sufficient sensitivity, such as LISA, become available.

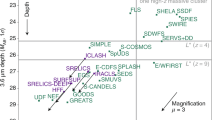

How and when did galaxy formation happen? The field of galaxy formation has changed immeasurably over the past decade. Interplay between theory, modelling and observation is now so intense that the boundaries have become blurred. The imminent arrival of the Atacama Large Millimetre Array (ALMA) for submillimetre observations of dust emission at high redshift and, in the longer term, 100-m-class optical telescopes to image very distant star-forming regions, and the Square Kilometre Array (SKA) for sensitive radio measurements, are just some of the developments on the horizon. SKA in particular will enable observations to reach back past the epoch of galaxy formation and into the cosmic dark ages before there were any stars, and also probe directly the supply of cold gas through its 21-cm emission. Provided that the necessary investment in instrumentation is made, this is one question where a firm, empirically based answer is likely.

References

Einstein, A. The gravitational field equations [in German]. Sitz. Ber. Preuss. Akad. Wiss. 844–847 (1915).

Friedmann, A. On the curvature of space [in German]. Z. Phys. 10, 377–386 (1922).

Lemaître, G. A homogeneous universe of constant mass and increasing radius accounting for the radial velocity of the extragalactic nebulae. Ann. Soc. Sci. Brux. A 47, 49–59 (1927); [in English] Mon. Not. R. Astron. Soc. 91, 483–490 (1931).

Hubble, E. A relation between distance and radial velocity among extragalactic nebulae. Proc. Natl Acad. Sci. 15, 168–173 (1929).

Penzias, A. A. & Wilson, R. W. Measurement of excess antenna temperature at 4080 MHz. Astrophys. J. 142, 419–421 (1965).

Dicke, R. H., Peebles, P. J. E., Roll, P. G. & Wilkinson, D. T. Cosmic blackbody radiation. Astrophys. J. 142, 414–419 (1965).

Mather, J. C. et al. Measurement of the cosmic background spectrum by the COBE FIRAS instrument. Astrophys. J. 420, 439–444 (1994).

Gamow, G. Expanding universe and the origin of elements. Phys. Rev. 70, 572–573 (1946).

Alpher, R. A. & Herman, R. C. Evolution of the universe. Phys. Rev. 73, 803–804 (1948).

Guth, A. H. Inflationary Universe: A possible solution to the horizon and flatness problems. Phys. Rev. D 23, 347–356 (1981).

Albrecht, A. & Steinhardt, P. J. Cosmology for grand unified theories with radiatively induced symmetry breaking. Phys. Rev. Lett. 48, 1220–1223 (1982).

Linde, A. D. A new inflationary universe scenario: A possible solution of the horizon, flatness, homogeneity, isotropy and primordial monopole problems. Phys. Lett. B 108, 389–393 (1982).

Linde, A. D. Chaotic inflation. Phys. Lett. B 129, 177–181 (1983).

Kaluza, T. On the unification problem of physics [in German]. Sitz. Ber. Preuss. Akad. Wiss. 966–972 (1921).

Klein, O. Quantum theory and five-dimensional relativity theory [in German]. Z. Phys. 37, 895–906 (1926).

Khoury, J., Ovrut, B. A., Steinhardt, P. J. & Turok, N. Ekpyrotic universe: Colliding branes and the origin of the hot big bang. Phys. Rev. D 64, 123522 (2001).

Khoury, J., Ovrut, B. A., Steinhardt, P. J. & Turok, N. From big crunch to big bang. Phys. Rev. D 65, 086007 (2002).

Randall, L. & Sundrum, R. An alternative to compactification. Phys. Rev. Lett. 83, 4690–4693 (1999).

Jeans, J. H. The stability of a spherical nebula. Phil. Trans. R. Soc. Lond. A 199, 1–53 (1902).

Lifshitz, E. M. On the gravitational instability of the expanding universe. Sov. Phys. JETP 10, 116–122 (1946).

Linde, A. D. Scalar field fluctuations in the expanding universe and the new inflationary universe scenario. Phys. Lett. B 116, 335–339 (1982).

Guth, A. H. & Pi, S.-Y. Fluctuations in the new inflationary universe. Phys. Rev. Lett. 49, 1110–1113 (1982).

Starobinsky, A. A. Spectrum of relict gravitational radiation and the early state of the universe. Sov. Phys. JETP Lett. 30, 682–685 (1979).

Coles, P. & Ellis, G. F. R. The case for an open universe. Nature 370, 609–615 (1994).

Efstathiou, G., Sutherland, W. J. & Maddox, S. J. The cosmological constant and cold dark matter. Nature 348, 705–706 (1990).

Perlmutter, S. et al. Measurements of omega and lambda from 42 high-redshift supernovae. Astrophys. J. 517, 565–586 (1999).

Riess, A. G. et al. Observational evidence from supernovae for an accelerating universe and a cosmological constant. Astron. J. 116, 1009–1038 (1998).

Riess, A. G. et al. The farthest known supernova: Support for an accelerating universe and a glimpse of the epoch of deceleration. Astrophys. J. 560, 49–71 (2001).

Tonry, J. L. et al. Cosmological results from high-z supernovae. Astrophys. J. 594, 1–24 (2003).

Freedman, W. L. et al. Final results from the Hubble Space Telescope key project to measure the Hubble constant. Astrophys. J. 553, 47–72 (2001).

Carretta, E., Gratton, R. G., Clementini, G. & Fusi Pecci, F. Distances, ages and epoch of formation of globular clusters. Astrophys. J. 533, 215–235 (2000).

Casimir, H. B. G. On the attraction between two perfectly conducting plates. Proc. K. Ned. Akad. Wet. 51, 793–795 (1948).

Weinberg, S. The cosmological constant problem. Rev. Mod. Phys. 61, 1–22 (1989).

Wang, L., Caldwell, R. R., Ostriker, J. P. & Steinhardt, P. J. Cosmic concordance and quintessence. Astrophys. J. 530, 17–35 (2000).

Sahni, V. The cosmological constant problem and quintessence. Class. Quant. Grav. 19, 3435–3448 (2002).

Peebles, P. J. E. & Ratra, B. The cosmological constant and dark energy. Rev. Mod. Phys. 75, 559–606 (2003).

Bennett, C. L. et al. First-year Wilkinson Microwave Anisotropy Probe (WMAP) observations. Astrophys. J. Suppl. 148, 1–27 (2003).

Hinshaw, G. et al. First-year Wilkinson Microwave Anisotropy Probe (WMAP) observations: The angular power spectrum. Astrophys. J. Suppl. 148, 135–159 (2003).

Smoot, G. F. et al. Structure in the COBE differential microwave radiometer first year maps. Astrophys. J. 396, L1–L4 (1992).

de Bernardis, P. et al. A flat universe from high-resolution maps of the cosmic microwave background radiation. Nature 404, 955–959 (2000).

Lange, A. E. et al. Cosmological parameters from the first results of BOOMERANG. Phys. Rev. D 63, 042001 (2001).

Hanany, S. et al. MAXIMA-1: A measurement of the cosmic microwave background anisotropy on angular scales 10 arc minutes to 5 degrees. Astrophys. J. 545, L5–L9 (2000).

Balbi, A. et al. Constraints on cosmological parameters from MAXIMA-1. Astrophys. J. 545, L1–L4 (2000).

Lee, A. et al. A high spatial resolution analysis of the MAXIMA-1 cosmic microwave background anisotropy data. Astrophys. J. 561, L1–L4 (2001).

Jaffe, A. H. et al. Cosmology from MAXIMA-1, BOOMERANG, and COBE-DMR cosmic microwave background observations. Phys. Rev. Lett. 86, 3475–3479 (2001).

Padin, S. et al. First intrinsic anisotropy measurements with the cosmic background imager. Astrophys. J. 549, L1–L5 (2001).

Halverson, N. W. et al. Degree Angular Scale Interferometer first results: A measurement of the cosmic microwave background angular power spectrum. Astrophys. J. 568, 38–45 (2002).

Kovac, J. M. et al. Detection of polarization in the cosmic microwave background using DASI. Nature 420, 772–787 (2002).

Efstathiou, G. Evidence for a non-zero Λ and a low matter density from a combined analysis of the 2dF Galaxy Redshift Survey and cosmic microwave background anisotropies. Mon. Not. R. Astron. Soc. 330, L29–L35 (2002).

Lahav, O. et al. The 2dF Galaxy Redshift Survey: The amplitudes of fluctuations in the 2dFGRS and the CMB, and the implications for galaxy biasing. Mon. Not. R. Astron. Soc. 333, 961–968 (2002).

Spergel, D. N. et al. First-year Wilkinson Microwave Anisotropy Probe (WMAP) observations: Determination of cosmological parameters. Astrophys. J. Suppl. 148, 175–194 (2003).

Bridle, S. L., Lahav, O., Ostriker, J. P. & Steinhardt, P. J. Precision cosmology? Not just yet. Science 299, 1532–1536 (2003).

Eriksen, H. K., Hansen, F. K., Banday, A. J., Gorski, K. M. & Lilje, P. B. Asymmetries in the cosmic microwave background anisotropy field. Astrophys. J. 605, 14–20 (2004).

Vielva, P., Martinez-Gonzalez, E., Barreiro, R. B., Sanz, J. L. & Cayon, L. Detection of non-gaussianity in the Wilkinson Microwave Anisotropy Probe first-year data using spherical wavelets. Astrophys. J. 609, 22–34 (2004).

Coles, P., Dineen, P. J., Earl, J. & Wright, D. Phase correlations in cosmic microwave background temperature maps. Mon. Not. R. Astron. Soc. 350, 989–1004 (2004).

De Oliveira-Costa, A. et al. The quest for microwave foreground X. Astrophys. J. 606, L89–L92 (2004).

Luminet, J. P., Weeks, J. R., Riazuelo, A., Lehoucq, R. & Uzan, J.-P. Dodecahedral space topology as an explanation for the weak wide-angle temperature correlations in the cosmic microwave background. Nature 425, 593–595 (2003).

Cornish, N. J., Spergel, D. N., Starkman, G. D. & Komatsu, E. Constraining the topology of the universe. Phys. Rev. Lett. 92, 201302 (2004).

Kogut, A. et al. First-year Wilkinson Microwave Anisotropy Probe (WMAP) observations: Temperature-polarization correlation. Astrophys. J. Suppl. 148, 161–173 (2003).

Kosowsky, A. Introduction to microwave background polarization. New Astron. Rev. 43, 157–168 (1999).

Blumenthal, G. R., Faber, S. M., Primack, J. R. & Rees, M. J. Formation of galaxies and large-scale structure with cold dark matter. Nature 311, 517–525 (1984).

Davis, M., Efstathiou, G., Frenk, C. S. & White, S. D. M. The evolution of large-scale structure in a universe dominated by cold dark matter. Astrophys. J. 292, 371–394 (1985).

Jenkins, A. et al. Evolution of structure in CDM universes. Astrophys. J. 499, 20–40 (1998).

Coles, P. Galaxy formation with a local bias. Mon. Not. R. Astron. Soc. 262, 1065–1075 (1993).

Colless, M. et al. The 2dF Galaxy Redshift Survey: Spectra and redshifts. Mon. Not. R. Astron. Soc. 328, 1039–1063 (2001).

Percival, W. J. et al. The 2dF Galaxy Redshift Survey: The power spectrum and the matter content of the universe. Mon. Not. R. Astron. Soc. 327, 1297–1306 (2001).

Peacock, J. A. et al. A measurement of the cosmological mass density from clustering in the 2dF Galaxy Redshift Survey. Nature 410, 169–173 (2001).

Norberg, P. D. et al. The 2dF Galaxy Redshift Survey: Luminosity dependence of galaxy clustering. Mon. Not. R. Astron. Soc. 328, 64–70 (2001).

Abazajian, K. et al. The first data release of the Sloan Digital Sky Survey. Astron. J. 126, 2081–2086 (2003).

Zehavi, I. et al. Galaxy clustering in early Sloan Digital Sky Survey redshift data. Astrophys. J. 571, 172–190 (2002).

Mellier, Y. Probing the Universe with weak lensing. Annu. Rev. Astron. Astrophys. 37, 127–189 (1999).

Kauffmann, G., White, S. D. M. & Guiderdoni, B. The formation and evolution of galaxies within merging dark matter haloes. Mon. Not. R. Astron. Soc. 264, 201–218 (1993).

Somerville, R. S. & Primack, J. R. Semi-analytic modelling of galaxy formation: the local universe. Mon. Not. R. Astron. Soc. 310, 1087–1110 (1999).

Baugh, C. M., Cole, S., Frenk, C. S. & Lacey, C. G. The epoch of galaxy formation. Astrophys. J. 498, 504–521 (1998).

Cole, S., Lacey, C. G., Baugh, C. M. & Frenk, C. S. Hierarchical galaxy formation. Mon. Not. R. Astron. Soc. 319, 168–204 (2000).

Navarro, J. F., Frenk, C. S. & White, S. D. M. A universal density profile from hierarchical clustering. Astrophys. J. 490, 493–508 (1997).

Moore, B., Quinn, T., Governato, F., Stadel, J. & Lake, G. Cold collapse and the core catastrophe. Mon. Not. R. Astron. Soc. 310, 1147–1152 (1999).

Ghigna, S. et al. Density profiles and substructure of dark matter halos: Converging results and ultra-high numerical resolution. Astrophys. J. 544, 616–628 (2000).

Jing, Y.-P. & Suto, Y. The density profiles of the dark matter halo are not universal. Astrophys. J. 529, L69–L72 (2000).

Klypin, A., Kravtsov, A. V., Bullock, J. S. & Primack, J. R. Resolving the structure of cold dark matter halos. Astrophys. J. 554, 903–915 (2001).

Fukushige, T., Kawai, A. & Makino, J. Structure of dark matter halos from hierarchical clustering III. Shallowing of the inner cusp. Astrophys. J. 606, 625–634 (2004).

Navarro, J. F. et al. The inner structure of CDM haloes — III. Universality and asymptotic slopes. Mon. Not. R. Astron. Soc. 349, 1039–1051 (2004).

van den Bosch, F. C., Robertson, B. E., Dalcanton, J. J. & de Blok, W. J. G. Constraints on the structure of dark matter halos from the rotation curves of low surface brightness galaxies. Astron. J. 119, 1579–1591 (2000).

de Blok, W. J. G., McGaugh, S. S., Bosma, A. & Rubin, V. C. Mass density profiles of low surface brightness galaxies. Astron. J. 122, 2396–2427 (2001).

van den Bosch, F. C. & Swaters, R. A. Dwarf galaxy rotation curves and the core problem of dark matter haloes. Mon. Not. R. Astron. Soc. 325, 1017–1038 (2001).

Romanowsky, A. et al. A dearth of dark matter in ordinary elliptical galaxies. Science 301, 1696–1698 (2003).

Benson, A. J. et al. What shapes the luminosity function of galaxies? Astrophys. J. 599, 38–49 (2003).

Becker, R. H. et al. Evidence for reionization at z∼6: Detection of a Gunn-Peterson trough in a z=6.28 quasar. Astron. J. 122, 2850–2857 (2001).

Fan, X. et al. Evolution of the ionizing background and the epoch of reionization from the spectra of z≈6 quasars. Astron. J. 123, 1247–1257 (2002).

Gnedin, N. Y. Cosmological reionization by stellar sources. Astrophys. J. 535, 530–554 (2000).

Barkana, R. & Loeb, A. In the beginning: The first sources of light and the reionization of the universe. Phys. Rep. 349, 125–238 (2001).

Abel, T., Bryan, G. & Norman, M. L. The formation of the first star in the universe. Science 295, 93–98 (2002).

Bromm, V. & Larson, R. B. The first stars. Annu. Rev. Astron. Astrophys. 42, 79–118 (2004).

Madau, P., Pozzetti, L. & Dickinson, M. The star formation histories of field galaxies. Astrophys. J. 498, 106–116 (1998).

Blain, A. W., Smail, I., Ivison, R. J. & Kneib, J.-P. The history of star formation in dusty galaxies. Mon. Not. R. Astron. Soc. 302, 632–648 (1999).

Coles, P. The future of extragalactic observations. Class. Quant. Grav. 19, 3539–3549 (2002).

Einstein, A. Cosmological considerations of the general theory of relativity [in German]. Sitz. Ber. Preuss. Akad. Wiss. 142–152 (1917); English translation in The Principle of Relativity (eds Lorentz, H. A., Einstein, A., Minkowski, H. & Weyl, H.) 177–188 (Methuen, London, 1950).

Ethics declarations

Competing interests

The author declares no competing financial interests.

Cite this article

Coles, P. The state of the Universe. Nature 433, 248–256 (2005). https://doi.org/10.1038/nature03282

DOI: https://doi.org/10.1038/nature03282
