Key Points

- Several standards are contributing to the advancement of knowledge in biology; however, most standardization initiatives are still in the investment stage for biologists.

- Developing a complete and self-contained standard in biology involves four steps: conceptual model design, model formalization, development of a data exchange format and implementation of the supporting tools.

- In the life sciences, standards are typically developed by grass-roots movements, and it is difficult to persuade funding agencies to fund such activities.

- Although it might be faster for a single organization to develop its own standards, a bottom-up community consensus approach is key to the long-term acceptance and usefulness of standards.

- Developing and deploying a standard creates an overhead, which can be expensive. Standards related to a particular technology have a lifespan that is no longer than that of the technology itself, so there is only a limited period of time in which the overheads can be paid off.

- The body of biological knowledge is incomplete and expanding rapidly; therefore, standards that describe biological knowledge have to be flexible, and a mechanism of change must be a part of the standard.

- To avoid proliferation of standards, common features of existing standards should be re-used wherever possible. Simplicity, but not oversimplification, is the key to success.

Abstract

High-throughput technologies are generating large amounts of complex data that have to be stored in databases, communicated to various data analysis tools and interpreted by scientists. Data representation and communication standards are needed to implement these steps efficiently. Here we classify the standards related to systems biology and discuss general aspects of standardization in the life sciences. Why are some standards more successful than others, what are the prerequisites for a standard to succeed and what are the possible pitfalls?


Main

Barbarism is the absence of standards to which appeal can be made.
José Ortega y Gasset

Historically, standards emerged from the need for recommended practices in the manufacture of products. The main purpose of standards is to help fit many pieces together into a useful whole. In information technology, standards are needed when large volumes of information have to be exchanged through many smaller transactions. Examples of established standards include the Hypertext Transfer Protocol (HTTP) and the Extensible Markup Language (XML) for structuring data.

In the life sciences, the advances in high-throughput experimental technologies have allowed the acquisition of data on a large scale, and at least some level of standardization is needed to manage and use these data (Box 1). Examples of data standards relevant to systems biology include Gene Ontology (GO) for describing gene function1, Minimum Information About a Microarray Experiment (MIAME) for describing microarray experiments2, Systems Biology Markup Language (SBML)3 and Cell Markup Language (CellML) for describing biomolecular simulations4.

Standardization has not only become a popular topic in bioinformatics, but it has almost developed into a field of its own. New standards-related acronyms, such as MAGE, MO, MIAPE, MISFISHIE, MIRIAM and MIACA, are appearing almost monthly (Tables 1a,b,2) and just keeping track of them requires some effort. Most of these initiatives for developing standards are community-based and involve close collaboration of biologists, bioinformaticians and information technologists. Nevertheless, the sheer number of different standards and the pace at which the field is changing make it difficult, even for professionals, to keep track of all the new developments5,6.

What is the aim of these standardization initiatives? How many of these standards are used and how many have contributed to advances in biology? The goal of this review is to help systems biologists to navigate the rapidly changing maze of data standards in life sciences, and to encourage biologists to provide feedback on these developments. Such feedback is necessary to ensure that the standards being developed address the real needs of biology as closely as possible.

It is important to distinguish between standards that specify how to actually do experiments and standards that specify how to describe experiments. Recommendations such as what standard reporters (probes) should be printed on microarrays or what quality control steps should be used in an experiment belong to the first category. Here we focus on the standards that specify how to describe and communicate data and information.

Four steps in developing standards

By information or data communication standard we mean a convention on how to encode data or information about a particular domain (such as gene function) that enables unambiguous transfer and interpretation of this information or data (in this article, we treat the terms 'data' and 'information' as synonyms).

There are several international and national bodies, such as OMG, W3C, IEEE, ANSI and IETF (see Table 1b for definitions), that formally approve standards or provide a framework for standards development. Although some of the systems biology standards have been certified (for example, SBML is officially adopted by IETF), in general in life sciences this procedure is not particularly important — many of the most successful standards such as GO have not undergone any official approval procedure, but instead have become de facto standards. In fact, many of the most successful standards in other domains are de facto standards.

There are four steps involved in developing a complete and self-contained standard: conceptual model design, model formalization, development of a data exchange format (see Fig. 1 for an example) and implementation of the supporting tools. Box 2 shows how a standard can be developed for a specific simplified example.

Figure 1 | The first result of the microarray data standardization effort was a community agreement about the level of detail necessary to make data exchange meaningful (MIAME). The work then proceeded along two parallel lines: first, formalizing the domain model and using this formalization as a basis for a data exchange language; and second, creating an ontology that contained the necessary controlled vocabularies, as well as structures for linking to external ontologies. These two components enabled data exchange. MGED, Microarray Gene Expression Data.

The order in which these four steps occur is not fixed. The first two steps often happen in parallel, because formalizing the domain helps us to understand it better. Sometimes a specific format can itself serve as the formalization: for example, XML-based formats are often developed without any explicit object model or ontology (that is, without explicitly implementing step 2). The reverse is also possible: the formalism used to model the domain can define the format automatically. Some successful standards are defined by successful software that becomes a market leader and sets the standard de facto (for example, the binary format of Affymetrix .cel files, or the netCDF format used in Bioconductor for microarrays7). Nevertheless, for a standard to be complete it is vital that it contains all the components described above.

Informal description and delimitation of the domain. In the microarray domain the first step of conceptualization has been implemented explicitly. MIAME provides an informal means of modelling a microarray experiment2. Two competing goals should be balanced when developing the conceptual model of a domain: domain specificity and the need to find the common ground for all related applications. Arguably, the most useful standards are those that consist of the minimal number of most informative parameters. Keeping the list short makes it simple and practicable, while selecting the most informative features ensures accuracy and efficiency. This need for minimalism is reflected in the titles for many such standards — Minimum Information About XYZ.
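The 'minimum information' idea can be made concrete as a checklist of required items against which a submitted description is validated. The sketch below assumes a hypothetical checklist: the field names are illustrative only and are not taken from MIAME or any other published guideline. It simply reports which required items a record lacks.

```python
from typing import Dict, List

# Hypothetical "Minimum Information About XYZ" checklist. The field names are
# illustrative only and do not come from MIAME or any published guideline.
MINIMUM_FIELDS: List[str] = [
    "experiment_design",
    "sample_source",
    "protocol",
    "raw_data_file",
]

def missing_fields(record: Dict[str, str], required: List[str] = MINIMUM_FIELDS) -> List[str]:
    """Return the required fields that are absent or empty in a record."""
    return [name for name in required if not record.get(name, "").strip()]

def is_compliant(record: Dict[str, str]) -> bool:
    """A record complies when no required field is missing."""
    return not missing_fields(record)

submission = {
    "experiment_design": "time course, 3 replicates",
    "sample_source": "yeast culture",
    "protocol": "",  # present but empty, so it is reported as missing
}
print(missing_fields(submission))  # ['protocol', 'raw_data_file']
print(is_compliant(submission))    # False
```

A short list keeps such a check cheap to satisfy, which is the practical side of the minimalism argument above.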

Inclusiveness and establishment of a dialogue between all, or at least most, of the domain leaders in academia and industry is one of the key issues at this stage. In the microarray field the consensus was achieved by establishing the Microarray Gene Expression Data (MGED) Society8,9, which started as a grass-roots movement comprising all the main microarray players at the time, and has continued to be inclusive since. Regardless of whether the conceptualization step is done explicitly or in parallel with the next step (formalization), it is important to understand the domain well before developing any specific format.

Building the formal model. A model can be formalized either using an ontology or an object model, or some other well-defined formalism (Box 3). Often a combination of formalisms is used. For instance, the Microarray Gene Expression Object Model (MAGE-OM) is used to formalize basic objects and their relationships10, whereas MGED Ontology (MO) is used to define the structured nomenclature describing the experiments11. Additionally, guidelines on how to encode information in the model (for example, how to encode MIAME in MAGE-OM for microarrays; see the MIAME and MAGE-OM Terms Explained web site) might be needed, although such guidelines could be a part of the formalism itself. In fact, in life sciences models often consist of two parts — an object model and an ontology. This is because the first is mostly used by software developers, and the second by biologists. Both are required for a complete description of a study.
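The division of labour between an object model and an ontology can be sketched as follows: the object model fixes the structure (which objects exist and how they relate), whereas attribute values are drawn from a controlled vocabulary rather than free text. The class names, the toy vocabulary and the term identifiers below are hypothetical and are not taken from MAGE-OM or the MGED Ontology.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical controlled vocabulary standing in for an ontology.
# The term IDs are invented for illustration; they are not real MGED Ontology terms.
VOCABULARY: Dict[str, str] = {
    "X:0001": "organism part",
    "X:0002": "cell line",
    "X:0003": "liver",
}

@dataclass(frozen=True)
class OntologyTerm:
    term_id: str

    def __post_init__(self) -> None:
        # Reject values that are not in the controlled vocabulary.
        if self.term_id not in VOCABULARY:
            raise ValueError(f"unknown term: {self.term_id}")

    @property
    def label(self) -> str:
        return VOCABULARY[self.term_id]

@dataclass
class Sample:
    """Object-model class: the structure is fixed, the values come from the vocabulary."""
    name: str
    characteristics: Dict[OntologyTerm, OntologyTerm] = field(default_factory=dict)

@dataclass
class Experiment:
    identifier: str
    samples: List[Sample] = field(default_factory=list)

liver = Sample("s1", {OntologyTerm("X:0001"): OntologyTerm("X:0003")})
exp = Experiment("E-1", [liver])
for category, value in exp.samples[0].characteristics.items():
    print(category.label, "=", value.label)  # organism part = liver
```

Software developers work mainly with the classes, whereas the vocabulary can be curated and extended by biologists without changing the classes.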

It is important to ensure the uniqueness of the object identifiers of all the instances and objects used at the domain formalization level. For example, the Human Genome Organisation (HUGO) is working on standards for naming human genes12. A more generic approach to identifying objects is taken by the Life Sciences Identifier (LSID) convention (see below). Object identification is an important and highly complex issue in the life sciences, as the domain is dynamic. For example, how can predicted genes be identified in the human genome in a durable way when not only predictions but also genome assemblies continue to be updated? Assigning persistent identifiers to objects such as exons is even more daunting because the genome 'coordinate systems' are rapidly changing. This is one of the most important general problems in building standards for biology — our understanding of living systems is constantly developing.

A formal model is the core of any standard. The challenge is to build a model that represents the whole domain without being excessively complex. The key question is how granular the model should be. At one extreme, the model contains a set of data files and a free text that describes them. At the other extreme, the model can formally specify each elementary step done in the experiment and each numerical measurement obtained from each step. The tendency to include every detail and to develop a perfect model for the domain, rather than a practical data communication standard, can easily ruin the usability of the model. A good design should aim to deal with the majority of typical cases first, and if necessary, at the expense of the minority of less important cases. On the other hand, if a model is too simplistic, it might not fulfil its function as a way to describe the domain unambiguously.

Platform-specific implementations define particular formats. The so-called platform-specific implementation of a standard can be generated automatically or derived manually from a formal model. The efficiency of a standard, as of any product, depends not only on the concept behind it, but also on its actual technical solution. For example, XML has been widely used for data exchange in life sciences and has proved to be one of the most popular formats. In systems biology, XML-based formats provide links not only between various data storage systems, but also between modelling tools.
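How a platform-specific format can be derived mechanically from a formal model can be illustrated with a small sketch in which each class of a hypothetical object model becomes an XML element, each scalar attribute becomes an XML attribute and each list of model objects becomes child elements. This is not how MAGE-ML is actually generated from MAGE-OM; it only shows the general idea of a model-to-format mapping, using Python's standard xml.etree.ElementTree module.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, fields
from typing import Any, List

@dataclass
class Hybridization:  # hypothetical model class
    name: str
    array_design: str

@dataclass
class Experiment:  # hypothetical model class
    identifier: str
    hybridizations: List[Hybridization]

def to_xml(obj: Any) -> ET.Element:
    """Generic mapping: class name -> element, scalar field -> attribute,
    list of model objects -> child elements."""
    element = ET.Element(type(obj).__name__)
    for f in fields(obj):
        value = getattr(obj, f.name)
        if isinstance(value, list):
            for item in value:
                element.append(to_xml(item))
        else:
            element.set(f.name, str(value))
    return element

exp = Experiment("E-1", [Hybridization("h1", "A-1"), Hybridization("h2", "A-1")])
print(ET.tostring(to_xml(exp), encoding="unicode"))
```

Because the mapping is generic, adding a class to the model changes the format without any new serialization code, which is the sense in which a formalism can "define the format automatically".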

Another example is the LSID convention, which can be used for resolving queries automatically. An LSID has the format 'urn:lsid:authority:namespace:identifier:revision'; for example, in urn:lsid:ebi.ac.uk:MIAMExpress/Experiment:E-MEXP-1, ebi.ac.uk is the 'authority', MIAMExpress/Experiment is the 'namespace' (assigned to the software tool MIAMExpress) and E-MEXP-1 is the 'identifier'. There is no revision in this example. If used correctly, this convention ensures that there is only one object with a given identifier anywhere on the World Wide Web.
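The LSID syntax is simple enough to parse in a few lines. The following sketch splits an LSID into its components according to the 'urn:lsid:authority:namespace:identifier:revision' pattern given in the text, treating the revision as optional. It assumes, as in the example, that the components themselves contain no colons, and it does not attempt to resolve the identifier over the network.

```python
from typing import NamedTuple, Optional

class LSID(NamedTuple):
    authority: str
    namespace: str
    identifier: str
    revision: Optional[str] = None

    def __str__(self) -> str:
        # Rebuild the canonical string form; the revision is appended only if present.
        parts = ["urn", "lsid", self.authority, self.namespace, self.identifier]
        if self.revision is not None:
            parts.append(self.revision)
        return ":".join(parts)

def parse_lsid(text: str) -> LSID:
    """Split 'urn:lsid:authority:namespace:identifier[:revision]' into its parts."""
    parts = text.split(":")
    if len(parts) not in (5, 6) or [p.lower() for p in parts[:2]] != ["urn", "lsid"]:
        raise ValueError(f"not an LSID: {text!r}")
    if not all(parts[2:]):
        raise ValueError(f"empty LSID component in {text!r}")
    revision = parts[5] if len(parts) == 6 else None
    return LSID(parts[2], parts[3], parts[4], revision)

lsid = parse_lsid("urn:lsid:ebi.ac.uk:MIAMExpress/Experiment:E-MEXP-1")
print(lsid.authority, lsid.namespace, lsid.identifier, lsid.revision)
# ebi.ac.uk MIAMExpress/Experiment E-MEXP-1 None
```

Parsed literally, the namespace component of the example is MIAMExpress/Experiment, and the missing revision comes back as None.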

Implementing the supporting tools. For a standard to be successful, laboratory information management systems (LIMS), databases, and data analysis and modelling tools should comply with it. One way of fostering this is to develop easy-to-use 'middleware': software components that hide the technical complexities of the standard and make the standard format easy to manipulate. For instance, in the microarray domain, the role of such middleware is played by the microarray gene expression toolkit, MAGE-stk.
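The middleware idea can be illustrated with a small facade class that hides the details of an XML format behind domain-level methods. The element and attribute names below (Experiment, Hybridization, array_design) are hypothetical and do not reproduce MAGE-ML, and the class does not imitate the actual API of MAGE-stk.

```python
import xml.etree.ElementTree as ET
from typing import List

class ExperimentReader:
    """Hypothetical middleware facade: callers ask for domain-level information
    and never handle XML elements or attribute names directly."""

    def __init__(self, xml_text: str) -> None:
        self._root = ET.fromstring(xml_text)

    @property
    def identifier(self) -> str:
        return self._root.get("identifier", "")

    def hybridization_names(self) -> List[str]:
        return [h.get("name", "") for h in self._root.iter("Hybridization")]

    def array_designs(self) -> List[str]:
        """Distinct array designs used, in order of first appearance."""
        seen: List[str] = []
        for h in self._root.iter("Hybridization"):
            design = h.get("array_design", "")
            if design not in seen:
                seen.append(design)
        return seen

doc = """<Experiment identifier="E-1">
  <Hybridization name="h1" array_design="A-1"/>
  <Hybridization name="h2" array_design="A-2"/>
  <Hybridization name="h3" array_design="A-1"/>
</Experiment>"""

reader = ExperimentReader(doc)
print(reader.identifier)             # E-1
print(reader.hybridization_names())  # ['h1', 'h2', 'h3']
print(reader.array_designs())        # ['A-1', 'A-2']
```

If the underlying format changes, only the facade has to be updated; the analysis tools that call it stay the same.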

Although all four steps described above have to be implemented to develop a complete and self-contained systems biology standard, most of the existing standards described in the following section implement only some of them. For this reason, a combination of several 'partial' standards is needed to define a complete standard. For instance, in the microarray domain each of the four parts is covered by a separate partial standard: the informal conceptualization by the MIAME guidelines2, the formalization of the domain by MAGE-OM and MO, the platform-specific implementation by the XML-based Microarray Gene Expression Markup Language (MAGE-ML)10 (automatically derived from MAGE-OM), and the supporting tools by MAGE-stk (MAGE-OM, MAGE-ML and MAGE-stk are jointly known as MAGE). Note that, in a way, each of the four steps represents a level of formality.

What are we standardizing?

Standardization initiatives can be classified along two orthogonal dimensions: first, according to which of the four standardization steps (discussed above) a particular standard covers; and second, according to the biological or technological domain it addresses (Table 2). Along the second dimension there are two fundamental classes of data standards: those that aim to communicate the actual biological knowledge and those that relate to the supporting experimental evidence (usually to a particular technology). Typical examples of the first class are GO and SBML; examples of the second are MIAME and MAGE.

Standards for describing biology. Because our biological knowledge is incomplete and developing fast, standards that describe biological knowledge have to be flexible, and must contain some mechanism of change. There are at least three levels (layers) of increasing detail that such standards might intend to capture: first, properties of objects of the system (parts list); second, topology and logics of the system; and third, systems dynamics13,14.

The parts list is the collection, naming, description and systematization of elements (for example, genes, proteins, cell types and anatomical structures) in a particular biological system. A basic standard that addresses the parts list at an even more elementary level is the HUGO gene naming standard. A semantically richer example of a standard describing the properties of objects is GO1. GO provides controlled vocabularies for describing gene products and their properties in three orthogonal areas: molecular function, biological process and cellular component. Each of these is organized as a directed acyclic graph (DAG), a generalization of a hierarchical tree in which a term can have more than one parent (GO thus uses a formalism that is simpler than DAML+OIL or OWL, the Web Ontology Language; see Box 3). GO has been a true success story; it has been taken up by the entire scientific community as the main means of annotating gene products. Analogous unified nomenclatures for anatomy and phenotypes are being developed. Several cell-type ontologies are both publicly available and used internally in laboratories in industry and academia15,16. An attempt to collect all the existing ontologies and initiate a discussion on unification is currently being made by the Open Biological Ontology (OBO)17 initiative. OBO is an umbrella for well-structured controlled vocabularies for shared use across different biological and medical domains. The main criteria for an ontology's inclusion in OBO are that it should be open, should share a common syntax and should be orthogonal to other OBO ontologies.
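Because GO is a DAG rather than a tree, a term can have more than one parent, and annotating a gene product to a specific term implicitly annotates it to all of that term's ancestors. The sketch below uses a toy DAG with hypothetical term and gene names (not real GO terms) to show how ancestors can be collected and how direct annotations can be propagated upwards.

```python
from typing import Dict, List, Set

# Toy ontology DAG with hypothetical terms: each term maps to its parent terms.
# "C" has two parents, which a strict tree would not allow.
PARENTS: Dict[str, List[str]] = {
    "root": [],
    "A": ["root"],
    "B": ["root"],
    "C": ["A", "B"],
}

def ancestors(term: str, parents: Dict[str, List[str]] = PARENTS) -> Set[str]:
    """Collect every term reachable by following parent links (term itself excluded)."""
    found: Set[str] = set()
    stack = list(parents[term])
    while stack:
        current = stack.pop()
        if current not in found:  # a term reached via two paths is visited once
            found.add(current)
            stack.extend(parents[current])
    return found

def propagate(annotations: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Extend each gene's direct annotations with all ancestors of those terms."""
    return {
        gene: set(terms).union(*(ancestors(t) for t in terms))
        for gene, terms in annotations.items()
    }

direct = {"gene1": {"C"}, "gene2": {"A"}}  # hypothetical gene names
print(sorted(propagate(direct)["gene1"]))  # ['A', 'B', 'C', 'root']
```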

Systems topology and logics (or pathway diagrams) can be thought of as a connection (interaction) diagram between the parts. This can be viewed as a graph in which the nodes represent the objects (for example, genes, proteins or other interacting objects) and the edges or arcs (connections between nodes, which can be directed or undirected) represent interactions between these objects, as in metabolic, signalling and protein interaction networks. Examples of such topologies include protein interaction networks in a cell, or the network of regulatory interactions between genes. A standard known as the Proteomics Standards Initiative: Molecular Interactions (PSI-MI)18 has been developed to describe protein–protein interactions (Fig. 2).
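The graph view of pathways can be made concrete with adjacency sets. In the sketch below, protein–protein interactions are treated as undirected edges and regulatory interactions as directed edges. The node names are hypothetical, and real exchange formats such as PSI-MI carry far more information per interaction than a bare edge.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

def build_graph(edges: Iterable[Tuple[str, str]], directed: bool) -> Dict[str, Set[str]]:
    """Return adjacency sets; an undirected edge is stored in both directions."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for source, target in edges:
        graph[source].add(target)
        if directed:
            graph.setdefault(target, set())  # keep nodes with no outgoing edges
        else:
            graph[target].add(source)
    return dict(graph)

# Hypothetical protein-protein interactions (undirected).
ppi = build_graph([("P1", "P2"), ("P2", "P3")], directed=False)
# Hypothetical regulatory interactions (directed: regulator -> target gene).
reg = build_graph([("TF1", "G1"), ("TF1", "G2"), ("G1", "G2")], directed=True)

print({node: len(nbrs) for node, nbrs in sorted(ppi.items())})  # {'P1': 1, 'P2': 2, 'P3': 1}
print(sorted(reg["TF1"]))  # ['G1', 'G2']
```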

Figure 2 | a | In addition to the general MIAPE (Minimum Information About a Proteomics Experiment) guidelines, domain-specific recommendations for each of the stages of protein quantification techniques have been developed: MIAPE-MS, MIAPE-MSI, MIAPE-Gel and MIAPE-GelI. The need for a separate set of rules for publishing proteomics experiments has led to the Publication Guidelines for the Analysis and Documentation of Peptide and Protein Identifications. These guidelines are currently being developed by the journal Molecular and Cellular Proteomics47 and are taken into consideration by the MIAPE community.
b | Despite the success in achieving consensus on experiment reporting requirements and the availability of a centralized protein sequence resource, the UniProtKB knowledgebase, creating a complete formalism (that is, object models for each of the related domains) might be more difficult than anticipated. PEDRo (the Proteomics Experiment Data Repository), with its XML (Extensible Markup Language) implementation PEML, represents a model for the most common workflows in proteomics and was used by the Proteomics Standards Initiative (PSI) as a prototype for the standard in proteomics34. It covers such areas as sample information, protein separation, mass spectrometry protocols and data analysis. Other attempts to formalize or model protein separation processes, such as two-dimensional gels, affinity columns and chemical treatments, resulted in models that either lack some classes or are too specific to a particular technique48. PEDRo inspired the creation of mzData, an XML format for capturing peak list information. mzData complements the MIAPE guidelines for mass spectrometry data and for the analysis of mass spectrometry data (MIAPE-MS and MIAPE-MSI, respectively). Analogous and effectively equivalent formats created earlier are mzXML49, pepXML and protXML.
The PEDRo–mzXML–mzData evolution demonstrates how arduous it can be to create a model for a specific platform, even when general agreement has been achieved.

Another example of a standard for describing the overall structure of interactions, together with information about the nature of each interaction, is BioPAX19. BioPAX is a collaborative project to create a data exchange format for biological pathway data. It uses the ontology language OWL and consists of several so-called 'levels' (releases). BioPAX Level 2 covers metabolic pathways, molecular interactions and post-translational protein modifications, and is backwards compatible with Level 1. Future levels will expand support for signalling pathways, gene regulatory networks and genetic interactions. Data from pathway databases, such as Reactome, are now available in BioPAX format20. BioPAX does not contain quantitative parameters and cannot describe the dynamics of interactions; this is not its goal.

Systems dynamics models simulate the real-time behaviour of a system, such as the cell cycle, and predict its response to various environmental changes (for example, to stress). Differential equations and hybrid systems are among the formalisms (languages) used to describe network dynamics and simulations (for example, see Refs 21,22). Standards for describing network simulations, including SBML3 and CellML4, are relatively well established. For instance, SBML release 2 (termed Level 2) consists of lists of the various components that are needed to describe and simulate the dynamics of chemical reactions and their interaction networks. These include compartments (containers of substances), species (for example, chemical substances), reactions (descriptions of processes), parameters, unit definitions and rules (mathematical expressions). SBML has been described using a Unified Modeling Language (UML) model, and XML has been used as the framework for the format.
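The component lists described above are enough to simulate a simple network. The sketch below encodes a single irreversible reaction A → B with a mass-action rate law in a hypothetical Python structure loosely modelled on SBML's compartments, species, parameters and reactions. It is not SBML itself, nor the libSBML API, and it integrates the resulting equation d[A]/dt = -k1[A] with a forward-Euler scheme chosen for brevity rather than accuracy.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical in-memory structure mirroring the component lists named in the text.
# It is not the SBML schema or the libSBML API.

@dataclass
class Reaction:
    reactant: str
    product: str
    rate_constant: str  # name of a parameter

@dataclass
class Model:
    compartments: Dict[str, float]  # compartment -> volume (ignored by this toy rate law)
    species: Dict[str, float]       # species -> initial concentration
    parameters: Dict[str, float]
    reactions: List[Reaction]

def simulate(model: Model, t_end: float, dt: float) -> Dict[str, float]:
    """Forward-Euler integration of mass-action kinetics: rate = k * [reactant]."""
    conc = dict(model.species)
    for _ in range(round(t_end / dt)):
        deltas = {name: 0.0 for name in conc}
        for r in model.reactions:
            rate = model.parameters[r.rate_constant] * conc[r.reactant]
            deltas[r.reactant] -= rate * dt
            deltas[r.product] += rate * dt
        for name, d in deltas.items():
            conc[name] += d
    return conc

model = Model(
    compartments={"cell": 1.0},
    species={"A": 1.0, "B": 0.0},
    parameters={"k1": 0.5},
    reactions=[Reaction("A", "B", "k1")],
)
result = simulate(model, t_end=2.0, dt=0.001)
print(result)  # A is close to exp(-1) ~ 0.368 at t = 2; A + B stays 1 up to rounding
```

Separating the model description from the simulator is what allows the same model file to be run by different tools, which is the point of a standard such as SBML.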

The main difference between CellML and SBML is that CellML is modular, whereas SBML is hierarchical. Arguably, CellML is conceptually more satisfying, but SBML is more usable. CellML was originally developed by a company, and although it is now in the academic domain there are only a few groups developing tools for it. SBML is much more of a community effort. For some reviews of these standards the reader should consult Refs 4,23.

Minimum Information Requested In the Annotation of Biochemical Models (MIRIAM) is a human-orientated counterpart of SBML and CellML, or of any machine-readable format for describing models24. Its goal is to ensure that models are encoded in a systematic way and annotated sufficiently and consistently. The need for such human-orientated guidelines became apparent because SBML was sometimes used inconsistently. By analogy with the microarray domain, MIRIAM relates to SBML or CellML in the same way as MIAME relates to MAGE-ML. Unlike MIAME, however, MIRIAM was developed after the formal languages had been introduced, partly as a result of the realization that the formalisms were not used consistently and that often not enough information was provided.

The standardization of the syntax (SBML, CellML) and semantics (MIRIAM) of computational models has enabled their storage, exchange and integration. The BioModels Database is a data resource that allows biologists to store, search and retrieve published mathematical models of biological interest25. Models accepted in the BioModels Database comply with MIRIAM, the standard for model curation. The syntax of each model and its results are carefully verified. Each model is annotated and linked to relevant data resources, such as publications, databases of compounds and pathways, and controlled vocabularies. Although the internal format of the models is SBML, users can retrieve them in many formats (including SBML, CellML, XPP, SciLab and BioPAX).

Technology-related standards for experimental evidence. The lifespan of each particular technology is limited, which means that if an investment in developing a particular standard is to pay off, either the development and deployment of this standard should be relatively inexpensive, or the lifespan of the technology should be sufficiently long. The state of development of technology-related standards varies from domain to domain — in some areas there already is a general agreement on the minimum information to be reported, in others there is less coordination and several XML formats or data models are being developed.

Arguably, standards related to microarray technology are the most advanced. MIAME is now accepted by most scientific journals as a guideline for making publication-supporting data available in a usable format26,27. This has facilitated a flow of data from laboratories into public repositories such as ArrayExpress28 and Gene Expression Omnibus (GEO)29. In turn, this has given a boost to other components of microarray data standards, most importantly MAGE-ML10. For instance, MAGE-ML files generated by more than 20 software systems, covering over 20,000 hybridizations, account for half the data in ArrayExpress (May 2006). There are fully automated MIAME-compliant data submission pipelines, based on the MAGE-ML standard, from the Stanford Microarray Database (SMD)30 and The Institute for Genomic Research database to ArrayExpress. The ArrayExpress repository itself is based on the MAGE-OM model31.

Minimum Information Specification For in situ Hybridization and Immunohistochemistry Experiments (MISFISHIE) is a proposed reporting standard for all studies of gene expression localization (see the MISFISHIE Standard Working Group web page). The specification was jointly developed by members of the US National Institutes of Health/National Institute of Diabetes and Digestive and Kidney Diseases Stem Cell Genome Anatomy Projects Consortium and provides a list of what must be submitted along with experimental results.

Modern proteomics technologies allow information on the expression levels, modifications, localization, interactions and structure of proteins to be gathered on the scale of the entire proteome. One of the working groups of the Human Proteome Organisation (HUPO), the PSI, was founded in 2002 to develop standards for representing mass spectrometry and protein interaction data32. General guidelines, MIAPE33 (Minimum Information About a Proteomics Experiment, analogous to MIAME for microarrays), have been adopted, and domain-specific recommendations for each of the stages of protein quantification techniques have been developed (MIAPE-MS, MIAPE-MSI and MIAPE-Gel), reflecting the complexity of the data. Despite the success in achieving a consensus on experiment reporting requirements and the availability of the UniProtKB knowledgebase, the centralized protein sequence resource, creating a complete formalism (that is, an object model for each of the related domains) might not be trivial. PEDRo (the Proteomics Experiment Data Repository), with its XML implementation PEML, is a model for the most common workflows in proteomics and was used by PSI as a prototype for a proteomics standard34 (for more detail on proteomics standards see Fig. 2).

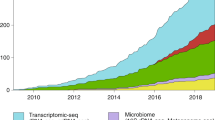

Metabolomics and metabonomics provide an insight into the metabolic status of a biological system by profiling small molecules. Standardization initiatives in these fields are relatively recent by comparison with those of transcriptomics and proteomics. Several attempts have been made to conceptualize the output and metadata of metabolomics experiments, including stand-alone models and formats such as ArMet35 (a standard for plant data) and CCPN (Collaborative Computing Project for the Nuclear-Magnetic Resonance Community)36. Despite tremendous technological progress, the main focus of the field remains the evaluation of the performance of certain platforms, rather than high-throughput interpretation of the results. A recent publication on a standard metabolic reporting structure (SMRS)37 outlines three areas that potentially could benefit from standards: origin of sample, analytical platform and interpretation of the results. Although the guidelines will have to stay flexible for the near future, the motivation to start a discussion is high, for even if the standards cannot be developed at this stage, the metabolomics field will benefit from establishing a community.

Among emerging and less well-developed initiatives is the Open Microscopy Environment (OME)38, a framework for data sharing and data storage in the field of biological microscopy. Minimal Information About Cellular Assays (MIACA) and Minimum Information About an RNAi Experiment (MIARE) are also in this group; the former aims to delineate the minimal descriptors for experiments involving cellular assays, the latter aims to develop a standard to unambiguously describe RNAi experiments.

The experimental areas described above share many common steps; for example, sample processing can be described similarly for transcriptomics, proteomics and metabonomics experiments. There have been several attempts to integrate these domains, such as FGE-OM39 and SysBio-OM40. Although both were derived from MAGE-OM, the two approaches differ: SysBio-OM is 'flat', with all model classes introduced at the same level of abstraction, whereas the developers of FGE-OM put more emphasis on hierarchically separating technology-independent constructs from domain-specific structures. The Functional Genomics Experiment Model (FuGE) is an ongoing project to develop a general model for representing functional genomics investigations that can be extended for specific domains. This effort will provide a ready-made framework for domain-specific modelling, guiding and simplifying the development of more detailed models. As in the microarray domain (MAGE-OM versus the MGED Ontology), the stable representation of domain structure (the object model, FuGE) and the evolving vocabularies (the ontology, FuGO) are being developed as separate projects. These initiatives are important because they help to prevent the development of competing or incompatible standards that describe the same domain. The MGED working group Reporting Structure of Biological Experimentation (RSBE) is working to facilitate interactions between different communities and to collect use cases.
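The idea of separating domain-independent constructs from domain-specific structures, as pursued by FGE-OM and FuGE, can be illustrated with class inheritance: a generic core describes protocols that consume and produce materials, and each technology adds only what is specific to it. The sketch is a hypothetical and highly simplified analogue; none of the class names are taken from FuGE.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical generic core: technology-independent constructs.
@dataclass
class Material:
    name: str

@dataclass
class Protocol:
    name: str
    inputs: List[Material] = field(default_factory=list)
    outputs: List[Material] = field(default_factory=list)

# Hypothetical domain-specific extensions reuse the core and add their own details.
@dataclass
class MicroarrayHybridization(Protocol):
    array_design: str = ""

@dataclass
class GelElectrophoresis(Protocol):
    gel_percentage: float = 0.0

def materials_produced(protocols: List[Protocol]) -> List[str]:
    """Generic code that works for every domain because it relies only on the core."""
    return [m.name for p in protocols for m in p.outputs]

steps: List[Protocol] = [
    MicroarrayHybridization("hyb", inputs=[Material("RNA extract")],
                            outputs=[Material("raw scan")], array_design="A-1"),
    GelElectrophoresis("2D gel", inputs=[Material("protein extract")],
                       outputs=[Material("gel image")], gel_percentage=12.0),
]
print(materials_produced(steps))  # ['raw scan', 'gel image']
```

A 'flat' model would instead define every class at the same level, so generic code such as materials_produced could not be written once for all domains.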

It is worth pointing out that the separation between standards that describe biology and standards that describe the experimental evidence is not always clear cut. For instance, GO includes the concept of the 'evidence code' for capturing how knowledge of a particular biological fact has been obtained, whereas microarray experiments can provide information about the expression levels of particular genes in particular biological conditions. Some standards, such as PSI-MI, which describes protein interactions, can be placed equally well in both categories: the biological facts and the experimental evidence. Note that whereas one of the main difficulties in developing standards for describing biology is the potentially infinite richness of the underlying subject and our limited and changing understanding of it, the limited lifespan of a technology is an important factor in developing standards for each particular technology.

Secrets of successful standards

GO is an example of a successful standard that is widely accepted and used, and is helping to advance our knowledge about biology. GO can be used not only to query databases for genes of a particular function, but also to provide an 'executive summary' characterizing groups of genes found by independent means, such as gene expression data clustering. GO is even used for computational analysis of large groups of genes or genomes41. But not all standards succeed. For instance, the attempts to introduce the Common Object Request Broker Architecture (CORBA) in bioinformatics have largely failed. More lightweight approaches to software integration, such as those based on XML, have prevailed instead. So why has GO been successful, but CORBA not?
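One common way of producing such an 'executive summary' (not necessarily the method used by any particular tool) is to ask, for each GO term, whether genes annotated to it are over-represented in a cluster compared with the genome as a whole. Below is a minimal sketch of the one-sided hypergeometric test often used for this purpose, assuming the annotation counts have already been obtained. In practice the p-values would also need correction, because many terms are tested at once.

```python
from math import comb

def enrichment_p_value(N: int, K: int, n: int, k: int) -> float:
    """One-sided hypergeometric test: probability of observing at least k genes
    annotated to a term in a cluster of n genes, when K of the N genes in the
    genome carry that annotation."""
    if not (0 <= k <= n <= N and 0 <= K <= N):
        raise ValueError("inconsistent counts")
    upper = min(K, n)
    # Sum P(X = i) for i = k..upper; math.comb returns 0 for impossible draws.
    hits = sum(comb(K, i) * comb(N - K, n - i) for i in range(k, upper + 1))
    return hits / comb(N, n)

# Hypothetical counts: 60 of 6,000 genes carry a term, 5 of them fall in a 50-gene cluster.
print(enrichment_p_value(N=6000, K=60, n=50, k=5))
```

About 0.5 annotated genes would be expected in such a cluster by chance, so observing five gives a very small p-value, flagging the term as a candidate description of the cluster.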

Standards are only a means. Despite the growing standardization 'industry', standards will always remain only a means for advancing our knowledge of biology. Standardization itself is not a scientific discipline, at least not a biologically interesting one. Introducing standards should not be a goal in itself; standards should help biologists to solve problems.

Well-defined scope and goals. It is important that the goal of the standardization is well defined and limited. Instead of trying to standardize everything, it is better to rely on other related standards and be consistent with them (GO uses other ontologies, such as ontologies for cell types and chemical compounds). In fact it is detrimental to the community not to use relevant standards developed by others. One should ask oneself a question: suppose my standard is developed and adopted by the community, how exactly will it help to advance our knowledge about biology?

Community involvement: bottom-up rather than top-down approach. Attempts to impose standards unilaterally usually fail. The need for a standard should come from the community. Developing and using a standard is often an investment that will not pay off immediately, so there is a much better chance of success if the user community itself decides that the standard is needed. There is a notable difference between, for instance, the success story of GO, which involved the wide community right from the beginning, and the failure of CORBA, which largely ignored the practices already established in the community. It is therefore a concern that calls have recently been made to introduce standards in systems biology in a top-down manner42 (see also Ref. 43 for the response).

Cost effectiveness. Developing and deploying a standard creates an overhead, which can be expensive. Standards will help only if a particular type of information has to be exchanged often enough to pay off the development, implementation and usage of the standard during its lifespan. Standards designed to describe biological knowledge have to evolve and change as our knowledge in biology grows. Standards related to a particular technology have a lifespan that is no longer than that of the technology itself, which means that there is only a limited period of time in which the overheads can be paid off. In some cases it might be cheaper to deal with data communication problems on a case-by-case basis than to develop a standard. The high complexity and the lack of cost effectiveness were arguably among the main reasons why the attempts to introduce CORBA as a standard in bioinformatics failed. Because the bioinformatics market is considerably smaller than the banking or telecommunication markets, approaches that work in those domains will not necessarily work in bioinformatics.
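The cost-effectiveness argument can be made explicit with a toy model. Assume, purely for illustration, that exchanging one data set ad hoc costs `adhoc_cost`, that exchanging it through a standard costs a smaller `standard_cost`, and that developing and deploying the standard costs a one-off `fixed_cost`. Under these assumptions the standard pays off only if the number of exchanges n within the technology's lifespan satisfies `n * (adhoc_cost - standard_cost) > fixed_cost`. All names and numbers below are hypothetical.

```python
import math


def break_even_exchanges(fixed_cost, adhoc_cost, standard_cost):
    """Smallest number of exchanges n with n * (adhoc_cost - standard_cost) > fixed_cost.

    Toy model (an assumption): each ad hoc exchange costs adhoc_cost; using the
    standard costs fixed_cost once plus standard_cost per exchange.
    """
    saving = adhoc_cost - standard_cost
    if saving <= 0:
        return math.inf  # the standard never pays off under this model
    return math.floor(fixed_cost / saving) + 1


def pays_off(fixed_cost, adhoc_cost, standard_cost, exchanges_per_year, lifespan_years):
    """True if the exchanges expected within the technology's lifespan reach break-even."""
    expected = exchanges_per_year * lifespan_years
    return expected >= break_even_exchanges(fixed_cost, adhoc_cost, standard_cost)


# Hypothetical costs in arbitrary units (for example, person-days).
print(break_even_exchanges(100, 3, 1))  # 51
print(pays_off(100, 3, 1, 10, 5))       # False: only 50 exchanges in the lifespan
print(pays_off(100, 3, 1, 20, 5))       # True: 100 exchanges in the lifespan
```

With these made-up numbers the same standard is a loss at 10 exchanges a year but a gain at 20, and shortening the technology's lifespan shrinks the window in which the fixed cost can be recovered.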

Lack of ambiguity. Standards that allow different ways of encoding the same semantic information are difficult to use. MAGE initially suffered from this problem, which was later addressed by recommendations on how to encode MIAME in MAGE (see above); ideally, this should be defined by the model itself. It is even more important to realize that there is a considerable difference between building a 'perfect ontology' for knowledge representation and building a practical standard that can be taken up by the entire community as a means for information exchange. If the ontology is complex, it is unlikely that the wider community will use it consistently, if they use it at all. Even in GO, where a relatively limited community does the actual annotation, consistency of use is an issue44. For ontologies such as MO, which is used to describe and submit experiments to public databases by experimentalists who are not trained in its use, this is an even more important problem.
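The cost of ambiguity falls on every program that reads the data. In the hypothetical Python sketch below, a permissive format allows the sample organism to be recorded either as a structured characteristic or as a free-text comment. The record layouts are invented for illustration and are not taken from MAGE or any other standard.

```python
# Two hypothetical records carrying the same fact in two permitted encodings.
record_a = {"characteristics": {"organism": "Mus musculus"}}
record_b = {"comments": ["organism: Mus musculus"]}


def organism(record):
    """Recover the organism, trying each encoding this reader knows about."""
    structured = record.get("characteristics", {})
    if "organism" in structured:
        return structured["organism"]
    for comment in record.get("comments", []):
        key, _, value = comment.partition(":")
        if key.strip().lower() == "organism":
            return value.strip()
    return None  # any other encoding is silently missed


print(organism(record_a), organism(record_b))  # Mus musculus Mus musculus
print(organism({"comments": ["species = Mus musculus"]}))  # None
```

Each additional permitted encoding adds a branch that every reading tool must implement, and an encoding the reader does not anticipate is silently lost, which is why a standard ideally fixes one encoding per fact.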

Simplicity. Simplicity relates to all the issues noted above and, in particular, to cost effectiveness and lack of ambiguity. But the need for simplicity goes further. An information communication standard can apply to communicating information between humans and computers (for example, GO) or between computers (for example, FASTA). But in the end, to advance our knowledge, information must be communicated between humans, a computer being only a tool. This consideration dictates that a useful standard must not introduce a higher granularity (and therefore complexity) than a human can use. For instance, does it help to record every step of a laboratory protocol as a separate action if the protocol is recorded for a biologist to read rather than for a robot to execute? (The latter is possible and could be useful, but in that case a programming language, not an information communication standard, is used.) This does not, however, mean that only the coarsest granularity, that of free text, can be useful. For instance, although a simple voice recording on magnetic tape might be sufficient to communicate information from person to person, a finer granularity, obtained by parsing the speech into an appropriate model, might allow the recording to be translated automatically from one language to another.

To demonstrate the importance of these points, let us consider for a moment how these principles worked in a totally different domain. In the 1970s two new high-level programming languages were created: Ada and C. Although Ada was arguably better designed and was supported by the US Department of Defense, C gained much wider popularity and is still used today, along with C++ and other C derivatives. Ada was developed with the aim of providing a single universal programming language, whereas C was built to make programs easier to write and evolved naturally (standards are only means). Ada was meant to satisfy all the needs of the US Department of Defense; the designers of C saw the language as a tool for UNIX programming (well-defined scope and goals). Ada was the result of a contract for programming language development, whereas C began as a two-person effort, with standardization and committees coming later (community involvement: a bottom-up rather than top-down approach). That also meant cost effectiveness. Finally, Ada supported parallel programming, generics and so on, whereas one of the main design criteria for C was that a simple, single-pass compiler should be possible (simplicity). This example is relevant for standards in systems biology; after all, standardization is nothing more than mutual agreement about a model, be it a programming model or a data representation model.

The future

The role of ontologies and their use is likely to grow in the future, for instance in the context of the Semantic Web. Research on the Semantic Web concentrates on designing languages, methods and tools that would enable the building of distributed knowledge resources, similar to the World Wide Web but precise enough to support automated reasoning at some level. The life sciences are one of the areas that would benefit from the Semantic Web; to facilitate communication and reasoning by computers, humans have to design and adopt languages for knowledge exchange (for example, see the W3C Semantic Web Health Care and Life Sciences Interest Group web site). Although there have been no practical demonstrations of the usefulness of the Semantic Web for the life sciences so far, this is an active area of research (see the summary report).
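As a rough illustration of knowledge that is 'precise enough for automated reasoning', the Python sketch below stores facts as subject-predicate-object triples, in the spirit of RDF, and derives new facts with two rules that loosely mirror RDF Schema entailment: subclass relations are transitive, and an instance of a class is also an instance of its superclasses. The resources and predicate names are hypothetical, and a real Semantic Web application would use a dedicated RDF library and reasoner rather than this naive fixed-point loop.

```python
# Hypothetical triples; "type" and "subClassOf" play the roles of rdf:type and rdfs:subClassOf.
TRIPLES = {
    ("kinase", "subClassOf", "enzyme"),
    ("enzyme", "subClassOf", "protein"),
    ("proteinX", "type", "kinase"),
}


def infer(triples):
    """Apply two rules repeatedly until no new triple can be derived:
    (A subClassOf B), (B subClassOf C) => (A subClassOf C)
    (x type B), (B subClassOf C)       => (x type C)
    """
    facts = set(triples)
    while True:
        derived = {
            (a, p, d)
            for (a, p, b) in facts
            if p in ("subClassOf", "type")
            for (c, q, d) in facts
            if q == "subClassOf" and c == b
        }
        if derived <= facts:
            return facts
        facts |= derived


facts = infer(TRIPLES)
print(sorted(t for t in facts if t[0] == "proteinX"))
```

Nothing in the input states that proteinX is a protein; the conclusion follows only because the meaning of `subClassOf` and `type` is fixed precisely, which is the property the Semantic Web relies on.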

GO has already been mentioned as an example of a successful standard. MIAME is another. It has helped software developers to design databases and laboratory information management systems for microarray data26,45, which is reflected in the growing number of citations of the original MIAME paper (Table 3). The adoption of MIAME by scientific journals has made the microarray gene expression data that support publications accessible to the community in a usable format and in the necessary detail. The impact of MIAME can be seen by comparing the availability of data from gene expression and array-CGH (comparative genomic hybridization) experiments. For array-CGH experiments, where MIAME has not been widely adopted by journals, important data sets supporting publications are sometimes unavailable (B. Ylstra, personal communication).
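Reporting requirements such as MIAME lend themselves to automated checks at submission time. The sketch below assumes that a submission is a Python dictionary and checks that a set of required items is present and non-empty. The item names are loosely inspired by the areas MIAME covers but are hypothetical; they are not the normative MIAME checklist.

```python
# Hypothetical required items, loosely inspired by the areas MIAME covers;
# this is NOT the normative MIAME checklist.
REQUIRED_ITEMS = (
    "experimental_design",
    "array_design",
    "samples",
    "hybridizations",
    "measurements",
    "normalization",
)


def missing_items(submission):
    """Return the required items that are absent or empty in a submission."""
    return [item for item in REQUIRED_ITEMS if not submission.get(item)]


submission = {
    "experimental_design": "time course, three biological replicates",
    "array_design": "custom cDNA array",
    "samples": ["liver_t0", "liver_t1"],
    "hybridizations": [],  # present but empty
    "measurements": ["liver_t0.txt", "liver_t1.txt"],
}
print(missing_items(submission))  # ['hybridizations', 'normalization']
```

A check of this kind only verifies that information is present; whether it is described in enough detail still needs curation.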

The data exchange formats PSI-MI and MAGE-ML have helped to get many of the high-throughput data sets into the public domain. Nevertheless, from a bench biologist's point of view, the benefits of adopting standards are not yet overwhelming. Most standardization initiatives are still mainly an investment for biologists. So how many of the growing number of standardization initiatives will pay off?

We think that the answer is related to the Law of Standards formulated by the computer scientist John F. Sowa: “Whenever a major organization develops a new system as an official standard for X, the primary result is the widespread adoption of some simpler system as a de facto standard for X”. Many of the current standardization initiatives are useful in that they help biologists and bioinformaticians to better understand particular types of data and domains of systems biology, and create a common language with which biologists and information technologists can communicate10. It is possible that some of the more complex standards will never develop into data exchange formats that are accepted and used by everybody. Nevertheless, they will create a better understanding of the domain, which is needed to develop the next generation of standards, if and when they are required. For example, a draft of a simpler standard for microarray data, MAGE-TAB, has recently been presented (see the Microarray and Gene Expression web page); its development has been based on experience with the first-generation standard, MAGE. Similarly, XML had a predecessor, SGML, which was too complex to become widely adopted (although it is still used in particular applications).
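Part of the appeal of a simpler, spreadsheet-like format is that general-purpose tools can read it. The Python sketch below parses a small tab-delimited sample table with the standard-library `csv` module. The column headers are illustrative, loosely modeled on MAGE-TAB-style tables, and are not quoted from the MAGE-TAB specification.

```python
import csv
import io

# Illustrative tab-delimited table; headers are loosely MAGE-TAB-like, not quoted from a spec.
TABLE = (
    "Source Name\tCharacteristics[organism]\tArray Data File\n"
    "sample1\tHomo sapiens\tsample1.txt\n"
    "sample2\tHomo sapiens\tsample2.txt\n"
)


def read_rows(text):
    """Parse a header line plus data lines into one dict per row."""
    return list(csv.DictReader(io.StringIO(text), delimiter="\t"))


rows = read_rows(TABLE)
for row in rows:
    print(row["Source Name"], row["Characteristics[organism]"], row["Array Data File"])
```

The same file can be opened in a spreadsheet by a bench biologist, which is exactly the kind of low overhead that lets a simpler system become a de facto standard.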

Most of the successful standard development initiatives started as grass-roots movements without any dedicated funding. This is certainly true of GO and MGED. Only after GO became successful was dedicated funding provided to the GO Consortium. Some funding has recently been awarded to some MGED members for developing the next-generation microarray standards, including MAGE-TAB. Nevertheless, there are no established mechanisms for funding standards development initiatives, and funding agencies are reluctant to fund such 'unglamorous, blue-collar' work. Creating a mechanism for funding standards development does not contradict the need to develop standards in a bottom-up fashion: the funding should be awarded to multi-institutional groups that have proved their ability to work together successfully.

Although standardization is not a goal in itself, its importance is growing in the high-throughput era, much as it did in manufacturing during industrialization. The data from high-throughput technologies are being generated at a rate that makes managing and using these data sets on a case-by-case basis impossible. Although some of the data generated by the newest technologies might have too low a signal-to-noise ratio to be re-usable, data quality improves as a technology matures, and it is a waste of resources not to share and re-use these expensive data sets. However, this is only possible if the instruments that generate the data, laboratory information management systems, databases, data analysis tools and systems modelling software can talk to each other easily. This is the purpose of standardization.

One of the main goals of systems biology research is ultimately to improve health care. Health-care standards, such as those developed by Health Level Seven (HL7), an organization accredited by the American National Standards Institute (ANSI), are beyond the scope of this review; an interested reader should consult Ref. 46 for more information. Interactions between genomics and bioinformatics on the one hand and medical informatics on the other are increasing. There are large projects that investigate the effects of specific molecules on a patient's health (for example, the Molecular Phenotyping to Accelerate Genomic Epidemiology (MolPAGE) project), there are organizations that coordinate such projects (for example, the UK National Cancer Research Institute), and the US Food and Drug Administration is exploring voluntary submission of genomics data (with MIAME noted among the possibilities). From the data standards perspective, integrating a patient's data with bioinformatics data will be the next big challenge.

Conclusions

Several standards are already contributing to the advancement of knowledge in biology, although most standardization initiatives are still in the investment stage for biologists. In the life sciences, standards development is typically done by grass-roots movements, and it is difficult to persuade funding agencies to fund such activities. Although it might be faster for a single organization to develop its own standards, a bottom-up community consensus approach is the key to long-term acceptance and usefulness of standards. To avoid proliferation of standards, common features of existing standards should be re-used wherever possible. Simplicity, but not oversimplification, is the key to success.

References

Ashburner, M. et al. Gene Ontology: tool for the unification of biology. Nature Genet. 25, 25–29 (2000). GO has been a true success story: it has been taken up by the entire scientific community as the main means for annotation of gene products.

Brazma, A. et al. Minimum Information About a Microarray Experiment (MIAME) — toward standards for microarray data. Nature Genet. 29, 365–371 (2001). The first result of the microarray data standardization effort was a community agreement about the level of detail necessary to make data exchange meaningful (MIAME). MIAME set a pace for such standards (Minimum Information About XYZ) in other domains.

Hucka, M. et al. The Systems Biology Markup Language (SBML): a medium for representation and exchange of biochemical network models. Bioinformatics 19, 524–531 (2003). SBML has been evolving since the early 2000s through the efforts of an international group of software developers and users. Today, SBML is supported by over 90 software systems.

Lloyd, C. M., Halstead, M. D. & Nielsen, P. F. CellML: its future, present and past. Prog. Biophys. Mol. Biol. 85, 433–450 (2004).

Quackenbush, J. Data standards for 'omic' science. Nature Biotechnol. 22, 613–614 (2004).

Stoeckert, C. J. Jr, Causton, H. C. & Ball, C. A. Microarray databases: standards and ontologies. Nature Genet. 32, S469–S473 (2002).

Gentleman, R. C. et al. Bioconductor: open software development for computational biology and bioinformatics. Genome Biol. 5, R80 (2004).

Brazma, A. On the importance of standardisation in life sciences. Bioinformatics 17, 113–114 (2001).

Brazma, A., Robinson, A., Cameron, G. & Ashburner, M. One-stop shop for microarray data. Commentary. Nature 403, 699–700 (2000).

Spellman, P. A status report on MAGE. Bioinformatics 21, 3459–3460 (2005).

Whetzel, P. L. et al. The MGED Ontology: a resource for semantics-based description of microarray experiments. Bioinformatics 22, 866–873 (2006).

Eyre, T. A. et al. The HUGO Gene Nomenclature Database, updates. Nucleic Acids Res. 34, D319–D321 (2006).

Schlitt, T. & Brazma, A. Modelling gene networks at different organisational levels. FEBS Lett. 579, 1859–1866 (2005).

Schlitt, T. & Brazma, A. Modelling in molecular biology: describing transcription regulatory networks. Philos. Trans. R. Soc. B 361, 483–494 (2006).

Bard, J., Rhee, S. Y. & Ashburner, M. An ontology for cell types. Genome Biol. 6, R21 (2005).

Kelso, J. et al. eVOC: a controlled vocabulary for unifying gene expression data. Genome Res. 13, 1222–1230 (2003).

Bard, J. B. & Rhee, S. Y. Ontologies in biology: design, applications and future challenges. Nature Rev. Genet. 5, 213–222 (2004).

Hermjakob, H. et al. The HUPO PSI's molecular interaction format — a community standard for the representation of protein interaction data. Nature Biotechnol. 22, 177–183 (2004). The PSI aims to define community standards for data representation in proteomics to facilitate data comparison, exchange and verification. The data exchange format for protein–protein interactions PSI-MI was designed by a group of people including representatives from database providers and users in both academia and industry, and is supported by the DIP, MINT, IntAct, BIND and HPRD databases.

Luciano, J. S. PAX of mind for pathway researchers. Drug Discov. Today 10, 937–942 (2005).

Joshi-Tope, G. et al. Reactome: a knowledgebase of biological pathways. Nucleic Acids Res. 33, D428–D432 (2005).

Tyson, J. J. Modeling the cell division cycle: cdc2 and cyclin interactions. Proc. Natl Acad. Sci. USA 88, 7328–7332 (1991).

Huang, C. Y. & Ferrell, J. E. Jr. Ultrasensitivity in the mitogen-activated protein kinase cascade. Proc. Natl Acad. Sci. USA 93, 10078–10083 (1996).

Stromback, L. & Lambrix, P. Representations of molecular pathways: an evaluation of SBML, PSI MI and BioPAX. Bioinformatics 21, 4401–4407 (2005).

Le Novere, N. et al. Minimum information requested in the annotation of biochemical models (MIRIAM). Nature Biotechnol. 23, 1509–1515 (2005).

Le Novere, N. et al. BioModels Database: a free, centralized database of curated, published, quantitative kinetic models of biochemical and cellular systems. Nucleic Acids Res. 34, D689–D691 (2006).

Ball, C. A. et al. Submission of microarray data to public repositories. PLoS Biol. e317 (2004).

Stoeckert, C. J., Quackenbush, J., Brazma, A. & Ball, C. A. Minimum information about a functional genomics experiment: the state of microarray standards and their extension to other technologies. Drug Discov. Today 3, 159–164 (2004).

Brazma, A. et al. ArrayExpress — a public repository for microarray gene expression data at the EBI. Nucleic Acids Res. 31, 68–71 (2003).

Barrett, T. et al. NCBI GEO: mining millions of expression profiles — database and tools. Nucleic Acids Res. 33, D562–D566 (2005).

Gollub, J. et al. The Stanford Microarray Database: data access and quality assessment tools. Nucleic Acids Res. 31, 94–96 (2003).

Sarkans, U. et al. The ArrayExpress gene expression database: a software engineering and implementation perspective. Bioinformatics 21, 1495–1501 (2005).

Orchard, S., Hermjakob, H., Taylor, C., Aebersold, R. & Apweiler, R. Human Proteome Organisation Proteomics Standards Initiative. Pre-Congress Initiative. Proteomics 5, 4651–4652 (2005).

Orchard, S. et al. Common interchange standards for proteomics data: public availability of tools and schema. Proteomics 4, 490–491 (2004).

Taylor, C. F. et al. A systematic approach to modeling, capturing, and disseminating proteomics experimental data. Nature Biotechnol. 21, 247–254 (2003).

Jenkins, H. et al. A proposed framework for the description of plant metabolomics experiments and their results. Nature Biotechnol. 22, 1601–1606 (2004).

Fogh, R. et al. The CCPN project: an interim report on a data model for the NMR community. Nature Struct. Biol. 9, 416–418 (2002).

Lindon, J. C. et al. Standard Metabolic Reporting Structures working group. Summary recommendations for standardization and reporting of metabolic analyses. Nature Biotechnol. 23, 833–838 (2005). The SMRS group aims to supply an open, community-driven specification for the reporting of metabonomic/metabolomic experiments and a standard file transfer format for the data. Participants in the SMRS include leaders in the fields of metabonomics and metabolomics from both industry and academia.

Goldberg, I. G. et al. The Open Microscopy Environment (OME) data model and XML file: open tools for informatics and quantitative analysis in biological imaging. Genome Biol. 6, R47 (2005).

Jones, A., Hunt, E., Wastling, J. M., Pizarro, A. & Stoeckert, C. J. Jr. An object model and database for functional genomics. Bioinformatics 20, 1583–1590 (2004).

Xirasagar, S. et al. CEBS object model for systems biology data, SysBio-OM. Bioinformatics 20, 2004–2015 (2004).

Rendl, M., Lewis, L. & Fuchs, E. Molecular dissection of mesenchymal–epithelial interactions in the hair follicle. PLoS Biol. 3, e331 (2005).

Cassman, M. Barriers to progress in systems biology. Nature 438, 1079 (2005).

Quackenbush, J. et al. Top-down standards will not serve systems biology. Nature 440, 24 (2006).

Raychaudhuri, S., Chang, J. T., Sutphin, P. D. & Altman, R. B. Associating genes with gene ontology codes using a maximum entropy analysis of biomedical literature. Genome Res. 12, 203–214 (2002).

[Editorial] Microarray standards at last. Nature 419, 323 (2002).

Dolin, R. H. et al. HL7 clinical document architecture, Release 2. J. Am. Med. Inform. Assoc. 13, 30–39 (2006).

Carr, S. et al. Working Group on Publication Guidelines for Peptide and Protein Identification Data. The need for guidelines in publication of peptide and protein identification data. Mol. Cell. Proteomics 3, 531–533 (2004).

Jones, A., Wastling, J. & Hunt, E. Proposal for a standard representation of two-dimensional gel electrophoresis data. Comp. Funct. Genomics 5, 492–501 (2003).

Pedrioli, P. G. et al. A common open representation of mass spectrometry data and its application to proteomics research. Nature Biotechnol. 22, 1459–1466 (2004).

Acknowledgements

We would like to thank M. Ashburner, C. Brooksbank, H. Hermjakob and N. Le Novere for reading the manuscript and providing valuable comments. The work on this survey was partly funded by the MolPAGE grant from the European Commission and a grant from the US National Human Genome Research Institute and National Institute of Biomedical Imaging and Bioengineering.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Related links

FURTHER INFORMATION

MIAME and MAGE-OM Terms Explained web site

Microarray and Gene Expression

Microarray Gene Expression Data Society web site

MISFISHIE Standard Working Group web page

Proteomics Standards Initiative — Molecular Interactions

Summary Report — W3C Workshop on Semantic Web for Life Sciences

Systems Biology Markup Language

W3C Semantic Web Health Care and Life Sciences Interest Group web site

Glossary

- Domain: A field of study.
- Conceptual model: In information engineering, a model that is meant to facilitate human communication; it does not need to be absolutely precise, as opposed to a formal model, which has strictly defined semantics.
- Data exchange format: A file or message format that is formally defined so that software can be built that 'knows' where to find various pieces of information.
- Ontology: A model that describes a domain and can be used to reason about objects and the relationships between them.
- Tool: In software engineering, a program or set of programs that enables one or more particular tasks to be carried out.
- Directed acyclic graph: A graph consisting of nodes and edges, in which edges have a direction (that is, can be traversed only one way) and it is not possible to follow a sequence of edges in their direction and return to the starting node (that is, there are no directed closed loops).
- Diagram: A visual representation of concepts and relationships, used in information engineering to facilitate human communication.
- Semantics: The meaning of something; in computer science, the term is usually used in contrast to syntax (that is, format).
- Reporting requirements: An agreed set of information items that need to be provided for meaningful information communication (reporting).
- Metabolomics: The study of metabolite profiles in individual cells and cell types.
- Metabonomics: The study of the systemic response to pathophysiological stimuli and of the regulation of function in the whole organism through analysis of biofluids and tissues.
- Visual language: In computer science and computer engineering, an agreed set of conventions for drawing diagrams that formally describe a model or a program.
- Class: A concept used in ontology engineering and model building for referring to a set of objects with similar properties.
- Graph: A visual representation of information in the form of edges (lines) and nodes (connection points). In biology, graphs are often drawn as boxes (nodes) and lines between boxes.
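The acyclicity condition in the definition of a directed acyclic graph can be checked mechanically, which is useful when an ontology such as GO is edited by many people. Below is a minimal Python sketch using Kahn's algorithm, assuming the graph is given as a list of (parent, child) edges; the node names in the usage lines are hypothetical.

```python
from collections import deque


def is_dag(edges):
    """Kahn's algorithm: repeatedly remove nodes with no incoming edges.
    If every node can be removed, the directed graph has no cycle."""
    nodes = {node for edge in edges for node in edge}
    indegree = {node: 0 for node in nodes}
    children = {node: [] for node in nodes}
    for parent, child in edges:
        children[parent].append(child)
        indegree[child] += 1
    queue = deque(node for node in nodes if indegree[node] == 0)
    removed = 0
    while queue:
        node = queue.popleft()
        removed += 1
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                queue.append(child)
    return removed == len(nodes)


print(is_dag([("a", "b"), ("a", "c"), ("b", "c")]))  # True
print(is_dag([("a", "b"), ("b", "c"), ("c", "a")]))  # False
```

The first graph contains a loop if edge directions are ignored, but no directed cycle, so it is still a DAG; this is what allows a term in an ontology to have more than one parent.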

Cite this article

Brazma, A., Krestyaninova, M. & Sarkans, U. Standards for systems biology. Nat Rev Genet 7, 593–605 (2006). https://doi.org/10.1038/nrg1922
