Key Points

- Having information from multiple senses converge onto the same neurons allows the neurons to work in concert so that their combined product can enhance the physiological salience of an event, increase the ability to render a judgment about its identity, and initiate responses faster than would otherwise be possible.

- This interactive synergy among the senses, or 'multisensory integration', is manifested in individual neurons, by enhancing or degrading their responses, and in behaviour, by producing corresponding alterations in performance.

- Multisensory integration is guided by principles that relate to the spatial and temporal relationship among cross-modal stimuli, as well as to the vigor of the neuron's responses to their individual component stimuli.

- The spatial principle of multisensory integration relies on faithful register among a neuron's different receptive fields and this register must be maintained in spite of independent movement of the sense organs (such as the eyes). Recent studies suggest that compensation for such movement is less than perfect, and occurs to varying degrees in different neurons and brain regions. Degradation in receptive-field register has strong implications for multisensory integration, but these remain to be examined empirically.

- Multisensory integration is crucial for high-level cognitive functions in which considerations such as semantic congruence might determine its neural products and the perceptions and behaviours that depend on them.

- Multiple approaches have demonstrated the impact of multisensory integration in different brain structures in different species, including single-neuron and event-related-potential recordings and brain-imaging techniques.

- Primary, sensory-specific areas of the brain have now been shown to receive inputs from other senses. The functional role of these other inputs is not yet known, but they might facilitate the processing of information in the native sense.

Abstract

For thousands of years science philosophers have been impressed by how effectively the senses work together to enhance the salience of biologically meaningful events. However, they really had no idea how this was accomplished. Recent insights into the underlying physiological mechanisms reveal that, in at least one circuit, this ability depends on an intimate dialogue among neurons at multiple levels of the neuraxis; this dialogue cannot take place until long after birth and might require a specific kind of experience. Understanding the acquisition and usage of multisensory integration in the midbrain and cerebral cortex of mammals has been aided by a multiplicity of approaches. Here we examine some of the fundamental advances that have been made and some of the challenging questions that remain.

Main

Encoding, decoding and interpreting information about biologically significant events are among the brain's most important functions, and collectively they require a good deal of neural circuitry. These functions have been powerful driving forces in evolution and have led to the development of an array of specialized sensory organs, each of which is linked to multiple specialized brain regions.

There are obvious advantages associated with having multiple senses: each sense is of optimal usefulness in a different circumstance, and collectively they increase the likelihood of detecting and identifying events or objects of interest. However, these advantages pale in comparison with those afforded by the ability to combine sources of information. In this case the integrated product reveals more about the nature of the external event and does so faster and better than would be predicted from the sum of its individual contributors. It might be surprising to find that this is not a new evolutionary strategy: our earliest single-celled progenitor is thought to have been endowed with multiple 'senses' (that is, receptors for different environmental stimuli) and the ability to use them synergistically1.

It is this synergy, or interaction, among the senses, and the fusion of their information content, that is described by the phrase 'multisensory integration'. Multisensory integration is most commonly assessed by considering the effectiveness of a cross-modal stimulus combination, in relation to that of its component stimuli, for evoking some type of response from the organism. For example, the likelihood or magnitude of a response to an event that has both visual and auditory components is compared with that for the visual and the auditory stimuli alone. At the level of the single neuron, multisensory integration is defined operationally as: a statistically significant difference between the number of impulses evoked by a cross-modal combination of stimuli and the number evoked by the most effective of these stimuli individually2.

Multisensory integration can therefore result in either enhancement or depression of a neuron's response. In principle, the magnitude of multisensory integration is a measure of the relative physiological salience of an event3. If one imagines that sensory stimuli compete for attention and for access to the motor machinery that generates reactions to them, then the potential consequence of multisensory enhancement (or multisensory depression) is an increased (or decreased, for depression) likelihood of detecting and/or initiating a response to the source of the signal (Fig. 1, also see Refs 4–6 for reviews). The extent to which multisensory integration aids the detection of an event has a direct, positive effect on the speed with which a response can be generated7,8,9,10,11. The magnitude of multisensory integration can vary widely for different neurons and even for the same neuron encountering different cross-modal stimulus combinations. For multisensory enhancement (the form of interaction on which we will mainly focus), differences in magnitude reflect different underlying computations. The largest enhancements are due to superadditive combinations of cross-modal influences and the smallest are due to subadditive combinations. Along with changes in sensory-response magnitude, multisensory integration can shorten the interval between sensory encoding and motor-command formation7, and it can speed sensory processing itself by enhancing the initial subthreshold portion of a response such that the multisensory response has a significantly shorter latency than either of the component unisensory responses12.

A woman and cat detect the approach of a dog, based on sight and sound. When these cues are weak (when the dog is far away), the neural computation involved in their integration is superadditive, such that the response not only exceeds the most vigorous component response, but also exceeds their sum (top). As the dog gets closer, the cues become more effective, unisensory component responses become more vigorous, and integrated responses become proportionately smaller. The computation now becomes additive (middle) and then subadditive (bottom). Although both the additive and the subadditive computations also produce responses that exceed the most vigorous component response (that is, they all exhibit multisensory integration), their enhancements are proportionately less than the one shown at the top. All enhancements increase the probability of orientation, but the benefits of multisensory integration are proportionately greatest when cross-modal cues are weakest. Figure modified, with permission, from Ref. 3 © (2007) Lippincott Williams & Wilkins.
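The comparison between a cross-modal response and its component responses can be made concrete with a short sketch. It assumes the percentage enhancement index that is widely used in this literature, (CM − SMmax)/SMmax × 100, where CM is the mean response to the cross-modal stimulus and SMmax is the mean response to the most effective component stimulus. The function names, the `tol` parameter and the impulse counts below are hypothetical and chosen only for illustration; a real analysis would replace the fixed tolerance with a statistical test across trials.

```python
def enhancement_index(cross_modal, unisensory):
    """Percent change of the cross-modal response relative to the
    most effective unisensory response."""
    best = max(unisensory)
    if best <= 0:
        raise ValueError("best unisensory response must be positive")
    return 100.0 * (cross_modal - best) / best


def classify_computation(cross_modal, unisensory, tol=0.0):
    """Compare the cross-modal response with the arithmetic sum of the
    unisensory responses (tol is a hypothetical tolerance band)."""
    total = sum(unisensory)
    if cross_modal > total + tol:
        return "superadditive"
    if cross_modal < total - tol:
        return "subadditive"
    return "additive"


# Hypothetical mean impulse counts per trial (illustrative values only).
weak = {"V": 1.0, "A": 2.0, "VA": 6.0}      # weak cues, e.g. a distant event
strong = {"V": 8.0, "A": 10.0, "VA": 14.0}  # strong cues, e.g. a nearby event

for label, r in (("weak", weak), ("strong", strong)):
    index = enhancement_index(r["VA"], [r["V"], r["A"]])
    kind = classify_computation(r["VA"], [r["V"], r["A"]])
    print(f"{label} cues: {index:.0f}% enhancement, {kind}")
```

With these made-up numbers both responses count as enhancement, because each exceeds the best component response, but only the weak-cue response exceeds the sum of its components.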

In addition to altering the salience of cross-modal events, multisensory integration involves creating unitary perceptual experiences. Taste, for example, emerges from the synthesis of gustatory, olfactory, tactile and sometimes visual information. This raises some non-trivial issues: integrating information from different senses must take into account not only the inherent complexities of information processing in each individual modality, but also the fact that each modality has its own unique subjective impressions or 'qualia' (for example, the perception of hue is specific to the visual system, whereas tickle and itch are specific to the somatosensory system) that must not be disrupted by the integrative process. Although we still do not fully understand how this is accomplished, we have learned some of the strategies that the nervous system uses to integrate (or 'bind') cues from different senses so that they produce a unitary experience. Often this is accomplished by weighting the various cues based on how much information they are likely to provide about a given event13,14,15. In this context it is important to recognize that information in any given sensory category is always dealt with against a background of inputs from many senses, thereby complicating the task of deciding which of them are appropriate for binding. It is interesting to note that we are largely unaware of these processes except when small temporal and/or spatial discrepancies disrupt the tight links between cross-modal cues that are naturally associated; this often results in a vivid illusion (Box 1).
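One common way to formalize the weighting of cues by their informativeness is to weight each cue by its reliability, taken as the inverse of its variance. The sketch below is offered under that assumption and is not necessarily the procedure used in the cited studies; the function name and the numerical values are hypothetical.

```python
def combine_cues(estimates, variances):
    """Reliability-weighted average of independent cue estimates.

    Each cue is weighted by the inverse of its variance, so the more
    reliable cue dominates. Returns (combined estimate, combined variance).
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    combined = sum(r * e for r, e in zip(reliabilities, estimates)) / total
    return combined, 1.0 / total


# Hypothetical example: a visual cue places an event at 0 degrees
# (variance 1) and an auditory cue places it at 10 degrees (variance 4).
location, variance = combine_cues([0.0, 10.0], [1.0, 4.0])
print(f"combined location: {location:.1f} deg, variance: {variance:.2f}")
```

In this illustration the combined estimate lies closer to the more reliable visual cue, and its variance is lower than that of either cue alone.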

The benefits of multisensory integration for orienting behaviour have received a good deal of attention and provided many insights into the neural mechanisms that underlie the integration of sensory information (Box 2). These insights have been derived from physiological studies of individual multisensory neurons in a number of species and brain regions, particularly in the midbrain and cerebral cortex of cats and monkeys. By contrast, we know much less about the physiological processes that underlie higher-order multisensory processes, such as perceptual binding. These inquiries are in their nascent stages. Thus, this Review focuses heavily on the insights obtained from physiological studies in single neurons, and examines how that information has influenced our thinking about the impact of multisensory integration on behaviour and perception. We begin by briefly reviewing what is known about the properties of multisensory neurons in the midbrain and the role of these neurons in orienting behaviour. We then consider a host of current issues relating to multisensory integration in the cerebral cortex of the cat, the monkey and the human brain. In doing so, we move beyond simple orienting behaviour to explore the neural bases of some higher-order multisensory phenomena.

Multisensory neurons in the superior colliculus

Multisensory neurons respond to stimuli from more than a single sense. Although they are present at all levels in the brain and in all mammals, they are particularly abundant in the superior colliculus (SC) of cats, making it a rich source of information about their properties. This midbrain structure controls changes in orientation (for instance, gaze shifts) in response to stimuli in the visual space on the opposite side of the head to the SC under study. Its visual, auditory and somatosensory inputs are derived from ascending sensory pathways and descending projections from the cortex, which converge in various combinations on SC neurons.

The principles of multisensory integration in SC neurons. The spatial principle is an issue of particular importance for the orienting role of the SC. Each multisensory neuron has multiple excitatory receptive fields, one for each modality to which it responds. These receptive fields are in spatial register with one another (for example, the two receptive fields of a visual–auditory neuron overlap in space), so that the location of an event, rather than the modality it activates, is of greatest importance in determining whether the neuron is activated (Fig. 2). These two stimulus modalities will be defined as originating from the same source location as long as they are within the space that is registered by their overlapping receptive fields; they do not need to originate from an identical point source in space16. If the stimuli are derived from spatially disparate locations, such that one stimulus falls within and the other outside the neuron's receptive field, there will be either no enhancement or response depression17,18,19. The response depression occurs when the second stimulus lies within an inhibitory region that borders the excitatory receptive fields of some SC neurons, and can be powerful enough to suppress the excitation evoked by the other stimulus.

Depiction of visual (V), auditory (A) and combined (VA) stimuli, impulse rasters (in which each dot represents a single neural impulse and each row represents a single trial), peristimulus time histograms (in which the impulses are summed across trials at each moment of time and binned), single-trace oscillograms and a bar plot depicting the response of a superior colliculus (SC) neuron to the stimulation. Note that the multisensory response greatly exceeds the response to either stimulus alone, thereby meeting the criterion for multisensory integration (that is, response enhancement). In this case, however, the integrated response exceeds the sum of the component responses, revealing that the underlying neural computation that is engaged during multisensory integration is superadditivity. Figure reproduced, with permission, from Ref. 26 © (1986) Cambridge University Press.

The spatial principle of multisensory integration is remarkably robust and is evident in a host of perceptual situations in which the location of the event is crucial. However, the need to maintain receptive-field register and the ability to move each sense organ independently seem to be incompatible. A solution adopted by the SC is to link the various modality-specific receptive fields to the position of the eyes. For example, moving the eyes leftward produces compensatory shifts in both auditory20,21,22 and somatosensory receptive fields23. Such compensation for eye movements seems to create a common oculocentric coordinate frame, ensuring that the individual stimulus components of a cross-modal event interact to produce a single, coherent locus of activity within the SC sensory–motor map. The issues of common coordinate frames and the implications of incomplete compensatory receptive-field shifts for multisensory integration are discussed below.

If they are to be integrated, different sensory stimuli must also be linked in time24,25. In general, these stimuli can reach the nervous system within a window of time that is comparatively long, sometimes lasting several hundred milliseconds. This enables integration to take place despite the different response latencies, conduction speeds and onsets of visual, auditory and somatosensory stimuli. The magnitude of the integrated response is sensitive to the temporal overlap of the responses that are initiated by each sensory input and is usually maximal when the peak periods of activity coincide.

Multisensory enhancement is typically inversely related to the effectiveness of the individual cues that are being combined26. This principle of inverse effectiveness makes intuitive sense. Individual cues that are highly salient will be easily detected and localized. Thus, their combination has a proportionately modest effect on neural activity and behavioural performance. By contrast, weak cues evoke comparatively few neural impulses and their responses are therefore subject to substantial enhancement when stimuli are combined. In these cases the multisensory response can exceed the arithmetic sum of their individual responses3,26,27,28,29 and can have a significant positive effect on behavioural performance by increasing the speed and likelihood of detecting and locating an event7,8,9,10,11,30,31,32.

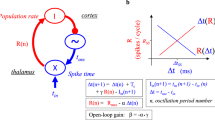

Descending excitatory inputs from a specific region of the association cortex are essential for multisensory integration in SC neurons. These inputs mainly come from the anterior ectosylvian sulcus (AES) (Fig. 3), but also come from the adjacent rostro-lateral suprasylvian sulcus (rLS)31,32,33,34,35,36,37. The existence of similar circuits in other species remains to be determined. When the AES is deactivated, its target neuron in the SC might retain its multisensory character, but the response to the cross-modal stimulus is no longer more effective than its response to its modality-specific component stimuli. This loss of physiological integration is coupled with a loss of the SC-mediated behavioural benefits that are associated with multisensory integration.

a | A view of the cat brain, showing the anterior ectosylvian sulcus (AES). The somatosensory (SIV, the fourth somatosensory area), visual (AEV, the anterior ectosylvian visual area) and auditory (FAES, the auditory field of the AES) regions of the AES are shown (see Ref. 106 for further details). b | Schematic of the visual–auditory convergence onto a superior colliculus (SC) neuron from ascending and descending sources. Descending influences derive from the visual and auditory regions of the AES. The hypothetical convergence pattern is predicted by a computational model of multisensory integration, in which only descending inputs target electrotonically coupled areas of the target neuron in the SC. All inputs also project to interneurons (In) that project to the multisensory output neuron. Cor, coronal sulcus; L, lateral sulcus; LS, lateral suprasylvian sulcus; PAG, periaqueductal grey; PES, posterior ectosylvian sulcus; rLS, rostral LS. Part b modified, with permission from Ref. 39 © (2007) Pion.

It seems reasonable to expect that every multisensory neuron would be capable of synthesizing its inputs from different sensory modalities regardless of their source. Surprisingly, this is not the case, at least not in the cat SC. The descending cortical neurons from the AES are unisensory and they converge on an SC neuron in a way that matches the sensory profile that it acquires from other sources. A visual–auditory SC neuron, for example, will receive visual inputs from the anterior ectosylvian visual area (AEV) and auditory inputs from the auditory field of the anterior ectosylvian region (FAES)38. These excitatory inputs are shown in Fig. 3b, along with some hypothetical inhibitory elements that are also predicted by a new computational model; the model helps to explain the relative contributions of ascending and descending inputs from different senses to the production of a multisensory response39 (see also ref. 40). Some of the model's predictions are based on sensory terminal patterns, membrane channels and receptor clusters that remain to be demonstrated in SC neurons. Gaining further information on these aspects of SC neuron physiology and how they develop during postnatal life (Box 3) might thus aid future research into the biophysical mechanisms that are engaged in this circuit during multisensory integration.

Multisensory integration in the cerebral cortex

In addition to the cerebral cortex of the cat, neurophysiological and functional-imaging studies have identified many 'multisensory' cortical regions in both humans and non-human primates (Fig. 4). Although few neurophysiological studies in non-human primates have demonstrated multisensory integration as it is operationally defined, there seems little doubt that many of these regions contain neurons that would be capable of integrating cross-modal cues. Here we consider studies of the cat AES cortex, followed by results from both non-human primates and humans that extend our discussion of multisensory integration beyond the simple orienting behaviour described above to more complex forms of multisensory coding.

a | Putatively multisensory regions of the monkey cortex. The coloured areas represent regions in which neurons that respond to multiple sensory modalities have been identified and include the lateral intraparietal area (LIP), the parietal reach region (PRR) in the medial intraparietal area (MIP), and the ventral intraparietal area (VIP), which is located at the fundus of the intraparietal sulcus, the ventrolateral prefrontal cortex (VLPFC) and the superior temporal sulcus (STS). Studies have shown that the visual, auditory and/or tactile receptive fields of single neurons in regions of the posterior parietal cortex can be encoded within a common reference frame. Single-neuron studies of the STS and the VLPFC have shown that multisensory neurons are sensitive to the semantic congruency of multisensory stimulus components. b | A rendering of the human brain showing putative multisensory regions, as defined by functional imaging criteria. Areas in which blood-oxygen-level-dependent (BOLD) activity related to visual, auditory and tactile stimuli was measured are shown. Red denotes brain regions in which the auditory, visual and tactile activations overlapped (trisensory or AVT regions); blue denotes regions in which the auditory and visual (audiovisual) activations overlapped; green denotes regions in which the visual and tactile (visuotactile) activations overlapped. The inset shows a horizontal section and identifies regions in which activations related to more complex multisensory stimuli (objects, communication and speech) have been measured. Part b modified, with permission, from Neuron Ref. 107 © (2008) Elsevier Sciences.

Along with its SC-projecting unisensory neurons, the cat AES contains multisensory neurons that do not project to the SC38; their circuitry remains to be determined. This counterintuitive observation (one would expect multisensory neurons in interconnected structures to interact) became even more surprising when it was found that these neurons integrate inputs from different senses in many of the same ways that SC neurons do41,42. Indeed, many of the properties of multisensory neurons in the AES and the SC are similar42. For example, spatial register of the modality-specific receptive fields of multisensory neurons is an essential feature of neurons in both structures. Spatially disparate stimuli are either not integrated or produce multisensory depression, although this effect might be less potent in the AES than in the SC. AES multisensory enhancement also requires temporal concordance and exhibits inverse effectiveness. Given their many parallels, it is tempting to conclude that multisensory neurons in the SC and the AES have similar functions. However, it is not known whether multisensory AES neurons target regions that are involved in orienting behaviours.

Unfortunately, few other cortical areas have been the subject of studies that assess the capacity of constituent neurons to integrate inputs from different senses. For example, few studies have considered the effect of spatial coincidence or disparity on the products of integration in other cortical areas. Nevertheless, determining the spatial relationship among a neuron's modality-specific receptive fields will be of paramount importance in understanding the neural computations that are performed within a given region. Rather than the AES (for which the primate homologue is unknown), in primates such studies have focused on the posterior parietal cortex (PPC), where sensory information from many different modalities (visual, vestibular, tactile and auditory) converges.

The brain's huge energy investment in aligning sensory maps during development and in keeping them aligned during overt behaviour is amply rewarded. Because different unisensory neurons contact the same motor apparatus, map alignment is critical for coherent behavioural output, even in the absence of multisensory integration. Moreover, sensory-map alignment also sets the stage for the spatial principle of multisensory integration and the behavioural benefits that it supports.

Keeping receptive fields aligned in the posterior parietal cortex. In primates, the PPC is composed of subregions that are involved in various aspects of spatial awareness and guidance of actions towards spatial goals. Prominent among these are the lateral intraparietal (LIP), medial intraparietal (MIP) and ventral intraparietal (VIP) areas (Fig. 4a). The PPC transforms sensory signals into a coordinate frame suitable for guiding either gaze or reach. For example, LIP neurons encode visual and auditory stimuli with respect to current eye position, a reference frame that is appropriate for computing the vector of a gaze-shift towards a visual, auditory or cross-modal goal43. This requires auditory receptive fields to be dynamic, as they must shift with each eye movement (Fig. 5). In the parietal reach region (PRR), a physiologically defined region that includes part or all of the MIP, visual or auditory targets are likewise encoded in a common eye-centred coordinate scheme44,45. However, the PRR is responsible for producing goal-directed limb movements, and an eye-centred representation does not directly specify the spatial relationship between an object of interest and the necessary limb movement to reach it. The implications of this more abstract representation are beyond the scope of this Review (but see Refs 46,47), but it should be noted that many areas of the brain have a mechanism for creating, and dynamically maintaining, a spatial correspondence between stimuli from different modalities. Although multisensory integration has not been explicitly examined in either the LIP or the MIP, such re-mapping into common coordinate frames would be a prerequisite if multisensory enhancement and depression were to adhere to the same spatial rules that are apparent in the SC and the AES.

A hypothetical auditory receptive field shown in eye-centred coordinates. a | The position of an auditory receptive field (dotted circle) when the eyes are directed ahead (left panel) and when the eyes are deviated 20° to the right (right panel). With the change in the angle of gaze, the receptive field shifts from 20° to the left of the head to 0° relative to the head. In doing so, it maintains a constant spatial relationship to the direction of gaze: in eye-centred coordinates the auditory receptive field is always 20° to the left of the gaze. b | Response magnitude as a function of the auditory stimulus position with respect to the head for each of the two gaze angles. The figure depicts a complete shift, one that compensates fully for changes in gaze angle. Examples of such neurons have been found in many regions, including the superior colliculus and the lateral intraparietal area. However, partial shifts are also common. Although this figure illustrates auditory information in eye-centred coordinates, the concept generalizes to all sensory modalities and reference frames. L, left; R, right.

Despite its simplifying appeal, there is evidence to suggest that reference-frame re-mapping is often incomplete. It is not uncommon to observe neurons that have receptive fields that shift only partially with changes in eye position and that code information in an intermediate reference frame48,49,50,51. The presence of such neurons seems to be the rule rather than the exception in cortical areas, and this might also be true in the SC20,21,22. It has been argued, on the basis of neural-network simulations, that these neurons could serve as essential elements in a network that enables efficient transformation from one coordinate frame (for example, eye-centred) to another (for example, head-centred)48,52. For multisensory integration, the primary implication of such incomplete receptive-field shifts would be that spatially congruent cross-modal stimuli would not necessarily fall simultaneously within the individual receptive fields of any given neuron. For such neurons, the spatial register of modality-specific receptive fields would vary with changes in eye position, as has been shown in the VIP49. Thus, the probability of observing multisensory enhancement should also vary, although this has yet to be tested empirically in any structure. To date, only one study of VIP neurons has explicitly examined multisensory integration, and it did so with the eyes and head aligned53. Interestingly, this study showed that spatially congruent visual–tactile stimuli were just as likely or more likely to evoke multisensory depression as enhancement, suggesting that there is a higher degree of complexity in the cortex than there is in the SC.
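The consequence of complete versus partial receptive-field shifts for spatial register can be sketched with a simple linear relation. This is an illustrative assumption rather than a model taken from the cited studies: the head-centred position of a receptive-field centre is treated as its eye-centred offset plus a `shift_gain` multiplied by eye position, and the visual receptive field is assumed to shift completely with the eyes. All names are hypothetical.

```python
def rf_centre_head(eye_centred_offset, eye_position, shift_gain=1.0):
    """Receptive-field centre in head-centred degrees (positive = right).

    shift_gain = 1.0 is a complete shift (eye-centred coding), 0.0 is no
    shift (head-centred coding); values in between are partial shifts.
    """
    return eye_centred_offset + shift_gain * eye_position


def register_error(eye_position, auditory_gain, visual_gain=1.0, offset=-20.0):
    """Head-centred misalignment (degrees) between an auditory and a visual
    receptive field that coincide when the eyes are directed straight ahead."""
    auditory = rf_centre_head(offset, eye_position, auditory_gain)
    visual = rf_centre_head(offset, eye_position, visual_gain)
    return auditory - visual


# Complete shift: a field 20 deg left of gaze moves to 0 deg relative to
# the head when the eyes deviate 20 deg to the right.
print(rf_centre_head(-20.0, 20.0, shift_gain=1.0))

# Partial shift (hypothetical gain of 0.5): the auditory field lags the
# visual field, so the two fields fall out of register.
print(register_error(eye_position=20.0, auditory_gain=0.5))
```

Under these assumptions, any gain below 1 produces a misalignment that grows with eye deviation, which is why the probability of multisensory enhancement would be expected to vary with eye position in such neurons.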

The importance of stimulus congruence. The complexity of cortical multisensory representations is further emphasized by considering the integration of non-spatial information. This is particularly germane to communication, as semantic congruence between sight and sound is more important than stimulus location54. Single-neuron studies have only recently begun to explore this aspect of multisensory integration. In one study55, the responses of visual–auditory neurons in the superior temporal sulcus (STS) were quantified (Fig. 4a). Using monkey vocalizations that were either congruent or incongruent with facial movements depicted in video clips of human faces, the authors identified neurons for which responses to the visual images were modulated by the concurrently presented sounds. The sample size was small and multisensory integration was as likely to produce depression as enhancement. However, when enhancement was obtained, it was greater for congruent than for incongruent pairings. More recently it was shown that a region of the ventrolateral prefrontal cortex (VLPFC) that receives input from the STS is dedicated to the multisensory integration of vocal communication signals56,57. This study also demonstrated more depression than enhancement in its sample of integrating neurons, and noted that multisensory integration was more commonly observed for face–vocalization pairs than for more generic visual–auditory pairings.

These single-neuron studies suggest a complexity of multisensory integration that is not observed in the SC, emphasizing that multisensory integration is not a unitary phenomenon. The principles of space and time, and their relationship to multisensory enhancement and depression, are more relevant to the SC, a structure that has evolved to detect and drive orientation to salient events. Because it is possible to orient to only a single location at a time, it seems functionally imperative that spatially congruent stimuli reinforce each other (leading to multisensory enhancement) and spatially disparate stimuli compete (leading to multisensory depression). Different computational goals in the cortex might dictate different integrative principles.

Although discrete receptive fields and physical limits to temporal integration dictate that multisensory interactions in virtually all regions will be constrained by the spatial and temporal proximity of the stimulus components, the specific products of integration will necessarily reflect the particular functions to which the regions contribute. With this in mind, we note that the conceptualizations of regional cortical multisensory functions are at an early stage. In many cases the computational endpoints of cortical neurons are unclear, making the task of interpreting a diversity of multisensory outcomes difficult. Thus, for example, the contributions of multisensory enhancement and depression to the representation of congruent communication signals remain to be determined. Undoubtedly, the relationships between such integrative products and the computational goals that they support will become clearer as more studies are conducted and as their findings contribute to the development of more complete conceptual frameworks. Such studies are likely to be aided by insights derived from functional-imaging studies in humans.

Studies in the human cerebral cortex. Most of what is known about multisensory processing in the human cortex comes from neuroimaging and evoked-potential studies6,58. Local field potential (LFP) studies have revealed multisensory integration (enhancement and depression) in specific regions of the auditory cortex. Consistent with the results of single-neuron studies in the primate STS55, and analogous to those in the primate VLPFC56,57, multisensory interactions in the human auditory cortex favoured the integration of stimulus pairs containing conspecific face and vocal clips59. These findings are generally consistent with earlier human neuroimaging studies suggesting that the STS is specialized for integrating auditory and visual speech signals60. In these studies, the blood-oxygen-level-dependent (BOLD) signal was enhanced for congruent pairings of audible speech and lip movement and depressed for incongruent pairings, suggesting that interactions in the STS underlie the well-documented effects of multisensory integration on improving (congruent) or degrading (incongruent) speech intelligibility60.

The STS is perhaps unique in that evidence from multiple studies supports a similar conclusion. However, whether or not multisensory integration also occurs for non-speech stimuli in the STS has been the subject of debate. It has been argued that the BOLD response for cross-modal stimuli must exceed the sum of the BOLD responses to the modality-specific components if multisensory integration is to be conclusively established61. This superadditivity requirement recognizes that lesser enhancements (such as simple summation) could reflect the independent contributions of neighbouring unisensory neurons and not true multisensory convergence. We also note here that there is evidence to suggest that the BOLD response only weakly reflects postsynaptic activity and that it is therefore unlikely to be directly analogous to results from single-neuron studies (see ref. 62 for a review).
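As a rough illustration of the superadditivity criterion described above, the following Python sketch compares a cross-modal response with the sum of its modality-specific components. The function name, the `tolerance` parameter and all response values are hypothetical and chosen only for illustration; an actual imaging analysis would rest on statistical tests of estimated response amplitudes rather than on a fixed tolerance.

```python
def classify_interaction(auditory, visual, cross_modal, tolerance=0.0):
    """Label a cross-modal response relative to the sum of its components.

    All arguments are response magnitudes in the same arbitrary units
    (for example, percent BOLD signal change). `tolerance` is a
    hypothetical stand-in for a proper statistical test.
    """
    component_sum = auditory + visual
    if cross_modal > component_sum + tolerance:
        return "superadditive"
    if cross_modal < component_sum - tolerance:
        return "subadditive"
    return "additive"


# Hypothetical values, not data from any study.
print(classify_interaction(auditory=0.5, visual=0.25, cross_modal=1.0))   # superadditive
print(classify_interaction(auditory=0.5, visual=0.25, cross_modal=0.75))  # additive
print(classify_interaction(auditory=0.5, visual=0.25, cross_modal=0.6))   # subadditive
```

In the last example the cross-modal response (0.6) exceeds the most effective component (0.5) yet falls short of the sum (0.75), so it would not satisfy the superadditivity requirement even though it counts as an enhancement relative to the best component.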

This stringent criterion eliminates false positives; however, it almost inevitably leads to misses63, which has led some researchers to promote the use of less restrictive statistical criteria64. This approach has led to the conclusion that the STS integrates information about a variety of common cross-modal objects65. However, although such studies reveal potential foci of multisensory interactions, they cannot be regarded as definitive and must be weighed against corroborating evidence. In an attempt to conclusively establish integration for non-speech stimuli in the STS, one study used cross-modal combinations composed of stimulus components that were near the threshold for detection66. The logic of this approach was based on the rule of inverse effectiveness, which dictates that combinations of the weakest stimuli produce the largest relative enhancements in the activity of multisensory neurons26 (thus, this is where superadditive interactions are most likely to occur3,12,27,28). Moreover, the use of weak cross-modal stimuli reduces the possibility that their combination saturates the BOLD response. This study demonstrated superadditivity in the STS for common auditory-visual objects, a result that argues in favour of a more general multisensory role for the region.
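The logic of inverse effectiveness can be made concrete with a small numerical sketch. The Python below assumes the enhancement index commonly used in single-neuron work, namely the percentage by which the cross-modal response exceeds the response to the most effective component; that index, the function names and every response value are assumptions introduced for illustration and are not taken from the studies cited here.

```python
def enhancement_percent(cross_modal, components):
    """Percent by which the cross-modal response exceeds the best component."""
    best = max(components)
    if best <= 0:
        raise ValueError("the most effective component response must be positive")
    return 100.0 * (cross_modal - best) / best


def is_superadditive(cross_modal, components):
    """True when the cross-modal response exceeds the sum of the components."""
    return cross_modal > sum(components)


# Hypothetical mean responses (impulses per trial): (visual, auditory, cross-modal)
examples = {
    "weak stimuli": (1.0, 2.0, 6.0),
    "strong stimuli": (8.0, 10.0, 14.0),
}

for label, (visual, auditory, cross_modal) in examples.items():
    gain = enhancement_percent(cross_modal, (visual, auditory))
    superadd = is_superadditive(cross_modal, (visual, auditory))
    print(f"{label}: enhancement = {gain:.0f}%, superadditive = {superadd}")
```

With these made-up numbers the weak pair yields a 200% enhancement that is also superadditive, whereas the strong pair yields a 40% enhancement that is subadditive, even though the absolute increase over the best component is the same (4 impulses) in both cases. This is the pattern that motivates testing near-threshold stimuli when superadditivity is the criterion.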

Is the concept of a unisensory cortex still useful?

Functional imaging studies have identified numerous putatively multisensory regions in all cortical lobes (Fig. 4b). This conflicts with the classical view of sensory organization, in which multisensory interactions arise from the late-stage convergence of segregated modality-specific cortical streams. Studies have demonstrated multisensory influences on activity within classically defined unisensory domains, including relatively low-order regions of sensory cortices (see ref. 58 for a review). One interpretation, for which there is ample evidence (see ref. 67 for a review), is that these influences represent feedback from higher-order cortical territories. This suggests that top-down influences could affect relatively early sensory encoding, which in turn could underlie a host of well-documented multisensory attentional effects4,6,68,69. A more provocative interpretation is that these influences are not due solely to feedback from higher cortical areas, but that instead they are carried by feedforward pathways that support multisensory integration at very early stages in the cortical processing hierarchy. This view received early support from an event-related potential (ERP) study70, which provided evidence of auditory–visual interactions in the visual cortex less than 50 ms after stimulus onset. Interactions that take place too early to be due to feedback have since been reported for other 'unisensory' cortices67,71. The functional significance of such early interactions between the senses is yet to be established; however, one intriguing hypothesis is that they have an important role in input binding67. Functional implications notwithstanding, evidence of early multisensory convergence raises fundamental questions about the sensory-specific organization of the cortex.

The observations that many areas that were previously classified as unisensory contain multisensory neurons are supported by anatomical studies showing connections between unisensory cortices72,73,74,75,76 and by the many imaging and ERP studies that reveal multisensory activity in these regions. These observations question whether there are any exclusive, modality-specific cortical regions and, thus, whether it is worth retaining designations that imply such exclusivity. Does this growing certainty that inputs from a second (or third) sensory modality access a unisensory cortex warrant a change in current cortical parcellation schemes? Such a change would radically alter concepts of how the cortex is organized and how research to understand the cortex's functional properties is conducted. The issue is complicated by a host of other questions. It is important to define the criterion incidence of modality convergence that warrants designating a region 'multisensory'. It is also important to understand the functional impact of the cross-modal input(s). Armed with this knowledge, it will be possible to consider the value of changing well-established modes of categorization.

Unfortunately, there is no generally accepted criterion incidence for designating a cortex 'multisensory'. Furthermore, there is considerable variability in estimates of the incidence of non-matching inputs (that is, inputs that don't match the predominant sensory representation) in cortical regions that have traditionally been defined as unisensory. The estimates of non-matching neurons in presumptive unisensory cortex vary from less than 8% to 50% or more72,77,78,79,80,81. In part, this is due to differences in the experimental techniques that are used and in the particular regions of the cortex that are being scrutinized. In one study, the comparative modality convergence patterns were examined in the rat78. The results revealed large expanses of visual, auditory and somatosensory cortices, with comparatively few neurons (less than 8%) that are responsive to other sensory stimuli. However, the borders between two sensory representations contained a mixture of unisensory neurons from both modalities and a far higher than average incidence of multisensory neurons. The existence of such multisensory transitional zones41,72,82 might affect interpretations of some (but not all58) of the results from studies using methods that provide lower spatial resolution.

Nevertheless, primary areas are generally thought to contain fewer non-matching neurons than surrounding cortices. Interestingly, the multisensory neurons noted within and bordering sensory areas discussed above were able to integrate their inputs from different senses in much the same way that SC and AES neurons do. The authors of this study78 concluded that the comparatively small number of non-matching inputs did not warrant eliminating the current designation of 'unisensory' cortex, but instead suggested adding a designation for the transitional zones between unisensory regions.

The sensory reports of patients undergoing direct cortical stimulation are also in keeping with the current designation of unisensory cortices. Electrical stimulation of primary and secondary sensory cortex produces comparatively simple, sensory-specific sensations83,84,85,86. The only non-matching sensations referred to are linked to inadvertent electrical stimulation of the scalp or meninges. This result is very different from the complex sensations, memories and hallucinations produced by stimulation of other cortical regions86, and is consistent with the seemingly sensory-specific perceptual effects of lesions in these areas.

Although it does not seem that a compelling case has yet been made for discarding traditional schemes of cortical organization, one question remains: what is the role of the non-matching sensory inputs that clearly do reach these unisensory regions and alter the responses of their constituent neurons? The question remains unresolved, but it might be that they somehow facilitate the unisensory functional role of each cortex, perhaps, as suggested for the auditory cortex87, by resetting its ongoing activity to render it more responsive to subsequent sensory-specific input.

Concluding remarks

It is apparent from the discussion above that the field of multisensory integration is at an intermediate stage of development. We began this Review with a very brief summary of work that explored the neurophysiological underpinnings of multisensory integration in the cat SC. Although our understanding of this process continues to be refined, the most basic mechanisms of multisensory integration, and their implications for orienting to a sensory target, have been known for some time. However, we have entered a new era in which there has been explosive growth in both the number and the variety of investigations into multisensory phenomena; in this Review we have chosen to highlight a few areas of exploration that are likely to help define the field going forward.

In particular, the issue of coordinate frameworks for representing sensory information, which has historically been discussed in the context of re-mapping sensory information for the purpose of generating motor output, is an inherently multisensory problem. How brain areas like those in the PPC maintain, or fail to maintain, register of modality-specific representations has profound implications for multisensory integration and the spatial rules to which it seems to adhere. Likewise, investigators have only begun to consider the issues relating to deciphering the neural representations of higher-order multisensory phenomena, such as speech perception and multisensory semantic congruency. Unlike the simple interplay between excitation and inhibition that is necessary to create topographic maps of stimulus location and movement metrics, the coding dimensions for these higher-order functions are undoubtedly much more complex. Understanding the functional roles of these brain regions that are capable of integrating information from different senses is a necessary prelude to understanding how the brain coordinates their contributions to perception and behaviour.

Understanding the principles that govern these more complex multisensory functions will require strong correlative evidence from human imaging studies and from single-neuron studies in animals. We have only alluded to the difficulty in relating the results obtained from these distinct methodologies; although the same terms are commonly used (such as multisensory enhancement or superadditivity), we should be aware of the fact that the mapping between the BOLD response and the activity of single neurons is an area of open inquiry.

Finally, it is not immediately apparent how the field will come to a consensus on what constitutes a multisensory (as opposed to a unisensory) region. The issue is far from settled and definitive criteria are not as readily apparent as they might seem. Determining how information from a non-matching sense modality alters the information processing in a classically defined unisensory domain will be crucial for understanding multisensory integration.

Although there are many outstanding issues, it is important to note that progress in the field has been impressive. A great deal has been learned about multisensory integration at the individual-neuron and neuronal-network levels, the principles that govern multisensory integration, its impact on behaviour and perception, and the maturational and experiential requirements for its acquisition during early life. The rapid growth of this young field, the excitement that it generates and the impressive armamentarium available to its practitioners provide confidence that the best is yet to come.

References

Stein, B. E. & Meredith, M. A. The Merging of the Senses (ed. Gazzaniga, M. S.) (The MIT Press, Cambridge, Massachusetts, 1993).

Meredith, M. A. & Stein, B. E. Interactions among converging sensory inputs in the superior colliculus. Science 221, 389–391 (1983). This seminal paper demonstrated multisensory integration in single superior colliculus neurons.

Stanford, T. R. & Stein, B. E. Superadditivity in multisensory integration: putting the computation in context. Neuroreport 18, 787–792 (2007). This study considers the relatively disproportionate effect of superadditivity in multisensory neurons. Superadditivity in multisensory integration is obtained only when the neuron's response to the component stimuli is weak.

Spence, C. & Driver, J. Crossmodal Space and Crossmodal Attention (Oxford Univ. Press, Oxford, 2004).

Gillmeister, H. & Eimer, M. Tactile enhancement of auditory detection and perceived loudness. Brain Res. 1160, 58–68 (2007).

Calvert, G. A., Spence, C. & Stein, B. E. The Handbook of Multisensory Processes (The MIT Press, Cambridge, Massachusetts, 2004).

Bell, A. H., Meredith, M. A., Van Opstal, A. J. & Munoz, D. P. Crossmodal integration in the primate superior colliculus underlying the preparation and initiation of saccadic eye movements. J. Neurophysiol. 93, 3659–3673 (2005).

Diederich, A. & Colonius, H. Bimodal and trimodal multisensory enhancement: effects of stimulus onset and intensity on reaction time. Percept. Psychophys. 66, 1388–1404 (2004).

Frens, M. A., Van Opstal, A. J. & Van der Willigen, R. F. Spatial and temporal factors determine auditory-visual interactions in human saccadic eye movements. Percept. Psychophys. 57, 802–816 (1995).

Hughes, H. C., Reuter-Lorenz, P. A., Nozawa, G. & Fendrich, R. Visual-auditory interactions in sensorimotor processing: saccades versus manual responses. J. Exp. Psychol. Hum. Percept. Perform. 20, 131–153 (1994).

Nozawa, G., Reuter-Lorenz, P. A. & Hughes, H. C. Parallel and serial processes in the human oculomotor system: bimodal integration and express saccades. Biol. Cybern. 72, 19–34 (1994). This is an early article establishing the relationship between reaction speed and multisensory integration.

Rowland, B., Quessy, S., Stanford, T. R. & Stein, B. E. Multisensory integration shortens physiological response latencies. J. Neurosci. 27, 5879–5884 (2007).

Burr, D. & Alais, D. Combining visual and auditory information. Prog. Brain Res. 155, 243–258 (2006).

Ernst, M. O. & Banks, M. S. Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415, 429–433 (2002).

Shams, L., Ma, W. J. & Beierholm, U. Sound-induced flash illusion as an optimal percept. Neuroreport 16, 1923–1927 (2005).

Kadunce, D. C., Vaughan, J. W., Wallace, M. T. & Stein, B. E. The influence of visual and auditory receptive field organization on multisensory integration in the superior colliculus. Exp. Brain Res. 139, 303–310 (2001).

Meredith, M. A. & Stein, B. E. Spatial determinants of multisensory integration in cat superior colliculus neurons. J. Neurophysiol. 75, 1843–1857 (1996).

Meredith, M. A. & Stein, B. E. Spatial factors determine the activity of multisensory neurons in cat superior colliculus. Brain Res. 365, 350–354 (1986).

Kadunce, D. C., Vaughan, J. W., Wallace, M. T., Benedek, G. & Stein, B. E. Mechanisms of within- and cross-modality suppression in the superior colliculus. J. Neurophysiol. 78, 2834–2847 (1997).

Hartline, P. H., Vimal, R. L., King, A. J., Kurylo, D. D. & Northmore, D. P. Effects of eye position on auditory localization and neural representation of space in superior colliculus of cats. Exp. Brain Res. 104, 402–408 (1995).

Jay, M. F. & Sparks, D. L. Auditory receptive fields in primate superior colliculus shift with changes in eye position. Nature 309, 345–347 (1984). This article is a seminal study of sensory coordinate frames; it is the first to systematically examine the eye-position-dependence of auditory receptive fields in the superior colliculus.

Peck, C. K., Baro, J. A. & Warder, S. M. Effects of eye position on saccadic eye movements and on the neuronal responses to auditory and visual stimuli in cat superior colliculus. Exp. Brain Res. 103, 227–242 (1995).

Groh, J. M. & Sparks, D. L. Saccades to somatosensory targets. III. Eye-position-dependent somatosensory activity in primate superior colliculus. J. Neurophysiol. 75, 439–453 (1996).

Meredith, M. A., Nemitz, J. W. & Stein, B. E. Determinants of multisensory integration in superior colliculus neurons. I. Temporal factors. J. Neurosci. 7, 3215–3229 (1987).

Recanzone, G. H. Auditory influences on visual temporal rate perception. J. Neurophysiol. 89, 1078–1093 (2003).

Meredith, M. A. & Stein, B. E. Visual, auditory, and somatosensory convergence on cells in superior colliculus results in multisensory integration. J. Neurophysiol. 56, 640–662 (1986).

Stanford, T. R., Quessy, S. & Stein, B. E. Evaluating the operations underlying multisensory integration in the cat superior colliculus. J. Neurosci. 25, 6499–6508 (2005).

Perrault, T. J. Jr, Vaughan, J. W., Stein, B. E. & Wallace, M. T. Superior colliculus neurons use distinct operational modes in the integration of multisensory stimuli. J. Neurophysiol. 93, 2575–2586 (2005).

Wallace, M. T., Wilkinson, L. K. & Stein, B. E. Representation and integration of multiple sensory inputs in primate superior colliculus. J. Neurophysiol. 76, 1246–1266 (1996).

Stein, B. E., Meredith, M. A., Huneycutt, W. S. & McDade, L. Behavioral indices of multisensory integration: orientation to visual cues is affected by auditory stimuli. J. Cogn. Neurosci. 1, 12–24 (1989).

Wilkinson, L. K., Meredith, M. A. & Stein, B. E. The role of anterior ectosylvian cortex in cross-modality orientation and approach behavior. Exp. Brain Res. 112, 1–10 (1996).

Jiang, W., Jiang, H. & Stein, B. E. Two corticotectal areas facilitate multisensory orientation behavior. J. Cogn. Neurosci. 14, 1240–1255 (2002).

Jiang, W., Jiang, H. & Stein, B. E. Neonatal cortical ablation disrupts multisensory development in superior colliculus. J. Neurophysiol. 95, 1380–1396 (2006).

Wallace, M. T. & Stein, B. E. Cross-modal synthesis in the midbrain depends on input from cortex. J. Neurophysiol. 71, 429–432 (1994).

Alvarado, J. C., Vaughan, J. W., Stanford, T. R. & Stein, B. E. Multisensory versus unisensory integration: contrasting modes in the superior colliculus. J. Neurophysiol. 97, 3193–3205 (2007).

Jiang, W., Wallace, M. T., Jiang, H., Vaughan, J. W. & Stein, B. E. Two cortical areas mediate multisensory integration in superior colliculus neurons. J. Neurophysiol. 85, 506–522 (2001). The critical nature of influences from two cortical areas on multisensory integration in superior colliculus neurons is demonstrated here.

Stein, B. E., Spencer, R. F. & Edwards, S. B. Corticotectal and corticothalamic efferent projections of SIV somatosensory cortex in cat. J. Neurophysiol. 50, 896–909 (1983).

Wallace, M. T., Meredith, M. A. & Stein, B. E. Converging influences from visual, auditory, and somatosensory cortices onto output neurons of the superior colliculus. J. Neurophysiol. 69, 1797–1809 (1993).

Rowland, B., Stanford, T. R. & Stein, B. E. A model of the neural mechanisms underlying multisensory integration in the superior colliculus. Perception 36, 1431–1443 (2007).

Anastasio, T. J. & Patton, P. E. A two-stage unsupervised learning algorithm reproduces multisensory enhancement in a neural network model of the corticotectal system. J. Neurosci. 23, 6713–6727 (2003).

Wallace, M. T., Meredith, M. A. & Stein, B. E. Integration of multiple sensory modalities in cat cortex. Exp. Brain Res. 91, 484–488 (1992).

Stein, B. E. & Wallace, M. T. Comparisons of cross-modality integration in midbrain and cortex. Prog. Brain Res. 112, 289–299 (1996).

Stricanne, B., Andersen, R. A. & Mazzoni, P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J. Neurophysiol. 76, 2071–2076 (1996). This was one of the first studies to demonstrate that the auditory receptive fields of LIP neurons can be coded in an eye-centred reference frame.

Batista, A. P., Buneo, C. A., Snyder, L. H. & Andersen, R. A. Reach plans in eye-centered coordinates. Science 285, 257–260 (1999).

Cohen, Y. E. & Andersen, R. A. Reaches to sounds encoded in an eye-centered reference frame. Neuron 27, 647–652 (2000).

Cohen, Y. E. & Andersen, R. A. A common reference frame for movement plans in the posterior parietal cortex. Nature Rev. Neurosci. 3, 553–562 (2002).

Pouget, A., Ducom, J. C., Torri, J. & Bavelier, D. Multisensory spatial representations in eye-centered coordinates for reaching. Cognition 83, 1–11 (2002).

Pouget, A., Deneve, S. & Duhamel, J. R. A computational perspective on the neural basis of multisensory spatial representations. Nature Rev. Neurosci. 3, 741–747 (2002). In this article, the issue of partial reference-frame shifts is considered in detail from a computational perspective.

Avillac, M., Deneve, S., Olivier, E., Pouget, A. & Duhamel, J. R. Reference frames for representing visual and tactile locations in parietal cortex. Nature Neurosci. 8, 941–949 (2005).

Mullette-Gillman, O. A., Cohen, Y. E. & Groh, J. M. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J. Neurophysiol. 94, 2331–2352 (2005).

Schlack, A., Sterbing-D'Angelo, S. J., Hartung, K., Hoffmann, K.-P. & Bremmer, F. Multisensory space representations in the macaque ventral intraparietal area. J. Neurosci. 25, 4616–4625 (2005).

Snyder, L. H. Frame-up. Focus on “eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus”. J. Neurophysiol. 94, 2259–2260 (2005).

Avillac, M., Ben Hamed, S. & Duhamel, J. R. Multisensory integration in the ventral intraparietal area of the macaque monkey. J. Neurosci. 27, 1922–1932 (2007).

Laurienti, P. J. et al. Cross-modal sensory processing in the anterior cingulate and medial prefrontal cortices. Hum. Brain Mapp. 19, 213–223 (2003).

Barraclough, N. E., Xiao, D., Baker, C. I., Oram, M. W. & Perrett, D. I. Integration of visual and auditory information by superior temporal sulcus neurons responsive to the sight of actions. J. Cogn. Neurosci. 17, 377–391 (2005).

Sugihara, T., Diltz, M. D., Averbeck, B. B. & Romanski, L. M. Integration of auditory and visual communication information in the primate ventrolateral prefrontal cortex. J. Neurosci. 26, 11138–11147 (2006). This paper is one of the first to examine multisensory integration in single neurons of the prefrontal cortex. It suggests a role for this region in the integration of the visual and auditory stimuli that constitute coherent communication signals.

Romanski, L. M. Representation and integration of auditory and visual stimuli in the primate ventral lateral prefrontal cortex. Cereb. Cortex 17, i61–i69 (2007).

Ghazanfar, A. A. & Schroeder, C. E. Is neocortex essentially multisensory? Trends Cogn. Sci. 10, 278–285 (2006).

Ghazanfar, A. A., Maier, J. X., Hoffman, K. L. & Logothetis, N. K. Multisensory integration of dynamic faces and voices in rhesus monkey auditory cortex. J. Neurosci. 25, 5004–5012 (2005).

Calvert, G. A., Campbell, R. & Brammer, M. J. Evidence from functional magnetic resonance imaging of crossmodal binding in the human heteromodal cortex. Curr. Biol. 10, 649–657 (2000).

Calvert, G. A., Hansen, P. C., Iversen, S. D. & Brammer, M. J. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage 14, 427–438 (2001). A rationale is provided here for using superadditivity of the BOLD signal as a definitive criterion for detecting multisensory integration in functional-imaging studies.

Logothetis, N. K. & Pfeuffer, J. On the nature of the BOLD fMRI contrast mechanism. Magn. Reson. Imaging 22, 1517–1531 (2004).

Laurienti, P. J., Perrault, T. J., Stanford, T. R., Wallace, M. T. & Stein, B. E. On the use of superadditivity as a metric for characterizing multisensory integration in functional neuroimaging studies. Exp. Brain Res. 166, 289–297 (2005).

Beauchamp, M. S. Statistical criteria in FMRI studies of multisensory integration. Neuroinformatics 3, 93–113 (2005).

Beauchamp, M. S., Lee, K. E., Argall, B. D. & Martin, A. Integration of auditory and visual information about objects in superior temporal sulcus. Neuron 41, 809–823 (2004).

Stevenson, R. A., Geoghegan, M. L. & James, T. W. Superadditive BOLD activation in superior temporal sulcus with threshold non-speech objects. Exp. Brain Res. 179, 85–95 (2007). Using the stringent superadditivity criterion for BOLD activation, this study is the first to demonstrate that the superior temporal sulcus integrates information about multisensory objects.

Foxe, J. J. & Schroeder, C. E. The case for feedforward multisensory convergence during early cortical processing. Neuroreport 16, 419–423 (2005). The issue dealt with here is how multisensory convergence at the level of the sensory cortex can be accomplished as a consequence of feedforward projections in the CNS.

Busse, L., Roberts, K. C., Crist, R. E., Weissman, D. H. & Woldorff, M. G. The spread of attention across modalities and space in a multisensory object. Proc. Natl Acad. Sci. USA 102, 18751–18756 (2005).

Talsma, D., Doty, T. J. & Woldorff, M. G. Selective attention and audiovisual integration: is attending to both modalities a prerequisite for early integration? Cereb. Cortex 17, 679–690 (2007).

Giard, M. H. & Peronnet, F. Auditory-visual integration during multimodal object recognition in humans: a behavioral and electrophysiological study. J. Cogn. Neurosci. 11, 473–490 (1999). This early event-related-potential study in human subjects demonstrated that multisensory integration can take place very early in the processing of auditory and visual information.

Macaluso, E. & Driver, J. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 28, 264–271 (2005).

Bizley, J. K., Nodal, F. R., Bajo, V. M., Nelken, I. & King, A. J. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cereb. Cortex 17, 2172–2189 (2007).

Cappe, C. & Barone, P. Heteromodal connections supporting multisensory integration at low levels of cortical processing in the monkey. Eur. J. Neurosci. 22, 2886–2902 (2005).

Rockland, K. S. & Ojima, H. Multisensory convergence in calcarine visual areas in macaque monkey. Int. J. Psychophysiol. 50, 19–26 (2003).

Falchier, A., Clavagnier, S., Barone, P. & Kennedy, H. Anatomical evidence of multimodal integration in primate striate cortex. J. Neurosci. 22, 5749–5759 (2002). This paper provided one of the first anatomical demonstrations of non-visual inputs to the primary visual cortex.

Meredith, M. A., Keniston, L. R., Dehner, L. R. & Clemo, H. R. Crossmodal projections from somatosensory area SIV to the auditory field of the anterior ectosylvian sulcus (FAES) in cat: further evidence for subthreshold forms of multisensory processing. Exp. Brain Res. 172, 472–484 (2006).

Allman, B. L. & Meredith, M. A. Multisensory processing in “unimodal” neurons: cross-modal subthreshold auditory effects in cat extrastriate visual cortex. J. Neurophysiol. 98, 545–549 (2007).

Wallace, M. T., Ramachandran, R. & Stein, B. E. A revised view of sensory cortical parcellation. Proc. Natl Acad. Sci. USA 101, 2167–2172 (2004).

Brosch, M., Selezneva, E. & Scheich, H. Nonauditory events of a behavioral procedure activate auditory cortex of highly trained monkeys. J. Neurosci. 25, 6797–6806 (2005).

Fishman, M. C. & Michael, P. Integration of auditory information in the cat's visual cortex. Vision Res. 13, 1415–1419 (1973).

Morrell, F. Visual system's view of acoustic space. Nature 238, 44–46 (1972).

Schroeder, C. E. et al. Somatosensory input to auditory association cortex in the macaque monkey. J. Neurophysiol. 85, 1322–1327 (2001).

Penfield, W. & Rasmussen, T. The Cerebral Cortex of Man: A Clinical Study of Localization of Function (Macmillan, New York, 1950).

Brindley, G. S. & Lewin, W. S. The sensations produced by electrical stimulation of the visual cortex. J. Physiol. 196, 479–493 (1968).

Dobelle, W. H., Stensaas, S. S., Mladejovsky, M. G. & Smith, J. B. A prosthesis for the deaf based on cortical stimulation. Ann. Otol. Rhinol. Laryngol. 82, 445–463 (1973).

Penfield, W. Some mechanisms of consciousness discovered during electrical stimulation of the brain. Proc. Natl Acad. Sci. USA 44, 51–66 (1958).

Lakatos, P., Chen, C. M., O'Connell, M. N., Mills, A. & Schroeder, C. E. Neuronal oscillations and multisensory interaction in primary auditory cortex. Neuron 53, 279–292 (2007).

McGurk, H. & MacDonald, J. Hearing lips and seeing voices. Nature 264, 746–748 (1976).

Sumby, W. H. & Pollack, I. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215 (1954).

Sams, M. et al. Seeing speech: visual information from lip movements modifies activity in the human auditory cortex. Neurosci. Lett. 127, 141–145 (1991).

Howard, I. P. & Templeton, W. B. Human Spatial Orientation (Wiley, London, 1966).

Jousmaki, V. & Hari, R. Parchment-skin illusion: sound-biased touch. Curr. Biol. 8, R190 (1998).

Shams, L., Kamitani, Y. & Shimojo, S. Visual illusion induced by sound. Brain Res. Cogn. Brain Res. 14, 147–152 (2002).

Graybiel, A. Oculogravic illusion. AMA Arch. Ophthalmol. 48, 605–615 (1952).

Wallace, M. T., Carriere, B. N., Perrault, T. J. Jr, Vaughan, J. W. & Stein, B. E. The development of cortical multisensory integration. J. Neurosci. 26, 11844–11849 (2006).

Wallace, M. T. & Stein, B. E. Development of multisensory neurons and multisensory integration in cat superior colliculus. J. Neurosci. 17, 2429–2444 (1997).

Stein, B. E., Labos, E. & Kruger, L. Sequence of changes in properties of neurons of superior colliculus of the kitten during maturation. J. Neurophysiol. 36, 667–679 (1973).

Wallace, M. T. & Stein, B. E. Sensory and multisensory responses in the newborn monkey superior colliculus. J. Neurosci. 21, 8886–8894 (2001).

Stein, B. E., Wallace, M. W., Stanford, T. R. & Jiang, W. Cortex governs multisensory integration in the midbrain. Neuroscientist 8, 306–314 (2002).

Wallace, M. T. & Stein, B. E. Onset of cross-modal synthesis in the neonatal superior colliculus is gated by the development of cortical influences. J. Neurophysiol. 83, 3578–3582 (2000).

Wallace, M. T., Perrault, T. J. Jr, Hairston, W. D. & Stein, B. E. Visual experience is necessary for the development of multisensory integration. J. Neurosci. 24, 9580–9584 (2004).

Wallace, M. T. & Stein, B. E. Early experience determines how the senses will interact. J. Neurophysiol. 97, 921–926 (2007). This was the first paper to show that the gradual postnatal development of multisensory integration in superior colliculus neurons allows early experience to form its operational principles.

Rowland, B., Jiang, W. & Stein, B. E. Long-term plasticity in multisensory integration. Soc. Neurosci. Abstr. 31, 505.8 (2005).

Neil, P. A., Chee-Ruiter, C., Scheier, C., Lewkowicz, D. J. & Shimojo, S. Development of multisensory spatial integration and perception in humans. Dev. Sci. 9, 454–464 (2006).

Putzar, L., Goerendt, I., Lange, K., Rosler, F. & Roder, B. Early visual deprivation impairs multisensory interactions in humans. Nature Neurosci. 10, 1243–1245 (2007).

Stein, B. E. The development of a dialogue between cortex and midbrain to integrate multisensory information. Exp. Brain Res. 166, 305–315 (2005).

Driver, J. & Noesselt, T. Multisensory interplay reveals crossmodal influences on 'sensory-specific' brain regions, neural responses, and judgments. Neuron 57, 11–23 (2008).

Acknowledgements

The authors' research is supported in part by NIH grants NS36916, EY016716 and EY12389.

Glossary

- Multisensory integration

-

The neural processes that are involved in synthesizing information from cross-modal stimuli. It should not be confused with the particular underlying neural computation that determines multisensory integration's relative magnitude (superadditive, additive or subadditive).

- Cross-modal stimuli

-

Stimuli from two or more sensory modalities or an event providing such stimuli. This term should not be confused with the term 'multisensory'.

- Multisensory enhancement

-

A situation in which the response to the cross-modal stimulus is greater than the response to the most effective of its component stimuli.

- Multisensory depression

-

A situation in which the response to the cross-modal stimulus is less than the response to the most effective of its component stimuli.

- Qualia

-

The qualities of sensation such as the subjective impression that a sensation gives.

- Multisensory neuron

-

A neuron that responds to, or is influenced by, stimuli from more than one sensory modality.

- Receptive field

-

The area of sensory space in which presentation of a stimulus leads to the response of a particular neuron.

- Inverse effectiveness

-

The phenomenon whereby the degree to which a multisensory response exceeds the response to the most effective modality-specific stimulus component declines as the effectiveness of the modality-specific stimulus components increases.

- Additivity

-

A neural computation in which the multisensory response is not different from the arithmetic sum of the responses to the component stimuli.

- Subadditivity

-

A neural computation in which the multisensory response is smaller than the arithmetic sum of the responses to the component stimuli.

- Evoked-potential studies

-

Electrophysiological studies in which the electrical activity (that is, the electrical potential) of the brain in response to a stimulus is measured using scalp-surface electrodes.

- Blood-oxygen-level-dependent (BOLD) signal

-

An index of brain activation based on detecting changes in blood oxygenation with functional MRI.

- Superadditivity

-

A neural computation in which the multisensory response is larger than the arithmetic sum of the responses to the component stimuli.

- Summation

-

A neural computation in which the response to a multisensory stimulus (for example, a number of action potentials) equals the sum of the responses to each of the modality-specific component stimuli presented individually.
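The glossary definitions above can be expressed as simple comparisons. The following is a minimal sketch, not a method taken from this article: the function names, the example response values and the `tolerance` parameter are hypothetical, and the percentage index is the form commonly used in the field (the cross-modal response relative to the best component response). In practice, 'not different from' the sum is decided by a statistical test across trials; the tolerance here is only a stand-in for that test.

```python
def enhancement_index(multisensory, unisensory):
    """Percent change of the cross-modal response relative to the
    response to the most effective component stimulus."""
    best = max(unisensory)
    if best <= 0:
        raise ValueError("most effective component response must be positive")
    return 100.0 * (multisensory - best) / best


def classify_integration(multisensory, unisensory):
    """Enhancement or depression, judged against the best component response."""
    best = max(unisensory)
    if multisensory > best:
        return "enhancement"
    if multisensory < best:
        return "depression"
    return "no change"


def classify_computation(multisensory, unisensory, tolerance=0.0):
    """Superadditive, additive or subadditive, judged against the
    arithmetic sum of the component responses.

    `tolerance` is a hypothetical stand-in for a statistical criterion."""
    total = sum(unisensory)
    if multisensory > total + tolerance:
        return "superadditive"
    if multisensory < total - tolerance:
        return "subadditive"
    return "additive"


# Hypothetical mean responses (impulses per trial): (visual, auditory), combined.
examples = {
    "weakly effective stimuli": ((2, 3), 9),
    "strongly effective stimuli": ((10, 12), 15),
}

for label, (components, combined) in examples.items():
    print(
        label,
        enhancement_index(combined, components),
        classify_integration(combined, components),
        classify_computation(combined, components),
    )
```

With these made-up values, the weakly effective pair yields a 200% enhancement that is superadditive, whereas the strongly effective pair yields a 25% enhancement that is subadditive. Both are multisensory enhancement, and the smaller proportional gain for the stronger stimuli is the pattern that inverse effectiveness describes.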

Cite this article

Stein, B., Stanford, T. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci 9, 255–266 (2008). https://doi.org/10.1038/nrn2331

DOI: https://doi.org/10.1038/nrn2331
