Abstract

Brain–computer interfaces (BCIs) enable control of assistive devices in individuals with severe motor impairments. A limitation of BCIs that has hindered real-world adoption is poor long-term reliability and lengthy daily recalibration times. To develop methods that allow stable performance without recalibration, we used a 128-channel chronic electrocorticography (ECoG) implant in a paralyzed individual, which allowed stable monitoring of signals. We show that long-term closed-loop decoder adaptation, in which decoder weights are carried across sessions over multiple days, results in consolidation of a neural map and ‘plug-and-play’ control. In contrast, daily reinitialization led to degradation of performance with variable relearning. Consolidation also allowed the addition of control features over days, that is, long-term stacking of dimensions. Our results offer an approach for reliable, stable BCI control by leveraging the stability of ECoG interfaces and neural plasticity.


Main

BCIs offer the promise of long-term, reliable and intuitive control of complex assistive devices in people with severe motor disabilities1,2,3,4,5,6,7,8,9,10,11,12,13,14. This goal might be best achieved by allowing BCI control to resemble our natural ability to learn and consolidate motor skills15,16,17,18. Despite impressive demonstrations of neuroprosthetic control in paralyzed individuals using invasive intracortical recordings3,4,6,7,9,12,13,14,19, two important factors have been largely overlooked in clinical translational studies: the long-term reliability of control and the overall setup time, such as the time needed to calibrate the decoder that converts neural activity into control signals. Current intracortical studies must recalibrate the mapping from neural parameters to control dimensions on a daily basis because of recording instability; although few studies have explicitly monitored calibration times, recalibration can require >30 min7,13,20. The requirement for daily decoder recalibration likely increases the variability of BCI performance across days and impedes both recruitment of neural plasticity and consolidation of control strategies15,16,17,21. Past studies of assistive technology have repeatedly shown that both setup time and performance stability are strong predictors of real-world use20,22,23,24,25,26. Thus, methods that allow long-term stable performance without recalibration (that is, ‘plug-and-play’ (PnP)) will enable broader use of BCIs.

An alternative to spike-based recordings is ECoG, which is known to be more stable over time27,28 and can enable BCI control6,8,13,27,29,30,31,32,33. Preclinical studies in animals have also indicated that ECoG signals can be recorded reliably over long periods of time and can allow stable decoding27,28. However, it remains unclear whether ECoG signals can be used in paralyzed individuals to allow consolidation of neural control and achieve PnP. Here, we used a 128-channel chronic ECoG implant, a device cleared for multi-year implantation, to test approaches for long-term stable control that can also optimally engage mechanisms of consolidation. Using the ECoG implant in a single participant with severe tetraparesis, we tracked at high resolution how the neural representations underlying BCI control changed over time. In particular, we compared decoders seeded each day using imagined control with decoders that retained weights. Within-session improvements have been observed using closed-loop decoder adaptation (CLDA)3,34,35, which modifies decoder properties in a stepwise manner as the user learns control. However, owing to the instability of intracortical spike signals, these studies required daily CLDA for decoder recalibration. It remains unclear how to conduct long-term CLDA (ltCLDA), with adaptation occurring over a period of days and weeks. A particular area of uncertainty is the learning rate, defined by a half-life value, which determines how decoders are updated; longer half-lives imply that a longer history of data is used to update decoder weights. We found that ltCLDA, in which weights were carried across days with a long half-life, allowed reliable PnP. A benefit of consolidation was long-term stacking, which is the ability to add control dimensions over days to existing control. Our results demonstrate that ltCLDA leverages the stability of ECoG to allow increasingly complex control strategies based on consolidation of control maps.

Results

ltCLDA and BCI performance

We conducted experiments over a period of ~6 months to assess two approaches for decoder adaptation: daily initialization and ltCLDA (Fig. 1, feature extraction shown in Fig. 1a; see Methods for ECoG implant, Kalman-filter decoder and adaptation methods). Experiments began ~2 months after implantation. Before these experiments, the participant had a recovery period of several weeks, followed by general exposure to cursor control. We first started with daily initialization, in which decoder weights were initialized each day by the participant imagining movements (imagined neck and right wrist) during passive cursor movements (Fig. 1); this approach is commonly used with spike-based recordings. The Fitts’ information transfer rate (ITR), which combines metrics of speed, accuracy and task parameters such as target size, remained constant over this time (Fig. 1b–d shows target sizes and speed/accuracy). On some days during this period, the participant could not even complete the fixed block because of poor control. We also quantified decoder adaptation by computing the change in angle between the decoder subspaces each day (90° indicates orthogonality and 0° indicates no change). Of note, the weights appeared to reset toward orthogonality at the start of each daily session (Fig. 1e,f).
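The Fitts’ ITR mentioned above can be illustrated with a minimal sketch. It assumes the Shannon formulation of the index of difficulty, ID = log2(D/W + 1) for target distance D and target width W, divided by the mean time to target and scaled by the fraction of successful trials. This is one common convention and not necessarily the exact definition used in the Methods; the function names are hypothetical.

```python
import math
from statistics import mean


def index_of_difficulty(distance: float, width: float) -> float:
    # Shannon formulation of Fitts' index of difficulty, in bits.
    return math.log2(distance / width + 1.0)


def fitts_itr(distance, width, times_to_target, n_success, n_trials):
    # Assumed definition (hypothetical): ID divided by the mean time to
    # target on successful trials, scaled by the success rate (bits/s).
    if n_trials == 0 or not times_to_target:
        return 0.0
    accuracy = n_success / n_trials
    return accuracy * index_of_difficulty(distance, width) / mean(times_to_target)


# A 2-bit target (D = 3, W = 1) reached in 2 s on every trial gives 1 bit/s.
print(fitts_itr(3.0, 1.0, [2.0, 2.0], n_success=8, n_trials=8))
```

Scaling by accuracy is what lets a single number reflect both speed and reliability: halving the success rate halves the ITR even if successful trials are fast.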

a, Cursor-control pipeline. Illustration of the ECoG electrodes (colored by anatomical region) overlaid on the participant’s brain MRI. ECoG signals were continuously streamed, filtered and binned into neural features that were input to a velocity Kalman-filter (KF) decoder. Decoder weights were learned through a closed-loop decoder adaptation process (CLDA). S1, primary somatosensory cortex; M1, primary motor cortex; PMC, premotor cortex; PMV, ventral premotor cortex; and SMA, supplementary motor area. b, Relative size of targets in the center-out task. c, BCI performance measured during ‘fixed’ control, that is, with decoder parameters held constant. Speed and accuracy changes during periods of daily initialization and ltCLDA. For days without values, the participant had such poor control that he could not complete the fixed block. d, BCI performance over time as measured by changes in Fitts’ ITR. e, Changes in the angle between x-velocity (Vx) and y-velocity (Vy) decoder weights over time. The angle between the decoder at each trial and the decoder at the final trial of each day is marked by the vertical line. f, Angle between the decoder at each trial and the final decoder at the end of ltCLDA, that is, day +36. g, R2 values were computed by fitting a decoder to each respective imagined movement session (dashed lines) or by fitting a decoder using all previous imagined movement sessions (solid lines). Thus, for a value of 7, all the previous seven sessions were used. h, Left: block diagram illustrating the two-learner system used to simulate ltCLDA. Right: ‘time to target’ for fixed control after simulated CLDA using different λ half-life values (Supplementary Note). The box plots show the distribution of time to targets across 50 simulated fixed-block trials for each value of λ; the red vertical line depicts the median time to target. The edges of the box plots correspond to the 25th and 75th percentiles of the data and the whiskers span the full range of the data.
CNS, central nervous system; Vint, intended velocity.

We hypothesized that the reason for this phenomenon might be variation in the neural strategy during daily imagined movement data collection with daily initialization. Consistent with this, we found that a decoder trained using a single imagined session tracked the cursor well (Fig. 1g); the R2 values in Fig. 1g were generated using ‘leave-out’ multivariate regression between all features and the displayed Vx and Vy cursor velocities (Methods). However, when we pooled data from multiple days in a systematic manner, the R2 value was progressively lower with the addition of each day (Fig. 1g). We interpreted this to mean that the relationship between neural features and the cursor velocity during imagined sessions was not stable across days; thus, a regression model that pooled days performed worse than a single session.
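The pooling effect described above can be reproduced on synthetic data. The sketch below is a minimal illustration, assuming ordinary least squares with an intercept and a single random hold-out split as the ‘leave-out’ procedure (the Methods may use a different cross-validation scheme); the helper name and the simulated sessions are hypothetical. Each simulated day uses a different random mapping from features to velocity, mimicking a drifting imagined-movement strategy.

```python
import numpy as np


def leave_out_r2(X, Y, train_frac=0.8, rng=None):
    """Fit OLS (with intercept) on a random split; return R^2 on held-out rows."""
    rng = np.random.default_rng(0) if rng is None else rng
    n = X.shape[0]
    idx = rng.permutation(n)
    n_train = int(train_frac * n)
    tr, te = idx[:n_train], idx[n_train:]
    Xa = np.column_stack([X, np.ones(n)])  # append an intercept column
    W, *_ = np.linalg.lstsq(Xa[tr], Y[tr], rcond=None)
    resid = Y[te] - Xa[te] @ W
    ss_res = np.sum(resid ** 2)
    ss_tot = np.sum((Y[te] - Y[te].mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: 5 "days", each with its own feature-to-velocity mapping.
rng = np.random.default_rng(1)
n_feat, n_per_session = 10, 400
sessions = []
for _ in range(5):
    W_day = rng.normal(size=(n_feat, 2))  # a different strategy each day
    X_day = rng.normal(size=(n_per_session, n_feat))
    Y_day = X_day @ W_day + 0.1 * rng.normal(size=(n_per_session, 2))
    sessions.append((X_day, Y_day))

r2_single = leave_out_r2(*sessions[0])
X_pool = np.vstack([s[0] for s in sessions])
Y_pool = np.vstack([s[1] for s in sessions])
r2_pooled = leave_out_r2(X_pool, Y_pool)
print(f"single session R2 = {r2_single:.3f}, pooled R2 = {r2_pooled:.3f}")
```

A single linear map can fit one day well but can only approximate the average of several inconsistent mappings, which is why the pooled R2 drops.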

We then wondered whether ltCLDA, in which the weights from the previous session were used for the current session, might allow stabilization of decoder weights and improve performance by enforcing a single neural strategy (Fig. 1d–f). Even when sessions were separated by multiple days, we noticed that there was now ‘convergence’ of decoder weights, that is, the angles between the decoder weight subspaces converged toward zero (Fig. 1f and Supplementary Fig. 1; Supplementary Fig. 2a,b shows a ‘vector-based’ metric and comparison of changes in the Kalman gain). To quantify decoder convergence, we examined ‘within-day decoder differences’, that is, the angle of the decoder at the beginning of each day in Fig. 1e. We compared this measure during the first five versus the last five experimental days and found a significant decrease in within-day decoder differences (unpaired t-tests, Vx: effect size = 60.9°, P = 5.959 × 10−6; Vy: effect size = 51.7°, P = 1.335 × 10−4). We also noted a concurrent improvement in BCI performance (Fig. 1c,d); there was a significant improvement in the Fitts’ ITR during the last 5 d compared to performance during daily initialization (unpaired t-test: effect size = 0.165 bits/s, P = 4.012 × 10−9; Fig. 1d; Supplementary Movie 1 and Supplementary Fig. 3 show trajectories). We also did not find evidence for overt movement strategies during the participant’s control (see Methods for video-based quantification). Our participant did continue to report using the ‘imagined’ movements (dominant hand and neck movements) to control the cursor.
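The decoder angle used throughout can be sketched as a principal angle between subspaces. The example below is a minimal illustration, assuming that each decoder (for example, the Vx weights across all channels and features) is treated as a column vector and that the reported value is the largest principal angle between the two column spaces; the helper name is hypothetical and the Methods may define the angle differently. Because it compares subspaces, a sign flip of the weights gives 0°, and the maximum possible value is 90° (orthogonality), matching the scale described for Fig. 1e,f.

```python
import numpy as np


def subspace_angle_deg(A, B):
    """Largest principal angle (degrees) between the column spaces of A and B.

    A, B: arrays of shape (n_weights,) or (n_weights, k); a 1-D weight
    vector is treated as a single column.
    """
    A = np.asarray(A, dtype=float).reshape(len(A), -1)
    B = np.asarray(B, dtype=float).reshape(len(B), -1)
    Qa, _ = np.linalg.qr(A)  # orthonormal basis for span(A)
    Qb, _ = np.linalg.qr(B)  # orthonormal basis for span(B)
    # Singular values of Qa^T Qb are the cosines of the principal angles.
    cosines = np.clip(np.linalg.svd(Qa.T @ Qb, compute_uv=False), 0.0, 1.0)
    return float(np.degrees(np.arccos(cosines.min())))


w_day_start = np.array([1.0, 0.0])
w_day_end = np.array([1.0, 1.0])
print(subspace_angle_deg(w_day_start, w_day_end))  # about 45 degrees
```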

To compare decoder convergence during daily initialization and ltCLDA, we contrasted the convergence trajectories (decoder angles relative to final trial) during the first 5 d of both daily initialization and ltCLDA. We found a significant difference in the convergence trajectories between the two for both the x-velocity (Vx differences: two-sample Kolmogorov–Smirnov (KS) test, P = 9.97 × 10−84) and y-velocity decoder coefficients (Vy differences: two-sample KS test, P = 2.02 × 10−124), highlighting significant differences in convergence over time. Moreover, using a linear model to quantify the rate of convergence (daily Vx slope: −0.043 (−0.047, −0.038); daily Vy slope: −0.020 (−0.026, −0.014); ltCLDA Vx slope: −0.109, (−0.116, −0.102); ltCLDA Vy slope: −0.097, (−0.101, −0.094); units: degrees per trial), we found significantly faster convergence rates during ltCLDA when compared to daily initialization (slope difference between ltCLDA and daily: Vx: 0.066 deg/trial, Vy: 0.077 deg/trial, P < 0.0001, permutation test). Together, these results indicate that there was a sustained convergence of weights to a stable decoder with ltCLDA.
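The slope comparison above can be sketched as follows. This is a minimal illustration, assuming that convergence rate is the least-squares slope of decoder angle versus trial number and that the permutation test reshuffles condition labels across the pooled (trial, angle) pairs; the function names and the synthetic angles are hypothetical and are not the study data.

```python
import numpy as np


def slope(x, y):
    # Least-squares slope of y on x (np.polyfit returns highest degree first).
    return np.polyfit(x, y, 1)[0]


def slope_difference_permutation(x1, y1, x2, y2, n_perm=1000, seed=0):
    """Two-sided permutation test for a difference in linear slopes."""
    rng = np.random.default_rng(seed)
    observed = slope(x1, y1) - slope(x2, y2)
    x = np.concatenate([x1, x2])
    y = np.concatenate([y1, y2])
    n1 = len(x1)
    count = 0
    for _ in range(n_perm):
        idx = rng.permutation(len(x))  # shuffle condition labels
        a, b = idx[:n1], idx[n1:]
        if abs(slope(x[a], y[a]) - slope(x[b], y[b])) >= abs(observed):
            count += 1
    p_value = (count + 1) / (n_perm + 1)
    return observed, p_value


# Hypothetical angles (deg) converging faster under one condition.
rng = np.random.default_rng(2)
trials = np.arange(200, dtype=float)
angles_lt = 60 - 0.10 * trials + rng.normal(scale=1.0, size=200)
angles_daily = 60 - 0.02 * trials + rng.normal(scale=1.0, size=200)
diff, p = slope_difference_permutation(trials, angles_lt, trials, angles_daily, n_perm=999)
print(f"slope difference = {diff:.3f} deg/trial, permutation P = {p:.4f}")
```

With n_perm permutations, the smallest attainable P value is 1/(n_perm + 1), which is why results of this kind are reported as bounds such as P < 0.0001 or P < 0.001.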

Our results suggest that ltCLDA is helpful for long-term BCI learning; moreover, they suggest that long time constants for decoder updates (such as 500–5,000 s) are important, that is, λ half-life values that determine how past data is exponentially weighted (Methods). An intuitive understanding of the λ half-life can be gained by viewing it as the time over which the contribution of a previously estimated decoder to the current decoder is reduced by a factor of two. Our BCI system is, therefore, ‘coadaptive’3,34,35,36; namely, while the user relied on visual feedback to gain a reliable control strategy, the decoder had to estimate the neural features underlying the controller during learning (Fig. 1b–f). To provide a quantitative estimate of the importance of ltCLDA with higher λ half-life values, we developed a computational model of a ‘two-learner’ BCI system (Fig. 1h and Supplementary Note). Our simulation found that ltCLDA with higher λ half-life values in the setting of a converging neural strategy translated to better fixed control (Fig. 1h). More specifically, ltCLDA provided a smoothly moving goal for the simulated user during training; this also allowed a better estimate of decoder weights to match the user’s strategy. In contrast, smaller λ half-life values provided a highly variable target for the simulated user during learning and thus likely resulted in impoverished learning; CLDA estimates of decoder weights generalized poorly to fixed control. Additionally, we tracked ltCLDA and its corresponding BCI performance over multiple months. The results showed relatively little variation in decoder weights and Kalman gain over time with continued ltCLDA (Supplementary Fig. 4a,b); fixed performance also continued to be stable (Supplementary Fig. 4c–e).
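The half-life interpretation above can be made concrete with a short sketch. It assumes an update in the style of the ‘SmoothBatch’ CLDA algorithm, in which each new batch estimate is blended with the current decoder parameters using λ = 0.5^(batch period / half-life); the batch period, function names and numbers are hypothetical and not taken from the Methods. Under ltCLDA the blended parameters would simply be carried into the next session instead of being reset.

```python
import numpy as np


def lambda_from_half_life(half_life_s: float, batch_period_s: float) -> float:
    # After half_life_s seconds (half_life_s / batch_period_s updates),
    # the weight on the old parameters has fallen to exactly one half.
    return 0.5 ** (batch_period_s / half_life_s)


def smooth_update(theta_old, theta_batch, lam):
    # Exponentially weighted blend of the previous decoder and the new
    # batch estimate; lam close to 1 means a long memory.
    return lam * np.asarray(theta_old) + (1.0 - lam) * np.asarray(theta_batch)


# Hypothetical example: 10-s batches with a 500-s half-life.
lam = lambda_from_half_life(500.0, 10.0)
theta = np.array([1.0])  # previous decoder parameter
for _ in range(50):  # 50 batches x 10 s = 500 s = one half-life
    theta = smooth_update(theta, np.array([0.0]), lam)
print(theta)  # the old parameter's contribution has halved

# Weight remaining on a decoder estimated one hour earlier:
for h in (500.0, 5000.0):
    print(h, 0.5 ** (3600.0 / h))
```

With a 500-s half-life, less than 1% of an hour-old estimate remains, whereas with a 5,000-s half-life roughly 60% remains; this is the sense in which longer half-lives imply a longer history of data.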

Emergence of a stable decoder map

We next examined how decoder weights (that is, for each of seven features) evolved over the 2-month period. When looking at ‘maps’ of decoder weights, we observed that the spatial map for some features appeared to stabilize rapidly, while others stabilized slowly (Fig. 2a,b: comparison of μ and γ2 ‘maps’; maps for all features are shown in Supplementary Figs. 5 and 6). The convergence trajectories (decoder angles relative to the final trial) during ltCLDA of the various decoder features were significantly different from each other (pairwise two-sample KS test between features at the 0.01 level, multiple-comparison corrected, average P = 3.97 × 10−5). The KS test here accounted for both the initial angle at the start of ltCLDA and how rapidly features converged at the end of ltCLDA. In particular, when focusing on the three exemplary features in Fig. 2c and fitting a linear model, we found that at the start of ltCLDA, γ2 was already closer to its final decoder than δ (difference in linear model intercept = 30.49 deg, P < 0.001, permutation test) and μ (difference in linear model intercept = 47.66 deg, P < 0.001, permutation test). Subsequently, γ2 exhibited a higher rate of convergence than δ (difference in linear model slope = 0.028 deg/trial, P < 0.001, permutation test) and μ (difference in linear model slope = 0.049 deg/trial, P < 0.001, permutation test). The different rates of convergence across features were not significantly related to initial decoder properties (Supplementary Table 1; linear regression of values from imagined sessions versus slope of convergence, P = 0.23). Supplementary Fig. 6a shows the separate feature maps at the end of the period and Supplementary Fig. 6b shows individual feature contributions across testing days.

a, Comparison of μ and γ2 decoder weights across days. Each box displays the L2 norm of the x-velocity and y-velocity decoder weights as a heat map each day. Each pixel represents the weight magnitude for a single ECoG channel. b, Examples of selected decoder weights for μ and γ2. Ant., anterior; Lat., lateral. c, Changes in angle between decoder weights at each trial and the final decoder at the end of ltCLDA. The colored lines represent different neural features. The asterisk highlights that γ2 power decoder-weight angles were significantly lower than those of other features; in general, all rates were significantly different from each other. d,e, Distribution of the slope for weight magnitude trends over time. Insets: respective μ and γ2 channels with a negative and positive slope. Values greater than zero (vertical dashed lines) indicate increasing weights over time. f, Increasingly restrictive levels of binary masks (γ2 masks are shown). Black squares represent channels that were retained. g,h, BCI performance (speed and Fitts’ ITR) while applying the different binary masks across all neural features during control (n.s., not significant). Error bars (mean ± s.d.) were generated using n = 16 trials per binary mask.

We also examined whether there was evidence for sparsification of control weights; such a process has been reported to occur during BCI learning in animal models15,21,37. This phenomenon has great translational relevance as it may allow BCI users to learn control using only a fraction of the features, thereby possibly permitting unused channels to be used for independent multiplexed control. During the convergence period, within each feature, the magnitudes of some weights increased over time (positive slope in Fig. 2e), and the magnitudes of other weights decreased over time (negative slope in Fig. 2d). These positive and negative slopes suggest that over multiple sessions there may be evidence of systematic upscaling and downscaling, respectively, of feature weights as identified by ltCLDA. Together, these results indicate that the process of convergence not only stabilized informative features over time, but also may have ‘pruned’ weights that were less important for control.
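The per-channel slopes in Fig. 2d,e can be sketched as a linear trend in weight magnitude across days. This minimal illustration assumes that, as in the Fig. 2a caption, the magnitude of a channel is the L2 norm of its x- and y-velocity weights for one feature, and that the trend is an ordinary least-squares line over days; the array layout and function name are hypothetical.

```python
import numpy as np


def weight_magnitude_slopes(weights, days):
    """Per-channel linear trend of decoder-weight magnitude across days.

    weights: (n_days, n_channels, 2) array of Vx/Vy weights for one feature.
    days:    (n_days,) array of day indices.
    Returns an (n_channels,) array; > 0 means upscaling, < 0 downscaling.
    """
    magnitude = np.linalg.norm(weights, axis=-1)  # (n_days, n_channels)
    # np.polyfit fits each column of a 2-D y independently.
    return np.polyfit(days, magnitude, 1)[0]


# Hypothetical example: channel 0 grows, channel 1 shrinks.
days = np.arange(5, dtype=float)
w = np.zeros((5, 2, 2))
w[:, 0, 0] = 1.0 + 0.1 * days  # Vx weight of channel 0
w[:, 1, 1] = 2.0 - 0.2 * days  # Vy weight of channel 1
print(weight_magnitude_slopes(w, days))  # approximately [0.1, -0.2]
```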

We also conducted online experiments to test the sparsification hypothesis by examining control when low-magnitude weights were set to zero, that is, ‘masked out’. Three different masks were tested by keeping the largest 75%, 50% and 25% of weights (Fig. 2f and Supplementary Fig. 7). There was no significant drop in performance (Fig. 2g,h; ANOVA, d.f. = 3, F = 1.33, P = 0.27). Overall, these results suggest that during map consolidation, ltCLDA selectively increased feature weights that were consistently important for control while simultaneously pruning uninformative weights (Supplementary Note).
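The masking procedure can be sketched as a top-fraction mask on weight magnitudes. This is a minimal illustration, assuming that masks are built per feature from channel magnitudes (as for the γ2 masks in Fig. 2f) and that masked channels have both Vx and Vy weights set to zero; the function names are hypothetical, and ties at the cutoff are broken arbitrarily by the sort.

```python
import numpy as np


def top_fraction_mask(magnitude, keep_frac):
    """Boolean mask keeping the largest keep_frac of magnitudes."""
    magnitude = np.asarray(magnitude, dtype=float)
    n_keep = int(round(keep_frac * magnitude.size))
    mask = np.zeros(magnitude.shape, dtype=bool)
    if n_keep > 0:
        order = np.argsort(magnitude, axis=None)[::-1]  # largest first
        mask.flat[order[:n_keep]] = True
    return mask


def apply_mask(weights, mask):
    """weights: (n_channels, 2) Vx/Vy weights; zero out masked channels."""
    return weights * mask[:, None]


# Hypothetical example: keep the largest 50% of four channels.
mag = np.array([0.1, 0.4, 0.2, 0.3])
mask = top_fraction_mask(mag, 0.5)
print(mask)  # channels 1 and 3 are retained
```

Repeating this with keep_frac set to 0.75, 0.5 and 0.25 yields the three increasingly restrictive masks; because the decoder itself is unchanged apart from the zeroed entries, any performance drop can be attributed to the removed channels.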

Emergence of a neural map

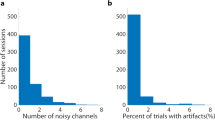

What drives the differential convergence of weights during ltCLDA? One possibility is that the ECoG signal itself is unstable for certain features and channels. However, the power spectrum over days was remarkably consistent (Supplementary Fig. 8); moreover, the distributions of day-to-day changes across channels were not significantly different between the different features (Fig. 3a; ANOVA, d.f. = 1, F = 3.83, P = 0.0513; Supplementary Fig. 9). In other words, the different rates of convergence did not appear to be driven by day-to-day differences in feature values or signal instability. A second possibility is that differences in representational stability, that is, the mapping between features and behaviors, could account for the different convergence rates. We calculated the preferred direction (PD) using a cosine-tuning model for individual channel features (Fig. 3b)15,38. As expected15,21, the day-to-day variation in PDs (computed as the circular s.d.) was lower at the end of ltCLDA than during daily initialization (Fig. 3c shows the comparison for γ2; two-sided Wilcoxon rank-sum test: z = 2.82, P = 4.78 × 10−3, n = 128 channels; daily: median = 46.083, s.d. = 18.96; continuous–late: median = 30.84, s.d. = 21.06; Supplementary Fig. 10 shows feature plots). There was a significant relationship between PD variation and weight magnitude for all features (Fig. 3d; r = −0.635, linear regression, P = 3.47 × 10−102); the solid line shows an exponential fit, and the dashed lines represent the 95% confidence interval (CI). Moreover, there was no significant relationship between well-tuned features and the initial weights after an imagined block (regression between initial weight magnitude and PD s.d. was r = −0.06). This suggested that during co-learning with ltCLDA, higher decoder weights were assigned to emergent well-tuned features.
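The PD analysis can be sketched in two steps: a cosine-tuning fit per channel and day, then the circular s.d. of the fitted PDs across days. This minimal illustration assumes the common linear form f(θ) = b0 + b1 cos θ + b2 sin θ, with PD = atan2(b2, b1) and R2 taken from the fit, and the standard circular s.d. sqrt(−2 ln R̄), where R̄ is the mean resultant length; the function names and synthetic values are hypothetical, and the exact fitting procedure in the Methods may differ.

```python
import numpy as np


def fit_cosine_tuning(theta, response):
    """Fit f = b0 + b1*cos(theta) + b2*sin(theta); return (PD in rad, R^2)."""
    X = np.column_stack([np.ones_like(theta), np.cos(theta), np.sin(theta)])
    b, *_ = np.linalg.lstsq(X, response, rcond=None)
    pred = X @ b
    ss_res = np.sum((response - pred) ** 2)
    ss_tot = np.sum((response - response.mean()) ** 2)
    pd = np.arctan2(b[2], b[1])  # direction of maximal response
    return pd, 1.0 - ss_res / ss_tot


def circular_sd_deg(angles_rad):
    """Circular s.d. (degrees) from the mean resultant length."""
    r = np.abs(np.mean(np.exp(1j * np.asarray(angles_rad))))
    r = min(r, 1.0)  # guard against rounding slightly above 1
    if r <= 0.0:
        return float("inf")  # uniformly spread angles
    return float(np.degrees(np.sqrt(-2.0 * np.log(r))))


# Hypothetical channel: 8 center-out targets, PD at 40 degrees.
theta = np.linspace(0.0, 2.0 * np.pi, 8, endpoint=False)
resp = 2.0 + 3.0 * np.cos(theta - np.deg2rad(40.0))
pd, r2 = fit_cosine_tuning(theta, resp)
print(np.degrees(pd), r2)  # PD near 40 degrees, R2 near 1

# PDs from several days spread by +/-10 degrees.
print(circular_sd_deg(np.deg2rad([30.0, 50.0])))  # about 10 degrees
```

A channel whose PD stays put across days yields a small circular s.d., which is the sense in which Fig. 3c,d relate PD stability to decoder-weight magnitude.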

a, Distribution of standardized (z-scored) average γ2 power values for each day. Features were normalized relative to a 2-min baseline period collected at the beginning of each recording session. b, Cosine-tuning fits for an example channel across days (n = 15 days). Inset: a tuning fit for a single day; error bars indicate the s.e.m. c, Distributions of the s.d. of PD during daily initialization and the last 5 days of ltCLDA were significantly different (two-sided Wilcoxon rank-sum test: z = 2.822, P = 4.78 × 10–3, n = 128 channels; daily: median = 46.08, s.d. = 18.96; continuous–late: median = 30.84, s.d. = 21.06). Inset: top 25% of channels. d, Decoder weight magnitude (day +36) was significantly correlated with the s.d. of PDs within the last 5 d of continuous adaptation (r = −0.63, P = 3.47 × 10−102). The solid line shows the exponential fit, and the dashed lines show the 95% CIs (r.m.s.e. = 2.93 × 10−4, P = 3.466 × 10−102). e, Comparison of the spatial distribution of cosine-tuning fits (that is, R2 is variance explained by a cosine-tuning model per channel for γ2) on the final day of ltCLDA with the decoder weight map. Left: goodness of fit for the cosine-tuning model for the γ2 feature as a heat map. Right: L2 norm of the x- and y-velocity decoder weights as a heat map. Also shown is the correlation between the two maps. f, Correlation of cosine-tuning goodness-of-fit spatial distributions and decoder-weight magnitudes for each feature across days. γ2 was relatively stable; other features changed at different timescales. The red asterisk represents the data in e.

Moreover, the long-term coadaptation strategy resulted in maps in which decoder weights closely corresponded to well-tuned channels (Fig. 3e shows this correspondence for γ2). The R2 metric measures how much of the feature’s task-dependent activity is modulated as a function of target direction using a cosine-tuning model15; higher values thus indicate that a greater fraction of the neural variance is modulated by target direction. Figure 3e shows the distribution of R2 across the array for the γ2 map on the last day of ltCLDA. There was a high correlation between this distribution of R2 values and the decoder magnitude map (ρ = 0.917); we interpreted this to mean that features with higher weights were also likely to be well tuned.

We then measured the evolution of tuning across features and time. Figure 3f shows how the different feature maps evolved over the training period; while the γ2 map stabilized quickly (that is, the correlation between tuning and weight maps rapidly plateaued), other maps evolved more slowly. This difference in stabilization is consistent with the notion of different convergence rates for neural tuning. Based on the masking experiments, which revealed that only a fraction of features were necessary, we examined whether the top 25% of features, in terms of weight magnitude, became more correlated over time. We found that with ltCLDA, there was greater correlation between these neural features during control; this was not evident for the bottom 25% of features (Supplementary Fig. 11). Together, this suggests that although daily initialization may be sufficient to capture γ2 power representations, ltCLDA appears to assist in stabilizing the other feature maps in a stepwise manner over time.

Plug-and-play performance

We next wondered whether ltCLDA can result in PnP, that is, stable performance without recalibration over a period of weeks. Thus, we sought to specifically test whether the participant could (1) achieve BCI control across days or months without further decoder adaptation and (2) learn stacking of additional control dimensions without degradation of the previously consolidated map. PnP tests were first performed for a period of multiple days (Supplementary Fig. 1f); this was conducted at the end of the experiments shown in Fig. 1. There was no significant degradation in performance over this period (Supplementary Fig. 1f shows the performance and timing of experiments).

We next evaluated PnP performance over a much longer period. In this case, we started a new ltCLDA experiment using a reduced set of features. As suggested by the masking experiments (Fig. 2f and Supplementary Fig. 7) and our data analysis (Supplementary Figs. 6 and 10), only a fraction of the features were needed for control; thus, we conducted ltCLDA using only three features per channel (δ, β and γ2). Figure 4a–c shows the resulting performance and time relative to the experiments in Fig. 1 (Supplementary Fig. 4). We then tested PnP control over a 44-d period; experimental access dictated the timing. Strikingly, PnP BCI performance was stable for >1 month from the last calibration; there was no significant drop in overall performance when comparing CLDA and the PnP session (unpaired t-tests: effect size = 0.12, P = 0.19). What were the performance trends within each session? When we compared the first block to the last block of each daily PnP session, performance improved by 20.1% ± 10% within the session. Given that these testing periods were many days apart, this finding might be consistent with the notion of ‘warm-up’ periods associated with recall of complex skills39,40. Overall, our participant appeared capable of recalling the consolidated neuroprosthetic strategy after extended breaks.

a, Angle between the decoder at each trial and the final PnP decoder. b, BCI performance measures during fixed control. Speed and accuracy changes during periods of decoder convergence and fixed decoder/PnP. c, BCI performance over time as measured by Fitts’ ITR (dashed line is the mean baseline ITR). d, Left: angle between decoders (for each trial and with reference to final PnP decoder) during ltCLDA with a consolidated decoder and CLDA after reinitialization. Time points 0, 1 and 2 reflect the end of ltCLDA with the consolidated decoder, reinitialization and the endpoint after further CLDA. Right: BCI performance at time points 0, 1 and 2. e, Left: mean angle between the decoders with ltCLDA and after reinitialization (n = 7 experiments). Right: corresponding mean BCI performance at the respective time points (n = 7, 5, 5), with error bars (mean ± s.d.). f,g, Illustration of the changes in decoder weight maps during ltCLDA with a stabilized decoder and CLDA after reinitialization.

What is the effect of decoder reinitialization with consolidated control? After ltCLDA had stopped, we compared performance of newly initialized decoders to the final decoder after ltCLDA; decoders were initialized using the approach in Fig. 1. Figure 4d,e compares decoders with ltCLDA and after reinitialization. After reinitialization (decoder 1), there was a >45° deviation from the fixed decoder (decoder 0) and a drop in performance (Fig. 4d,e). Following further CLDA, the reinitialized decoders appeared to converge back to the PnP decoder (decoder 2; Fig. 4d,e). This further highlights the potential detrimental effects of recalibration after consolidation of a neuroprosthetic control strategy. Figure 4f,g illustrates example variations in the neural maps across 48 trials for ltCLDA and after decoder reinitialization. It shows that the neural map for ltCLDA is largely stable across trials, and that, while reinitialization perturbed the map, there was a partial return to the original map with corresponding improvements in performance.

Long-term stacking of control dimensions

Given that a stable map for control was established, we next tested whether the participant could stack two ‘neural clickers’ (see Fig. 5a for task design). The participant was able to achieve reliable click control in a grid task (with > 85% accuracy); in doing so, the participant demonstrated that he could generalize his control to the new task workspace (see Fig. 5a for cursor trajectories; Supplementary Movie 2 with a bit rate of 0.92 bits per second (bps); and see Supplementary Fig. 4 for timeline of experiments). In general, with the change in task space and the addition of the clicker, the participant could typically achieve 0.5–1 bps. Learning this new d.f. did not interfere with previously consolidated maps (Fig. 5b). We also examined the reliability and neural strategy underlying the clicker. By projecting the activity onto the click decoder hyperplane, we visualized the participant’s strategy (Fig. 5c). Once he learned to use the clicker, the participant was able to selectively enter this subspace to click in a stable manner across days (Fig. 5d). On average, the participant entered the target 1.065 s (0.995–1.139 s) before ‘clicking’. He was also able to largely avoid clicking while he directed the cursor to the target. Notably, there was no correlation between low speed values and clicking (Supplementary Fig. 12); these were designed to run independently in parallel. Once the first click was stable for >1 month, we stacked a second clicker (B) while keeping the decoder for clicker A fixed. As illustrated in Fig. 5e, the participant was able to enter a given target and engage either clicker A or B based on instructions. Overall, our results highlight the potential for ECoG-based control to stack multiple discrete dimensions for control.
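The clicker strategy in Fig. 5c can be sketched as a linear projection followed by threshold detection. This is a minimal illustration, assuming a click is registered whenever the projection of the neural features onto the click decoder weights crosses a fixed threshold from below; the weights, bias, threshold and function names are hypothetical, and the study’s click decoder may include additional smoothing or dwell requirements that are not modeled here.

```python
import numpy as np


def click_projection(features, w_click, bias=0.0):
    """Project (n_bins, n_features) neural features onto click weights."""
    return features @ w_click + bias


def click_onsets(projection, threshold):
    """Bin indices where the projection crosses the threshold from below.

    A projection that starts above threshold in bin 0 is not counted.
    """
    above = projection >= threshold
    return np.flatnonzero(above[1:] & ~above[:-1]) + 1


# Hypothetical example: only the first feature drives the clicker.
feats = np.column_stack([[0.0, 0.2, 0.6, 1.2, 0.8, 1.5], np.full(6, 5.0)])
w = np.array([1.0, 0.0])
s = click_projection(feats, w)
print(click_onsets(s, threshold=1.0))  # clicks at bins 3 and 5
```

Because a second clicker would use its own weight vector and threshold, stacking clicker B on top of a fixed clicker A amounts to adding another projection of this kind while leaving A untouched.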

a, Outline of the click task in which the participant had to move the cursor to the cued target and ‘click’ to select it. Two example trajectories are included. b, Comparison of γ2 decoders before and after learning click control. Also shown are the γ2 weights from the click decoder. The angle between the click and cursor-control decoder was ~55°. c, An example of changes for neural-state values in click space; the moment that click happens, the neural state crosses the click threshold. d, Illustration of click space across multiple days. Clicker A was stable across days. The vertical dashed lines show the moments that cursor enters the target area. e, Left: outline of the click task for two-clicker testing (A and B). The participant had to move the cursor to the cued target. After entering the target, the participant was instructed to click either A or B in a randomized manner. Right: comparison of γ2 maps after learning the second clicker (B).

Discussion

In summary, our ECoG implant allowed us to specifically test approaches for ltCLDA that promoted consolidation of control, PnP and dimensional stacking. Our results highlight the importance of performing ltCLDA in which the decoder weights are carried over with a long time constant. Of note, during the early phase of ltCLDA, the participant could seamlessly continue ltCLDA even when sessions were separated by days. Our analyses suggest a mechanism for why ltCLDA offers a clear benefit over reinitialization. First, while the convergence of some weights and the formation of some representations were rapid, ltCLDA appeared to allow parallel slower rates of consolidation for other weights. Second, our results indicate that ECoG BCIs can stack additional d.f. over a period of days while maintaining existing control. Together, these results indicate that ECoG can offer the potential for long-term control without recalibration, thereby dramatically reducing setup time.

What is the specific role of ltCLDA in allowing proficient control? In our approach, ltCLDA resulted in consistent performance improvements when contrasted with single-day CLDA with reinitialization. When a similar single-day CLDA was performed after consolidation (Fig. 4), performance improved rapidly and weights reverted toward the consolidated map. This suggests that single-day CLDA might have different roles when a control strategy is consolidated versus when it is nascent. This might be consistent with the observation that single-session CLDA in able-bodied animals can allow rapid improvements3,34,35; it is possible that because these animals had a robust movement representation, CLDA remapped representations to align with the decoder and thereby rapidly improved control. We can also compare ltCLDA to a ‘fixed-decoder’ approach. Theoretically, it is possible that a fixed-decoder strategy may also work; this was demonstrated in animal experiments in which biofeedback allowed for the emergence of neural representations to match decoder properties15,21,30. In those cases, however, experimental conditions could be manipulated to maintain engagement (for example, food scheduling). In contrast, our user did not show consistent engagement in the setting of poor performance. For example, during the ‘daily initialization’ period, on 2 d (days –11 and –7), the user was unable to perform the task with a fixed decoder after CLDA ended. It was only with ltCLDA that we observed sustained improvements in performance. Our analysis showing slow decoder convergence is consistent with these findings as well. Together, our results support the growing notion that CLDA, based on coadaptation, can more rapidly allow skilled control3,34,35.

In general, skilled behaviors, once consolidated, can be performed accurately and consistently. While the precise neural basis of such control is not fully known, there is growing evidence that stable neural representations underlie stable behaviors. For example, studies in nonhuman primates have shown that single-neuron directional tuning can be quite stable for skilled behaviors21,41,42. Furthermore, task-related neural manifolds, representing the activity patterns of neural populations, can be remarkably stable for skilled behaviors17,43. Our chronic ECoG recordings provide evidence that mesoscale representations in people with paralysis also demonstrate relative stability during task periods with consolidation. Our results also indicate that the δ, β and γ2 bands demonstrate the fastest convergence and are sufficient to allow stable control. These bands also demonstrated robust plasticity with ltCLDA (Supplementary Fig. 10). One possibility is that they directly capture neural processes. While there is a wealth of evidence for β44 and γ245 in motor neuroscience, evidence for δ is growing46,47. While γ2 is likely a correlate of localized spiking48, δ may represent emergent coordinated population spiking across cortical and subcortical regions46,47,49. In contrast, features that were ‘noisy’ and poorly represented control may have been downscaled; this is most apparent for the δ angle, which experienced aggregate downscaling (Supplementary Fig. 6). Further study is required to fully understand the mechanisms of plasticity and convergence described here.

It is worth noting that our results were obtained in a single participant. The main goal of this study was to demonstrate the feasibility of achieving PnP in individuals with paralysis using an ECoG device. Testing of this approach in additional individuals is certainly an important next step. In addition to providing general support for ECoG, this will also help validate the importance of specific features for BCI control. There has been a move toward the use of stereotactic electroencephalography (sEEG) recordings over ECoG for epilepsy patients50; sEEG electrodes can be selectively placed at desired locations and can target a more distributed network, including deeper structures. sEEG can also stably record field potentials. Future research can contrast the benefits of recording from an ECoG grid versus recording from multiple sites in a network. In addition, although translational studies using spike-based recordings have recalibrated decoders because of signal instability, new decoding methods or signal conditioning might minimize calibration time with spiking signals. For example, studies in able-bodied monkeys have suggested that thresholded multi-unit activity can stably decode kinematics without daily recalibration17,51; however, local field potentials, perhaps analogous to ECoG, were more stable.

Lastly, while our results indicate the feasibility of PnP, the bit rates shown here are below the highest rates reported for spike-based BCIs. In the best performance to date, rates for three individuals ranged from 1.4 to 4.2 bps7. By comparison, our continuous control performance is equivalent to the best performance of spike-based BCIs from a few years ago (ref. 52). For our best performance, which was achieved in the grid task, we reset the cursor to the center after every trial, a factor that potentially boosted performance. Future studies could weigh continuous control against cursor resets according to task needs and data rates. While performance is key, it is also important to document the calibration time required to achieve skilled performance and to monitor performance variability across days, especially when higher performance requires more calibration time, because calibration time and variability affect real-world use of assistive technology20,22,23,24,25. There is likely an optimal balance between stability, reliability and performance; understanding this balance may be key for robust translation. To enable long-term reliable control of complex devices, neuroprosthetic control may need to tap into natural processes of memory consolidation for high d.f. devices. For example, although studies have impressively shown the feasibility of reach-to-grasp control14,19,52,53, another study has noted that degradation of spike signals and a lack of reliable decoding resulted in variable performance across days53. Thus, it will be important for future studies to compare daily rebuilding of complex control with methods that promote consolidation and optimize the trade-off between stability and performance.

Methods

Study design

Approval for this study was granted by the US Food and Drug Administration (FDA; investigational device exemption) and the University of California, San Francisco Institutional Review Boards. The study was also registered before enrollment (NCT03698149). The participant described in this report has provided permission for photographs, videos and portions of his protected health information to be published for scientific and educational purposes. Participant B1 is a right-handed man who was 36 years old at the start of this study. About 16 years before this, he was diagnosed with extensive bilateral pontine strokes resulting from a presumed vertebral dissection after a motor vehicle accident. This stroke left the individual with severe spastic tetraparesis (Medical Research Council scale of the right upper extremity: 1/5 for finger flexion, 3/5 for elbow flexion, 2/5 for elbow extension and 0/5 for other upper limb muscles; upper extremity Fugl–Meyer score of 7/66). Participant B1 is wheelchair bound, has difficulty speaking and has limited, slow control over proximal and distal limb movements.

ECoG array and surgical implantation

As outlined above, we obtained FDA clearance to chronically implant an ECoG array in individuals with severe motor weakness. The device consisted of a 128-channel ECoG array (PMT; 128 channels in an 8 × 16 arrangement (Fig. 1a); total array dimensions of 6.7 × 3.2 cm; 2-mm recording contacts with 4-mm spacing between electrodes) connected to a Blackrock transdermal connector (Blackrock Microsystems). The PMT ECoG array is commercially available and currently widely used for clinical monitoring (typically for <28 d). The Blackrock pedestal system is also commercially available and is mainly used for microelectrode arrays. In our specific experiment, channels from the ECoG device were bonded by Blackrock Microsystems to the pedestal connector such that all 128 channels were mapped to the 128-channel transdermal connector. Connection reliability was checked, and impedance measurements were conducted before implantation. After completion of informed consent and medical and surgical screening procedures, the ECoG array was surgically implanted over sensory and motor cortices (Fig. 1).

Signal conditioning and feature extraction

Neural signals were collected from the ECoG grid at 30 kHz via the NeuroPort recording system (Blackrock Microsystems). The signal was filtered with an analog bandpass filter (0.3–500 Hz) and an adaptive line-noise cancellation filter with a 10-s time constant before it was digitized and sampled at 1 kHz. Signal blocks were then streamed via Ethernet at a rate of either 5 or 10 Hz (that is, 200-ms or 100-ms blocks) to a computer that performed signal conditioning and feature extraction using custom MATLAB software (MathWorks). We referenced the neural signals by subtracting the common median across channels at each sample. Then, we extracted the neural features.
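The common median reference can be sketched in a few lines. This is a minimal NumPy illustration, not the authors' MATLAB code; it assumes each streamed block arrives as a (samples × channels) array, and the function name is hypothetical.

```python
import numpy as np

def common_median_reference(block: np.ndarray) -> np.ndarray:
    """Subtract the across-channel median from every sample.

    block: (n_samples, n_channels) array, e.g. (100, 128) for a 100-ms
    block at 1 kHz. Returns an array of the same shape.
    """
    # Median over channels for each sample, kept as a column so that it
    # broadcasts across all channels of that sample.
    median = np.median(block, axis=1, keepdims=True)
    return block - median

# Usage: a signal shared by all channels (e.g. a common artifact) is removed.
rng = np.random.default_rng(0)
raw = rng.standard_normal((100, 128))
shared = 5.0 * np.sin(np.linspace(0, 2 * np.pi, 100))[:, None]
referenced = common_median_reference(raw + shared)
```

The median, rather than the mean, keeps a single noisy channel from contaminating every other channel's reference.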

To compute low-frequency features (<13 Hz), we applied a filter bank of bandpass filters (Butterworth; third order) to each neural channel: delta, δ: 0.5–4 Hz; theta, θ: 4–8 Hz; and mu, μ: 8–13 Hz. Each filtered signal was stored in a 2-s buffer. To compute the power of each band, we calculated the analytic amplitude across the entire 2-s buffer and then computed the average log power in the most recent signal block. For the delta phase, we computed the 200-ms circular average of the Hilbert transform angle.
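A sketch of the low-frequency pipeline follows, assuming SciPy's `butter`, `sosfilt` and `hilbert` as stand-ins for the MATLAB filters. Whether the original filtering was causal with a fresh state per buffer is not stated; causal filtering from a zero state is an assumption here, as are the function and dictionary names.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfilt

FS_HZ = 1000
LOW_BANDS_HZ = {"delta": (0.5, 4.0), "theta": (4.0, 8.0), "mu": (8.0, 13.0)}

def low_freq_features(buffer, block_len, fs=FS_HZ):
    """Low-frequency power and delta phase from a 2-s buffer (sketch).

    buffer: (n_samples, n_channels), the most recent 2 s of referenced data.
    block_len: samples in the most recent signal block (e.g. 100 at 10 Hz).
    Returns a dict of per-channel arrays.
    """
    feats = {}
    for name, (lo, hi) in LOW_BANDS_HZ.items():
        # Third-order Butterworth bandpass in second-order-section form.
        sos = butter(3, [lo, hi], btype="bandpass", fs=fs, output="sos")
        filtered = sosfilt(sos, buffer, axis=0)   # causal, zero initial state (assumed)
        analytic = hilbert(filtered, axis=0)      # analytic signal over the whole buffer
        power = np.abs(analytic[-block_len:]) ** 2
        # Average log power over the most recent block; tiny offset avoids log(0).
        feats[f"{name}_power"] = np.mean(np.log(power + 1e-12), axis=0)
        if name == "delta":
            window = int(0.2 * fs)                # 200-ms phase window
            phase = np.angle(analytic[-window:])
            # Circular mean: angle of the mean unit phasor.
            feats["delta_phase"] = np.angle(np.mean(np.exp(1j * phase), axis=0))
    return feats
```

The circular mean is used for phase because an arithmetic mean of angles breaks down near the ±π wraparound.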

To compute high-frequency features (>13 Hz), we split functionally relevant neural frequency bands into several smaller bands54. We applied a filter bank of these narrower bandpass filters (Butterworth; third order) to each neural channel: beta, β: 13–19 Hz and 19–30 Hz; low gamma, γ1: 30–36 Hz, 36–42 Hz and 42–50 Hz; and high gamma, γ2: 70–77 Hz, 77–85 Hz, 85–93 Hz, 93–102 Hz, 102–113 Hz, 113–124 Hz, 124–136 Hz and 136–150 Hz. The power of each filtered signal was computed by taking the log of the average squared signal for every signal block. Finally, the sub-band powers within each functional band (β, γ1 and γ2) were averaged together.
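The sub-band averaging can be sketched as below. This is an illustrative SciPy version under two assumptions not stated in the text: the filter is run over a longer buffer so that the last block is past the start-up transient, and averaging is done over the sub-band log powers. Names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfilt

HIGH_BANDS_HZ = {
    "beta": [(13, 19), (19, 30)],
    "low_gamma": [(30, 36), (36, 42), (42, 50)],
    "high_gamma": [(70, 77), (77, 85), (85, 93), (93, 102),
                   (102, 113), (113, 124), (124, 136), (136, 150)],
}

def high_freq_features(buffer, block_len, fs=1000):
    """Per-channel log power for beta, low gamma and high gamma (sketch).

    buffer: (n_samples, n_channels); only its last block_len samples are used
    for the power estimate, after filtering the whole buffer.
    """
    feats = {}
    for name, sub_bands in HIGH_BANDS_HZ.items():
        sub_log_powers = []
        for lo, hi in sub_bands:
            sos = butter(3, [lo, hi], btype="bandpass", fs=fs, output="sos")
            block = sosfilt(sos, buffer, axis=0)[-block_len:]
            # Log of the mean squared signal in the most recent block.
            sub_log_powers.append(np.log(np.mean(block ** 2, axis=0) + 1e-12))
        # Average the narrow sub-bands into one functional band per channel.
        feats[name] = np.mean(sub_log_powers, axis=0)
    return feats
```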

Every time we plugged the participant into the system and began recording neural features, we recorded spontaneous neural activity during a 120-s ‘baseline’ period in which the participant was asked to rest. We computed the mean and s.d. of each neural feature during baseline. During each recording, the neural power features were z-scored by subtracting the baseline mean and dividing by the baseline s.d. This procedure yielded a total of 896 normalized neural features (128 channels × (6 power features + 1 phase feature)).
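The baseline normalization and the resulting 896-feature count can be illustrated as follows. This sketch assumes the delta phase feature is passed through without z-scoring (the text only z-scores power features) and assumes a simple column ordering of power features followed by phase; both are illustrative choices, as are the names.

```python
import numpy as np

N_CHANNELS = 128
N_POWER_FEATURES = 6   # delta, theta, mu, beta, low gamma, high gamma
N_PHASE_FEATURES = 1   # delta phase

def baseline_stats(baseline_power):
    """Mean and s.d. of each power feature over the rest baseline.

    baseline_power: (n_bins, 768), e.g. 1,200 bins for 120 s at 10 Hz.
    """
    return baseline_power.mean(axis=0), baseline_power.std(axis=0)

def normalize(power, phase, base_mean, base_sd):
    """z-score power features against baseline and append phase (assumed
    unnormalized). Returns (n_bins, 896)."""
    z = (power - base_mean) / base_sd
    return np.concatenate([z, phase], axis=1)

# 128 channels x (6 power + 1 phase) = 896 features.
N_FEATURES = N_CHANNELS * (N_POWER_FEATURES + N_PHASE_FEATURES)
```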

BCI tasks

We used custom MATLAB software (MathWorks) that interfaced with the Psychophysics Toolbox to control task flow and update a computer monitor with low latency55.

Center-out task

The participant was instructed to move the ‘cursor’ to the ‘target’ (blue and green dots, respectively; Fig. 1). A target was placed at the center of the screen, and eight outer targets were placed on a circle around the center target at 45° intervals. Targets were presented in blocks of eight trials; within a block, each outer target was presented once in a random order. A trial consisted of one reach to the center target and another reach to the instructed outer target. To select a target, the center of the cursor had to overlap with the target for a single 200-ms time bin. If the participant failed to select the target within the time limit (15 s for the first session of daily initialization and 25 s thereafter), the trial was registered as an error, and the cursor was reset to the center.
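The target layout and block structure can be made concrete with a short sketch. The selection test (cursor center inside the target circle during a bin) is an assumed reading of "overlap", and all names are hypothetical.

```python
import math
import random

def outer_targets(radius_cm):
    """Eight outer target centers (x, y) in cm, at 45-degree intervals
    around a center target at (0, 0)."""
    return [(radius_cm * math.cos(math.radians(a)),
             radius_cm * math.sin(math.radians(a)))
            for a in range(0, 360, 45)]

def block_order(rng):
    """One block of eight trials: each outer target appears once, in random order."""
    order = list(range(8))
    rng.shuffle(order)
    return order

def selected(cursor_xy, target_xy, target_diameter_cm):
    """Assumed selection rule for one 200-ms bin: the cursor center lies
    inside the target circle."""
    return math.dist(cursor_xy, target_xy) <= target_diameter_cm / 2

# Usage: targets 5 cm from the center, one randomized block.
targets = outer_targets(5.0)
order = block_order(random.Random(0))
```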

We had three types of blocks: imagined control blocks, CLDA control blocks and fixed control blocks. During imagined control blocks, the participant was not in control of the cursor; rather, the cursor followed preset trajectories to complete the task. The participant was instructed to imagine moving his arm up and down to move the cursor up and down and to imagine moving his head left and right to move the cursor left and right. The participant did not move during this task and was unable to move at speeds comparable to the virtual cursor movements. During CLDA control blocks, the participant was in control of the cursor, but the decoder parameters were updated during each time bin (see “Cursor-control algorithm”). During the daily initialization period (days –20 through –6) and the first 2 d of ltCLDA, we began each session with a small level of assistance (~5–10%; see “Parameter adaptation”), which decayed within a few blocks. This assistance was important for mitigating the frustration of using an initially inaccurate decoder and for maintaining the participant’s engagement. Once the user was able to reliably complete the task, we stopped using assistance entirely. During fixed control blocks, the participant was in full control of the cursor and the decoder parameters were held constant.

During a few sessions, the cursor was automatically reset to the center after each reach target was selected. Target sizes and distances varied across sessions based on the participant’s performance and preferences. Targets were placed 4.4–7.5 cm from the start target and had diameters of 1.7–2.9 cm.

Point-and-click task

Briefly, gray squares were laid out in a 4 × 4 grid. One square was illuminated green to indicate that it was the target for that trial. Initially, the target could be selected if the participant held the cursor over it for 600–1000 ms, a duration tuned to the cursor speed and drift on that testing day. Once the participant gained the ability to perform a neural ‘click’, any square could be selected by moving the cursor to it and clicking. The participant was then instructed to move the cursor to the illuminated target and click to make the correct selection. After each trial, the next target was randomly selected from the grid and immediately illuminated (Fig. 5a). While cursor velocities were continually updated (that is, there was no intertrial interval), we reset the cursor position to the grid center after every trial.

Cursor-control algorithm

Velocity Kalman-filter decoder

The online cursor-control algorithm used here was first described by Gilja et al.3; we briefly summarize it here. Let xt and yt be vectors representing the kinematic state of the cursor and the neural features at time t, respectively. The state vector xt contains the horizontal and vertical position (p) and velocity (v) of the cursor at time t, together with a constant term:

\[ x_t = \left[ p_t^{\mathrm{hor}}, p_t^{\mathrm{vert}}, v_t^{\mathrm{hor}}, v_t^{\mathrm{vert}}, 1 \right]^T \]

The Kalman filter models the evolution of cursor kinematics as a linear dynamical system and models the relationship between the kinematic state and the neural features as linear:

\[ x_t = A x_{t-1} + w_t, \qquad y_t = C x_t + q_t \]

where A and C are the state and observation matrices and w and q are Gaussian noise sources: wt~N(0,W) and qt~N(0,Q). In our study, A and W were set parameters that were hand-tuned to optimize performance.

For the majority of our experiments, the velocity terms of A and W were set to a = 0.825 and w = 150, respectively. C and Q were fit parameters during an adaptation procedure (see “Parameter adaptation”).

During online implementation of the Kalman filter, the decoder kept track of an internal estimate of the uncertainty in the cursor kinematic state (P). Following ‘innovation 2’ of ref. 3, we set the position and position-velocity interaction terms of P to 0 at each time step. This reduced high-frequency jitter in the cursor kinematics.
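One decoder step, including the zeroing of position terms in P, can be sketched as follows. The placement of a and w on the velocity diagonal of A and W and the 100-ms step size are assumptions for illustration; zeroing is applied here to the predicted covariance, which is one reasonable reading of "at each time step". Function names are hypothetical.

```python
import numpy as np

def make_dynamics(a=0.825, w=150.0, dt=0.1):
    """State x = [p_hor, p_vert, v_hor, v_vert, 1]. Assumed structure:
    positions integrate velocity, velocities decay by a, noise w on velocity."""
    A = np.eye(5)
    A[0, 2] = A[1, 3] = dt     # position += dt * velocity
    A[2, 2] = A[3, 3] = a      # velocity damping
    W = np.zeros((5, 5))
    W[2, 2] = W[3, 3] = w      # process noise on velocity only
    return A, W

def kf_step(x, P, y, A, W, C, Q):
    """One velocity Kalman-filter update; returns (x_new, P_new, K)."""
    x_pred = A @ x
    P_pred = A @ P @ A.T + W
    # 'Innovation 2': treat position as known, so zero the position and
    # position-velocity terms of the state covariance.
    P_pred[0:2, :] = 0.0
    P_pred[:, 0:2] = 0.0
    S = C @ P_pred @ C.T + Q                   # innovation covariance
    K = np.linalg.solve(S, C @ P_pred).T       # K = P C^T S^-1 (S, P symmetric)
    x_new = x_pred + K @ (y - C @ x_pred)
    P_new = (np.eye(5) - K @ C) @ P_pred
    return x_new, P_new, K
```

With the position rows of P zeroed, the gain has zero rows for position, so position simply integrates the decoded velocity.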

Parameter adaptation

To adapt the decoder parameters, we followed the procedure outlined in Dangi et al.35. This method frames adaptation as a recursive maximum-likelihood problem, which enables small adjustments to the Kalman C and Q matrices at each time bin. As in the Kalman-filter section, xt and yt represent the kinematic state of the cursor and the neural features at time t. We introduce a new term, \({{\tilde{x}}_t}\), which represents the intended cursor velocity (\({{\tilde{x}}_t} = \left[ {\tilde v_t^{\mathrm{hor}},\tilde v_t^{\mathrm{vert}},1} \right]^T\)). In this work, we assumed that at each time step the user intended to move the cursor directly from its current position to the current target location at a constant speed3.

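Next, we defined three ‘sufficient statistics’, R, S and T, which tracked the covariance of the intended cursor state, the covariance of the intended cursor state with the neural features and the covariance of the neural features, respectively; we also kept track of EBS, the effective batch size. Each of these statistics was updated at each time step:

\[ R_t = \lambda R_{t-1} + \tilde{x}_t \tilde{x}_t^T, \quad S_t = \lambda S_{t-1} + y_t \tilde{x}_t^T, \quad T_t = \lambda T_{t-1} + y_t y_t^T, \quad \mathrm{EBS}_t = \lambda\, \mathrm{EBS}_{t-1} + 1 \]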

Here, λ is a preset term that defines the rate of adaptation. We report λ in terms of its more intuitive half-life equivalent, h (Supplementary Note). During daily initialization experiments, in which decoder convergence had to occur within a day, h was set to values from 250 to 1,000 s. During ltCLDA experiments, we could use slower adaptation rates; h was set to 500–5,000 s. The Kalman matrices C and Q could be updated directly from these sufficient statistics:

\[ C = S_t R_t^{-1}, \qquad Q = \frac{1}{\mathrm{EBS}_t}\left( T_t - C S_t^T \right) \]

This C matrix only contains velocity weights and baseline weights. To use it with the full cursor state, including position terms, we added two columns of zeros.
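The adaptation loop can be sketched as below. This is an illustrative NumPy version, not the code of Dangi et al.; it assumes λ is applied once per decoder bin of length dt, so that a sample's weight halves after h seconds (λ^(h/dt) = 1/2), and it assumes a user-chosen constant speed for the intended velocity. Class and function names are hypothetical.

```python
import numpy as np

def half_life_to_lambda(h_s, dt_s):
    """Forgetting factor whose weights halve after h_s seconds of dt_s bins."""
    return 0.5 ** (dt_s / h_s)

def intended_velocity(pos, target, speed):
    """Intended state [v_hor, v_vert, 1]: straight toward the target at a
    constant speed (speed value is an assumption)."""
    d = np.asarray(target, float) - np.asarray(pos, float)
    n = np.linalg.norm(d)
    v = np.zeros(2) if n == 0 else speed * d / n
    return np.array([v[0], v[1], 1.0])

class RMLAdapter:
    """Exponentially weighted sufficient statistics for CLDA (sketch)."""

    def __init__(self, n_features, n_state=3):
        self.R = np.zeros((n_state, n_state))        # intended-state covariance
        self.S = np.zeros((n_features, n_state))     # features x intended state
        self.T = np.zeros((n_features, n_features))  # feature covariance
        self.ebs = 0.0                               # effective batch size

    def update(self, x_int, y, lam):
        self.R = lam * self.R + np.outer(x_int, x_int)
        self.S = lam * self.S + np.outer(y, x_int)
        self.T = lam * self.T + np.outer(y, y)
        self.ebs = lam * self.ebs + 1.0

    def kalman_params(self):
        # C = S R^-1, computed with a solve (R is symmetric).
        C_vel = np.linalg.solve(self.R, self.S.T).T
        Q = (self.T - C_vel @ self.S.T) / self.ebs
        # Prepend two zero columns for position: [p_h, p_v, v_h, v_v, 1].
        C_full = np.hstack([np.zeros((C_vel.shape[0], 2)), C_vel])
        return C_full, Q
```

For example, h = 500 s with 100-ms bins gives λ ≈ 0.99986, so each step changes C only slightly.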

During some CLDA control blocks, we used an assistive control paradigm35 to temporarily aid the participant in completing trials. The cursor velocity (\(v_t^{\mathrm{(cursor)}}\)) was a weighted average of the decoded cursor velocity (\(v_t^{{({\rm{dec}})}}\)) and the intended cursor velocity (\(v_t^{\mathrm{(int)}}\)):

\[ v_t^{\mathrm{(cursor)}} = (1-\alpha)\, v_t^{\mathrm{(dec)}} + \alpha\, v_t^{\mathrm{(int)}} \]

α was a set parameter that decayed from 0.1 to 0 in about two CLDA control blocks.
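The assisted velocity is a simple convex combination. The shape of the decay of α is not specified, so the linear schedule below is an assumption, and the names are hypothetical.

```python
import numpy as np

def blended_velocity(v_dec, v_int, alpha):
    """Cursor velocity = (1 - alpha) * decoded + alpha * intended."""
    return (1.0 - alpha) * np.asarray(v_dec, float) + alpha * np.asarray(v_int, float)

def alpha_schedule(n_bins, alpha0=0.1):
    """Assumed linear decay of alpha from alpha0 to 0 over n_bins time bins
    (e.g. roughly two CLDA control blocks)."""
    return np.linspace(alpha0, 0.0, n_bins)
```

At α = 0.1, 10% of each velocity command comes from the intended direction toward the target.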

Parameter initialization

During daily initialization sessions, the decoder parameters were initialized based on neural activity recorded during imagined control blocks. At least ten blocks (80 trials with N time bins) of data were used to initialize the CLDA sufficient statistics and, consequently, the Kalman C and Q matrices.

During ltCLDA sessions, the decoder parameters were not reinitialized. Instead, the previous session’s decoder parameters were used. At the beginning of each session, we continued to collect spontaneous activity during baseline recording blocks to account for day-to-day changes in signal statistics (see “Signal conditioning and feature extraction”).

BCI performance assessment

BCI performance was evaluated using three commonly used metrics: success rate, time to target and Fitts’ ITR. First, we computed the success rate as the percentage of trials in which the target was acquired within the time limit. The time to target was computed as the time in seconds required to move the cursor from the center target to one of the eight outer targets. For unsuccessful trials, the time to target was set to the time limit (15 or 25 s). To compare performance across different target sizes (Sz), target distances (D) and BCI experiments, we computed the Fitts’ ITR3. Unless otherwise specified, all statistics calculating performance differences used the Fitts’ ITR.
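The text does not give the exact Fitts’ ITR formula used from ref. 3, so the sketch below assumes the common Shannon form, in which the index of difficulty is log2((D + Sz)/Sz) bits and the ITR divides it by the time to target. Treat it as an illustration, not the authors' definition.

```python
import math

def fitts_itr(distance, target_size, time_to_target_s):
    """Fitts' ITR in bits/s, assuming ID = log2((D + Sz) / Sz).

    For unsuccessful trials, pass the time limit (15 or 25 s) as the time.
    """
    index_of_difficulty = math.log2((distance + target_size) / target_size)
    return index_of_difficulty / time_to_target_s

# Example: D = 7 cm, Sz = 1 cm gives 3 bits; acquired in 1.5 s -> 2 bits/s.
itr = fitts_itr(7.0, 1.0, 1.5)
```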

For cursor control with the clicker (point-and-click task), the bit rate (BR) was computed as follows56:

\[ \mathrm{BR} = \frac{\log_2(N_g - 1)\,\max(C_s - I_s,\, 0)}{T} \]

where Cs, Is, Ng and T are the number of correct selections, incorrect selections, targets on a grid and the total time for performing the task, respectively. Trials that timed out without a click did not contribute to the numerator; nevertheless, the time taken while trying to click was included in the denominator.
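The achieved bit rate translates directly into code; the function name is hypothetical. Incorrect selections are subtracted from correct ones, so a guessing strategy earns no credit, and time-outs count only through T.

```python
import math

def achieved_bit_rate(n_correct, n_incorrect, n_targets, total_time_s):
    """BR = log2(Ng - 1) * max(Cs - Is, 0) / T, in bits per second."""
    return math.log2(n_targets - 1) * max(n_correct - n_incorrect, 0) / total_time_s

# Example: 4 x 4 grid (16 targets), 30 correct and 2 incorrect clicks in 60 s.
br = achieved_bit_rate(30, 2, 16, 60.0)   # log2(15) * 28 / 60, about 1.82 bits/s
```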

Decoder convergence

We assessed decoder differences across CLDA trials by analyzing the third and fourth columns of the Kalman C matrix, which contain the weights that linearly map cursor x and y velocities, respectively, to the neural feature space. At each CLDA trial, these weights were concatenated across all 896 features, separately for the x and y velocities, to create two 896 × 1 vectors. Depending on the analysis, the reference vector was the vector from either the last CLDA trial (convergence across days; Fig. 1f) or the last CLDA trial of a given day (convergence within a day; Fig. 1e). The angle between the reference vector and every other vector was computed using the subspace() function in MATLAB, independently for the x (purple line, Fig. 1e,f) and y (green line, Fig. 1e,f) velocities. As an additional measure, we combined the weights for x and y velocities to create a PD for each feature i, given by \(\tan ^{ - 1}\frac{{C_{v_y}^i}}{{C_{v_x}^i}}\). This step created one 896 × 1 vector that contained the PD for each feature at every CLDA trial. We then computed the angle (vectorial difference) between the reference PD vector (within day and across days) and every other PD vector, again using the subspace() function in MATLAB (Supplementary Figs. 1d,e and 2).
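For single vectors, the principal angle between the one-dimensional subspaces they span reduces to arccos(|u·v|/(‖u‖‖v‖)); we believe this matches what MATLAB's subspace() reports (in radians) for column vectors. The NumPy sketch below is a stand-in, not the original analysis code. It uses arctan2 rather than a plain arctangent to keep the quadrant of each PD, which is our choice.

```python
import numpy as np

def vector_angle_deg(u, v):
    """Angle in degrees (0-90) between the lines spanned by u and v."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    cos_angle = abs(u @ v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip guards against rounding slightly above 1 before arccos.
    return float(np.degrees(np.arccos(np.clip(cos_angle, 0.0, 1.0))))

def preferred_directions(C):
    """Per-feature PD (radians) from the v_hor and v_vert columns of a
    Kalman C matrix laid out as [p_h, p_v, v_h, v_v, 1]."""
    return np.arctan2(C[:, 3], C[:, 2])

# Usage: angle between the x-velocity weights of a trial and the reference.
rng = np.random.default_rng(0)
C_ref, C_trial = rng.normal(size=(896, 5)), rng.normal(size=(896, 5))
angle_x = vector_angle_deg(C_trial[:, 2], C_ref[:, 2])
```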

Finally, to quantify across-day decoder convergence rates for individual features (Fig. 1f), we first extracted the coefficients for each feature type independently (128 per feature, one per channel), and then we computed the change in decoder angle during the first half of ltCLDA (days 0–14). We computed a linear fit to the decoder angle during this period and used the slope of this fit as a measure of convergence rate. We also compared convergence trajectories pairwise between features, or between daily and ltCLDA initialization experiments, using two-sample Kolmogorov–Smirnov (KS) tests.
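The slope-based convergence rate and the KS comparison can be sketched with `numpy.polyfit` and `scipy.stats.ks_2samp`. Exactly which samples entered each KS test is not specified, so the helper simply compares two arrays of angles; names are hypothetical.

```python
import numpy as np
from scipy.stats import ks_2samp

def convergence_rate(days, angles_deg):
    """Slope (degrees per day) of a least-squares line through decoder angles;
    more negative means faster convergence toward the reference decoder."""
    slope, _intercept = np.polyfit(days, angles_deg, 1)
    return float(slope)

def compare_trajectories(angles_a, angles_b):
    """Two-sample Kolmogorov-Smirnov test between two convergence trajectories."""
    result = ks_2samp(angles_a, angles_b)
    return result.statistic, result.pvalue

# Usage: angles over days 0-14 of ltCLDA.
days = np.arange(15)
rate = convergence_rate(days, 60.0 - 2.0 * days)   # -2 degrees per day
```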

Kalman gain convergence

Equivalent analyses of changes in decoder weights were also performed for the corresponding Kalman gains. Here, the third and fourth rows of the Kalman gain can be viewed as representations of the decoder's internal dynamics: like the C matrix, they are used to estimate cursor x and y velocities, but they are additionally weighted by the error covariance matrix and by the error in the linear relationship between neural features and cursor velocities. As in the decoder convergence analysis described above, we calculated the corresponding angles for all features across days and months (Supplementary Figs. 2b and 4b, respectively).

Estimating the R2 for imagined cursor movements

For a single session (Fig. 1g; dashed line), we used 80% of the data to build a multivariate regression model to predict Vx and Vy separately. R2 values represent the match between the predicted and actual values for the held-out data. This was repeated five times per session. For the multiple-session curve (solid line), we added sessions as indicated on the y axis. The held-out validation data were randomly interspersed within the respective combined datasets.
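A sketch of the held-out R2 estimate follows, assuming ordinary least squares with an intercept as the "multivariate regression model" and random 80/20 splits. `numpy.linalg.lstsq` with two target columns fits Vx and Vy independently, which matches predicting them separately. Names are hypothetical.

```python
import numpy as np

def cv_r2(X, V, n_repeats=5, train_frac=0.8, seed=0):
    """Held-out R^2 for Vx and Vy from neural features (sketch).

    X: (n_bins, n_features) neural features; V: (n_bins, 2) [Vx, Vy].
    Returns (n_repeats, 2): one R^2 per repeat and velocity component.
    """
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    Xa = np.hstack([X, np.ones((n, 1))])          # intercept column
    out = np.empty((n_repeats, V.shape[1]))
    for r in range(n_repeats):
        idx = rng.permutation(n)                  # random 80/20 split
        n_train = int(train_frac * n)
        train, test = idx[:n_train], idx[n_train:]
        B, *_ = np.linalg.lstsq(Xa[train], V[train], rcond=None)
        pred = Xa[test] @ B
        ss_res = np.sum((V[test] - pred) ** 2, axis=0)
        ss_tot = np.sum((V[test] - V[test].mean(axis=0)) ** 2, axis=0)
        out[r] = 1.0 - ss_res / ss_tot
    return out
```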

Tuning model analysis

To assess the plasticity and stability of individual neural feature representations on a given recording channel, we first sought to characterize each neural representation with a cosine-tuning model38. For a given day, we gathered all trials during fixed control blocks and separated trials based on the direction of the instructed target. To focus on neural activity that best reflected the user’s intentions, we used only features collected during the first 2 s of each trial15. Next, we fit the cosine-tuning model:

\[ f = B_1 + B_2 \sin(\alpha) + B_3 \cos(\alpha) \]

where f is the vector of neural features, α is the vector of target directions and the remaining variables (Bi) are the fit parameters. We then focused our analysis on PD = tan−1(B2/B3) (the PD of that feature on that day) and the goodness of fit of the cosine-tuning curve (R2).

To measure the stability of the neural representations during learning, we used the circular s.d. of PD across days. To assess whether the decoder weights were linked to tuning stability, we used two approaches. First, we measured the Pearson correlation between the stability of the PD during the final 5 d of the dataset and the magnitude of the decoder weights (M) on the final day (Fig. 3d). Second, we computed the Pearson correlation coefficient between the goodness of fit of the tuning curve (R2) and the decoder weights (M) and tracked this correlation across days. The result of this computation was used to generate Fig. 3e,f.
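Because the cosine model is linear in B1, B2 and B3, it can be fit by least squares, and the PD follows from the sine and cosine coefficients. The circular s.d. below uses the standard definition sqrt(−2 ln R̄), where R̄ is the mean resultant length. This is an illustrative sketch with hypothetical names; arctan2 is used to keep the PD quadrant.

```python
import numpy as np

def fit_cosine_tuning(target_dirs, f):
    """Fit f = B1 + B2*sin(alpha) + B3*cos(alpha) by least squares.

    Returns (B, PD in radians, R^2)."""
    X = np.column_stack([np.ones_like(target_dirs),
                         np.sin(target_dirs), np.cos(target_dirs)])
    B, *_ = np.linalg.lstsq(X, f, rcond=None)
    pred = X @ B
    r2 = 1.0 - np.sum((f - pred) ** 2) / np.sum((f - f.mean()) ** 2)
    pd = np.arctan2(B[1], B[2])   # PD = tan^-1(B2 / B3), quadrant-aware
    return B, pd, r2

def circular_sd(angles):
    """Circular s.d. sqrt(-2 ln R), R = mean resultant length."""
    R = np.abs(np.mean(np.exp(1j * np.asarray(angles, dtype=float))))
    return float(np.sqrt(-2.0 * np.log(min(R, 1.0))))  # clip guards rounding

# Usage: stability of one feature's PD over 5 days (radians).
pd_stability = circular_sd([0.50, 0.55, 0.48, 0.52, 0.51])
```

A Pearson correlation between these stability values and M across features (for example via `np.corrcoef`) would then give the first comparison described above.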

Mask experiments

Binary masks were constructed independently for each of the seven neural features. First, we extracted the x- and y-velocity decoder weights (\(C_{V_x}\) and \(C_{V_y}\)) for a given feature. Then, we computed the magnitude (M) of the weights for each channel:

\[ M = \sqrt{C_{V_x}^2 + C_{V_y}^2} \]

Next, we computed the 25th, 50th and 75th percentiles of the distribution of M. We ran the ‘top 75% mask’ experiment by masking (removing) the channels (and corresponding elements of the Kalman filter) that had weight magnitudes below the 25th percentile. To visualize the γ2 masks (Fig. 2f), channels below the threshold were set to 0 (white), and channels above the threshold were set to 1 (black). For all other features, these binary masks are shown in Supplementary Fig. 7.
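The ‘top 75% mask’ can be sketched as a percentile threshold on M, followed by removal of the masked features from C and Q. NumPy's default linear interpolation is used for the percentile, which may differ slightly from MATLAB's `prctile`; names are hypothetical.

```python
import numpy as np

def weight_magnitude(C_vx, C_vy):
    """Per-channel magnitude M of the x- and y-velocity decoder weights."""
    return np.sqrt(np.asarray(C_vx) ** 2 + np.asarray(C_vy) ** 2)

def keep_mask(M, drop_below_percentile=25):
    """Binary mask: 1 keeps a channel, 0 masks channels below the percentile."""
    return (M >= np.percentile(M, drop_below_percentile)).astype(int)

def apply_mask(C, Q, keep):
    """Drop masked features from the Kalman observation model:
    rows of C and the matching rows and columns of Q."""
    idx = np.flatnonzero(keep)
    return C[idx], Q[np.ix_(idx, idx)]
```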

Neural population representation analysis

For a given day, we gathered all trials during fixed control blocks. Instead of separating trials based on target direction, we separated individual time steps based on the participant’s intended cursor velocity (see “Cursor-control algorithm–Parameter adaptation”). We then discretized intended cursor velocities into 45° bins based on the angle of the intended cursor velocity vector.

Day-to-day correlation analysis

We measured the day-to-day similarity of the neural features. First, we computed the average of each feature on every channel for each of the binned intended cursor directions. For each combination of the 7 neural features and 8 binned intended cursor directions, this yielded fifteen 128-channel vectors, 1 for each of the 15 experimental days.

We then split these vectors into two groups based on the magnitude of the Kalman C matrix weights on the final day (M). This is similar to the method used to construct binary masks; from the original 128-channel vectors, we constructed two 32-channel vectors: one with channels corresponding to the bottom 25% of weights and the other with channels corresponding to the top 25% of weights. We next computed the pairwise correlation of these vectors across days (Supplementary Fig. 11). To assess average changes in neural representation, we used the mean of the pairwise correlation matrices across neural features and across binned cursor directions.
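The binning, quartile split and averaged day-to-day correlation can be sketched as below. Bins centered on 0°, 45°, …, 315° are an assumption (the bin edges are not stated), and the per-bin channel averages are assumed to be computed upstream into an array of shape (features, directions, days, channels). Names are hypothetical.

```python
import numpy as np

def direction_bins(v_int):
    """Assign each time step to one of eight 45-degree bins of intended
    velocity, assumed centered on 0, 45, ..., 315 degrees.

    v_int: (n_steps, 2) intended [v_hor, v_vert]. Returns ints in 0..7."""
    angle = np.mod(np.arctan2(v_int[:, 1], v_int[:, 0]), 2 * np.pi)
    return np.round(angle / (np.pi / 4)).astype(int) % 8

def split_by_weight(M):
    """Channel indices for the bottom and top 25% of weight magnitudes M."""
    order = np.argsort(M)
    q = len(M) // 4                      # 32 of 128 channels
    return order[:q], order[-q:]

def mean_day_corr(vecs, channels):
    """vecs: (n_features, n_dirs, n_days, n_channels) per-bin mean features.
    Returns the (n_days, n_days) correlation matrix averaged over features
    and direction bins, using only the given channels."""
    mats = [np.corrcoef(vecs[f, d][:, channels])   # rows (days) are variables
            for f in range(vecs.shape[0])
            for d in range(vecs.shape[1])]
    return np.mean(mats, axis=0)
```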

Click algorithm

A binary click decoder was trained to allow the participant to consciously select targets on-screen. During BCI control, at each time step, we classified neural features into two states: ‘clicking’ or ‘not clicking’. If the participant was deemed to be clicking and the cursor was on a target, we registered the click and selected that target. If the participant was not clicking, then we resumed velocity Kalman-filter cursor control. To distinguish clicking from cursor control, we trained a linear support vector machine (SVM) with an L2 loss function57. The linear SVM finds the optimal hyperplane in 768 dimensions (corresponding to all of the ECoG power features) to separate the two states. Because the solution depends on only a few support vectors, this approach is well suited to high-dimensional feature spaces such as this ECoG dataset. The inner product of the SVM hyperplane with the vector of ECoG features at any time step produces a scalar representation of the neural features (that is, a distance from the decision boundary); values below the decision threshold were classified as clicking, and values above it as not clicking. During online testing, we set the decision threshold between −0.6 and −0.5.

Parameter fitting

The decoder was trained while the participant controlled the cursor in the point-and-click task environment (typically, targets were arranged in a 3 × 3 grid of squares; Fig. 5a). Whenever the participant’s BCI-controlled cursor was within the cued target, the cursor’s position was frozen for 1.5 s, and the participant was instructed to imagine a distinct action for ‘click’ (clicking a mouse, as when confirming ‘OK’). After data acquisition, the SVM classifier was trained to differentiate ECoG features during periods when the participant was imagining clicking from periods of active BCI cursor control. Because there were many more cursor-control periods than clicking periods, the training datasets were balanced so that an equal number of periods from both states was used to train the SVM. This procedure was repeated 20 times, each time training on a random subset of cursor-control periods versus the clicking periods. The average SVM hyperplane was used for online decoding. Once initialized, the click decoder was retrained by appending clicking and not-clicking data collected during online testing. The slack parameter in the linear SVM cost function, which sets the tolerance for misclassifying the two states, was identified offline using tenfold cross-validation.
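The balanced-resampling scheme can be illustrated with a small, self-contained L2-loss (squared-hinge) linear SVM fit by gradient descent. This is a stand-in for the library solver used in the study (ref. 57), not a reproduction of it. The regularization strength, learning rate, iteration count, label convention (−1 for clicking, so that low scores mean ‘click’) and the use of the raw score w·x + b as the ‘distance’ are all assumptions, and the names are hypothetical.

```python
import numpy as np

def train_l2svm(X, y, lam=1e-2, lr=0.01, n_iter=2000):
    """Linear SVM with squared-hinge (L2) loss, fit by batch gradient descent.

    Minimizes (lam/2)*||w||^2 + mean(max(0, 1 - y*(X @ w + b))**2).
    y holds labels in {-1, +1}. Returns (w, b)."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        margin = 1.0 - y * (X @ w + b)
        active = margin > 0                         # only margin violators contribute
        coef = 2.0 * margin[active] * y[active] / n
        w -= lr * (lam * w - X[active].T @ coef)
        b += lr * coef.sum()
    return w, b

def train_click_decoder(X_move, X_click, n_rep=20, seed=0):
    """Average the hyperplanes of n_rep SVMs, each trained on all clicking
    periods versus an equally sized random subset of cursor-control periods."""
    rng = np.random.default_rng(seed)
    n = len(X_click)
    ws, bs = [], []
    for _ in range(n_rep):
        idx = rng.choice(len(X_move), size=n, replace=False)
        X = np.vstack([X_move[idx], X_click])
        y = np.concatenate([np.ones(n), -np.ones(n)])  # +1 not clicking, -1 clicking
        w, b = train_l2svm(X, y)
        ws.append(w)
        bs.append(b)
    return np.mean(ws, axis=0), float(np.mean(bs))

def is_click(features, w, b, threshold=-0.5):
    """Scores below the threshold are classified as 'clicking'."""
    return float(features @ w + b) < threshold
```

Averaging the 20 hyperplanes uses all of the abundant cursor-control data while keeping each individual fit class-balanced.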

Stacking of clickers

Once the initial click action (A) was stable across days, we trained the participant to perform another clicking action distinct from the first click action (B; Fig. 5e). Specifically, we asked the participant to imagine ‘kicking a ball’ and built the hyperplane for this decoder by training it against both cursor control and the initial click action.

Plug-and-play experiments

To test PnP control and measure decoder stability across days and months, we ran a series of tests in which we systematically adapted and tested fixed decoders (Fig. 4 and Supplementary Fig. 1f). Testing consisted of at least 24 fixed trials per session.

Computational model of BCI learning with ltCLDA

To investigate the effect of ltCLDA on the participant’s ability to control the BCI, we developed a computational model of the interplay between the participant learning to control the BCI and CLDA based on previously published work58. The overall framework of the model is detailed in Fig. 1h and further details are available in the Supplementary Note.

Controlling for overt movements

We did not observe any overt movement strategy to control the cursor. To verify that BCI control in this study was not driven by decoded activity from overt movements, we recorded two videos of the participant while he was performing the cursor-control task: one during CLDA control and the other during fixed control. First, we synchronized these videos with the cursor kinematics. To detect movement, we computed the difference in pixels frame by frame. We then computed the Pearson correlation between this motion time series and the cursor kinematics. We found no significant correlation between the two.
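The frame-differencing check can be sketched as follows, assuming grayscale frames and a cursor kinematic signal (for example, speed) already synchronized and resampled to the video frame times. `scipy.stats.pearsonr` supplies both r and its p value; the other names are hypothetical.

```python
import numpy as np
from scipy.stats import pearsonr

def motion_energy(frames):
    """Mean absolute frame-to-frame pixel difference.

    frames: (n_frames, height, width) grayscale video.
    Returns an array of length n_frames - 1."""
    # Cast to float first so uint8 differences do not wrap around.
    diffs = np.abs(np.diff(frames.astype(float), axis=0))
    return diffs.reshape(diffs.shape[0], -1).mean(axis=1)

def correlate_motion(motion, cursor_signal):
    """Pearson r and p value between motion energy and a synchronized
    cursor kinematic signal of the same length."""
    r, p = pearsonr(motion, cursor_signal)
    return float(r), float(p)
```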

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The data that support the findings of this study are available on reasonable request from the corresponding author. The data are not publicly available because they contain information that might compromise the privacy of the research participant.

Code availability

The custom MATLAB code used for analyses is available upon request from the corresponding author.

References

Schwartz, A. B. Cortical neural prosthetics. Annu. Rev. Neurosci. 27, 487–507 (2004).

Taylor, D. M., Tillery, S. I. & Schwartz, A. B. Direct cortical control of 3D neuroprosthetic devices. Science 296, 1829–1832 (2002).

Gilja, V. et al. A high-performance neural prosthesis enabled by control algorithm design. Nat. Neurosci. 15, 1752–1757 (2012).

Hochberg, L. R. et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature 442, 164–171 (2006).

Carmena, J. M. et al. Learning to control a brain–machine interface for reaching and grasping by primates. PLoS Biol. 1, E42 (2003).

Degenhart, A. D. et al. Remapping cortical modulation for electrocorticographic brain–computer interfaces: a somatotopy-based approach in participants with upper-limb paralysis. J. Neural Eng. 15, 026021 (2018).

Pandarinath, C. et al. High performance communication by people with paralysis using an intracortical brain–computer interface. eLife 6, e18554 (2017).

Wang, W. et al. An electrocorticographic brain interface in an individual with tetraplegia. PLoS ONE 8, e55344 (2013).

Aflalo, T. et al. Decoding motor imagery from the posterior parietal cortex of a tetraplegic human. Science 348, 906–910 (2015).

Wolpaw, J. R. & McFarland, D. J. Control of a two-dimensional movement signal by a noninvasive brain–computer interface in humans. Proc. Natl Acad. Sci. USA 101, 17849–17854 (2004).

Sellers, E. W., Vaughan, T. M. & Wolpaw, J. R. A brain–computer interface for long-term independent home use. Amyotroph. Lateral Scler. 11, 449–455 (2010).

Ajiboye, A. B. et al. Restoration of reaching and grasping movements through brain-controlled muscle stimulation in a person with tetraplegia: a proof-of-concept demonstration. Lancet 389, 1821–1830 (2017).

Benabid, A. L. et al. An exoskeleton controlled by an epidural wireless brain–machine interface in a tetraplegic patient: a proof-of-concept demonstration. Lancet Neurol. 18, 1112–1122 (2019).

Collinger, J. L. et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet 381, 557–564 (2013).

Ganguly, K. & Carmena, J. M. Emergence of a stable cortical map for neuroprosthetic control. PLoS Biol. 7, e1000153 (2009).

Gulati, T., Ramanathan, D. S., Wong, C. C. & Ganguly, K. Reactivation of emergent task-related ensembles during slow-wave sleep after neuroprosthetic learning. Nat. Neurosci. 17, 1107–1113 (2014).

Flint, R. D., Scheid, M. R., Wright, Z. A., Solla, S. A. & Slutzky, M. W. Long-term stability of motor cortical activity: implications for brain–machine interfaces and optimal feedback control. J. Neurosci. 36, 3623–3632 (2016).

Dayan, E. & Cohen, L. G. Neuroplasticity subserving motor skill learning. Neuron 72, 443–454 (2011).

Wodlinger, B. et al. Ten-dimensional anthropomorphic arm control in a human brain–machine interface: difficulties, solutions and limitations. J. Neural Eng. 12, 016011 (2015).

Wolpaw, J. R. et al. Independent home use of a brain–computer interface by people with amyotrophic lateral sclerosis. Neurology 91, e258–e267 (2018).

Ganguly, K., Dimitrov, D. F., Wallis, J. D. & Carmena, J. M. Reversible large-scale modification of cortical networks during neuroprosthetic control. Nat. Neurosci. 14, 662–667 (2011).

Farina, D. et al. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: emerging avenues and challenges. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 797–809 (2014).

Liu, J., Sheng, X., Zhang, D., He, J. & Zhu, X. Reduced daily recalibration of myoelectric prosthesis classifiers based on domain adaptation. IEEE J. Biomed. Health Inform. 20, 166–176 (2016).

Cordella, F. et al. Literature review on needs of upper limb prosthesis users. Front. Neurosci. 10, 209 (2016).

Phillips, B. & Zhao, H. Predictors of assistive technology abandonment. Assist. Technol. 5, 36–45 (1993).

Green, A. M. & Kalaska, J. F. Learning to move machines with the mind. Trends Neurosci. 34, 61–75 (2011).

Chao, Z. C., Nagasaka, Y. & Fujii, N. Long-term asynchronous decoding of arm motion using electrocorticographic signals in monkeys. Front. Neuroeng. 3, 3 (2010).

Degenhart, A. D. et al. Histological evaluation of a chronically-implanted electrocorticographic electrode grid in a non-human primate. J. Neural Eng. 13, 046019 (2016).

Leuthardt, E. C., Schalk, G., Wolpaw, J. R., Ojemann, J. G. & Moran, D. W. A brain–computer interface using electrocorticographic signals in humans. J. Neural Eng. 1, 63–71 (2004).

Rouse, A. G., Williams, J. J., Wheeler, J. J. & Moran, D. W. Spatial coadaptation of cortical control columns in a micro-ECoG brain–computer interface. J. Neural Eng. 13, 056018 (2016).

Chang, E. F. Towards large-scale, human-based, mesoscopic neurotechnologies. Neuron 86, 68–78 (2015).

Vansteensel, M. J. et al. Fully implanted brain–computer interface in a locked-in patient with ALS. N. Engl. J. Med. 375, 2060–2066 (2016).

Yanagisawa, T. et al. Electrocorticographic control of a prosthetic arm in paralyzed patients. Ann. Neurol. 71, 353–361 (2012).

Orsborn, A. L. et al. Closed-loop decoder adaptation shapes neural plasticity for skillful neuroprosthetic control. Neuron 82, 1380–1393 (2014).

Dangi, S., Orsborn, A. L., Moorman, H. G. & Carmena, J. M. Design and analysis of closed-loop decoder adaptation algorithms for brain–machine interfaces. Neural Comput. 25, 1693–1731 (2013).

Shenoy, K. V. & Carmena, J. M. Combining decoder design and neural adaptation in brain–machine interfaces. Neuron 84, 665–680 (2014).

Gulati, T., Guo, L., Ramanathan, D. S., Bodepudi, A. & Ganguly, K. Neural reactivations during sleep determine network credit assignment. Nat. Neurosci. 20, 1277–1284 (2017).

Georgopoulos, A. P., Schwartz, A. B. & Kettner, R. E. Neuronal population coding of movement direction. Science 233, 1416–1419 (1986).

Ajemian, R., D’Ausilio, A., Moorman, H. & Bizzi, E. Why professional athletes need a prolonged period of warm-up and other peculiarities of human motor learning. J. Mot. Behav. 42, 381–388 (2010).

Ajemian, R., D’Ausilio, A., Moorman, H. & Bizzi, E. A theory for how sensorimotor skills are learned and retained in noisy and nonstationary neural circuits. Proc. Natl Acad. Sci. USA 110, E5078–E5087 (2013).

Chestek, C. A. et al. Single-neuron stability during repeated reaching in macaque premotor cortex. J. Neurosci. 27, 10742–10750 (2007).

Stevenson, I. H. et al. Statistical assessment of the stability of neural movement representations. J. Neurophysiol. 106, 764–774 (2011).

Gallego, J. A., Perich, M. G., Chowdhury, R. H., Solla, S. A. & Miller, L. E. Long-term stability of cortical population dynamics underlying consistent behavior. Nat. Neurosci. 23, 260–270 (2020).

Murthy, V. N. & Fetz, E. E. Coherent 25- to 35-Hz oscillations in the sensorimotor cortex of awake behaving monkeys. Proc. Natl Acad. Sci. USA 89, 5670–5674 (1992).

Crone, N. E., Miglioretti, D. L., Gordon, B. & Lesser, R. P. Functional mapping of human sensorimotor cortex with electrocorticographic spectral analysis. II. Event-related synchronization in the gamma band. Brain 121(Pt 12), 2301–2315 (1998).

Hall, T. M., de Carvalho, F. & Jackson, A. A common structure underlies low-frequency cortical dynamics in movement, sleep and sedation. Neuron 83, 1185–1199 (2014).

Ramanathan, D. S. et al. Low-frequency cortical activity is a neuromodulatory target that tracks recovery after stroke. Nat. Med. 24, 1257–1267 (2018).

Buzsaki, G., Anastassiou, C. A. & Koch, C. The origin of extracellular fields and currents—EEG, ECoG, LFP and spikes. Nat. Rev. Neurosci. 13, 407–420 (2012).

Lemke, S. M., Ramanathan, D. S., Guo, L., Won, S. J. & Ganguly, K. Emergent modular neural control drives coordinated motor actions. Nat. Neurosci. 22, 1122–1131 (2019).

Downey, J. E. et al. Blending of brain–machine interface and vision-guided autonomous robotics improves neuroprosthetic arm performance during grasping. J. Neuroeng. Rehabil. 13, 28 (2016).

Chestek, C. A. et al. Long-term stability of neural prosthetic control signals from silicon cortical arrays in rhesus macaque motor cortex. J. Neural Eng. 8, 045005 (2011).

Jarosiewicz, B. et al. Virtual typing by people with tetraplegia using a self-calibrating intracortical brain–computer interface. Sci. Transl. Med. 7, 313ra179 (2015).

Hochberg, L. R. et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature 485, 372–375 (2012).

Moses, D. A., Leonard, M. K., Makin, J. G. & Chang, E. F. Real-time decoding of question-and-answer speech dialogue using human cortical activity. Nat. Commun. 10, 3096 (2019).

Brainard, D. H. The psychophysics toolbox. Spat. Vis. 10, 433–436 (1997).

Kao, J. C., Nuyujukian, P., Ryu, S. I. & Shenoy, K. V. A high-performance neural prosthesis incorporating discrete state selection with hidden Markov models. IEEE Trans. Biomed. Eng. 64, 935–945 (2017).

Fan, R., Chang, K., Hsieh, C., Wang, X. & Lin, C. LIBLINEAR: a library for large linear classification. J. Mach. Learn. Res. 9, 1871–1875 (2008).

Heliot, R., Ganguly, K., Jimenez, J. & Carmena, J. M. Learning in closed-loop brain–machine interfaces: modeling and experimental validation. IEEE Trans. Syst. Man Cybern. B Cybern. 40, 1387–1397 (2010).

Acknowledgements

This work was funded by the National Institutes of Health (NIH) through the NIH Director’s New Innovator Award Program (grant no. 1 DP2 HD087955). The development of the signal processing and decoding approach used in this study was supported by the Doris Duke Charitable Foundation (grant no. 2013101). The authors especially thank our participant ‘Bravo-1’ for his unwavering commitment to this project. We also thank C.C. Rodriguez for assisting with data collection and D.A. Moses, J.R. Liu and M. Dougherty for assistance with the clinical trial.

Author information

Contributions

D.S. established the decoder and real-time setup for cursor control. D.S., R.A., N.F.H. and N.N. collected data. R.A. and D.S. performed data analysis. N.F.H. specifically conducted the analysis of ECoG power. N.N. established the decoder framework for clickers, designed and implemented the ltCLDA-BCI learning simulation and wrote the Supplementary Note. A.T.C. conducted patient recruitment and patient care. E.F.C. performed the surgical implantation and participated in patient care. K.G. supervised all aspects of this study. D.S., R.A. and K.G. wrote the manuscript. All authors read and revised the manuscript.

Ethics declarations

Competing interests

E.F.C., K.G., N.N. and A.T.C. receive some salary support from Facebook Reality Labs for a separate project on speech decoding. The funders had no role in study design, data collection, data analysis or the contents of this paper.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary Figs. 1–12, Supplementary Table 1, Supplementary Video Legends 1 and 2 and Supplementary Note

Supplementary Video 1

Example of BCI cursor control during the center-out task. This video shows performance during a fixed block after ltCLDA; all eight targets are shown.

Supplementary Video 2

Example of the point-and-click task using the ECoG-based BCI. The participant neurally controlled the cursor to reach an instructed target in a 4 × 4 grid; clicking on that target counted as a correct selection. The instructed target was illuminated green. After each trial, the next instructed target was randomly selected from the grid and immediately illuminated. For this example block, the bit rate was 0.92.

About this article

Cite this article

Silversmith, D.B., Abiri, R., Hardy, N.F. et al. Plug-and-play control of a brain–computer interface through neural map stabilization. Nat Biotechnol 39, 326–335 (2021). https://doi.org/10.1038/s41587-020-0662-5

DOI: https://doi.org/10.1038/s41587-020-0662-5
