Abstract

In ophthalmologic practice, retinal images are routinely obtained to diagnose and monitor primary eye diseases and systemic conditions affecting the eye, such as diabetic retinopathy. Recent studies have shown that biomarkers on retinal images, for example, retinal blood vessel density or tortuosity, are associated with cardiac function and may identify patients at risk of coronary artery disease. In this work we investigate the use of retinal images, alongside relevant patient metadata, to estimate left ventricular mass and left ventricular end-diastolic volume, and subsequently, predict incident myocardial infarction. We trained a multichannel variational autoencoder and a deep regressor model to estimate left ventricular mass (mean difference (limits of agreement): 4.4 (–32.30, 41.1) g) and left ventricular end-diastolic volume (3.02 (–53.45, 59.49) ml), and to predict risk of myocardial infarction (AUC = 0.80 ± 0.02, sensitivity = 0.74 ± 0.02, specificity = 0.71 ± 0.03), using only retinal images and demographic data. Our results indicate that patients at high risk of future myocardial infarction could be identified from the retinal imaging available in every optician and eye clinic.


Main

Cardiovascular diseases (CVDs) represent a major cause of death and socio-economic burden globally. In 2015 alone, there were ~18 million CVD-related deaths worldwide1. Identification and timely treatment of CVD risk factors is a key strategy for reducing CVD prevalence in populations and for risk modulation in individuals. Conventionally, CVD risk is estimated using demographic/clinical parameters such as age, sex, ethnicity, smoking status, family history, history of hyperlipidaemia, diabetes mellitus or hypertension2. Imaging tests such as coronary computed tomography, echocardiography and cardiovascular magnetic resonance (CMR) help further stratify patient risk by assessing coronary calcium burden, myocardial scar burden, ischaemia, cardiac chamber size and function.

Cardiovascular imaging is usually performed in secondary care and is relatively expensive, limiting its availability in underdeveloped and developing countries. An alternative approach to risk stratification is to use the information available from non-cardiac investigations. Retinal microvascular abnormalities such as generalized arteriolar narrowing, focal arteriolar narrowing and arteriovenous nicking have shown strong associations with systemic and cardiovascular diseases such as diabetes mellitus, hypertension and coronary artery disease3,4. Retinal images (including details of principal blood vessels) are now routinely acquired in optometric and ophthalmologic practice and are relatively inexpensive. Retinal images could, therefore, be a potential cost-effective screening tool for cardiovascular disease. Beyond risk prediction, retinal images have also been associated with cardiovascular phenotypes such as left ventricular dimensions and mass4. Poplin et al. showed for the first time that retinal images allowed prediction of cardiovascular risk factors such as age, gender, smoking status, systolic blood pressure and major adverse cardiac events5, driven by anatomical features such as the optic disc or retinal blood vessels. This highlighted the potential for using retinal images to assess risk of cardiovascular diseases.

We explore new ways to extend this line of research by learning a combined representation of retinal images and CMR images to assess cardiac function and predict myocardial infarction (MI) events. This is supported by the work of Cheung et al.6, who highlighted the adverse effects of blood pressure and cardiac dysfunction on the retinal microvasculature. Similarly, Tapp et al.7 established associations between retinal vessel morphology and cardiovascular disease risk factors and/or CVD outcomes using multilevel linear regression. That study assessed the relationships between retinal vessel morphometry, blood pressure and arterial stiffness index, furthering our understanding of preclinical disease processes and the interplay between microvascular and macrovascular diseases. Using retinal fundus images, Gargeya et al.8 and Qummar et al.9 used deep learning to detect diabetic retinopathy and to classify its grades, respectively. These studies demonstrate the efficacy of deep learning for quantifying and stratifying cardiovascular disease risk factors from retinal images. Other studies, such as Pickhardt et al.10, used whole-body CT scans and deep learning to predict future adverse cardiovascular events, further supporting the hypothesis that alternative imaging modalities covering multiple organs can help assess cardiovascular health and predict CVD risk.

As markers of cardiovascular disease often manifest in the retina, images of this organ could help identify future cardiovascular events such as left ventricular hypertrophy or MI. This work proposes a novel method that estimates cardiac indices and predicts incident MI from retinal images and demographic data from the UK Biobank (UKB). For MI, we only considered events that occurred after the retinal image was taken. Our approach uses a multichannel variational autoencoder trained on two channels of information: retinal and CMR images from the same subject. This method combines features extracted from both imaging modalities in a common latent space, which can subsequently be used to estimate relevant quantities from just one channel of information (that is, retinal images) and demographic data. In clinical practice, cardiac indices estimated from retinal images could be used to refer patients at risk of CVD to cardiologists following a routine ophthalmic check, or MI could be predicted directly from retinal images and minimal demographic data.

Patient datasets and demographic data

This study used CMR images (end-diastolic short-axis view), retinal images and demographic data from the UKB cohort (under access application no. 11350) to train and validate the proposed method. When this method was developed, 39,705 participants had undergone CMR imaging using a clinical wide-bore 1.5 T MRI system (MAGNETOM Aera, Syngo Platform VD13A, Siemens Healthcare)11, and 84,760 participants had undergone retinal imaging using a Topcon 3D OCT 1000 Mark 2 (45° field of view, centred to include both optic disc and macula)12. Only participants with CMR images, retinal images and demographic data were selected to train our proposed method, totalling 11,383 participants.

Of these 11,383 participants, 676 were excluded because of a history of conditions known to affect left ventricular mass: diabetes (336 subjects), past MI (293 subjects), cardiomyopathy (14 subjects) or frequent strenuous exercise routines (33 subjects).

After excluding participants with the conditions above, we used a deep learning method for quality assessment13 to select retinal images of sufficient quality according to pre-specified criteria. This quality assessment method was trained and validated on EyePACS14, a well-known public dataset released on the Kaggle platform for automatic diabetic retinopathy detection. Following quality assessment, 5,663 participants were identified as having good-quality retinal images. We followed the RECORD statement for reporting observational data; a STROBE flow diagram showing the exclusion criteria is presented in Fig. 1. Subsequent preprocessing steps for retinal and CMR images (that is, ROI detection15) are presented in Supplementary Section 1.
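The selection order described above (participants with all three data sources, then clinical exclusions, then the retinal quality filter) can be sketched with set operations. This is a minimal illustration, assuming each source can be reduced to a collection of participant IDs; the helper name and the toy IDs are hypothetical and are not part of the UKB extraction code.

```python
def select_cohort(cmr_ids, retinal_ids, demographic_ids, excluded_ids, good_quality_ids):
    """Hypothetical helper mirroring the cohort selection order described in the text."""
    # Step 1: keep participants with CMR images, retinal images and demographic data.
    with_all_sources = set(cmr_ids) & set(retinal_ids) & set(demographic_ids)
    # Step 2: drop participants with conditions known to affect LV mass.
    eligible = with_all_sources - set(excluded_ids)
    # Step 3: keep only participants whose retinal images passed quality assessment.
    return sorted(eligible & set(good_quality_ids))


# Toy example with made-up IDs (not UKB data).
cohort = select_cohort(
    cmr_ids=[1, 2, 3, 4, 5],
    retinal_ids=[2, 3, 4, 5, 6],
    demographic_ids=[2, 3, 4, 5],
    excluded_ids=[3],            # e.g. a participant with a past MI
    good_quality_ids=[2, 4, 6],
)
print(cohort)  # [2, 4]
```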

Regarding the demographic data, a combination of variables derived from the patient’s history and blood samples, such as sex, age, gender, HbA1c, systolic and diastolic blood pressure, smoking habit, alcohol consumption, glucose and body mass index, was also used as input to train and test the proposed method. Although we excluded participants with diabetes, we retained HbA1c because multiple studies have shown a positive correlation between HbA1c and cardiovascular mortality even in subjects without a history of diabetes16,17,18. Furthermore, in ref. 19 the authors showed a strong association between HbA1c and left ventricular mass: a 1% rise in HbA1c level was associated with a 3.0 g increase in left ventricular mass in elderly subjects. All of these variables are summarized in Supplementary Table 2.

Aside from demographic data, we also used the left ventricular end-diastolic volume (LVEDV) and left ventricular mass (LVM) extracted directly from the CMR images. These cardiac indices were computed from the manual delineations20 generated using the commercially available cvi42 post-processing software, and segmentations generated automatically using the method proposed by Attar and colleagues21. More details about how these values were used are outlined in the ‘Experiments and results’ section.

Age-Related Eye Disease Study database

The Age-Related Eye Disease Study (AREDS) was a multicenter prospective study of the clinical course of age-related macular degeneration (AMD) and age-related cataract, as well as a phase III randomized controlled trial designed to assess the effects of nutritional supplements on AMD and cataract progression22,23. Institutional review board approval was obtained at each clinical site and written informed consent for the research was obtained from all study participants. The research was conducted in accordance with the Declaration of Helsinki. Further information on AREDS and associated demographic data is included in Supplementary Section 2.

Deep learning approach

Our method is based on the multichannel variational autoencoder (mcVAE)24 and a deep regression network (ResNet50; https://doi.org/10.5281/zenodo.5716142). For the mcVAE, we designed two encoder/decoder pairs with a shared latent space, each pair trained on one of the two data channels (retinal and CMR images). The full diagram of the proposed method is presented in Fig. 2, and the encoders and decoders are further described in Supplementary Table 3.

This system comprises two main components: a mcVAE and a deep regressor network. During Stage I, a joint latent space is created with two channels: retinal and cardiac magnetic resonance images. Then, during Stage II, a deep regressor is trained on the reconstructed CMR images plus demographic data to estimate LVM and LVEDV. Figure reproduced with permission from UK Biobank.

Antelmi et al.24 showed that, with a sparse version of the mcVAE, the evidence lower bound generally reaches its maximum at convergence when the number of latent dimensions coincides with the true number used to generate the data. Consequently, we used the sparse version of the mcVAE and trained a sparse latent space z for both channels of information. A detailed explanation of how the mcVAE works, and of how it differs from a vanilla VAE25,26,27,28, is provided in Supplementary Section 3.
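To make the objective concrete, the sketch below computes the negative lower bound of a two-channel sparse mcVAE for one batch. It is a NumPy illustration under stated assumptions, not the authors' implementation (their PyTorch code is at the DOI given under Code availability): the linear encoders/decoders and the unit-variance Gaussian likelihood are placeholders, and the sparsity follows our reading of Antelmi et al.24, in which each encoder outputs only a mean μ, the posterior variance is α·μ² with a dropout vector α shared across channels, and the KL term uses the variational-dropout approximation of Molchanov et al. (2017). Each channel's latent sample must reconstruct every channel, which is what ties the retinal and CMR representations together.

```python
import numpy as np

# Constants of the Molchanov et al. (2017) approximation to the variational-dropout KL.
K1, K2, K3 = 0.63576, 1.87320, 1.48695


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def kl_sparse(log_alpha):
    """Approximate KL(q(z|x) || log-uniform prior) per latent dimension.

    It depends only on log_alpha; dimensions with large alpha (high dropout)
    cost almost nothing, which is what lets unneeded dimensions be pruned.
    """
    return K1 - K1 * sigmoid(K2 + K3 * log_alpha) + 0.5 * np.log1p(np.exp(-log_alpha))


def gaussian_nll(x, x_hat):
    """Per-sample negative log-likelihood under N(x_hat, I) (unit variance assumed)."""
    return 0.5 * np.sum((x - x_hat) ** 2 + np.log(2.0 * np.pi), axis=-1)


def sparse_mcvae_loss(channels, encoders, decoders, log_alpha, rng):
    """Negative lower bound, averaged over channels and over samples in the batch.

    channels : list of arrays, one per channel, each (batch, n_features_c)
    encoders : list of (n_features_c, latent_dim) matrices giving the latent mean
    decoders : list of (latent_dim, n_features_c) matrices
    log_alpha: (latent_dim,) log dropout parameters shared by all channels
    """
    total = 0.0
    for x_c, w_enc in zip(channels, encoders):
        mu = x_c @ w_enc
        std = np.exp(0.5 * log_alpha) * np.abs(mu)       # variance = alpha * mu**2
        z = mu + std * rng.standard_normal(mu.shape)     # reparameterised sample
        # Cross-reconstruction: z inferred from channel c must explain every channel d.
        recon = sum(
            gaussian_nll(x_d, z @ w_dec).mean()
            for x_d, w_dec in zip(channels, decoders)
        )
        total += recon + kl_sparse(log_alpha).sum()
    return total / len(channels)


# Toy usage with random stand-ins for flattened retinal and CMR features.
rng = np.random.default_rng(0)
retina = rng.standard_normal((8, 20))
cmr = rng.standard_normal((8, 12))
enc = [0.1 * rng.standard_normal((20, 4)), 0.1 * rng.standard_normal((12, 4))]
dec = [0.1 * rng.standard_normal((4, 20)), 0.1 * rng.standard_normal((4, 12))]
print(sparse_mcvae_loss([retina, cmr], enc, dec, np.zeros(4), rng))
```

In training, the encoder/decoder weights and `log_alpha` would be optimised jointly by gradient descent; that loop is omitted here.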

Once the mcVAE was trained, we used the learned latent space to train the deep regressor (ResNet50). Specifically, the regressor was trained on CMR images reconstructed from the retinal images, together with the demographic data (Stage II in Fig. 2).
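At inference time only the retinal channel is needed: the retinal encoder maps an image into the shared latent space, the CMR decoder turns that code into a synthetic CMR image, and the regressor combines it with demographic data. The sketch below shows only this data flow. The three callables are hypothetical stand-ins (random linear maps) for the trained mcVAE encoder, mcVAE decoder and ResNet50 regressor; using the latent mean rather than a sample, and concatenating demographics with flattened image features, are assumptions made for brevity, not a description of the published architecture.

```python
import numpy as np


def estimate_cardiac_indices(retina, demographics, retina_encoder, cmr_decoder, regressor):
    """Retinal image -> shared latent code -> reconstructed CMR -> (LVM, LVEDV)."""
    z = retina_encoder(retina)            # latent mean from the retinal channel only (assumed)
    cmr_hat = cmr_decoder(z)              # CMR image generated through the shared latent space
    return regressor(cmr_hat, demographics)


# Hypothetical stand-ins for the trained networks (random linear maps).
rng = np.random.default_rng(0)
w_enc = 0.01 * rng.standard_normal((64 * 64, 16))
w_dec = 0.01 * rng.standard_normal((16, 32 * 32))
w_reg = 0.01 * rng.standard_normal((32 * 32 + 3, 2))


def retina_encoder(x):
    return x.reshape(len(x), -1) @ w_enc


def cmr_decoder(z):
    return (z @ w_dec).reshape(len(z), 32, 32)


def regressor(cmr, demo):
    return np.hstack([cmr.reshape(len(cmr), -1), demo]) @ w_reg


retinas = rng.random((5, 64, 64))         # five toy grayscale "retinal images"
demographics = rng.random((5, 3))         # e.g. age, sex, systolic BP (toy values)
indices = estimate_cardiac_indices(retinas, demographics, retina_encoder, cmr_decoder, regressor)
print(indices.shape)  # (5, 2): one (LVM, LVEDV) pair per participant
```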

Prediction of incident MI

We evaluate the ability of the proposed approach to estimate LVM and LVEDV from the retinal images and demographic data. As an additional experiment, we predict MI using logistic regression in two settings: (1) using the demographic data alone; and (2) using LVM/LVEDV estimated from the retinal images and demographic data, combined with the demographic data. We chose logistic regression for its interpretability, as it allows us to compare the weights/coefficients of the variables contributing to the final prediction (see Extended Data Fig. 3). For this comparison, we used only participants not used to train the system, that is, 73,477 of the 84,760 participants with retinal images. Of these 73,477, 2,954 subjects have a record of MI; however, we only consider cases where MI occurred after the retinal images were taken, which results in 992 MI cases and 70,523 no-MI cases.
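Because the input variables are on different scales (years, mmHg, grams), comparing logistic-regression coefficients is most meaningful after standardisation. The NumPy sketch below fits an L2-regularised logistic regression by gradient descent on standardised inputs. The learning rate, iteration count, penalty and feature names are illustrative assumptions, the data are synthetic, and the study's own fitting procedure may differ (for example, a library solver such as scikit-learn's `LogisticRegression`).

```python
import numpy as np


def fit_logistic(X, y, lr=0.1, n_iter=2000, l2=1e-3):
    """L2-regularised logistic regression fitted by batch gradient descent.

    Inputs are standardised so that coefficient magnitudes are comparable.
    Returns (weights, bias, feature means, feature standard deviations).
    """
    mean, std = X.mean(axis=0), X.std(axis=0)
    Xs = (X - mean) / std
    w, b = np.zeros(Xs.shape[1]), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Xs @ w + b)))       # predicted MI probability
        w -= lr * (Xs.T @ (p - y) / len(y) + l2 * w)  # gradient of mean log-loss + penalty
        b -= lr * np.mean(p - y)
    return w, b, mean, std


def predict_proba(X, w, b, mean, std):
    return 1.0 / (1.0 + np.exp(-(((X - mean) / std) @ w + b)))


# Synthetic example: the outcome depends mostly on the third feature.
rng = np.random.default_rng(0)
names = ["age", "systolic_bp", "estimated_lvm"]       # hypothetical feature names
X = rng.standard_normal((2000, 3)) * [8.0, 15.0, 30.0] + [60.0, 135.0, 140.0]
logit = 0.3 * (X[:, 0] - 60) / 8 + 0.2 * (X[:, 1] - 135) / 15 + 1.5 * (X[:, 2] - 140) / 30
y = (rng.random(2000) < 1.0 / (1.0 + np.exp(-logit))).astype(float)

w, b, mean, std = fit_logistic(X, y)
for name, coef in sorted(zip(names, w), key=lambda t: -abs(t[1])):
    print(f"{name:>14s}: {coef:+.2f}")
```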

Because the data are highly imbalanced, we randomly undersampled the no-MI cases to match the number of MI cases (992). Past studies29 have highlighted that undersampling the majority class is a robust solution when the minority class contains hundreds of cases. After undersampling, we performed tenfold cross-validation using logistic regression to predict MI in the two scenarios described previously (that is, using only demographic data, and using demographic data plus LVM/LVEDV).
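The class-balancing and cross-validation steps can be sketched as follows. This is a minimal NumPy illustration with hypothetical helper names: random undersampling of the majority class without replacement matches the description above, while splitting each class evenly across folds (stratification) is our assumption, since the text does not state how the folds were formed.

```python
import numpy as np


def undersample_majority(y, rng):
    """All minority (MI) indices plus an equal-sized random subset of majority indices."""
    pos = np.flatnonzero(y == 1)
    neg = np.flatnonzero(y == 0)
    neg_keep = rng.choice(neg, size=len(pos), replace=False)
    return rng.permutation(np.concatenate([pos, neg_keep]))


def stratified_folds(y, k, rng):
    """Yield (train_idx, test_idx) pairs, spreading each class evenly over k folds."""
    folds = [[] for _ in range(k)]
    for cls in np.unique(y):
        idx = rng.permutation(np.flatnonzero(y == cls))
        for i, chunk in enumerate(np.array_split(idx, k)):
            folds[i].extend(chunk.tolist())
    for i in range(k):
        test_idx = np.array(sorted(folds[i]))
        train_idx = np.array(sorted(j for f in folds[:i] + folds[i + 1:] for j in f))
        yield train_idx, test_idx


# Counts from the held-out UKB participants: 992 incident MI, 70,523 no-MI.
rng = np.random.default_rng(0)
y_all = np.concatenate([np.ones(992, dtype=int), np.zeros(70_523, dtype=int)])
balanced = undersample_majority(y_all, rng)
y_bal = y_all[balanced]
print(len(balanced), y_bal.sum())  # 1984 992

for train_idx, test_idx in stratified_folds(y_bal, 10, rng):
    pass  # fit on balanced[train_idx], evaluate on balanced[test_idx]
```

Undersampling once before cross-validation, as described in the text, means every fold sees the same balanced subset; repeating the undersampling with different seeds is a common way to check that results do not hinge on which no-MI cases were drawn.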

Experiments and results

In this study we jointly trained a mcVAE and a deep regressor network on CMR images, retinal images and demographic data from participants in the UKB cohort. In the first experiment, we used manual and automatic delineations of the CMR images as ground truth to estimate LVM and LVEDV from retinal images. The manual delineations were produced by a team of eight experts using cvi42 (ref. 30), whereas the automatic delineations were obtained with the method proposed by Attar and colleagues31. The main motivation for this set of experiments is to perform a fair comparison between our system and state-of-the-art methods, as all published methods that use the UKB cohort were trained on the aforementioned manual delineations. Results of this experiment are presented as Bland–Altman and Pearson’s correlation plots (see Fig. 3a).

a, Bland–Altman and correlation plots for estimated LVM and LVEDV using manual annotations on CMR images. b, Bland–Altman and correlation plots for estimated LVM and LVEDV using automatic annotations computed with the method of Attar and colleagues31. GT stands for ground truth or expert manual measurements. In Case A, we used all the available subjects to train and test our method. The solid line represents the linear regression fit, and the dotted line represents the line of identity.

Figure 3a shows the correlation between the LVM (r = 0.65) and LVEDV (r = 0.45) values estimated using our approach and those computed manually from the CMR images using cvi42. These results are consistent with earlier clinical findings (refs. 3,4,32) suggesting that retinal images could potentially be used to quantify cardiac parameters.
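For reference, the statistics behind the Bland–Altman and correlation plots can be computed as below. This is a standard-formula sketch in plain Python, not the plotting code used for Fig. 3: the bias is the mean of (estimated − reference), and the limits of agreement are the bias ± 1.96 standard deviations of those differences. The values quoted in the abstract, such as 4.4 (–32.30, 41.1) g, are symmetric about the first number, consistent with this bias (limits of agreement) form.

```python
import math
from statistics import mean, stdev


def bland_altman(estimated, reference):
    """Return (bias, lower limit, upper limit) of agreement between two methods."""
    diffs = [e - r for e, r in zip(estimated, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)                      # sample standard deviation (n - 1)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def pearson_r(x, y):
    """Pearson's correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Toy LVM values in grams (not study data).
lvm_est = [110.0, 125.0, 98.0, 140.0, 130.0]
lvm_ref = [100.0, 120.0, 100.0, 135.0, 128.0]
bias, lower, upper = bland_altman(lvm_est, lvm_ref)
print(f"bias {bias:.1f} g, limits of agreement ({lower:.1f}, {upper:.1f}) g")
print(f"r = {pearson_r(lvm_est, lvm_ref):.2f}")
```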

Aside from the Bland–Altman and correlation plots, we also compared our proposed method with state-of-the-art cardiac quantification methods that use CMR images (Bai and colleagues33), including the Siemens Inline VF system (see Supplementary Table 4). The Siemens Inline VF system was the first commercially available fully automatic left ventricular analysis tool34; its D13 and E11C versions are currently used as a baseline for comparison against manual delineation30.

Bland–Altman plots and Pearson’s correlation were computed for the participants with automatic annotations for LVM and LVEDV (see Fig. 3b).

Figure 3b shows a substantial correlation between the LVM and LVEDV estimated by the proposed method and the values computed with Attar’s algorithm. Training our method on more images reduces the error (see Supplementary Table 4, Exp. 2B).

Our approach can estimate LVM and LVEDV from retinal images and demographic data, and these estimates can improve the prediction of future MI events. To demonstrate this, we compare MI prediction in two settings: (1) using only demographic data and (2) using LVM/LVEDV (predicted using our approach) plus demographic data. To do so, we performed tenfold cross-validation with a logistic regression model on subjects not previously used for training (see Fig. 4).

ROC curves obtained for MI prediction using only demographic data (left): accuracy, 0.66 ± 0.03; sensitivity, 0.70 ± 0.04; specificity, 0.64 ± 0.03; precision, 0.64 ± 0.03; F1 score, 0.66 ± 0.03. ROC curves obtained for MI prediction using LVM, LVEDV (derived from the proposed pipeline) and demographic data (right): accuracy, 0.74 ± 0.03; sensitivity, 0.74 ± 0.02; specificity, 0.71 ± 0.03; precision, 0.73 ± 0.05; F1 score, 0.74 ± 0.03.

Figure 4 (right) shows a notable increase in the area under the ROC curve when using LVM/LVEDV plus demographics to predict MI.
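The metrics reported in the Fig. 4 caption follow the usual confusion-matrix definitions, and the AUC equals the probability that a randomly chosen MI case receives a higher score than a randomly chosen no-MI case. The plain-Python sketch below computes them for a single fold; the 0.5 decision threshold is an assumption, and the ± values in the caption are presumably the spread of such per-fold values across the ten folds.

```python
def classification_metrics(y_true, y_score, threshold=0.5):
    """Confusion-matrix metrics at a fixed (assumed) decision threshold."""
    y_pred = [1 if s >= threshold else 0 for s in y_score]
    pairs = list(zip(y_true, y_pred))
    tp = pairs.count((1, 1))
    tn = pairs.count((0, 0))
    fp = pairs.count((0, 1))
    fn = pairs.count((1, 0))
    sensitivity = tp / (tp + fn)            # recall on MI cases
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * sensitivity / (precision + sensitivity) if precision + sensitivity else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }


def roc_auc(y_true, y_score):
    """AUC via pairwise comparison of positive and negative scores (ties count 1/2).

    Quadratic in the number of cases; fine for a few thousand subjects per fold.
    """
    pos = [s for s, t in zip(y_score, y_true) if t == 1]
    neg = [s for s, t in zip(y_score, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
y_score = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, y_score))              # 0.75
print(classification_metrics(y_true, y_score))
```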

Beyond predicting MI, we also compared the estimated LVM/LVEDV values between the MI and no-MI cases using a t-test. The null hypothesis is that the mean estimated LVM (or LVEDV) is the same in both groups; the alternative hypothesis is that the means differ. We considered differences statistically significant at P < 0.05. We obtained P-values of 1.43 × 10⁻⁵⁷ for LVM and 2.32 × 10⁻⁵² for LVEDV; we therefore rejected the null hypothesis, indicating that the estimated LVM/LVEDV values differ between MI and no-MI cases.
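The text does not specify which t-test variant was used. The sketch below assumes Welch's unequal-variance test, a common choice when group sizes may differ widely (the held-out UKB set has 992 MI and 70,523 no-MI cases). With degrees of freedom in the hundreds or more, the t distribution is essentially normal, so the two-sided P-value here is approximated with the normal tail via `math.erfc`; for exact t-distribution P-values, `scipy.stats.ttest_ind(a, b, equal_var=False)` implements the same test.

```python
import math
from statistics import mean, variance


def welch_t_test(a, b):
    """Welch's t statistic, its degrees of freedom and an approximate two-sided P-value.

    The P-value uses the standard normal tail, which is accurate only when the
    Welch degrees of freedom are large (hundreds or more).
    """
    va = variance(a) / len(a)          # squared standard error of mean(a)
    vb = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    p_two_sided = math.erfc(abs(t) / math.sqrt(2.0))
    return t, df, p_two_sided


# Toy estimated-LVM values in grams for two groups (not study data).
mi = [150.0 + (i % 25) for i in range(300)]
no_mi = [135.0 + (i % 25) for i in range(300)]
t, df, p = welch_t_test(mi, no_mi)
print(f"t = {t:.2f}, df = {df:.0f}, P ≈ {p:.2e}")
```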

Additional experiments evaluating the Fréchet inception distance35 score for reconstructed CMR images, and the impact of training set size, retinal image size and different demographic variables on the proposed algorithm, are presented in Supplementary Section 5.

External validation

Finally, we carried out external validation using the optimal model identified in the preceding experiments. This validation was conducted on the AREDS dataset using retinal images and the demographic data presented in Supplementary Table 1. This dataset comprises 3,010 participants in total: 180 participants with MI events and 2,830 without.

We used the mcVAE trained on all 5,663 available retinal images at a size of 128 × 128 pixels. Because the demographic data available in AREDS differ from those in the UKB, we trained our method using only the metadata available in AREDS: systolic blood pressure, diastolic blood pressure, smoking status, alcohol consumption status, body mass index, age and gender. The demographic variable ‘alcohol consumption’ was converted to a continuous variable, expressed in grams consumed per day. The remaining variables are coded consistently across the two datasets.

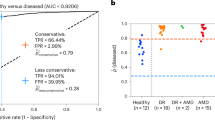

Because the AREDS dataset contains detailed information on AMD, we performed three analyses, each excluding participants with different levels of AMD, to show the impact AMD has on MI prediction. The results are shown in Fig. 5 and Table 1.

Discussion

This study demonstrates that retinal images and demographic data could be of great value for estimating cardiac indices such as LVM and LVEDV, by jointly learning a latent space shared by retinal and CMR images. To the best of our knowledge, no previous work has used a multimodal approach with retinal and CMR images to learn a joint latent space and subsequently estimate cardiac indices using only retinal images and demographic data. Our results are consistent with past research demonstrating strong associations between biomarkers in the retina and the heart3,4,32, and with a recent study in which cardiovascular risk factors such as age, gender and blood pressure were quantified using only retinal images5.

Using the proposed method to estimate LVM and LVEDV, we can identify patients at risk of future MI or similar adverse cardiovascular events at routine ophthalmic visits, enabling referral for further examination. Estimated LVM/LVEDV could also provide insights into pathological cardiac remodelling or hypertension at no extra cost. For example, if an ophthalmologist keeps a record of these indices for a patient over time, they could refer the patient to a cardiologist for further assessment if a marked increase in LVM or LVEDV is detected. The ophthalmologist could even be bypassed with automated risk detection if patients consented to share their data on the cloud.

Figure 3 shows that our trained model is less accurate at estimating higher LVM and LVEDV values. Two main factors are involved: (1) the proportion of subjects with elevated LVM/LVEDV available for training (with retinal images) is limited; and (2) retinal images do not contain all of the information needed to assess cardiac function.

We chose to predict LVM and LVEDV as an intermediate step rather than directly predicting future MI events because: (1) this ensures that the developed approach is flexible in its clinical application, as it could be used not just to predict MI, but to assess left ventricular function in general; (2) using LVM and LVEDV enhances the explainability of predictions, as evidenced by the analysis of the logistic regression coefficients presented in the Supplementary Information.

In the external validation analyses, we presented detailed data on the relative performance of the algorithm in predicting incident MI according to the presence and severity of AMD in the retinal images. Performance was highest in the absence of AMD and seemed to decrease as individuals with increasingly severe AMD were included. In its most severe form (that is, neovascular AMD), AMD can cause extensive fibrosis, haemorrhage and exudation across much of the macula, which is likely to obliterate the relevant signals employed by the algorithm for predicting incident MI. Even in less severe forms, such as early and intermediate AMD, substantial alterations to macular anatomy are observed, including drusen and pigmentary abnormalities36, which may partially degrade or interfere with the relevant signals. If, as we might assume, the most important retinal signals for MI prediction are encoded in the retinal vessels5, it is relevant that even early and intermediate AMD are accompanied by substantial changes in the quantitative and morphological features of the retinal vasculature37. Overall, the presence of retinal disease such as AMD, particularly in its more severe forms, presumably interferes with the ability of the algorithm to infer characteristics of the systemic circulation from the retinal circulation.

The AUC scores obtained using our approach for the UKB and AREDS populations have to be considered in the context of a second referral setting at an optician/eye clinic, not a primary cardiology clinic. The sensitivity, specificity and precision/positive predictive value (PPV) of our approach at predicting future MI events from retinal images were: (1) in the UKB population, 0.74, 0.72 and 0.68, respectively, when only age and gender were considered as additional demographic variables (representative of the information available at an optician/eye clinic; see Supplementary Fig. 7); and (2) in the AREDS population, after excluding all AMD cases, 0.70, 0.67 and 0.67, respectively. Established cardiovascular disease risk assessment models (for example, the Framingham Risk Score (FRS), Systemic Coronary Risk Evaluation (SCORE) and Pooled Cohort Equation (PCE))38,39,40,41, used previously to screen populations for atherosclerotic cardiovascular disease, are comparable to our approach in discriminatory capacity, while requiring several additional demographic variables and clinical measurements not readily available at an optician/eye clinic. For instance, in ref. 39 the authors compared FRS, PCE and SCORE in the Multi-Ethnic Study of Atherosclerosis, which achieved AUCs of 0.717, 0.737 and 0.721, respectively, with sensitivities of 0.7–0.8 and specificities of 0.5–0.6. Similarly, in ref. 38, the sensitivity, specificity and PPV of multiple cardiovascular risk assessment models were compared in the Diabetes and Cardiovascular Risk Evaluation: Targets and Essential Data for Commitment of Treatment (DETECT) study, which found that the sensitivity, specificity and PPV of FRS and PCE ranged from 0.56–0.78, 0.60–0.78 and 0.12–0.24, respectively, at a 10% risk threshold.
Although the performance of our approach cannot be directly compared with that of the risk assessment models evaluated in the studies above, these studies provide context for the results obtained on the UKB and AREDS populations and highlight the potential of our approach as a second referral tool at an eye clinic/optician. However, it is important to note that this is a proof-of-concept study with limitations in study design (detailed in the Supplementary Information), predominantly the limited availability of the multimodal data required for such analyses.
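One reason such comparisons are only indicative is that, unlike sensitivity and specificity, PPV depends on how common the outcome is. The MI experiments above were run on a class-balanced sample (50% MI), whereas risk scores are typically evaluated in unselected cohorts where events are much rarer. Bayes' rule makes this dependence explicit. The sketch below uses the UKB operating point quoted above (sensitivity 0.74, specificity 0.72) purely for illustration; the function name is our own.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """PPV = P(event | positive result), from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)


sens, spec = 0.74, 0.72                      # UKB operating point quoted in the text
balanced = positive_predictive_value(sens, spec, 0.5)
held_out = positive_predictive_value(sens, spec, 992 / (992 + 70_523))
print(f"PPV at 50% prevalence: {balanced:.2f}")
print(f"PPV at {992 / 71_515:.1%} prevalence: {held_out:.3f}")
```

With these inputs the PPV drops by more than an order of magnitude between the balanced setting and the prevalence of incident MI among the held-out UKB participants (992 of 71,515), while sensitivity and specificity are unchanged.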

Conclusion

This study presents a system that estimates cardiac indices such as LVM and LVEDV and predicts future MI events using inexpensive, easy-to-obtain retinal photographs and demographic data. We used 5,663 subjects from the UKB imaging study with end-diastolic cardiac magnetic resonance images, retinal images and demographic data to train and test our method. We then used this system to predict MI in subjects who had retinal images and were not used during training. We found that using cardiac indices and demographic data together improves MI prediction compared with using demographic data alone. Finally, we performed an independent replication study of our method on the AREDS dataset. Although a drop in performance was observed, the discriminatory capacity of our approach remained comparable to that of established CVD risk assessment models reported previously. This highlights the potential for our approach to be employed as a second referral tool in eye clinics/opticians to identify patients at risk of future MI events. Future work will explore genetic data to improve the discriminatory capacity of the proposed approach, and explainable artificial intelligence techniques to identify the dominant retinal phenotypes that help assess CVD risk. This will help facilitate fine-grained stratification of CVD risk in patients, a crucial step towards delivering personalized medicine.

Data availability

All UKB images and demographic data are available, with restrictions, from UKB. Researchers who wish to use the UKB dataset must first complete the UKB online access management system application form. More information on accessing the UKB dataset can be found at https://www.ukbiobank.ac.uk/enable-your-research/apply-for-access. The AREDS dataset (NCT00000145) is available in the dbGaP repository: https://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000001.v3.p1.

Code availability

All algorithms used in this study were developed using libraries and scripts in PyTorch. The source code is publicly available at https://doi.org/10.5281/zenodo.5716142.

References

Roth, G. A. et al. Global, regional, and national burden of cardiovascular diseases for 10 causes, 1990 to 2015. J. Am. Coll. Cardiol. 70, 1–25 (2017).

D’Agostino, R. B. et al. General cardiovascular risk profile for use in primary care. Circulation 117, 743–753 (2008).

Wong, T. Y. et al. Retinal microvascular abnormalities and their relationship with hypertension, cardiovascular disease, and mortality. Surv. Ophthalmol. 46, 59–80 (2001).

McClintic, B. R., McClintic, J. I., Bisognano, J. D. & Block, R. C. The relationship between retinal microvascular abnormalities and coronary heart disease: a review. Am. J. Med. 123, 374–e1 (2010).

Poplin, R. et al. Predicting cardiovascular risk factors from retinal fundus photographs using deep learning. Nat. Biomed. Eng. 2, 158–164 (2018).

Cheung, C. et al. Quantitative and qualitative retinal microvascular characteristics and blood pressure. J. Hypertens. 27, 1380–1391 (2011).

Tapp, R. J. et al. Associations of retinal microvascular diameters and tortuosity with blood pressure and arterial stiffness. Hypertension 74, 1383–1390 (2019).

Gargeya, R. & Leng, T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology 124, 962–969 (2017).

Qummar, S. et al. A deep learning ensemble approach for diabetic retinopathy detection. IEEE Access 7, 150530–150539 (2019).

Pickhardt, P. J. et al. Automated CT biomarkers for opportunistic prediction of future cardiovascular events and mortality in an asymptomatic screening population: a retrospective cohort study. Lancet Digit. Health 2, e192–e200 (2020).

Petersen, S. E. et al. UK Biobank’s cardiovascular magnetic resonance protocol. J. Cardiovasc. Magn. Reson. 18, 1–7 (2015).

MacGillivray, T. J. et al. Suitability of UK Biobank retinal images for automatic analysis of morphometric properties of the vasculature. PLoS ONE 10, 1–10 (2015).

Fu, H. et al. Evaluation of retinal image quality assessment networks in different color-spaces. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019 48–56 (Springer, 2019).

Kaggle Diabetic Retinopathy Competition (Kaggle, accessed 19 January 2020); https://www.kaggle.com/c/diabetic-retinopathy-detection/data

Zheng, Q., Delingette, H., Duchateau, N. & Ayache, N. 3D Consistent and robust segmentation of cardiac images by deep learning with spatial propagation. IEEE Trans. Med. Imaging 37, 2137–2148 (2018).

Khaw, K.-T. et al. Association of hemoglobin A1c with cardiovascular disease and mortality in adults: the European prospective investigation into cancer in Norfolk. Ann. Intern. Med. 141, 413–420 (2004).

Levitan, E. et al. HbA1c measured in stored erythrocytes and mortality rate among middle-aged and older women. Diabetologia 51, 267–275 (2008).

Gerstein, H. C. et al. The hemoglobin A1c level as a progressive risk factor for cardiovascular death, hospitalization for heart failure, or death in patients with chronic heart failure: an analysis of the Candesartan in heart failure: assessment of reduction in mortality and morbidity (CHARM) program. Arch. Intern. Med. 168, 1699–1704 (2008).

Skali, H. et al. Cardiac structure and function across the glycemic spectrum in elderly men and women free of prevalent heart disease: the atherosclerosis risk in the community study. Circ. Heart Fail. 8, 448–454 (2015).

Petersen, S. E. et al. Reference ranges for cardiac structure and function in cardiovascular magnetic resonance (CMR) imaging in Caucasians from the UK Biobank population cohort. J. Cardiovasc. Magn. Reson. 19, 18 (2017).

Attar, R. et al. 3D Cardiac shape prediction with deep neural networks: simultaneous use of images and patient metadata. In Medical Image Computing and Computer Assisted Intervention—MICCAI 2019 586–594 (Springer, 2019).

The Age-Related Eye Disease Study System Group et al. The Age-Related Eye Disease Study (AREDS): design implications. AREDS report number 1. Control. Clin. Trials 20, 573–600 (1999).

The Age-Related Eye Disease Study System Group et al. The Age-Related Eye Disease Study system for classifying age-related macular degeneration from stereoscopic color fundus photographs: the Age-Related Eye Disease Study report number 6. Am. J. Ophthalmol. 132, 668–681 (2001).

Antelmi, L., Ayache, N., Robert, P. & Lorenzi, M. Sparse multi-channel variational autoencoder for the joint analysis of heterogeneous data. In Proc. 36th International Conference on Machine Learning Vol. 97, 302–311 (PMLR, 2019).

Kingma, D. P. & Welling, M. Auto-encoding variational Bayes. Proc. 2nd International Conference on Learning Representations (ICLR, 2014).

Rezende, D. J., Mohamed, S. & Wierstra, D. Stochastic backpropagation and approximate inference in deep generative models. Proc. 31st International Conference on Machine Learning 32, 1278–1286 (ICML, 2014).

Hotelling, H. Relations between two sets of variates. Biometrika 28, 321–377 (1936).

Haufe, S. et al. On the interpretation of weight vectors of linear models in multivariate neuroimaging. NeuroImage 87, 96–110 (2014).

Johnson, J. M. & Khoshgoftaar, T. M. Survey on deep learning with class imbalance. J. Big Data 6, 27 (2019).

Suinesiaputra, A. et al. Fully-automated left ventricular mass and volume MRI analysis in the UK Biobank population cohort: evaluation of initial results. Int. J. Cardiovasc. Imag. 34, 281–291 (2018).

Attar, R. et al. Quantitative CMR population imaging on 20,000 subjects of the UK Biobank imaging study: LV/RV quantification pipeline and its evaluation. Med. Image Anal. 56, 26–42 (2019).

Keith, N. Some different types of essential hypertension: their course and prognosis. Am. J. Med. Sci. 268, 336–345 (1974).

Bai, W. et al. Automated cardiovascular magnetic resonance image analysis with fully convolutional networks. J. Cardiovasc. Magn. Reson. 20, 65 (2018).

Lin, K. et al. Automated assessment of left ventricular function and mass using heart deformation analysis: initial experience in 160 older adults. Acad. Radiol. 23, 321–325 (2016).

Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Proc. 31st International Conference on Neural Information Processing Systems 30, 6629–6640 (NeurIPS, 2017).

Ferris, F. L. et al. Clinical classification of age-related macular degeneration. Ophthalmology 120, 844–851 (2013).

Trinh, M., Kalloniatis, M. & Nivison-Smith, L. Vascular changes in intermediate age-related macular degeneration quantified using optical coherence tomography angiography. Transl. Vis. Sci. Technol. 8, 20 (2019).

Grammer, T. B. et al. Cardiovascular risk algorithms in primary care: results from the DETECT study. Sci. Rep. 9, 1–12 (2019).

Qureshi, W. T. et al. Impact of replacing the pooled cohort equation with other cardiovascular disease risk scores on atherosclerotic cardiovascular disease risk assessment (from the Multi-Ethnic Study of Atherosclerosis [MESA]). Am. J. Cardiol. 118, 691–696 (2016).

Wallisch, C. et al. External validation of two Framingham cardiovascular risk equations and the pooled cohort equations: a nationwide registry analysis. Int. J. Cardiol. 283, 165–170 (2019).

Wallisch, C. et al. Re-estimation improved the performance of two Framingham cardiovascular risk equations and the pooled cohort equations: a nationwide registry analysis. Sci. Rep. 10, 1–11 (2020).

Acknowledgements

A.F.F. is supported by the Royal Academy of Engineering Chair in Emerging Technologies Scheme (grant no. CiET1819\19) and the MedIAN Network (grant no. EP/N026993/1) funded by the Engineering and Physical Sciences Research Council (EPSRC). This work was also supported by the Intramural Research Program of the National Library of Medicine and the National Eye Institute, National Institutes of Health. This research was also supported by the European Union’s Horizon 2020 InSilc (grant no. 777119) and EPSRC TUSCA (grant no. EP/V04799X/1) programmes. E.D. acknowledges funding from BHF grant FS/13/71/30378.

Author information

Contributions

A.D.-P. designed and executed all experiments, conducted all subsequent statistical analyses, and drafted the manuscript. N.R. helped design the experiments, assisted with the writing and data interpretation, and made substantial revisions and edits to the draft manuscript. R.A. helped design the experiments and contributed to the data analysis and data cleaning. A.S. and Y.Z. contributed to the data analysis. E.L., E.D., C.P.G., R.P.G. and S.P. contributed to the analysis of retinal and cardiac magnetic resonance images and shaped the medical contribution of this work. M.L. contributed to the design and implementation of the mcVAE. Q.C., T.D.L.K., E.A., E.Y.C. and Z.L. contributed to the external validation of the proposed method. A.F.F. conceived the methodology, helped design the experiments and contributed to the writing. All authors contributed to the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review information

Nature Machine Intelligence thanks the anonymous reviewers for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1 Variance Comparison.

Comparison of the joint latent space variance: distribution of the latent variables obtained when the mcVAE is applied to retinal images only and to both retinal and CMR images.

Extended Data Fig. 2 FID.

Distribution of the Fréchet Inception Distance (FID) score: distribution of the FID scores obtained on the reconstructed CMR images.
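The FID (ref. Heusel et al. above) compares two image sets through the mean and covariance of their feature embeddings, conventionally Inception-v3 activations: FID = ‖μ₁ − μ₂‖² + Tr(Σ₁ + Σ₂ − 2(Σ₁Σ₂)^{1/2}). The NumPy sketch below shows only that final formula; it assumes the feature vectors for the real and reconstructed CMR images have already been extracted (the feature network is not shown), and `frechet_distance` and `fid_from_features` are hypothetical helper names, not the authors' code.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between the Gaussians N(mu1, sigma1) and N(mu2, sigma2)."""
    mu1, mu2 = np.atleast_1d(mu1), np.atleast_1d(mu2)
    sigma1, sigma2 = np.atleast_2d(sigma1), np.atleast_2d(sigma2)
    diff = mu1 - mu2
    # Tr((sigma1 @ sigma2)^{1/2}) is the sum of the square roots of the eigenvalues
    # of sigma1 @ sigma2; these are real and non-negative for PSD covariances, so we
    # keep the real part and clip tiny negative values caused by rounding.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sum(np.sqrt(np.clip(eigvals.real, 0.0, None)))
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real, feats_fake):
    """FID from two (n_samples, n_features) arrays of precomputed network features."""
    mu1, mu2 = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    sigma1 = np.cov(feats_real, rowvar=False)  # one column per feature
    sigma2 = np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu1, sigma1, mu2, sigma2)
```

Identical feature sets give an FID of zero, and shifting every feature by a constant while keeping the covariance fixed adds only the squared length of that shift, which makes the two terms of the formula easy to check separately.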

Extended Data Fig. 3 Logistic Regression Coefficients.

Coefficients obtained from the logistic regression applied to myocardial infarction prediction using the estimated LVM/LVEDV and demographic data. Abbreviations: chol, cholesterol; bmi, body mass index; ads, daily alcohol consumption status; sbpa and dbpa, systolic and diastolic blood pressure, respectively.
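Coefficients from a logistic regression are easiest to compare across predictors when each predictor is standardized, so that a coefficient is the change in the log-odds of MI per standard deviation of that feature. The caption does not state whether the features were standardized or how the model was fitted; the NumPy sketch below assumes z-scored inputs and plain batch gradient descent on the mean log-loss, and `fit_logistic_regression` is a hypothetical helper, not the authors' implementation.

```python
import numpy as np


def fit_logistic_regression(X, y, lr=0.1, n_iter=5000):
    """Fit logistic regression on z-scored features by batch gradient descent.

    Returns (intercept, coefficients); each coefficient is per standard deviation
    of its feature, so magnitudes can be compared across features.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    std = X.std(axis=0)
    std[std == 0] = 1.0                    # leave constant columns unscaled
    Xs = (X - X.mean(axis=0)) / std        # z-score each feature
    n, d = Xs.shape
    w, b = np.zeros(d), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Xs @ w + b)))  # predicted probability of the positive class
        w -= lr * (Xs.T @ (p - y)) / n            # gradient of the mean log-loss w.r.t. w
        b -= lr * np.mean(p - y)                  # and w.r.t. the intercept
    return b, w
```

On synthetic data where only the first column drives the outcome, the first coefficient comes out large and positive while the coefficient of a pure-noise column stays near zero, which is the pattern a coefficient plot is meant to expose.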

Extended Data Fig. 4 Bland-Altman plots for different retinal image sizes.

Bland-Altman plots, correlation plots, and ROC curves for estimated LVM/LVEDV and MI prediction using different retinal image sizes. The solid line represents the logistic regression, and the dotted line represents the identity line.

Extended Data Fig. 5 Bland-Altman plots for different dataset sizes.

Bland-Altman plots, correlation plots, and ROC curves for estimated LVM/LVEDV and MI prediction using different dataset sizes. The solid line represents the logistic regression, and the dotted line represents the identity line.

Extended Data Fig. 6 Bland-Altman plots for estimated LVM/LVEDV using only retinal images.

Estimation of LVM and LVEDV using retinal images only or demographic data only: Bland-Altman and correlation plots for estimated LVM/LVEDV using only retinal images (upper plots) and only demographic data (lower plots). The solid line represents the logistic regression, and the dotted line represents the identity line.
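A Bland-Altman plot shows, for each subject, the difference between the estimated and the CMR-derived value against the mean of the two, with horizontal lines at the mean difference (bias) and the 95% limits of agreement. The sketch below computes those three summary lines under two assumptions the captions do not state: the conventional definition bias ± 1.96 × SD of the differences, and the sign convention estimate minus reference. `bland_altman` is a hypothetical helper name.

```python
import numpy as np


def bland_altman(estimated, reference, z=1.96):
    """Return (bias, lower_loa, upper_loa) for paired measurements.

    The plot's x-axis would be (estimated + reference) / 2 per subject.
    """
    estimated = np.asarray(estimated, dtype=float)
    reference = np.asarray(reference, dtype=float)
    diff = estimated - reference   # per-subject difference (y-axis of the plot)
    bias = diff.mean()             # mean difference
    sd = diff.std(ddof=1)          # sample standard deviation of the differences
    return float(bias), float(bias - z * sd), float(bias + z * sd)
```

Because the limits are symmetric about the bias, the bias always sits at the midpoint of a reported (lower, upper) interval.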

Extended Data Fig. 7 ROC curves for MI prediction using different demographic data.

ROC curves for MI prediction using different demographic data. With demographic data that may be available at an eye clinic (that is, age and gender): accuracy 0.71 ± 0.01, sensitivity 0.74 ± 0.03, specificity 0.73 ± 0.06, precision 0.68 ± 0.03 and F1 score 0.71 ± 0.01. With demographic data that may be available at a cardiology department (that is, age, gender, BMI, diastolic BP, systolic BP, HbA1c, glucose, cholesterol, and smoking and drinking status): accuracy 0.72 ± 0.03, sensitivity 0.74 ± 0.02, specificity 0.70 ± 0.05, precision 0.70 ± 0.05 and F1 score 0.72 ± 0.03. The solid line represents the logistic regression, and the dotted line represents the identity line.
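All five threshold-based metrics above follow from the four confusion-matrix counts at a chosen decision threshold. The sketch below uses the standard definitions; `classification_metrics` is a hypothetical helper, and the counts in the check are illustrative, not taken from the study.

```python
def classification_metrics(tp, fp, tn, fn):
    """Threshold-based metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall, true-positive rate
    specificity = tn / (tn + fp)   # true-negative rate
    precision = tp / (tp + fp)     # positive predictive value
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)  # harmonic mean
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
    }
```

Since F1 is the harmonic mean of precision and sensitivity, the reported eye-clinic values give a quick consistency check: 2 × 0.68 × 0.74 / (0.68 + 0.74) ≈ 0.71. The match is only approximate in general, because a mean of per-fold F1 scores need not equal the F1 of the mean precision and sensitivity.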

Extended Data Fig. 8 Coefficients and ROC curves for MI prediction obtained from the AREDS datasets.

Coefficients and ROC curves for MI prediction obtained from the AREDS datasets: (a) coefficients obtained from the logistic regression applied to myocardial infarction prediction using the AREDS demographic data (sex, diastolic blood pressure (dbpa), systolic blood pressure (sbpa), smoking status (ss), body mass index (bmi) and age); (b) ROC curve obtained with 10-fold cross-validation for MI prediction using only the demographic data available in the AREDS dataset; and (c) ROC curve obtained for MI prediction using a logistic regression model trained on the UK Biobank and evaluated on the AREDS dataset. The solid line represents the logistic regression, and the dotted line represents the identity line.
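The area under an ROC curve equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case (the Mann-Whitney formulation), with ties counted as one half. Under 10-fold cross-validation this would typically be computed on each held-out fold and then summarized across folds; the captions do not describe that aggregation, so it is an assumption here. `roc_auc` is a hypothetical helper; the pairwise comparison is O(n_pos × n_neg) and meant for illustration rather than large cohorts.

```python
import numpy as np


def roc_auc(scores, labels):
    """ROC AUC as P(score of a random positive > score of a random negative)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative example")
    # Compare every positive score with every negative score.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))
```

For scores [0.1, 0.4, 0.35, 0.8] with labels [0, 0, 1, 1], three of the four positive-negative pairs are correctly ordered, so the AUC is 0.75.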

Supplementary information

Supplementary Information

Supplementary Figs. 1–8, Tables 1–4 and Methods.

About this article

Cite this article

Diaz-Pinto, A., Ravikumar, N., Attar, R. et al. Predicting myocardial infarction through retinal scans and minimal personal information. Nat Mach Intell 4, 55–61 (2022). https://doi.org/10.1038/s42256-021-00427-7

