Abstract

With the emergence of individualized medicine and the increasing amount and complexity of available medical data, a growing need exists for the development of clinical decision-support systems based on prediction models of treatment outcome. In radiation oncology, these models combine predictive and prognostic factors from clinical, imaging, molecular and other sources to predict tumour response and follow-up event rates as accurately as possible. In this Review, we provide an overview of the factors that are correlated with outcome (including survival, recurrence patterns and toxicity) in radiation oncology and discuss the methodology behind the development of prediction models, which is a multistage process. Even after initial development and clinical introduction, a truly useful predictive model will be continuously re-evaluated on different patient datasets from different regions to ensure its population-specific strength. In the future, validated decision-support systems will be fully integrated in the clinic, with data and knowledge being shared in a standardized, instant and global manner.

Key Points

- Many prediction models that consider factors related to disease and treatment are available, but lack standardized assessments of their robustness, reproducibility or clinical utility
- The complete cycle of model development for decision making in radiotherapy involves several stages, including selection of data, performance measure, classification and external validation
- Clinical decision-support systems (CDSSs) based on validated predictors will be crucial to implement personalized radiation oncology
- Tolerance of normal tissue is the dose-limiting factor for the administration of radiotherapy; therefore, any CDSS should be based on predictors of tumour control and the probability of complications
- Rapid-learning healthcare will enable the increasingly rapid validation of CDSSs, which, in turn, will enable the next major advances in shared decision making


Introduction

Over the past decade, we have witnessed advances in cancer care, with many new diagnostic methods and treatment modalities becoming available,1 including advances in radiation oncology.2 The abundance of new options and the progress in individualized medicine have, however, created new challenges. For example, achieving level I evidence is increasingly difficult given the numerous disease and patient parameters that have been discovered, resulting in an ever-diminishing number of 'homogeneous' patients.3 This reality contrasts to a certain extent with classic evidence-based medicine, whereby randomized trials are designed for large populations of patients. Thus, new strategies are needed to find evidence for subpopulations on the basis of patient and disease characteristics.4

For each patient, the clinician needs to consider state-of-the-art imaging, blood tests, new drugs, improved modalities for radiotherapy planning and, in the near future, genomic data. Medical decisions must also consider quality of life, patient preferences and, in many health-care systems, cost efficiency. This combination of factors renders clinical decision making a dauntingly complex, and perhaps inhuman, task because human cognitive capacity is limited to approximately five factors per decision.3 Furthermore, dramatic genetic,5 transcriptomic,6 histological7 and microenvironmental8 heterogeneity exists within individual tumours, and even greater heterogeneity exists between patients.9 Despite these complexities, individualized cancer treatment is inevitable. Indeed, intratumoural and intertumoural variability might be leveraged advantageously to maximize the therapeutic index by increasing the effects of radiotherapy on the tumour and decreasing those effects on normal tissues.10,11,12

The central challenge, however, is how to integrate diverse, multimodal information (clinical, imaging and molecular data) in a quantitative manner to provide specific clinical predictions that accurately and robustly estimate patient outcomes as a function of the possible decisions. Currently, many prediction models are being published that consider factors related to disease and treatment, but without standardized assessments of their robustness, reproducibility or clinical utility.13 Consequently, these prediction models might not be suitable for clinical decision-support systems for routine care.

In this Review, we highlight prognostic and predictive models in radiation oncology, with a focus on the methodological aspects of prediction model development. Some characteristic prognostic and predictive factors and their challenges are discussed in relation to clinical, treatment, imaging and molecular factors. We also enumerate the steps that will be required to present these models to clinical professionals and to integrate them into clinical decision-support systems (CDSSs).

Methodological aspects

Factors for prediction

The overall aim of developing a prediction model for a CDSS is to find a combination of factors that accurately anticipate an individual patient's outcome.14 These factors include, but are not limited to, patient demographics as well as the results of imaging, pathology, proteomic and genomic testing, the presence of key biomarkers and, crucially, the treatment undertaken. 'Outcome' can be defined as tumour response to radiotherapy, toxicity evolution during follow up, rates of local recurrence, evolution to metastatic disease, survival or a combination of these end points. Although predictive factors (that is, factors that influence the response to a specific treatment) are necessary for decision support, prognostic factors (that is, factors that influence response in the absence of treatment)15 are equally important in revealing the complex relationship with outcome. Herein, we refer to both of these terms generically as 'features' because, for a predictive model, correlation with outcome must be demonstrable.

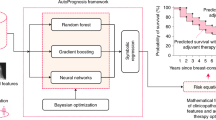

Model development stages

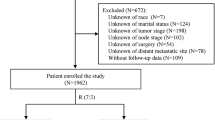

The procedure for finding a combination of features correlated with outcome is analogous to the development of biomarker assays.16 In that framework, we can distinguish qualification and validation. Qualification demonstrates that the data are indicative or predictive of an end point, whereas validation is a formalized process used to demonstrate that a combination of features is both reliable and suitable for the intended purpose. That is, we need to identify features, test whether they are predictive in independent datasets and then determine whether treatment decisions made using these features improve outcome. The complete cycle of model development entails several stages (Figure 1).

In the hypothesis-generation stage, one must consider the end point to predict, the timing of the treatment decision and the available data at these time points. In the data-selection step, a review of potential features is first conducted, ideally by an expert panel. A practical inventory of the available data and sample-size calculations are recommended, especially for the validation phase.17,18 Data from both clinical trials (high quality, low quantity, controlled, biased selection) and clinical practice (low quality, high quantity, unbiased selection) are useful, but selection biases must be identified in both cases and the inclusion criteria should be equivalent. For all features, including the characteristics of the treatment decision, data heterogeneity is a requirement to identify predictive features and to have the freedom to tailor treatment.

Next, performance measures for the models are chosen; these include the area under the receiver operating characteristic curve (AUC), accuracy, sensitivity, specificity and the concordance index (c-index) for censored data.19 The AUC, which takes values between 0 and 1 (1 denotes perfect discrimination and 0.5 denotes discrimination no better than chance), is the most commonly used performance measure. However, for time-to-event models, the c-index and hazard ratio are more appropriate because both can handle censored data.
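The two most common discrimination measures above can be sketched in a few lines. This is a minimal illustration, not a validated implementation: the AUC is computed as the probability that a randomly chosen patient with the event scores higher than one without it (equivalent to the Mann–Whitney statistic), and the c-index follows Harrell's definition, in which a pair counts only when the patient with the shorter follow-up actually had the event. The toy data are invented.

```python
"""Minimal sketch of two performance measures: AUC and Harrell's c-index."""


def auc(scores, labels):
    """P(score of a random positive > score of a random negative); ties count 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    total = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                total += 1.0
            elif p == n:
                total += 0.5
    return total / (len(pos) * len(neg))


def c_index(risk, time, event):
    """Harrell's concordance index for right-censored data.

    A pair (i, j) is comparable when patient i had the event before time[j].
    It is concordant when patient i also has the higher predicted risk;
    ties in predicted risk count as 0.5.
    """
    concordant = 0.0
    comparable = 0
    n = len(risk)
    for i in range(n):
        for j in range(n):
            if event[i] == 1 and time[i] < time[j]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


if __name__ == "__main__":
    # Invented toy data, for illustration only.
    print(auc([0.9, 0.8, 0.3, 0.2], [1, 1, 0, 0]))  # 1.0
    print(c_index(risk=[3.0, 2.0, 1.0], time=[5, 10, 15], event=[1, 1, 0]))  # 1.0
```

Censored patients (event = 0) still contribute as the longer-surviving member of a pair, which is why the c-index uses more of the data than an AUC computed at a single fixed time point.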

The preprocessing stage deals with missing data (imputation strategies; that is, replacing missing values by calculated estimates),20 identifies incorrectly measured or entered data21 and, if applicable, discretizes the data. It also normalizes the data so that the model is not sensitive to differences in the scales of the features.22 If an external, independent dataset is not available for validation, the available data must be split (in a separate stage) into a model-training dataset and a validation set, the latter of which is used subsequently in the validation step. In the feature-selection stage, the ratio of the number of evaluated features to the number of outcome events must be kept as low as possible to avoid overfitting. An overfitted model fits the training data (including their noise) too closely and, as a result, performs poorly on new data. Data-driven preselection of features is, therefore, recommended.23 Univariate analyses are commonly used to prioritize the features; that is, each feature is tested individually and the features are ranked by the strength of their correlation with outcome.
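The preprocessing and feature-prioritization steps above can be sketched as follows. Every concrete choice here is an assumption for illustration and is not taken from the source: mean imputation, z-score normalization, a 70/30 random split, ranking by absolute Pearson correlation with a binary outcome, and the widely quoted rule of thumb of about 10 outcome events per candidate feature. The feature names in the usage example are hypothetical.

```python
"""Minimal preprocessing and univariate feature-ranking sketch (stdlib only)."""
import math
import random
import statistics


def impute_mean(column):
    """Replace None entries by the mean of the observed values."""
    observed = [v for v in column if v is not None]
    fill = statistics.mean(observed)
    return [fill if v is None else v for v in column]


def z_score(column):
    """Rescale to mean 0 and SD 1 so features on different scales are comparable."""
    mu = statistics.mean(column)
    sd = statistics.pstdev(column)
    return [0.0 if sd == 0 else (v - mu) / sd for v in column]


def split(indices, train_fraction=0.7, seed=0):
    """Random split of patient indices into training and validation sets."""
    shuffled = list(indices)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


def pearson(x, y):
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return 0.0 if sxx == 0 or syy == 0 else sxy / math.sqrt(sxx * syy)


def rank_features(features, outcome):
    """Univariate ranking: strongest absolute correlation with outcome first."""
    scores = {name: abs(pearson(col, outcome)) for name, col in features.items()}
    return sorted(scores, key=scores.get, reverse=True)


def max_features(n_events, events_per_variable=10):
    """Upper bound on candidate features under an events-per-variable rule of thumb."""
    return n_events // events_per_variable


if __name__ == "__main__":
    # Hypothetical toy data: two features for eight patients, one value missing.
    raw = {"mean_lung_dose": [12.0, None, 18.0, 20.0, 9.0, 22.0, 15.0, 11.0],
           "age": [61, 70, 58, 66, 73, 64, 59, 68]}
    outcome = [0, 0, 1, 1, 0, 1, 1, 0]
    train, valid = split(range(len(outcome)))
    # Preprocess and rank on the training patients only, to avoid leakage.
    train_feats = {k: z_score(impute_mean([v[i] for i in train])) for k, v in raw.items()}
    print(rank_features(train_feats, [outcome[i] for i in train]))
    print(max_features(n_events=45))  # 4
```

For brevity, each function computes its statistics on whatever data it receives. In a real pipeline, the imputation means and scaling parameters would be estimated on the training set and then reused unchanged on the validation set.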

Predicting outcomes

In the next stage, the input data are fed into a model that can classify all possible patient outcomes. Traditional statistical24 and machine-learning models25 can be considered. For two or more classes (for example, response versus no response), one might consider logistic regression, support vector machines, decision trees, Bayesian networks or Naive Bayes algorithms.26,27 For time-to-event outcomes, whether censored or not, Cox proportional hazards models28 or the Fine and Gray model28 of competing risks are most common. The choice of model depends on the type of outcome (for example, logistic regression for two or more outcomes, or Cox regression for survival-type data) and the type of input data (for example, Bayesian networks require categorized data, whereas support-vector machines can deal with continuous data). In general, several models with similar properties can be tested to find the optimal model for the available data. A simple model is, however, preferred because it is expected to be robust to a wider range of data than a more complex model.
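As an illustration of the simplest model family listed above, the following sketch fits a binary logistic regression by plain batch gradient descent on the mean log-loss. It is an assumption-laden teaching example rather than a recommended fitting routine: the learning rate and iteration count are arbitrary, there is no regularization, and statistical packages normally use Newton-type solvers instead.

```python
"""Minimal logistic-regression sketch for a binary outcome (stdlib only)."""
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(X, y, lr=0.1, n_iter=5000):
    """Return (intercept, coefficients) for P(y=1 | x) = sigmoid(b0 + w.x)."""
    n, p = len(X), len(X[0])
    b0, w = 0.0, [0.0] * p
    for _ in range(n_iter):
        grad_b0, grad_w = 0.0, [0.0] * p
        for xi, yi in zip(X, y):
            # Gradient of the log-loss is (predicted probability - label) * input.
            err = sigmoid(b0 + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            grad_b0 += err
            for j in range(p):
                grad_w[j] += err * xi[j]
        b0 -= lr * grad_b0 / n
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
    return b0, w


def predict_proba(model, X):
    b0, w = model
    return [sigmoid(b0 + sum(wj * xj for wj, xj in zip(w, xi))) for xi in X]


if __name__ == "__main__":
    # Hypothetical single-feature toy data (e.g. a standardized dose feature).
    X = [[-2.0], [-1.0], [0.0], [0.0], [1.0], [2.0]]
    y = [0, 0, 0, 1, 1, 1]
    model = fit_logistic(X, y)
    print(model, predict_proba(model, [[-1.0], [1.0]]))
```

The fitted coefficients are directly interpretable as log-odds ratios per unit of each feature. This interpretability is one reason why such a simple model is often preferred over more complex classifiers.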

Performance on the training dataset is upwardly biased because the features were selected, and the model was fitted, on the same data. Thus, external validation data must be used, which can be derived from a separate institute or an independent trial. When data are limited, internal validation can be considered using random-split, temporal-split or k-fold cross-validation techniques.29 The developed model should offer a benefit over standard decision making, and it must be assessed prospectively in the clinic in the penultimate stage of development. Models must be compared against predictions by clinicians30,31 and against standard prognostic and predictive factors.32 Critically, to demonstrate improved patient outcome, improved quality of life and/or reduced toxicity,33 clinical trials must be conducted in which patients are randomly assigned either to treatment tailored according to the prediction model output or to standard treatment. Fulfilling this requirement will generate the final evidence that the model improves health care, by comparing, in a controlled way, the tailored treatments with standard treatments in the clinic.
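The k-fold internal-validation technique mentioned above can be sketched as follows. This is a minimal sketch under explicit assumptions. The "model" is a deliberately simple stand-in: a linear score whose weights are the differences between the class means, in the spirit of a nearest-centroid rule. The folds are not stratified by outcome. Out-of-fold scores are pooled into one AUC, although averaging per-fold AUCs is an equally common convention. The simulated data are invented.

```python
"""Minimal k-fold cross-validation sketch (stdlib only)."""
import random


def k_fold_indices(n, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; every patient is tested exactly once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]


def fit_centroid_score(X, y):
    """Weights = mean feature vector of events minus that of non-events."""
    def column_mean(rows, j):
        return sum(r[j] for r in rows) / len(rows)

    pos = [x for x, t in zip(X, y) if t == 1]
    neg = [x for x, t in zip(X, y) if t == 0]
    return [column_mean(pos, j) - column_mean(neg, j) for j in range(len(X[0]))]


def predict_score(w, X):
    return [sum(wj * xj for wj, xj in zip(w, x)) for x in X]


def auc(scores, labels):
    pos = [s for s, t in zip(scores, labels) if t == 1]
    neg = [s for s, t in zip(scores, labels) if t == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in pos for b in neg)
    return wins / (len(pos) * len(neg))


def cross_validated_auc(X, y, k=5, seed=0):
    """Score each patient with a model that never saw them, then compute one AUC."""
    oof = [0.0] * len(y)
    for train, test in k_fold_indices(len(y), k, seed):
        w = fit_centroid_score([X[i] for i in train], [y[i] for i in train])
        for i, s in zip(test, predict_score(w, [X[i] for i in test])):
            oof[i] = s
    return auc(oof, y)


if __name__ == "__main__":
    # Simulated data: feature 0 carries signal, feature 1 is pure noise.
    rng = random.Random(1)
    y = [i % 2 for i in range(100)]
    X = [[t + rng.gauss(0, 0.5), rng.gauss(0, 1)] for t in y]
    print(round(cross_validated_auc(X, y), 3))
```

Any fit/predict pair, such as a logistic regression, can replace the stand-in model. Any feature selection must be repeated inside each training fold, otherwise the optimistic bias described above reappears.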

Finally, the prediction models and data can be published, enabling the wider oncological community to evaluate them. Full transparency on the data and methodology is the key to global implementation of the model into CDSSs. This approach mirrors that of clinical 'omics' publications, for which the raw data, the code used to derive the results from the raw data, evidence for data provenance (the process that led to a piece of data) and a written description of nonscriptable analysis steps are routinely made available.34

In practice, this cycle of development usually begins by identifying clinical parameters, because these are widely and instantly available in patient information systems and clinical trials. These clinical variables also form the basis for extending prediction models with imaging or molecular data.

Clinical features

Decision making in radiotherapy is mainly based on clinical features, such as the patient performance status, organ function and grade and extent of the tumour (for example, as defined by the TNM system). In almost all studies, such features have been found to be prognostic for survival and development of toxicity.35,36,37 Consequently, these features should be evaluated in building robust and clinically acceptable radiotherapy prognostic and predictive models. Moreover, some clinical variables, such as performance status, can be captured with minimal effort.

Even the simplest questionnaire, however, should be validated, as is the case for laboratory measurements of organ function or of parameters measured in blood.38,39 Furthermore, a standardized protocol should be available to ensure that comparisons are possible between centres and between questionnaires over time.40 The reasons why specific features were chosen for measurement should also be clearly explained. For example, if haemoglobin measurements were only taken in patients with fatigue, the resulting bias would demand caution when including and interpreting the measurements. Only when clinical parameters are recorded prospectively with the same scrutiny as laboratory measurements will observational studies become as reliable as randomized trials.41,42

Toxicity measurements and scoring should also build on validated scoring systems, such as the Common Terminology Criteria for Adverse Events (CTCAE), which can be scored by the physician or patient.43,44 Indeed, a meta-analysis showed that high-quality toxicity assessments from observational trials are similar to those of randomized trials.45,46 However, a prospective protocol must clarify which scoring system was used and how changes in toxicity score were dealt with over time with respect to treatment.

Finally, to ensure a standardized interpretation, clinical and toxicity data and their analyses should be reported in line with the STROBE (Strengthening the Reporting of Observational Studies in Epidemiology) statement for observational studies and genetic-association studies. The statement consists of checklists of items that should be addressed in reports to facilitate the critical appraisal and interpretation of these types of studies.47,48

Treatment features

Currently, image-guided radiotherapy (IGRT) is a cancer treatment modality that delivers its agent (radiation) to the tumour with high accuracy.49 Furthermore, detailed knowledge of the effects of radiation on normal tissue has been obtained.50 With modern radiotherapy techniques, such as intensity-modulated radiotherapy, volumetric arc therapy or particle-beam therapy, the treatment dose can be sculpted around the target volume with a dosimetric accuracy of a few percent. IGRT ensures millimetre precision to spare the organs at risk as much as possible.51

For prediction modelling, it is crucial to record features derived from the planned spatial and temporal distribution of the radiotherapy dose. Because a delicate balance exists between tumour control and treatment-related toxicity, features must also be recorded that describe the efforts undertaken during treatment to ensure that the dose is delivered as planned (that is, in vivo dosimetry).52 Additional therapies, such as (concurrent) chemotherapy, targeted agents and surgery, and their features must also be recorded because these have various effects on outcome.32,53 An example is the difference between concurrent and sequential chemoradiation, which has a major influence on the occurrence of acute oesophagitis that induces dysphagia.54

With respect to the spatial dimension of radiotherapy, it remains unresolved how best to combine information about the spatially variable dose in every subvolume of the target tumour (or organ) into a global effect on the tumour or adjacent normal tissue. Dose–response relationships for tumour tissues are often reported in terms of mean (biologically equivalent) dose, although voxel-based measures have also been reported.55 Mean doses or doses to a prescription point inside the tumour are easily determined and reported and can suffice for many applications. However, spatial characteristics might be more relevant in personalized approaches that aim to deliver higher doses to radioresistant areas of the tumour.55 For normal-tissue toxicity, the important dose features include the mean and maximum dose as well as the volume of normal tissue receiving a certain dose. For example, V20 < 35% (that is, less than 35% of the lung volume receiving at least 20 Gy) is a common threshold to prevent lung toxicity.56
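The dose features named above can be computed from a per-voxel dose list, as in the following sketch. The assumptions are not from the source and are made for illustration only. All voxels have equal volume. Doses are total physical doses in Gy delivered in n equal fractions. The biologically equivalent dose is expressed as EQD2 using the standard linear-quadratic conversion, EQD2 = D × (d + α/β) / (2 + α/β), with an assumed α/β of 3 Gy, a value often quoted for late-responding tissues.

```python
"""Minimal sketch of dose features from a per-voxel dose list (equal voxel volumes)."""


def eqd2(total_dose, n_fractions, alpha_beta):
    """Equivalent dose in 2-Gy fractions: D * (d + a/b) / (2 + a/b)."""
    d = total_dose / n_fractions
    return total_dose * (d + alpha_beta) / (2.0 + alpha_beta)


def v_x(doses, threshold_gy):
    """Percentage of the organ volume receiving at least threshold_gy."""
    return 100.0 * sum(1 for d in doses if d >= threshold_gy) / len(doses)


def dose_features(doses, n_fractions, alpha_beta=3.0):
    eq = [eqd2(d, n_fractions, alpha_beta) for d in doses]
    return {
        "mean_dose": sum(doses) / len(doses),
        "max_dose": max(doses),
        "mean_eqd2": sum(eq) / len(eq),
        "V20": v_x(doses, 20.0),  # physical-dose V20, as used in the lung threshold
    }


if __name__ == "__main__":
    # Hypothetical lung voxel doses (Gy) for a 30-fraction plan.
    lung = [0.0, 5.0, 10.0, 15.0, 25.0, 30.0, 40.0, 60.0]
    feats = dose_features(lung, n_fractions=30)
    print(feats, "V20 < 35%:", feats["V20"] < 35.0)
```

Because each voxel has its own dose per fraction, the EQD2 conversion is applied voxel by voxel before averaging. Converting the mean physical dose instead would give a different, and generally biased, result.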

Clinical dose–volume histogram analysis for pneumonitis after 3D radiotherapy for non-small-cell lung cancer was first described in 1991.57 In 2010, a series of detailed reviews of all frequently irradiated organs (the QUANTEC project) was published,50 showing that, as for the tumour, care must be taken when assessing dose at the organ level. For some organs (such as the oesophagus or lung), the volume receiving a certain dose is important, because the risk of complications depends on how much of the organ is irradiated. For other organs (such as the spinal cord), the maximum dose to a small region might be most important, because damage to even a short segment can compromise the function of the whole organ. Predicting complications to normal tissue is an active research area in ongoing, large, prospective multicentre projects, including ALLEGRO58 and others.59,60,61
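The distinction between volume-dependent and maximum-dose-dependent organs can be made concrete with a cumulative dose–volume histogram and the generalized equivalent uniform dose (gEUD). The gEUD is a technique not discussed in the source and is introduced here only as an illustration. For equal-volume voxels, gEUD = (mean of D_i^a)^(1/a). With a = 1 it equals the mean dose, suiting organs in which the irradiated volume matters. As a grows large, it approaches the maximum dose, suiting organs such as the spinal cord. This sketch restricts a to positive values (normal tissues), and the dose values are invented.

```python
"""Minimal sketch: cumulative DVH and generalized EUD for equal-volume voxels."""


def cumulative_dvh(doses, step=1.0):
    """Return (dose_levels, volume_percent): % of volume receiving >= each level."""
    n_levels = int(max(doses) // step) + 1
    levels = [i * step for i in range(n_levels + 1)]  # last level exceeds max dose
    n = len(doses)
    vols = [100.0 * sum(1 for d in doses if d >= lv) / n for lv in levels]
    return levels, vols


def geud(doses, a):
    """Generalized EUD: (mean(D_i ** a)) ** (1 / a); a > 0 for normal tissues."""
    if a <= 0:
        raise ValueError("this sketch only supports a > 0")
    return (sum(d ** a for d in doses) / len(doses)) ** (1.0 / a)


if __name__ == "__main__":
    organ = [10.0, 20.0, 30.0]  # hypothetical voxel doses in Gy
    print(cumulative_dvh(organ, step=10.0))
    print(geud(organ, 1.0), geud(organ, 50.0))  # mean-like vs max-like behaviour
```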

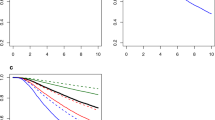

Although the planned dose is important, one must be careful about relying completely on predictions based on the planned dose, because patients display wide variability in toxicity development. The reasons for this variability include many known clinical and molecular features as well as the quality of treatment execution. Treating the planned dose distribution as the prime determinant of outcome is perhaps the most common pitfall in prediction models, because deviations from the original plan frequently occur during treatment.62 The accuracy of prediction models is expected to increase when the measured (delivered) dose is used, as this measure reflects the effect of radiotherapy most accurately. Figure 2 shows an example of these variations in a patient with prostate cancer. Dose reconstructions (2D and 3D), gamma-index calculations and dose–volume histograms obtained during treatment can help to identify more accurate dose-related features,63,64 for example for predicting radiation pneumonitis65 and oesophagitis.66
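The gamma-index comparison mentioned above, which is used to quantify deviations between planned and delivered dose, can be sketched in one dimension. For each reference point, gamma is the minimum over evaluated points of the square root of (distance / DTA)² plus (dose difference / ΔD)², and a point passes when gamma is at most 1. Several conventions here are common choices assumed for illustration, not taken from the source: the 3%/3 mm criteria, global normalization to the maximum reference dose and the use of discrete evaluated points without interpolation. Clinical tools work in 3D and interpolate the evaluated distribution.

```python
"""Minimal 1D global gamma-index sketch (stdlib only)."""
import math


def gamma_1d(ref_pos, ref_dose, eval_pos, eval_dose, dd_percent=3.0, dta_mm=3.0):
    """Gamma value for every reference point (positions in mm, doses in Gy)."""
    dd = dd_percent / 100.0 * max(ref_dose)  # global dose-difference criterion in Gy
    gammas = []
    for rp, rd in zip(ref_pos, ref_dose):
        best = math.inf
        for ep, ed in zip(eval_pos, eval_dose):
            g = math.sqrt(((ep - rp) / dta_mm) ** 2 + ((ed - rd) / dd) ** 2)
            best = min(best, g)
        gammas.append(best)
    return gammas


def pass_rate(gammas):
    """Percentage of reference points with gamma <= 1."""
    return 100.0 * sum(1 for g in gammas if g <= 1.0) / len(gammas)


if __name__ == "__main__":
    pos = [float(i) for i in range(11)]
    planned = [2.0 * math.exp(-((p - 5.0) ** 2) / 8.0) for p in pos]
    delivered = [d * 1.05 for d in planned]  # hypothetical 5% overdose
    print(pass_rate(gamma_1d(pos, planned, pos, delivered)))
```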

a | Original planning CT scan that includes contours of the prostate (red), bladder (yellow), exterior wall of the rectum (blue) and seminal vesicles (green). b | Contoured CT scan after 16 fractions of radiotherapy. c | Reconstructed 3D dose after 16 fractions of radiotherapy. d | Calculated dose differences (expressed as a 3D Gamma Index) after 16 fractions of radiotherapy. e | Dose–volume histograms at fractions 1, 6, 11, 16, 21 and 26 (dashed lines) as well as pretreatment histograms (solid lines). Clear deviations are visible from the planned dose–volume histogram for the rectum and bladder.

The temporal aspect of fractionated radiotherapy is also an active area of research. It is well known that higher radiation doses are required to control a tumour when treatment is prolonged, and increasing evidence suggests that accelerated regimens delivering the same physical dose can improve outcome.67,68 A multicentre analysis of patients with head-and-neck cancer treated with radiotherapy alone showed that the potential doubling time of the tumour before treatment was not a predictor of local control.69 Alongside the classic explanation of accelerated repopulation,70 changes in cell loss, hypoxia and selection of radioresistant stem cells have each been suggested as underlying causes of this observation. Possible implications include shorter overall treatment times with higher doses per fraction and the avoidance of breaks during treatment.71,72 Overall, treatment time is an accessible feature that is correlated with local failure at several tumour sites.73,74
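One widely used way to turn overall treatment time into a dose-equivalent feature is the linear-quadratic biologically effective dose (BED) with a repopulation correction. This formulation is not taken from the source. The formula is BED = n·d·(1 + d/(α/β)) − ln(2)·(T − Tk)/(α·Tdouble), with the correction applied only when T exceeds Tk. All default parameter values below (α/β = 10 Gy, α = 0.3 Gy⁻¹, kick-off time Tk = 21 days, effective doubling time during repopulation Tdouble = 3 days) are illustrative assumptions, not recommended clinical values.

```python
"""Minimal sketch of the linear-quadratic BED with a repopulation time factor."""
import math


def bed_with_time(n_fractions, dose_per_fraction, overall_time_days,
                  alpha_beta=10.0, alpha=0.3, t_kickoff=21.0, t_double=3.0):
    """BED in Gy; all default parameters are illustrative assumptions."""
    physical = n_fractions * dose_per_fraction
    bed = physical * (1.0 + dose_per_fraction / alpha_beta)
    # Repopulation is only counted once overall time exceeds the kick-off time.
    if overall_time_days > t_kickoff:
        bed -= math.log(2.0) * (overall_time_days - t_kickoff) / (alpha * t_double)
    return bed


if __name__ == "__main__":
    planned = bed_with_time(35, 2.0, 46.0)
    with_break = bed_with_time(35, 2.0, 53.0)  # hypothetical one-week interruption
    print(round(planned - with_break, 2))  # 5.39
```

Under these assumed parameters, each extra day beyond the kick-off time costs about 0.77 Gy of BED. This illustrates why breaks during treatment are avoided.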

Ideally, the spatial and temporal dimensions of radiotherapy would be exploited by matching the dose distribution of each fraction to a map of tumour radioresistance (and normal-tissue radiosensitivity) that is continuously updated during treatment. However, such an image of radioresistance does not yet exist. If it did, CDSSs could guide the planning and modification of the spatial and temporal distribution of radiation so as to maintain or improve the balance between tumour control and the probability of normal-tissue complications throughout treatment. This would replace the current approach, which delivers radiation as planned with a uniform dose to the tumour as a whole.

Imaging features

Medical imaging has a fundamental role in radiation oncology, particularly for treatment planning and response monitoring.75,76 Technological advances in noninvasive imaging—including improved temporal and spatial resolution, faster scanners and protocol standardization—have enabled the field to move towards the identification of quantitative noninvasive imaging biomarkers.77,78,79

Metrics based on tumour size and volume are the most commonly used image-based predictors of tumour response to therapy and survival,80,81,82,83,84,85,86,87 and rely on CT and MRI technology for 3D measurement.88,89,90 Although used in clinical practice, tumour size and volume measurements are subject to interobserver variability that can be attributed to differences in tumour delineations.85,86,87,91,92 Moreover, the optimal measurement technique and the definition of appropriate response criteria, in terms of changes in tumour size, are unclear.93 Tumour motion and image artefacts are further sources of variability.94,95 To overcome these issues, automated tumour delineation methods have been introduced.96,97,98,99 These methods are based, for example, on selecting ranges of Hounsfield units on CT that define a certain tissue type (Hounsfield units express the tissue's X-ray linear attenuation coefficient relative to that of water), or on calculating the gradient of an image (a mathematical filter) to reveal the borders between tissue types. Extensive evaluation, however, is needed before these methods can be used routinely in the clinic.100,101,102
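The two delineation principles described above, Hounsfield-unit windowing and image gradients, can be sketched on a single 2D CT slice. The assumptions are illustrative and not from the source. The slice is a list of rows of HU values. The window of −100 to 200 HU is only a rough soft-tissue range. The gradient is a simple central difference. A real auto-contouring method would add connected-component analysis, smoothing and 3D processing.

```python
"""Minimal sketch of threshold- and gradient-based delineation on a 2D CT slice."""
import math


def threshold_mask(slice_hu, lo=-100, hi=200):
    """1 where the pixel HU lies inside [lo, hi] (assumed soft-tissue window), else 0."""
    return [[1 if lo <= v <= hi else 0 for v in row] for row in slice_hu]


def gradient_magnitude(slice_hu):
    """Central-difference gradient magnitude; border pixels are left at 0."""
    rows, cols = len(slice_hu), len(slice_hu[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = (slice_hu[r][c + 1] - slice_hu[r][c - 1]) / 2.0
            gy = (slice_hu[r + 1][c] - slice_hu[r - 1][c]) / 2.0
            out[r][c] = math.hypot(gx, gy)
    return out


def area_mm2(mask, pixel_mm=(1.0, 1.0)):
    """Delineated area = number of mask pixels times pixel area."""
    return sum(map(sum, mask)) * pixel_mm[0] * pixel_mm[1]


if __name__ == "__main__":
    # Hypothetical slice: a 3x3 soft-tissue lesion (40 HU) in lung (-800 HU).
    ct = [[40 if 1 <= r <= 3 and 1 <= c <= 3 else -800 for c in range(5)]
          for r in range(5)]
    print(area_mm2(threshold_mask(ct), pixel_mm=(0.98, 0.98)))
```

High gradient values mark the lesion border, where HU changes abruptly. The threshold mask, in contrast, captures the lesion interior.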

A commonly used probe for the metabolic uptake of the tumour is 18F-fluorodeoxyglucose (FDG) for PET imaging.103,104 The pretreatment maximum standardized uptake value (SUV, which is the FDG uptake normalized to the injected dose per unit of the patient's body weight) is strongly associated with overall survival and tumour recurrence in a range of tumour sites, including the lung, head and neck, rectum, oesophagus and cervix.105,106,107,108,109,110,111 Furthermore, several studies have shown that changes in SUV during and after treatment are early predictors of tumour recurrence.112,113,114,115 FDG–PET measurements, however, depend on a number of factors, including injected dose, baseline glucose concentration, FDG clearance, the image reconstruction methods used and partial-volume effects.116,117 Standardization of these factors across institutions is, therefore, fundamental to enable comparisons and validation of data from FDG–PET imaging.118,119
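The body-weight SUV definition above can be written out explicitly, which also shows why injected dose and timing must be standardized. This is a minimal sketch under stated assumptions. Concentrations are in kBq/mL, tissue density is taken as 1 g/mL so that the SUV is dimensionless, and the injected activity is decay-corrected to scan time using the 18F half-life of about 109.77 minutes. If the scanner already reports images decay-corrected to injection time, minutes_since_injection should be 0.

```python
"""Minimal SUV sketch (body-weight normalization, stdlib only)."""
import math

F18_HALF_LIFE_MIN = 109.77  # physical half-life of fluorine-18, in minutes


def suv_bw(conc_kbq_per_ml, injected_mbq, weight_kg, minutes_since_injection=0.0):
    """SUV = tissue concentration / (decay-corrected injected activity / body weight)."""
    decay = math.exp(-math.log(2.0) * minutes_since_injection / F18_HALF_LIFE_MIN)
    activity_kbq = injected_mbq * 1000.0 * decay
    return conc_kbq_per_ml / (activity_kbq / (weight_kg * 1000.0))


def suv_max(conc_values, injected_mbq, weight_kg, minutes_since_injection=0.0):
    """Maximum SUV over the voxels of a delineated tumour."""
    return max(suv_bw(c, injected_mbq, weight_kg, minutes_since_injection)
               for c in conc_values)


if __name__ == "__main__":
    # Hypothetical tumour voxel concentrations (kBq/mL), 350 MBq, 70 kg, 60 min.
    print(round(suv_max([4.0, 12.0, 9.5], 350.0, 70.0, 60.0), 2))
```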

Multiple studies have shown that diffusion-weighted MRI parameters, such as the apparent diffusion coefficient (ADC), which is a measure of water mobility in tissues, can accurately predict response and survival in multiple tumour sites.120,121,122,123,124 However, lack of reproducibility of ADC measurements—due to lack of standardization of instruments between vendors and of internationally accepted calibration protocols—remains a bottleneck in these types of studies.125 Evaluations of different time points in dynamic contrast-enhanced MRI have also been used to describe tumour perfusion.90,126,127,128 Indeed, hypothesis-driven preclinical129 and xenograft studies130,131 support these clinical studies. For example, assessment of the correlation of features from imaging (such as lactate level and the extent of reoxygenation) with tumour control is possible.130,131
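The ADC mentioned above is usually derived from a mono-exponential model of the diffusion-weighted signal, S(b) = S0 × exp(−b × ADC), with b in s/mm² and ADC in mm²/s. The sketch below assumes that model and shows both the two-point estimate and a log-linear least-squares fit over several b-values. The signal values are invented. Differences in b-value choice and fitting method are one concrete source of the reproducibility problems noted above.

```python
"""Minimal ADC sketch assuming S(b) = S0 * exp(-b * ADC)."""
import math


def adc_two_point(s0, sb, b):
    """ADC from a b = 0 image and one diffusion-weighted image (mm^2/s)."""
    return math.log(s0 / sb) / b


def adc_fit(b_values, signals):
    """Least-squares fit of ln(S) against b over several b-values; ADC = -slope."""
    n = len(b_values)
    ys = [math.log(s) for s in signals]
    mb = sum(b_values) / n
    my = sum(ys) / n
    sxy = sum((b - mb) * (y - my) for b, y in zip(b_values, ys))
    sxx = sum((b - mb) ** 2 for b in b_values)
    return -sxy / sxx


if __name__ == "__main__":
    # Hypothetical voxel signals at three b-values.
    print(adc_fit([0.0, 500.0, 1000.0], [1000.0, 560.0, 330.0]))
```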

Increasingly advanced image-based features are currently being investigated. For example, routine clinical imaging can capture both tumour heterogeneity and post-treatment changes, which can be analysed to identify functional biomarkers (Figure 3). Changes in Hounsfield units in contrast-enhanced CT are directly proportional to the quantity of contrast agent present in the tissue and have been used as a surrogate for tumour perfusion.132,133 Indeed, reductions of Hounsfield units following treatment have been used to evaluate treatment response in rectal, hepatic and pulmonary cancers.134,135

Standardized extraction and quantification of a large number of traits derived from diagnostic imaging are now being considered in new imaging-marker approaches.79 Through advanced image-analysis methods, we can quantify descriptors of tumour heterogeneity (such as the variance or entropy of the voxel values) and the relationship of the tumour with adjacent tissues.136,137,138 These analytical methods enable high-throughput evaluation of imaging parameters that can be correlated with treatment outcome and, potentially, with biological data. Indeed, qualitative imaging parameters on CT and MRI scans have been used to predict mRNA abundance variation in hepatocellular carcinomas and brain tumours.139,140,141 Furthermore, a combination of anatomical, functional and metabolic imaging techniques might be used to capture pathophysiological and morphological tumour characteristics in a noninvasive manner, including apparent intratumoural heterogeneity.142
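The two heterogeneity descriptors named above, variance and entropy of the voxel values, can be sketched as first-order statistics. The assumptions are for illustration only. The values are intensities inside a delineated tumour (for example, HU). Entropy is the Shannon entropy, in bits, of a fixed-bin-width histogram, and the 25-unit bin width is an arbitrary choice. The result depends on that choice, which is one reason why standardized extraction matters.

```python
"""Minimal first-order heterogeneity descriptors for a set of voxel values."""
import math
from collections import Counter


def first_order_features(values, bin_width=25.0):
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / n  # population variance
    # Histogram with fixed-width bins; entropy = -sum(p * log2(p)) over occupied bins.
    counts = Counter(math.floor(v / bin_width) for v in values)
    entropy = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return {"mean": mean, "variance": variance, "entropy_bits": entropy}


if __name__ == "__main__":
    homogeneous = [40.0, 42.0, 41.0, 39.0]  # hypothetical HU values
    heterogeneous = [-50.0, 20.0, 90.0, 160.0]
    print(first_order_features(homogeneous))
    print(first_order_features(heterogeneous))
```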

Molecular features

Biological markers are also valuable clinical decision-support features; these include prognostic and predictive factors for outcomes, such as tumour response and normal-tissue tolerance. Despite these strengths, trials of molecular biomarkers are prone to experimental variability; for this reason, standardizing assay criteria, trial design and analysis is imperative if multiple molecular markers are to be used in predictive modelling.16

Tumour response

Besides tumour size, tumour control after radiotherapy is largely determined by three factors: intrinsic radiosensitivity, cell proliferation and the extent of hypoxia.143 Large tumours intuitively require higher doses of radiation than small tumours because there are simply more cells to kill; this requirement holds even if intrinsic radiosensitivity, hypoxia and repopulation rates are equal. Several approaches have been developed to measure these three other parameters to predict tumour response to radiotherapy.

Intrinsic radiosensitivity

Malignant tumours display wide variation in intrinsic radiosensitivity, even between tumours of similar origin and histological type.144 Attempts to assess the radiosensitivity of human tumours have relied on determining the ex vivo surviving fraction of tumour cells.145 Those studies and others have shown that tumour cell radiosensitivity is a significant prognostic factor for radiotherapy outcome in both cervical146 and head-and-neck147 carcinomas. However, these colony assays have technical disadvantages, including a low success rate (<70%) for human tumours and the time needed to produce data, which can be up to several weeks.
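The quantities behind these colony assays can be sketched as follows. The assumptions are standard radiobiology conventions rather than details from the source. The surviving fraction is SF = colonies / (cells seeded × plating efficiency), where the plating efficiency comes from unirradiated controls. The dose response is described by the linear-quadratic model SF(D) = exp(−αD − βD²), fitted here by least squares on −ln(SF) without an intercept. The surviving fraction at 2 Gy (SF2) is then read off the fitted curve. All counts are invented.

```python
"""Minimal clonogenic-assay sketch: surviving fraction and a linear-quadratic fit."""
import math


def plating_efficiency(colonies, seeded):
    """Fraction of unirradiated control cells that form colonies."""
    return colonies / seeded


def surviving_fraction(colonies, seeded, pe):
    return colonies / (seeded * pe)


def fit_lq(doses, sfs):
    """Least-squares alpha, beta for -ln(SF) = alpha*D + beta*D**2 (2x2 normal equations)."""
    y = [-math.log(s) for s in sfs]
    s11 = sum(d ** 2 for d in doses)
    s12 = sum(d ** 3 for d in doses)
    s22 = sum(d ** 4 for d in doses)
    t1 = sum(d * yi for d, yi in zip(doses, y))
    t2 = sum(d ** 2 * yi for d, yi in zip(doses, y))
    det = s11 * s22 - s12 ** 2
    return (t1 * s22 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det


def sf_at(dose, alpha, beta):
    """LQ surviving fraction at a single dose, e.g. SF2 at 2 Gy."""
    return math.exp(-alpha * dose - beta * dose ** 2)


if __name__ == "__main__":
    pe = plating_efficiency(colonies=60, seeded=100)  # hypothetical control dish
    doses = [2.0, 4.0, 6.0, 8.0]
    colonies = [180, 150, 90, 40]  # hypothetical counts
    seeded = [500, 1000, 2000, 4000]
    sfs = [surviving_fraction(c, s, pe) for c, s in zip(colonies, seeded)]
    alpha, beta = fit_lq(doses, sfs)
    print(round(alpha, 3), round(beta, 4), round(sf_at(2.0, alpha, beta), 3))
```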

Other studies have included assessments of chromosome damage, DNA damage, glutathione levels and apoptosis.148 Some clinical studies using such assays have shown correlations with radiotherapy outcome, whereas others have not.149 However, these cell-based functional assays have only limited clinical utility as predictive assays, despite being useful in confirming a mechanism that underlies differences in the response of tumours to radiotherapy. Nevertheless, some studies have provided encouraging data showing that immunohistochemical staining for γ-H2AX (phosphorylated histone H2AX), a marker of DNA damage, might be a useful way to assess intrinsic radiosensitivity very early after the start of treatment.150,151 Double-strand breaks are generated when cells are exposed to ionizing radiation or DNA-damaging chemotherapeutic agents, and they rapidly trigger phosphorylation of histone H2AX to form γ-H2AX. γ-H2AX is the most sensitive marker that can be used to examine DNA damage and its subsequent repair, and it can be detected by immunoblotting and by immunostaining with microscopic or flow cytometric detection. Clinically, two biopsies (one before and one after treatment) are needed to assess γ-H2AX status, which is not always easy to implement in practice.

Hypoxia

Tumour hypoxia is the key factor involved in determining resistance to treatment and malignant progression; it is a negative prognostic factor after treatment with radiotherapy, chemotherapy and surgery.152,153 Indeed, some data show that hypoxia promotes both angiogenesis and metastasis and, therefore, has a key role in tumour progression.154 Although a good correlation has been demonstrated between pimonidazole (a chemical probe of hypoxia) staining and outcome after radiotherapy in head-and-neck cancer,155 the same relationship has not been found in cervical cancer.156 One hypothesis put forward to explain these contrasting results is that hypoxia tolerance is more important than hypoxia itself.157

The use of fluorinated derivatives of such chemical probes also enables their detection by noninvasive PET.158,159,160 Although this approach requires administration of a tracer, it has the advantage of sampling the whole tumour rather than only a small part of it. Another possible surrogate marker of hypoxia is tumour vasculature. The prognostic significance of tumour vascularity has been assessed using both intercapillary distance (thought to reflect tumour oxygenation) and microvessel density (the 'hotspot' method, which provides a histological assessment of tumour angiogenesis). Some studies have found positive correlations with outcome, mainly using microvessel density in cervical cancer, whereas others have shown negative correlations.161 Concerns have also been raised about the extent to which randomly taken biopsies truly represent tumours that are usually large and heterogeneous.

Proliferation

If the overall radiotherapy treatment time is prolonged, for example for technical reasons (breakdown of a linear accelerator) or because the patient tolerates the treatment poorly, higher doses of radiation are required for tumour control. This requirement indicates that tumour proliferation has an important influence on outcome.162 Although proliferation during fractionated radiotherapy is an important determinant of outcome, reliable methods to measure it are not yet available. To understand why radiation leads to an accelerated repopulation response in some tumours and not in others, a greater understanding of the response at both the cellular and the molecular level is required.

Normal-tissue tolerance

Inherent differences in cellular radiosensitivity among patients contribute more to the variation in normal-tissue reactions than do other factors.164 Consequently, the radiation doses given to most patients might actually be too low for an optimal cure. Because 5% of patients are very sensitive, these patients skew what is considered 'optimal' radiotherapy towards the lower end of the dose spectrum, to the detriment of the majority of patients, who are less sensitive. Future CDSSs should be able to identify such highly sensitive patients and classify them separately, so that they receive different treatments from the less-sensitive patients.

Several small165 and large166 in vitro studies found a correlation between cellular radiosensitivity and the severity of late effects, namely radiation-induced fibrosis of the breast, but these findings were not consistent, because no standardized quality assurance exists for radiotherapy in vivo.167,168 Similar discrepancies were later found using rapid assays that measure chromosomal damage,169 DNA damage170 and clonogenic cell survival.171 Another assay, which measures radiation-induced lymphocyte apoptosis, has been used as a stratification factor in a prospective trial assessing late toxicity in patients with breast cancer who received letrozole as a radiosensitizer.172 Cytokines such as TGF-β, which influences fibroblast proliferation and differentiation, are known to have a central role in fibrosis and senescence.173,174 Currently, however, the relationships between the lymphocyte predictive assay, TGF-β and late complications are purely correlative, and a clear molecular explanation is lacking. Genome-wide association studies (GWAS) and analyses of single nucleotide polymorphisms (SNPs) in candidate genes have also shown promise in predicting normal-tissue tolerance,175,176 although their results have often not been confirmed in independent studies.177 In general, the variation observed in all these studies has been dominated by wide experimental variability rather than by true interindividual differences in radiosensitivity. Because normal-tissue tolerance is the dose-limiting factor for the administration of radiotherapy, any CDSS should be based on predictors of both tumour control and the probability of complications.

Representation of predictions

Although the decisions made while developing a predictive model determine the characteristics of the resulting multivariate model (for example, which features are selected and the overall prediction accuracy), the success of the model also depends on other factors, such as its availability and interactivity, which increase its acceptability. Even models based on large patient populations, with proper external validation, can fail to be accepted by the health-care community if the model and its output are not easily interpretable, if there is little opportunity to apply the model or if its clinical usefulness has not been proven or reported.178

Although some models, such as decision trees, implicitly have a visual representation that is somewhat interpretable, most models do not. One highly interpretable representation of a set of features is the nomogram.179 The nomogram was originally used in the early 20th century to make approximate graphical computations of mathematical equations. In medicine, nomograms have experienced a revival, reflected by the increasing number of studies reporting them.180,181,182,183,184 Figure 4 shows an example of a published clinical nomogram of local control in larynx cancer in which values for the selected features directly relate to a prediction score. The sum of these scores corresponds to a probability of local control within 2 or 5 years.181

Clinical and treatment variables are associated with local control status at follow-up durations of 2 and 5 years. The predictors are patient age (in years), haemoglobin level (in mmol/l), clinical tumour stage (T-stage), clinical nodal stage (N-stage), patient sex and equivalent dose (in Gy). To estimate the probability of local control, draw a vertical line from each predictor value up to the 'points' scale at the top and read off its score. After the scores are summed manually, the resulting 'total points' value is converted into a probability of local control by drawing a vertical line from it down to the probability scales at the bottom.181
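
Reading a nomogram is equivalent to evaluating the regression model behind it. The sketch below assumes a logistic model for local control at a single time point with three continuous predictors; the intercept, coefficients and axis ranges are hypothetical and are not those of the published larynx nomogram (which also includes T-stage, N-stage and sex, and reports 2-year and 5-year probabilities). Points are scaled so that the predictor with the widest range of effect spans 0 to 100, which is one common convention for drawing nomograms.

```python
import math

# Hypothetical logistic model for local control at one time point. The intercept,
# coefficients and ranges are invented for illustration only.
INTERCEPT = -8.0
PREDICTORS = {
    # name: (coefficient per unit, minimum value on the axis, maximum value on the axis)
    "age_years": (-0.02, 30.0, 90.0),
    "haemoglobin_mmol_per_l": (0.40, 5.0, 11.0),
    "equivalent_dose_gy": (0.10, 50.0, 80.0),
}


def lowest_contribution(beta, lo, hi):
    """Smallest value of beta * x over the axis range (the 0-point end of the axis)."""
    return min(beta * lo, beta * hi)


# Scale so that the predictor with the widest range of effect spans 0-100 points.
POINTS_PER_LP_UNIT = 100.0 / max(abs(b) * (hi - lo) for b, lo, hi in PREDICTORS.values())


def points(name, value):
    """Points read off the nomogram axis for one predictor value."""
    beta, lo, hi = PREDICTORS[name]
    return (beta * value - lowest_contribution(beta, lo, hi)) * POINTS_PER_LP_UNIT


def probability_from_total_points(total_points):
    """Map total points back to a probability (the bottom scale of the nomogram)."""
    baseline = INTERCEPT + sum(lowest_contribution(b, lo, hi) for b, lo, hi in PREDICTORS.values())
    return 1.0 / (1.0 + math.exp(-(baseline + total_points / POINTS_PER_LP_UNIT)))


def probability_direct(patient):
    """Evaluate the same logistic model directly, for comparison."""
    linear_predictor = INTERCEPT + sum(PREDICTORS[k][0] * v for k, v in patient.items())
    return 1.0 / (1.0 + math.exp(-linear_predictor))


patient = {"age_years": 65.0, "haemoglobin_mmol_per_l": 8.5, "equivalent_dose_gy": 70.0}
total = sum(points(name, value) for name, value in patient.items())
print(f"Total points {total:.0f}, P(local control) {probability_from_total_points(total):.2f}")
```

Because the points are a linear rescaling of the model's linear predictor, probability_from_total_points(total) agrees with probability_direct(patient) up to floating-point rounding.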

Another way to increase the acceptability of computer-assisted personalized medicine is to make prediction models available on the internet. If interactive, peer-reviewed models are provided with sufficient background information, clinicians can test them on their own patient data. Such a system would allow the wider community to validate the selected features retrospectively and would provide an indication of the clinical usefulness of the methodology. The best-known website with interactive clinical prediction tools is Adjuvant! Online.185 This website provides decision support for adjuvant therapy (for example, chemotherapy and hormone therapy) after surgery for patients with early-stage cancer. Many researchers have evaluated the models available on this website, thereby refining them with additional predictors and updated external validations.186,187 A prediction website that focuses on decision support for radiotherapy was recently established.188 Its aim is to let users work with and validate interactive models developed for patients with cancer treated with radiotherapy, which contributes to CDSS development in general by demonstrating the potential of these predictions and raising awareness of their existence and limitations.

Future prospects

The major focus of this Review, thus far, has been model development, validation and presentation (including the features from different domains that might be considered as predictive and prognostic). Although an accurate outcome prediction model forms the basis of a CDSS, additional considerations must be made before a new CDSS can be used in daily radiation oncology practice.

First, any decision a patient or physician makes is based on a balance between its benefits (survival, local control and quality of life) and its harms (toxic effects, complications, quality of life and financial cost). For example, an increased radiation dose usually results in both a higher probability of tumour control and a higher probability of normal-tissue complications. Identifying the right balance between harm and benefit is a deeply personal choice that can vary substantially among patients. Thus, a CDSS should simultaneously predict local control, survival, treatment toxicity, quality of life and cost, and should represent these predictions, and the balance between them, in a way that is clear not only to the physician but also to the patient, to enable shared decision making.
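
One simple way to express this balance is to choose the dose that maximizes TCP(D) − w·NTCP(D), where the weight w states how heavily a particular patient counts a complication relative to a lost chance of tumour control. The sketch below is a hypothetical illustration, not a validated decision rule: both dose-response curves are logistic with invented parameters, the dose grid is whole grays, and in practice w would have to be elicited from the patient.

```python
import math


def logistic_response(dose, d50, gamma50):
    """Logistic dose-response curve with 50% response at d50 and normalized slope gamma50."""
    return 1.0 / (1.0 + math.exp(4.0 * gamma50 * (1.0 - dose / d50)))


def tcp(dose):
    """Hypothetical tumour control probability curve."""
    return logistic_response(dose, d50=60.0, gamma50=2.0)


def ntcp(dose):
    """Hypothetical normal-tissue complication probability curve."""
    return logistic_response(dose, d50=80.0, gamma50=3.0)


def best_dose(harm_weight, doses=range(40, 101)):
    """Dose (Gy, whole-Gy grid) maximizing TCP - harm_weight * NTCP.

    harm_weight is a hypothetical patient-specific weight: how much one complication
    counts against one lost chance of tumour control.
    """
    return max(doses, key=lambda d: tcp(d) - harm_weight * ntcp(d))


for weight in (0.5, 1.0, 2.0):
    dose = best_dose(weight)
    print(f"harm weight {weight}: dose {dose} Gy, TCP {tcp(dose):.2f}, NTCP {ntcp(dose):.2f}")
```

A patient who weighs complications more heavily (larger w) is steered towards a lower dose, which is the kind of individualized trade-off a CDSS would need to present to both physician and patient.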

Additionally, any prediction made by a CDSS should be accompanied by a confidence interval. Accurately estimating this interval is an active and challenging area of research, because uncertainties in the input features, missing features, the size and quality of the training set and the intrinsic uncertainty of cancer outcome must all be incorporated to specify the uncertainty of the prediction for an individual patient. Without knowing whether two possible decisions differ in outcome by a statistically significant and clinically meaningful amount, clinical decision support is difficult. Sharing the data on which a model was based is a crucial prerequisite for this effort.
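
Of the sources of uncertainty listed above, the one arising from the finite size of the training set is the easiest to illustrate. The sketch below fits a one-predictor logistic regression by Newton-Raphson and uses a nonparametric percentile bootstrap to place an interval around the predicted risk for a single new patient. The data are synthetic, and the interval ignores uncertainty in the input features and in the model form, so it understates the full uncertainty described in the text.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, max_iter=25):
    """Fit P(y = 1) = sigmoid(b0 + b1 * x) by Newton-Raphson (maximum likelihood)."""
    b0 = b1 = 0.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = sigmoid(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p          # score (gradient of the log-likelihood)
            g1 += (y - p) * x
            h00 += w             # Fisher information matrix entries
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        step0 = (h11 * g0 - h01 * g1) / det
        step1 = (h00 * g1 - h01 * g0) / det
        b0 += step0
        b1 += step1
        if abs(step0) + abs(step1) < 1e-12:
            break
    return b0, b1


def bootstrap_interval(xs, ys, x_new, n_boot=300, level=0.95, seed=1):
    """Percentile bootstrap interval for the predicted risk of one new patient."""
    rng = random.Random(seed)
    n = len(xs)
    preds = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]   # resample patients with replacement
        b0, b1 = fit_logistic([xs[i] for i in idx], [ys[i] for i in idx])
        preds.append(sigmoid(b0 + b1 * x_new))
    preds.sort()
    lower = preds[int((1.0 - level) / 2.0 * n_boot)]
    upper = preds[int((1.0 + level) / 2.0 * n_boot) - 1]
    return lower, upper


# Synthetic training set: one standardized predictor, 200 patients.
rng = random.Random(0)
xs = [rng.uniform(-2.0, 2.0) for _ in range(200)]
ys = [1 if rng.random() < sigmoid(-0.5 + 1.0 * x) else 0 for x in xs]

b0, b1 = fit_logistic(xs, ys)
low, high = bootstrap_interval(xs, ys, x_new=1.5)
print(f"Predicted risk {sigmoid(b0 + b1 * 1.5):.2f}, 95% bootstrap interval {low:.2f} to {high:.2f}")
```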

Current prediction models for decision support can assist only with very high-level decisions, such as palliative versus curative treatment, sequential versus concurrent chemoradiation, or surgery versus a watch-and-wait approach. The radiation oncology community, however, is probably more interested in decisions such as intensity-modulated radiotherapy versus 3D-conformal radiotherapy, or accelerated versus nonaccelerated treatment. Current prediction models have simply not been trained on datasets containing these detailed subgroups and are therefore not accurate enough to support such decisions. Whether learning from increasingly diverse patient groups and adding other features will sufficiently improve current models is unclear. Tightly controlled studies using evidence-based medicine approaches therefore remain crucial to guide clinical practice.

Finally, CDSSs should be regarded as medical devices that require stringent acceptance testing, commissioning and quality assurance by the local institution. The key part of commissioning and subsequent quality assurance is to validate the accuracy of the prediction model in the local patient population: local patient data should be collected and the predicted outcomes compared with the actual outcomes, to convince local physicians that the support system works in their setting. This 'local validation' should be done at commissioning and then repeated, to ensure that the decision support remains valid despite changes in local practice. Validation studies will need to establish how often such recommissioning is required.
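
A local validation can be summarized with a few standard performance measures computed on locally collected predictions and outcomes: calibration-in-the-large (mean predicted risk versus observed event rate), calibration within risk groups, the Brier score and the concordance (c) statistic, which equals the area under the ROC curve. The function below is a minimal sketch of such a report; the choice of measures, the number of risk groups and the small example cohort are assumptions, and a real commissioning protocol would also need confidence intervals and prespecified acceptance thresholds.

```python
def local_validation_summary(predicted, observed, n_groups=5):
    """Compare a model's predicted risks with observed binary outcomes (1 = event).

    Returns calibration-in-the-large, grouped calibration, Brier score and c-statistic.
    Assumes both outcome classes are present and len(predicted) >= n_groups.
    """
    n = len(predicted)
    brier = sum((p - y) ** 2 for p, y in zip(predicted, observed)) / n

    # c-statistic: share of (event, non-event) pairs in which the event received the
    # higher predicted risk; ties count as one half.
    events = [p for p, y in zip(predicted, observed) if y == 1]
    non_events = [p for p, y in zip(predicted, observed) if y == 0]
    concordant = sum(1.0 if e > ne else 0.5 if e == ne else 0.0
                     for e in events for ne in non_events)
    c_statistic = concordant / (len(events) * len(non_events))

    # Grouped calibration: sort by predicted risk, split into roughly equal groups and
    # compare the mean predicted risk with the observed event rate in each group.
    ordered = sorted(zip(predicted, observed))
    groups = []
    for g in range(n_groups):
        chunk = ordered[g * n // n_groups:(g + 1) * n // n_groups]
        mean_pred = sum(p for p, _ in chunk) / len(chunk)
        obs_rate = sum(y for _, y in chunk) / len(chunk)
        groups.append((mean_pred, obs_rate))

    return {
        "mean_predicted": sum(predicted) / n,
        "observed_rate": sum(observed) / n,
        "brier": brier,
        "c_statistic": c_statistic,
        "calibration_groups": groups,
    }


# Hypothetical local cohort: predictions from the commissioned model and observed outcomes.
predicted = [0.10, 0.15, 0.20, 0.30, 0.35, 0.50, 0.55, 0.70, 0.80, 0.90]
observed = [0, 0, 1, 0, 0, 1, 0, 1, 1, 1]
report = local_validation_summary(predicted, observed, n_groups=2)
print(report)
```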

This required quality assurance also enables the improvement of the system as more patient data becomes available. Using routine patient data to extract knowledge and apply that knowledge immediately is called 'rapid learning'.3,189 Rapid learning via continuously updated CDSSs offers a way to quickly learn from retrospective data and include new data sets (such as randomized controlled trial results) to adapt treatment protocols and deliver personalized decision support.
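
One standard technique for updating a logistic prediction model with new local data is logistic recalibration: the original model is kept, but a new intercept a and slope b are fitted on the logit of its predictions using the newest outcomes, so that the updated risk is 1/(1 + exp(−(a + b·logit(p)))). The text does not specify an updating method, so the sketch below is an assumed illustration; it uses synthetic data in which a published model overestimates risk in the local population.

```python
import math
import random


def logit(p):
    return math.log(p / (1.0 - p))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def recalibrate(predicted, observed, max_iter=50):
    """Fit observed ~ sigmoid(a + b * logit(predicted)) by Newton-Raphson.

    Starts from a = 0, b = 1, i.e. from the original model unchanged.
    """
    lps = [logit(p) for p in predicted]
    a, b = 0.0, 1.0
    for _ in range(max_iter):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(lps, observed):
            p = sigmoid(a + b * x)
            w = p * (1.0 - p)
            g0 += y - p
            g1 += (y - p) * x
            h00 += w
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        step_a = (h11 * g0 - h01 * g1) / det
        step_b = (h00 * g1 - h01 * g0) / det
        a += step_a
        b += step_b
        if abs(step_a) + abs(step_b) < 1e-12:
            break
    return a, b


def apply_recalibration(p, a, b):
    """Updated risk for a patient whose original predicted risk is p."""
    return sigmoid(a + b * logit(p))


# Synthetic example: predictions from a published model for 2,000 local patients,
# with local outcomes generated so that the published model overestimates risk.
rng = random.Random(42)
published = [rng.uniform(0.05, 0.95) for _ in range(2000)]
local_outcomes = [1 if rng.random() < apply_recalibration(p, -0.7, 0.8) else 0 for p in published]

a, b = recalibrate(published, local_outcomes)
print(f"Recalibration intercept {a:.2f}, slope {b:.2f}")
```

Rerunning recalibrate each time a new batch of outcomes arrives is a simple instance of the rapid-learning loop; a fitted intercept near 0 and slope near 1 would indicate that the original model still holds locally.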

As a data-driven discipline with well-established standards, such as DICOM–RT (digital imaging and communications in medicine in radiotherapy), radiotherapy offers an excellent starting point for adopting these rapid-learning principles (Figure 5). Besides local data capture, which is still often lacking, particularly for patient-reported outcomes and toxicity, the quantity and heterogeneity of data necessary for rapid learning require data to be pooled in a multi-institutional, international fashion.190,191 One way of pooling data is to replicate routine clinical data sources in a distributed, de-identified data warehouse, as is done in the international Computer-Aided Theragnostics network.192 Examples of initiatives that create large centralized data and tissue infrastructures for routine radiation oncology patients are GENEPI,193 the Radiogenomics Consortium,194 ALLEGRO58 and ULICE.195 These initiatives also facilitate studies of external validation and reproducibility, as well as hypothesis generation.190

As datasets become larger (both in number of patients and in number of features per patient), high-throughput methods, both molecular196,197,198,199,200,201 and imaging-based,79 can produce large numbers of features that correlate with outcome.68,70,202,203,204 Even limited application of these techniques has already transformed our understanding of radiotherapy response. For example, GWAS have associated SNPs with radiation toxicity.205,206 Similarly, mRNA-abundance microarrays have been used to predict tumour response and normal-tissue toxicity in both patient and in-vitro studies,207,208,209,210,211 as well as to create markers that reflect biological phenotypes important for radiation response, such as hypoxia212,213 and proliferation.214 Both data analysis and validation are important but challenging aspects of model development.196,215 For example, the studies described above207,208,209,210,211,212,213,214 suffer from a substantial multiple-testing problem (a large number of measured features relative to the number of samples), which renders their results preliminary. Human input and large, robust validation studies are therefore needed before features from high-throughput techniques can be included in CDSSs.216,217,218
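
The scale of the multiple-testing problem is easy to demonstrate by simulation. The sketch below generates 2,000 features of pure noise for 40 hypothetical patients, tests each feature for linear correlation with a random outcome (using the Fisher z approximation for the p-value) and counts how many appear 'significant' with and without Bonferroni or Benjamini-Hochberg correction. The sample size, feature count and outcome are arbitrary assumptions chosen only to mimic a setting with far more features than samples.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def p_value(r, n):
    """Two-sided p-value for H0: correlation = 0, via the Fisher z approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return math.erfc(abs(z) / math.sqrt(2.0))


def benjamini_hochberg(pvals, q=0.05):
    """Indices of hypotheses rejected at false discovery rate q (step-up procedure)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    k = 0
    for rank, i in enumerate(order, start=1):
        if pvals[i] <= rank / m * q:
            k = rank          # largest rank that meets its threshold
    return set(order[:k])


rng = random.Random(7)
n_patients, n_features = 40, 2000
outcome = [rng.gauss(0.0, 1.0) for _ in range(n_patients)]       # e.g. a toxicity score
features = [[rng.gauss(0.0, 1.0) for _ in range(n_patients)]     # pure noise, no signal
            for _ in range(n_features)]
pvals = [p_value(pearson_r(f, outcome), n_patients) for f in features]

naive = sum(p < 0.05 for p in pvals)
bonferroni = sum(p < 0.05 / n_features for p in pvals)
fdr = len(benjamini_hochberg(pvals))
print(f"'Significant' noise features: uncorrected {naive}, Bonferroni {bonferroni}, BH {fdr}")
```

In expectation about 5% of the noise features pass an uncorrected p < 0.05 threshold, whereas essentially none survive correction, which is why single-cohort high-throughput findings need independent validation.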

Although studies of a single feature can be informative, only combining features into multimodal, multivariate models can be expected to provide a more holistic view of the response to radiation. By combining events at different levels using systems-biology-like approaches, it should become possible to create tumour-specific and patient-specific models of the effects and implications of radiation therapy (Figure 6). Indeed, future studies will need not only to identify the individual components related to radiation response, but also to establish the interactions and relations among them.219 Although this approach has not yet been applied to model radiotherapy responses, at least one study has demonstrated that combining multiple high-throughput data types can be used to map molecular cancer characteristics.220 Combining models at different levels (societal, patient, whole tumour or organ, local tumour or organ, and cellular) is expected to lead to an increasingly holistic and accurate CDSS for the individual patient. Evidence is growing that longitudinal data, for example from repeated PET imaging221 and tumour-perfusion222 studies, add value to outcome prediction, implying that such data should be considered as candidate inputs for future CDSSs.

a | On the basis of in-vitro, in-vivo and patient data, modules representing the three biological categories (gene expression, immunohistochemical data and mutation data) important for radiotherapy response can be created. b | For an individual patient, appropriate molecular data will be accumulated. c | Combining the individual patient data with the modules will provide knowledge on specific module alterations (such as a deletion [X], upregulation [red] or downregulation [blue]), which can be translated into information on relative radioresistance and the molecular 'weak' spots of the tumour. This information will subsequently indicate whether dose escalation is necessary and which targeted drug is most effective for the patient. Part b used with permission from the National Academy of Sciences © Dubois, L. J. et al. Proc. Natl Acad. Sci. USA 108, 14620–14625 (2011).

Despite the challenges that remain, the vision of predictive models leading to CDSSs that are continuously updated via rapid learning on large datasets is clear, and numerous steps have already been taken. The remaining challenges include establishing universal data-quality assurance programmes and resolving semantic interoperability issues.223 Nevertheless, we believe that this truly innovative journey will lead to necessary improvements in health-care effectiveness and efficiency. Indeed, investments are being made in research and innovation for health-informatics systems, with an emphasis on interoperability and standards for secure data transfer, which suggests that 'eHealth' will be among the largest health-care innovations of the coming decade.223,224

Conclusions

Accurate, externally validated prediction models are being rapidly developed, whereby multiple features related to the patient's disease are combined into an integrated prediction. The key, however, is standardization, mainly of data acquisition across all areas, including molecular-based and imaging-based assays, patient preferences and possible treatments. Standardization requires harmonized clinical guidelines, regulated image acquisition and analysis parameters, validated biomarker assay criteria and data-sharing methods that use identical ontologies. Assessing the clinical usefulness of any CDSS, preferably through standardized clinical trial designs, is just as important as standardizing the development of accurate, externally validated prediction models from high-quality data. These crucial steps are the basis for validating a CDSS, which, in turn, will stimulate developments in rapid-learning healthcare and enable the next major advances in shared decision making.

Review criteria

The PubMed and MEDLINE databases were searched for articles published in English (not restricted by date of publication) using a range of key phrases, including but not limited to: “PET imaging”, “heterogeneity in imaging”, “tumour response in radiotherapy”.

References

Vogelzang, N. J. et al. Clinical cancer advances 2011: annual report on progress against cancer from the American Society of Clinical Oncology. J. Clin. Oncol. 30, 88–109 (2012).

Fraass, B. A. & Moran, J. M. Quality, technology and outcomes: evolution and evaluation of new treatments and/or new technology. Semin. Radiat. Oncol. 22, 3–10 (2012).

Abernethy, A. P. et al. Rapid-learning system for cancer care. J. Clin. Oncol. 28, 4268–4274 (2010).

Maitland, M. L. & Schilsky, R. L. Clinical trials in the era of personalized oncology. CA Cancer J. Clin. 61, 365–381 (2011).

Gerlinger, M. et al. Intratumor heterogeneity and branched evolution revealed by multiregion sequencing. N. Engl. J. Med. 366, 883–892 (2012).

Bachtiary, B. et al. Gene expression profiling in cervical cancer: an exploration of intratumor heterogeneity. Clin. Cancer Res. 12, 5632–5640 (2006).

Boyd, C. A., Benarroch-Gampel, J., Sheffield, K. M., Cooksley, C. D. & Riall, T. S. 415 patients with adenosquamous carcinoma of the pancreas: a population-based analysis of prognosis and survival. J. Surg. Res. 174, 12–19 (2012).

Milosevic, M. F. et al. Interstitial fluid pressure in cervical carcinoma: within tumor heterogeneity, and relation to oxygen tension. Cancer 82, 2418–2426 (1998).

Curtis, C. et al. The genomic and transcriptomic architecture of 2,000 breast tumours reveals novel subgroups. Nature 486, 346–352 (2012).

Suit, H., Skates, S., Taghian, A., Okunieff, P. & Efird, J. T. Clinical implications of heterogeneity of tumor response to radiation therapy. Radiother. Oncol. 25, 251–260 (1992).

Aerts, H. J. et al. Identification of residual metabolic-active areas within NSCLC tumours using a pre-radiotherapy FDG-PET-CT scan: a prospective validation. Lung Cancer 75, 73–76 (2012).

Aerts, H. J. et al. Identification of residual metabolic-active areas within individual NSCLC tumours using a pre-radiotherapy (18)Fluorodeoxyglucose–PET–CT scan. Radiother. Oncol. 91, 386–392 (2009).

Vickers, A. J. Prediction models: revolutionary in principle, but do they do more good than harm? J. Clin. Oncol. 29, 2951–2952 (2011).

Bright, T. J. et al. Effect of clinical decision-support systems: a systematic review. Ann. Intern. Med. 157, 29–43 (2012).

Clark, G. M. Prognostic factors versus predictive factors: examples from a clinical trial of erlotinib. Mol. Oncol. 1, 406–412 (2008).

Dancey, J. E. et al. Guidelines for the development and incorporation of biomarker studies in early clinical trials of novel agents. Clin. Cancer Res. 16, 1745–1755 (2010).

Peek, N., Arts, D. G., Bosman, R. J., van der Voort, P. H. & de Keizer, N. F. External validation of prognostic models for critically ill patients required substantial sample sizes. J. Clin. Epidemiol. 60, 491–501 (2007).

Vergouwe, Y., Steyerberg, E. W., Eijkemans, M. J. & Habbema, J. D. Substantial effective sample sizes were required for external validation studies of predictive logistic regression models. J. Clin. Epidemiol. 58, 475–483 (2005).

Steyerberg, E. W. et al. Assessing the performance of prediction models: a framework for traditional and novel measures. Epidemiology 21, 128–138 (2010).

Aittokallio, T. Dealing with missing values in large-scale studies: microarray data imputation and beyond. Brief. Bioinform. 11, 253–264 (2010).

Ludbrook, J. Outlying observations and missing values: how should they be handled? Clin. Exp. Pharmacol. Physiol. 35, 670–678 (2008).

Jayalakshmi, T. & Santhakumaran, A. Statistical normalization and back propagation for classification. Int. J. Comput. Theory Eng. 3, 89–93 (2011).

Liu, H. & Motoda, H. Feature Selection for Knowledge Discovery and Data Mining (Kluwer Academic Publishers, Norwell, MA, 1998).

Harrell, F. E. Regression Modeling Strategies (Springer, New York, 2001).

Bishop, C. M. Pattern Recognition and Machine Learning (Springer, New York, 2007).

Lee, S. M. & Abbott, P. A. Bayesian networks for knowledge discovery in large datasets: basics for nurse researchers. J. Biomed. Inform. 36, 389–399 (2003).

Cruz, J. A. & Wishart, D. S. Applications of machine learning in cancer prediction and prognosis. Cancer Inform. 2, 59–77 (2007).

Putter, H., Fiocco, M. & Geskus, R. B. Tutorial in biostatistics: competing risks and multi-state models. Stat. Med. 26, 2389–2430 (2007).

Moons, K. G. et al. Risk prediction models: II. External validation, model updating, and impact assessment. Heart 98, 691–698 (2012).

Dehing-Oberije, C. et al. Development, external validation and clinical usefulness of a practical prediction model for radiation-induced dysphagia in lung cancer patients. Radiother. Oncol. 97, 455–461 (2010).

Specht, M. C., Kattan, M. W., Gonen, M., Fey, J. & Van Zee, K. J. Predicting nonsentinel node status after positive sentinel lymph biopsy for breast cancer: clinicians versus nomogram. Ann. Surg. Oncol. 12, 654–659 (2005).

Dehing-Oberije, C. et al. Tumor volume combined with number of positive lymph node stations is a more important prognostic factor than TNM stage for survival of non-small-cell lung cancer patients treated with (chemo)radiotherapy. Int. J. Radiat. Oncol. Biol. Phys. 70, 1039–1044 (2008).

Vickers, A. J., Kramer, B. S. & Baker, S. G. Selecting patients for randomized trials: a systematic approach based on risk group. Trials 7, 30 (2006).

Baggerly, K. A. & Coombes, K. R. What information should be required to support clinical “omics” publications? Clin. Chem. 57, 688–690 (2011).

Klopp, A. H. & Eifel, P. J. Biological predictors of cervical cancer response to radiation therapy. Semin. Radiat. Oncol. 22, 143–150 (2012).

Kristiansen, G. Diagnostic and prognostic molecular biomarkers for prostate cancer. Histopathology 60, 125–141 (2012).

Dehing-Oberije, C. et al. Development and external validation of prognostic model for 2-year survival of non-small-cell lung cancer patients treated with chemoradiotherapy. Int. J. Radiat. Oncol. Biol. Phys. 74, 355–362 (2009).

Ang, C. S., Phung, J. & Nice, E. C. The discovery and validation of colorectal cancer biomarkers. Biomed. Chromatogr. 25, 82–99 (2011).

Schmidt, M. E. & Steindorf, K. Statistical methods for the validation of questionnaires--discrepancy between theory and practice. Methods Inf. Med. 45, 409–413 (2006).

Garrido-Laguna, I. et al. Validation of the Royal Marsden Hospital prognostic score in patients treated in the Phase I Clinical Trials Program at the MD Anderson Cancer Center. Cancer 118, 1422–1428 (2012).

Shrier, I. et al. Should meta-analyses of interventions include observational studies in addition to randomized controlled trials? A critical examination of underlying principles. Am. J. Epidemiol. 166, 1203–1209 (2007).

Tzoulaki, I., Siontis, K. C. & Ioannidis, J. P. Prognostic effect size of cardiovascular biomarkers in datasets from observational studies versus randomised trials: meta-epidemiology study. BMJ 343, d6829 (2011).

Trotti, A., Colevas, A. D., Setser, A. & Basch, E. Patient-reported outcomes and the evolution of adverse event reporting in oncology. J. Clin. Oncol. 25, 5121–5127 (2007).

Trotti, A. et al. CTCAE v3.0: development of a comprehensive grading system for the adverse effects of cancer treatment. Semin. Radiat. Oncol. 13, 176–181 (2003).

Golder, S., Loke, Y. K. & Bland, M. Meta-analyses of adverse effects data derived from randomised controlled trials as compared to observational studies: methodological overview. PLoS Med. 8, e1001026 (2011).

Steg, P. G. et al. External validity of clinical trials in acute myocardial infarction. Arch. Intern. Med. 167, 68–73 (2007).

Little, J. et al. Strengthening the reporting of genetic association studies (STREGA): an extension of the STROBE statement. Ann. Intern. Med. 150, 206–215 (2009).

von Elm, E. et al. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. Lancet 370, 1453–1457 (2007).

Dawson, L. A. & Sharpe, M. B. Image-guided radiotherapy: rationale, benefits, and limitations. Lancet Oncol. 7, 848–858 (2006).

Bentzen, S. M. et al. Quantitative analyses of normal tissue effects in the clinic (QUANTEC): an introduction to the scientific issues. Int. J. Radiat. Oncol. Biol. Phys. 76 (Suppl. 3), S3–S9 (2010).

Verellen, D. et al. Innovations in image-guided radiotherapy. Nat. Rev. Cancer 7, 949–960 (2007).

Holthusen, H. Erfahrungen über die Verträglichkeitsgrenze für Röntgenstrahlen und deren Nutzanwendung zur Verhütung von Schäden [German]. Strahlentherapie 57, 254–269 (1936).

Valentini, V. et al. Nomograms for predicting local recurrence, distant metastases, and overall survival for patients with locally advanced rectal cancer on the basis of European randomized clinical trials. J. Clin. Oncol. 29, 3163–3172 (2011).

Belderbos, J. et al. Randomised trial of sequential versus concurrent chemo-radiotherapy in patients with inoperable non-small cell lung cancer (EORTC 08972–22973). Eur. J. Cancer 43, 114–121 (2007).

Lambin, P. et al. The ESTRO Breur Lecture 2009. From population to voxel-based radiotherapy: exploiting intra-tumour and intra-organ heterogeneity for advanced treatment of non-small cell lung cancer. Radiother. Oncol. 96, 145–152 (2010).

Graham, M. V. et al. Clinical dose-volume histogram analysis for pneumonitis after 3D treatment for non-small cell lung cancer (NSCLC). Int. J. Radiat. Oncol. Biol. Phys. 45, 323–329 (1999).

Emami, B. et al. Tolerance of normal tissue to therapeutic irradiation. Int. J. Radiat. Oncol. Biol. Phys. 21, 109–122 (1991).

Ottolenghi, A., Smyth, V. & Trott, K. R. The risks to healthy tissues from the use of existing and emerging techniques for radiation therapy. Radiat. Prot. Dosimetry 143, 533–535 (2011).

Beetz, I. et al. NTCP models for patient-rated xerostomia and sticky saliva after treatment with intensity modulated radiotherapy for head and neck cancer: the role of dosimetric and clinical factors. Radiother. Oncol. http://dx.doi.org/10.1016/j.radonc.2012.03.004.

van der Schaaf, A. et al. Multivariate modeling of complications with data driven variable selection: guarding against overfitting and effects of data set size. Radiother. Oncol. http://dx.doi.org/10.1016/j.radonc.2011.12.006.

Xu, C.-J., van der Schaaf, A., van 't Veld, A. A., Langendijk, J. A. & Schilstra, C. Statistical validation of normal tissue complication probability models. Int. J. Radiat. Oncol. Biol. Phys. 84, e123–e129 (2012).

Nijsten, S. M., Mijnheer, B. J., Dekker, A. L., Lambin, P. & Minken, A. W. Routine individualised patient dosimetry using electronic portal imaging devices. Radiother. Oncol. 83, 65–75 (2007).

van Elmpt, W., Petit, S., De Ruysscher, D., Lambin, P. & Dekker, A. 3D dose delivery verification using repeated cone-beam imaging and EPID dosimetry for stereotactic body radiotherapy of non-small cell lung cancer. Radiother. Oncol. 94, 188–194 (2010).

van Elmpt, W. et al. 3D in vivo dosimetry using megavoltage cone-beam CT and EPID dosimetry. Int. J. Radiat. Oncol. Biol. Phys. 73, 1580–1587 (2009).

Rodrigues, G., Lock, M., D'Souza, D., Yu, E. & Van Dyk, J. Prediction of radiation pneumonitis by dose–volume histogram parameters in lung cancer—a systematic review. Radiother. Oncol. 71, 127–138 (2004).

Werner-Wasik, M., Yorke, E., Deasy, J., Nam, J. & Marks, L. B. Radiation dose-volume effects in the esophagus. Int. J. Radiat. Oncol. Biol. Phys. 76 (Suppl. 3), S86–S93 (2010).

Saunders, M., Rojas, A. M. & Dische, S. CHART revisited: a conservative approach for advanced head and neck cancer. Clin. Oncol. (R. Coll. Radiol.) 20, 127–133 (2008).

Turner, N. et al. Integrative molecular profiling of triple negative breast cancers identifies amplicon drivers and potential therapeutic targets. Oncogene 29, 2013–2023 (2010).

Begg, A. C. et al. The value of pretreatment cell kinetic parameters as predictors for radiotherapy outcome in head and neck cancer: a multicenter analysis. Radiother. Oncol. 50, 13–23 (1999).

Taguchi, F. et al. Mass spectrometry to classify non-small-cell lung cancer patients for clinical outcome after treatment with epidermal growth factor receptor tyrosine kinase inhibitors: a multicohort cross-institutional study. J. Natl Cancer Inst. 99, 838–846 (2007).

Hessel, F. et al. Impact of increased cell loss on the repopulation rate during fractionated irradiation in human FaDu squamous cell carcinoma growing in nude mice. Int. J. Radiat. Biol. 79, 479–486 (2003).

Baumann, M., Krause, M. & Hill, R. Exploring the role of cancer stem cells in radioresistance. Nat. Rev. Cancer 8, 545–554 (2008).

Ben-Josef, E. et al. Impact of overall treatment time on survival and local control in patients with anal cancer: a pooled data analysis of Radiation Therapy Oncology Group trials 87–04 and 98–11. J. Clin. Oncol. 28, 5061–5066 (2010).

Thames, H. D. et al. The role of overall treatment time in the outcome of radiotherapy of prostate cancer: an analysis of biochemical failure in 4839 men treated between 1987 and 1995. Radiother. Oncol. 96, 6–12 (2010).

Fass, L. Imaging and cancer: a review. Mol. Oncol. 2, 115–152 (2008).

Torigian, D. A., Huang, S. S., Houseni, M. & Alavi, A. Functional imaging of cancer with emphasis on molecular techniques. CA Cancer J. Clin. 57, 206–224 (2007).

Eadie, L. H., Taylor, P. & Gibson, A. P. A systematic review of computer-assisted diagnosis in diagnostic cancer imaging. Eur. J. Radiol. 81, e70–e76 (2012).

Gillies, R. J., Anderson, A. R., Gatenby, R. A. & Morse, D. L. The biology underlying molecular imaging in oncology: from genome to anatome and back again. Clin. Radiol. 65, 517–521 (2010).

Lambin, P. et al. Radiomics: extracting more information from medical images using advanced feature analysis. Eur. J. Cancer 48, 441–446 (2012).

Velazquez, E. R., Aerts, H. J., Oberije, C., De Ruysscher, D. & Lambin, P. Prediction of residual metabolic activity after treatment in NSCLC patients. Acta Oncol. 49, 1033–1039 (2010).

Cangir, A. K. et al. Prognostic value of tumor size in non-small cell lung cancer larger than five centimeters in diameter. Lung Cancer 46, 325–331 (2004).

Lam, J. S. et al. Prognostic relevance of tumour size in T3a renal cell carcinoma: a multicentre experience. Eur. Urol. 52, 155–162 (2007).

Pitson, G. et al. Tumor size and oxygenation are independent predictors of nodal diseases in patients with cervix cancer. Int. J. Radiat. Oncol. Biol. Phys. 51, 699–703 (2001).

Thomas, F. et al. Radical radiotherapy alone in non-operable breast cancer: the major impact of tumor size and histological grade on prognosis. Radiother. Oncol. 13, 267–276 (1988).

Steenbakkers, R. J. et al. Observer variation in target volume delineation of lung cancer related to radiation oncologist-computer interaction: a 'Big Brother' evaluation. Radiother. Oncol. 77, 182–190 (2005).

Greco, C., Rosenzweig, K., Cascini, G. L. & Tamburrini, O. Current status of PET/CT for tumour volume definition in radiotherapy treatment planning for non-small cell lung cancer (NSCLC). Lung Cancer 57, 125–134 (2007).

Caldwell, C. B. et al. Observer variation in contouring gross tumor volume in patients with poorly defined non-small-cell lung tumors on CT: the impact of 18FDG-hybrid PET fusion. Int. J. Radiat. Oncol. Biol. Phys. 51, 923–931 (2001).

Bowden, P. et al. Measurement of lung tumor volumes using three-dimensional computer planning software. Int. J. Radiat. Oncol. Biol. Phys. 53, 566–573 (2002).

Nishino, M. et al. CT tumor volume measurement in advanced non-small-cell lung cancer: performance characteristics of an emerging clinical tool. Acad. Radiol. 18, 54–62 (2011).

Marcus, C. D. et al. Imaging techniques to evaluate the response to treatment in oncology: current standards and perspectives. Crit. Rev. Oncol. Hematol. 72, 217–238 (2009).

Schwartz, L. H., Mazumdar, M., Brown, W., Smith, A. & Panicek, D. M. Variability in response assessment in solid tumors: effect of number of lesions chosen for measurement. Clin. Cancer Res. 9, 4318–4323 (2003).

Erasmus, J. J. et al. Interobserver and intraobserver variability in measurement of non-small-cell carcinoma lung lesions: implications for assessment of tumor response. J. Clin. Oncol. 21, 2574–2582 (2003).

Therasse, P. Measuring the clinical response. What does it mean? Eur. J. Cancer 38, 1817–1823 (2002).

Nehmeh, S. A. & Erdi, Y. E. Respiratory motion in positron emission tomography/computed tomography: a review. Semin. Nucl. Med. 38, 167–176 (2008).

Sonke, J. J. & Belderbos, J. Adaptive radiotherapy for lung cancer. Semin. Radiat. Oncol. 20, 94–106 (2010).

van Baardwijk, A. et al. PET-CT-based auto-contouring in non-small-cell lung cancer correlates with pathology and reduces interobserver variability in the delineation of the primary tumor and involved nodal volumes. Int. J. Radiat. Oncol. Biol. Phys. 68, 771–778 (2007).

Wu, K. et al. PET CT thresholds for radiotherapy target definition in non-small-cell lung cancer: how close are we to the pathologic findings? Int. J. Radiat. Oncol. Biol. Phys. 77, 699–706 (2010).

Wanet, M. et al. Gradient-based delineation of the primary GTV on FDG-PET in non-small cell lung cancer: a comparison with threshold-based approaches, CT and surgical specimens. Radiother. Oncol. 98, 117–125 (2011).

Strassmann, G. et al. Atlas-based semiautomatic target volume definition (CTV) for head-and-neck tumors. Int. J. Radiat. Oncol. Biol. Phys. 78, 1270–1276 (2010).

Nestle, U. et al. Comparison of different methods for delineation of 18F-FDG PET-positive tissue for target volume definition in radiotherapy of patients with non-small cell lung cancer. J. Nucl. Med. 46, 1342–1348 (2005).

Daisne, J. F. et al. Tumor volume in pharyngolaryngeal squamous cell carcinoma: comparison at CT, MR imaging, and FDG PET and validation with surgical specimen. Radiology 233, 93–100 (2004).

van Loon, J. et al. Therapeutic implications of molecular imaging with PET in the combined modality treatment of lung cancer. Cancer Treat. Rev. 37, 331–343 (2011).

Wood, K. A., Hoskin, P. J. & Saunders, M. I. Positron emission tomography in oncology: a review. Clin. Oncol. 19, 237–255 (2007).

O'Connor, J. P. et al. Quantitative imaging biomarkers in the clinical development of targeted therapeutics: current and future perspectives. Lancet Oncol. 9, 766–776 (2008).

van Baardwijk, A. et al. Time trends in the maximal uptake of FDG on PET scan during thoracic radiotherapy. A prospective study in locally advanced non-small cell lung cancer (NSCLC) patients. Radiother. Oncol. 82, 145–152 (2007).

Rodney, J. H. PET for therapeutic response monitoring in oncology. PET Clin. 3, 89–99 (2008).

Chung, H. H. et al. Prognostic value of metabolic tumor volume measured by FDG-PET/CT in patients with cervical cancer. Gynecol. Oncol. 120, 270–274 (2011).

Borst, G. R. et al. Standardised FDG uptake: a prognostic factor for inoperable non-small cell lung cancer. Eur. J. Cancer 41, 1533–1541 (2005).

Mac Manus, M. P. et al. Metabolic (FDG–PET) response after radical radiotherapy/chemoradiotherapy for non-small cell lung cancer correlates with patterns of failure. Lung Cancer 49, 95–108 (2005).

Hoekstra, C. J. et al. Prognostic relevance of response evaluation using [18F]-2-fluoro-2-deoxy-D-glucose positron emission tomography in patients with locally advanced non-small-cell lung cancer. J. Clin. Oncol. 23, 8362–8370 (2005).

Soto, D. E., Kessler, M. L., Piert, M. & Eisbruch, A. Correlation between pretreatment FDG–PET biological target volume and anatomical location of failure after radiation therapy for head and neck cancers. Radiother. Oncol. 89, 13–18 (2008).

Lambrecht, M. et al. The use of FDG–PET/CT and diffusion-weighted magnetic resonance imaging for response prediction before, during and after preoperative chemoradiotherapy for rectal cancer. Acta Oncol. 49, 956–963 (2010).

Janssen, M. H. M. et al. Evaluation of early metabolic responses in rectal cancer during combined radiochemotherapy or radiotherapy alone: sequential FDG–PET–CT findings. Radiother. Oncol. 94, 151–155 (2010).

Ceulemans, G. et al. Can 18-FDG-PET during radiotherapy replace post-therapy scanning for detection/demonstration of tumor response in head-and-neck cancer? Int. J. Radiat. Oncol. Biol. Phys. 81, 938–942 (2011).

van Loon, J. et al. Early CT and FDG-metabolic tumour volume changes show a significant correlation with survival in stage I-III small cell lung cancer: a hypothesis generating study. Radiother. Oncol. 99, 172–175 (2011).

Bussink, J., Kaanders, J. H., van der Graaf, W. T. & Oyen, W. J. PET–CT for radiotherapy treatment planning and response monitoring in solid tumors. Nat. Rev. Clin. Oncol. 8, 233–242 (2011).

Boellaard, R. Need for standardization of 18F-FDG PET/CT for treatment response assessments. J. Nucl. Med. 52 (Suppl. 2), 93–100 (2011).

Boellaard, R. et al. The Netherlands protocol for standardisation and quantification of FDG whole body PET studies in multi-centre trials. Eur. J. Nucl. Med. Mol. Imaging 35, 2320–2333 (2008).

Boellaard, R. et al. FDG PET and PET/CT: EANM procedure guidelines for tumour PET imaging: version 1.0. Eur. J. Nucl. Med. Mol. Imaging 37, 181–200 (2010).

Bayouth, J. E. et al. Image-based biomarkers in clinical practice. Semin. Radiat. Oncol. 21, 157–166 (2011).

Harry, V. N., Semple, S. I., Parkin, D. E. & Gilbert, F. J. Use of new imaging techniques to predict tumour response to therapy. Lancet Oncol. 11, 92–102 (2010).

Heijmen, L. et al. Tumour response prediction by diffusion-weighted MR imaging: ready for clinical use? Crit. Rev. Oncol. Hematol. 83, 194–207 (2012).

Lambrecht, M. et al. The prognostic value of pretherapeutic diffusion-weighted MRI in oropharyngeal carcinoma treated with (chemo-)radiotherapy. Cancer Imaging 11, S112–S113 (2011).

Vandecaveye, V. et al. Diffusion-weighted magnetic resonance imaging early after chemoradiotherapy to monitor treatment response in head-and-neck squamous cell carcinoma. Int. J. Radiat. Oncol. Biol. Phys. 82, 1098–1107 (2012).

Kim, S. Y. et al. Malignant hepatic tumors: short-term reproducibility of apparent diffusion coefficients with breath-hold and respiratory-triggered diffusion-weighted MR imaging. Radiology 255, 815–823 (2010).

Sinkus, R., Van Beers, B. E., Vilgrain, V., Desouza, N. & Waterton, J. C. Apparent diffusion coefficient from magnetic resonance imaging as a biomarker in oncology drug development. Eur. J. Cancer 48, 425–431 (2012).

Kierkels, R. G. et al. Comparison between perfusion computed tomography and dynamic contrast-enhanced magnetic resonance imaging in rectal cancer. Int. J. Radiat. Oncol. Biol. Phys. 77, 400–408 (2010).

Shukla-Dave, A. et al. Dynamic contrast-enhanced magnetic resonance imaging as a predictor of outcome in head and neck squamous cell carcinoma patients with nodal metastases. Int. J. Radiat. Oncol. Biol. Phys. 82, 1837–1844 (2012).

Yaromina, A. et al. Co-localisation of hypoxia and perfusion markers with parameters of glucose metabolism in human squamous cell carcinoma (hSCC) xenografts. Int. J. Radiat. Biol. 85, 972–980 (2009).

Mörchel, P. et al. Correlating quantitative MR measurements of standardized tumor lines with histological parameters and tumor control dose. Radiother. Oncol. 96, 123–130 (2010).

Quennet, V. et al. Tumor lactate content predicts for response to fractionated irradiation of human squamous cell carcinomas in nude mice. Radiother. Oncol. 81, 130–135 (2006).

Kim, Y. I. et al. Multiphase contrast-enhanced CT imaging in hepatocellular carcinoma correlation with immunohistochemical angiogenic activities. Acad. Radiol. 14, 1084–1091 (2007).

Miles, K. A. Perfusion CT for the assessment of tumour vascularity: which protocol? Br. J. Radiol. 76, S36–S42 (2003).

Miles, K. A. Molecular imaging with dynamic contrast-enhanced computed tomography. Clin. Radiol. 65, 549–556 (2010).

Petralia, G. et al. CT perfusion in oncology: how to do it. Cancer Imaging 10, 8–19 (2010).

Asselin, M. C., O'Connor, J. P., Boellaard, R., Thacker, N. A. & Jackson, A. Quantifying heterogeneity in human tumours using MRI and PET. Eur. J. Cancer 48, 447–455 (2012).

Eary, J. F., O'Sullivan, F., O'Sullivan, J. & Conrad, E. U. Spatial heterogeneity in sarcoma 18F-FDG uptake as a predictor of patient outcome. J. Nucl. Med. 49, 1973–1979 (2008).

Tixier, F. et al. Intratumor heterogeneity characterized by textural features on baseline 18F-FDG PET images predicts response to concomitant radiochemotherapy in esophageal cancer. J. Nucl. Med. 52, 369–378 (2011).

Diehn, M. et al. Identification of noninvasive imaging surrogates for brain tumor gene-expression modules. Proc. Natl Acad. Sci. USA 105, 5213–5218 (2008).

Kuo, M. D., Gollub, J., Sirlin, C. B., Ooi, C. & Chen, X. Radiogenomic analysis to identify imaging phenotypes associated with drug response gene expression programs in hepatocellular carcinoma. J. Vasc. Interv. Radiol. 18, 821–831 (2007).

Segal, E. et al. Decoding global gene expression programs in liver cancer by noninvasive imaging. Nat. Biotechnol. 25, 675–680 (2007).

Rutman, A. M. & Kuo, M. D. Radiogenomics: creating a link between molecular diagnostics and diagnostic imaging. Eur. J. Radiol. 70, 232–241 (2009).

Lindegaard, J. C., Overgaard, J., Bentzen, S. M. & Pedersen, D. Is there a radiobiologic basis for improving the treatment of advanced stage cervical cancer? J. Natl Cancer Inst. Monogr. 105–112 (1996).

Slonina, D. & Gasińska, A. Intrinsic radiosensitivity of healthy donors and cancer patients as determined by the lymphocyte micronucleus assay. Int. J. Radiat. Biol. 72, 693–701 (1997).

Fertil, B. & Malaise, E. P. Intrinsic radiosensitivity of human cell lines is correlated with radioresponsiveness of human tumors: analysis of 101 published survival curves. Int. J. Radiat. Oncol. Biol. Phys. 11, 1699–1707 (1985).

West, C. M., Davidson, S. E., Roberts, S. A. & Hunter, R. D. The independence of intrinsic radiosensitivity as a prognostic factor for patient response to radiotherapy of carcinoma of the cervix. Br. J. Cancer 76, 1184–1190 (1997).

Björk-Eriksson, T., West, C., Karlsson, E. & Mercke, C. Tumor radiosensitivity (SF2) is a prognostic factor for local control in head and neck cancers. Int. J. Radiat. Oncol. Biol. Phys. 46, 13–19 (2000).

Bartelink, H. et al. Towards prediction and modulation of treatment response. Radiother. Oncol. 50, 1–11 (1999).

Begg, A. C. Predicting recurrence after radiotherapy in head and neck cancer. Semin. Radiat. Oncol. 22, 108–118 (2012).

Menegakis, A. et al. Prediction of clonogenic cell survival curves based on the number of residual DNA double strand breaks measured by γ-H2AX staining. Int. J. Radiat. Biol. 85, 1032–1041 (2009).

Olive, P. L. & Banáth, J. P. Phosphorylation of histone H2AX as a measure of radiosensitivity. Int. J. Radiat. Oncol. Biol. Phys. 58, 331–335 (2004).

Höckel, M. et al. Association between tumor hypoxia and malignant progression in advanced cancer of the uterine cervix. Cancer Res. 56, 4509–4515 (1996).

Vaupel, P. & Mayer, A. Hypoxia in cancer: significance and impact on clinical outcome. Cancer Metastasis Rev. 26, 225–239 (2007).

Chouaib, S. et al. Hypoxia promotes tumor growth in linking angiogenesis to immune escape. Front. Immunol. 3, 21 (2012).

Kaanders, J. H. et al. Pimonidazole binding and tumor vascularity predict for treatment outcome in head and neck cancer. Cancer Res. 62, 7066–7074 (2002).

Nordsmark, M. et al. The prognostic value of pimonidazole and tumour pO2 in human cervix carcinomas after radiation therapy: a prospective international multi-center study. Radiother. Oncol. 80, 123–131 (2006).

Rouschop, K. M. A. et al. The unfolded protein response protects human tumor cells during hypoxia through regulation of the autophagy genes MAP1LC3B and ATG5. J. Clin. Invest. 120, 127–141 (2010).

Krause, B. J., Beck, R., Souvatzoglou, M. & Piert, M. PET and PET/CT studies of tumor tissue oxygenation. Q. J. Nucl. Med. Mol. Imaging 50, 28–43 (2006).

Dubois, L. J. et al. Preclinical evaluation and validation of [18F]HX4, a promising hypoxia marker for PET imaging. Proc. Natl Acad. Sci. USA 108, 14620–14625 (2011).

van Loon, J. et al. Selective nodal irradiation on basis of 18FDG–PET scans in limited-disease small-cell lung cancer: a prospective study. Int. J. Radiat. Oncol. Biol. Phys. 77, 329–336 (2010).

West, C. M., Cooper, R. A., Loncaster, J. A., Wilks, D. P. & Bromley, M. Tumor vascularity: a histological measure of angiogenesis and hypoxia. Cancer Res. 61, 2907–2910 (2001).

Maciejewski, B., Withers, H. R., Taylor, J. M. & Hliniak, A. Dose fractionation and regeneration in radiotherapy for cancer of the oral cavity and oropharynx: tumor dose-response and repopulation. Int. J. Radiat. Oncol. Biol. Phys. 16, 831–843 (1989).

Suzuki, Y. et al. Prognostic impact of mitotic index of proliferating cell populations in cervical cancer patients treated with carbon ion beam. Cancer 115, 1875–1882 (2009).

Turesson, I., Nyman, J., Holmberg, E. & Odén, A. Prognostic factors for acute and late skin reactions in radiotherapy patients. Int. J. Radiat. Oncol. Biol. Phys. 36, 1065–1075 (1996).

Johansen, J., Bentzen, S. M., Overgaard, J. & Overgaard, M. Evidence for a positive correlation between in vitro radiosensitivity of normal human skin fibroblasts and the occurrence of subcutaneous fibrosis after radiotherapy. Int. J. Radiat. Biol. 66, 407–412 (1994).

West, C. M. et al. Lymphocyte radiosensitivity is a significant prognostic factor for morbidity in carcinoma of the cervix. Int. J. Radiat. Oncol. Biol. Phys. 51, 10–15 (2001).

Peacock, J. et al. Cellular radiosensitivity and complication risk after curative radiotherapy. Radiother. Oncol. 55, 173–178 (2000).

Russell, N. S. et al. Low predictive value of intrinsic fibroblast radiosensitivity for fibrosis development following radiotherapy for breast cancer. Int. J. Radiat. Biol. 73, 661–670 (1998).

Russell, N. S., Arlett, C. F., Bartelink, H. & Begg, A. C. Use of fluorescence in situ hybridization to determine the relationship between chromosome aberrations and cell survival in eight human fibroblast strains. Int. J. Radiat. Biol. 68, 185–196 (1995).

Kiltie, A. E. et al. A correlation between residual radiation-induced DNA double-strand breaks in cultured fibroblasts and late radiotherapy reactions in breast cancer patients. Radiother. Oncol. 51, 55–65 (1999).

Dileto, C. L. & Travis, E. L. Fibroblast radiosensitivity in vitro and lung fibrosis in vivo: comparison between a fibrosis-prone and fibrosis-resistant mouse strain. Radiat. Res. 146, 61–67 (1996).

Azria, D. et al. Concurrent or sequential adjuvant letrozole and radiotherapy after conservative surgery for early-stage breast cancer (CO-HO-RT): a phase 2 randomised trial. Lancet Oncol. 11, 258–265 (2010).

Bentzen, S. M. Preventing or reducing late side effects of radiation therapy: radiobiology meets molecular pathology. Nat. Rev. Cancer 6, 702–713 (2006).

Rodemann, H. P. & Bamberg, M. Cellular basis of radiation-induced fibrosis. Radiother. Oncol. 35, 83–90 (1995).