Abstract

Perceptual decisions rely on accumulating sensory evidence. This computation has been studied using either drift diffusion models or neurobiological network models exhibiting winner-take-all attractor dynamics. Although both models can account for a large amount of data, it remains unclear whether their dynamics are qualitatively equivalent. Here we show that in the attractor model, but not in the drift diffusion model, an increase in the stimulus fluctuations or the stimulus duration promotes transitions between decision states. The increase in the number of transitions leads to a crossover between weighting mostly early evidence (primacy) to weighting late evidence (recency), a prediction we validate with psychophysical data. Between these two limiting cases, we found a novel flexible categorization regime, in which fluctuations can reverse initially-incorrect categorizations. This reversal asymmetry results in a non-monotonic psychometric curve, a distinctive feature of the attractor model. Our findings point to correcting decision reversals as an important feature of perceptual decision making.

Similar content being viewed by others

Introduction

Integrating information over time is a fundamental computation that neural systems can adaptively perform in a variety of contexts. The integration of perceptual evidence is an example of such computation, and its most common paradigm is the binary categorization of ambiguous stimuli characterized by a stream of sensory evidence. This process is typically modeled with the drift diffusion model with absorbing bounds (DDMA) which integrates the stimulus evidence linearly until one of the bounds is reached1. The DDMA and its different variations have been successfully used to fit psychometric and chronometric curves1,2, to capture the speed-accuracy trade-off1,2,3, to account for history dependent choice biases4, changes of mind5, confidence reports6, or the Weber’s law7. Although the absorbing bounds were originally thought of as a mechanism to terminate the integration process, the DDMA has also been applied to fixed duration tasks8,9,10. In motion discrimination tasks, for instance, it can reproduce the subjects’ tendency to give more weight to early rather than late stimulus information, which is called a primacy effect8,10,11,12,13,14. However, depending on the details of the task and the stimulus, subjects can also give more weight to late rather than to early evidence (i.e., a recency effect)15,16 or weigh the whole stimulus uniformly17. In order to account for these differences, the DDMA needs to be modified by using reflecting instead of absorbing bounds or by removing the bounds altogether18. Despite their considerable success in fitting experimental data, the DDMA and its many variants remain purely phenomenological descriptions of sensory integration. This makes it difficult to link the DDMA to the actual neural circuit mechanisms underlying perceptual decision making.

These neural circuit mechanisms have been studied with biophysical attractor network models that can integrate stimulus evidence over relatively long time scales19,20. Attractor network models have been used, among other examples, to study the adjustment of speed-accuracy trade-off in a cortico-basal ganglia circuit21, learning dynamics of sensorimotor associations22, the generation of choice correlated sensory activity in hierarchical networks23,24,25, the role of the pulvino-cortical pathway in controlling the effective connectivity within and across cortical regions26 or how trial history biases can emerge from the circuit dynamics27. In the typical regime in which the attractor network was originally used for perceptual categorization19,28, the impact of the stimulus on the decision decreases as the network evolves towards an attractor. In this regime, the integration dynamics of the attractor model are qualitatively similar to those of the DDMA as it also shows a primacy effect. Moreover, the attractor network can also provide an excellent fit to psychometric and chronometric curves19,28. Thus, a common implicit assumption is that the attractor network is simply a neurobiological implementation of the DDMA29,30 and hence there has been more interest in studying the similarities between these two models rather than their differences31 (but see refs. 32,33).

Here, we show that the attractor model has richer dynamics beyond the well known primacy regime. In particular, the model exhibits a crossover from primacy to recency as the stimulus fluctuations or stimulus duration are increased. Intermediate to these two limiting regimes, the stimulus can impact the upcoming decision nearly uniformly across the entire stimulus duration. Specifically, if the first attractor state reached corresponds to the incorrect choice, stimulus fluctuations later in the trial can lead to a correcting transition, while if the initial attractor is correct, fluctuations are not strong enough to drive an error transition. As a consequence, the model shows a non-monotonic psychometric curve as a function of the strength of stimulus fluctuations, and the maximum occurs precisely in this intermediate “flexible categorization” regime. To illustrate the relevance of our theoretical results, we re-analyze data from two psychophysical experiments34,35 and show that the attractor model can quantitatively fit the crossover from primacy to recency with the stimulus duration, and the integration and storage of evidence when stimuli are separated by a memory delay. Our characterization of the flexible categorization regime in the attractor model reveals that correcting transitions may be a key property of evidence integration in perceptual decision making.

Results

Canonical models of perceptual decision making result in stereotypical psychophysical kernels

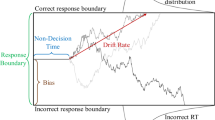

We start by characterizing the dynamics of evidence integration in standard drift diffusion models during a binary classification task. These models are described as the evolution of a decision variable x(t) that integrates the moment-by-moment evidence S(t) provided by the stimulus, plus a noise term reflecting the internal stochasticity in the process1,30,31.

where τ is the time constant of the integration process. The evidence S(t) fluctuates in time and can be written as a constant mean drift μ, plus a time-varying term, caused by the fluctuations of the input stimulus: S(t) = μ + σSξS(t). We call σS the magnitude of stimulus fluctuations. Assuming that both fluctuating terms, ξI and ξS are Gaussian stochastic processes, Eq. 1 can be recast as the motion of a particle in a potential:

where the potential φ(x) = −μx (Fig. 1d–f, inset). The conceptual advantage of using a potential relies on the fact that the dynamics of the decision variable always “roll downward” towards the minima of the potential with only the fluctuation terms ξS(t) or ξI(t) causing possible motion upward. Notice that, although the term ξS(t) is modeled as a noise term, it represents the temporal variations of the stimulus which are under the control of the experimenter. The existence of decision bounds can be readily introduced in the shape of the potential, which strongly influences how stimulus fluctuations impact the upcoming decision. To quantify this impact, we used the Psychophysical Kernel (PK) which measures the influence of the stimulus fluctuations on the decision during the course of the stimulus (see Methods): (1) In the DDMA (Fig. 1a), absorbing bounds are implemented as two vertical “cliffs” such that when the decision variable arrives at one of them, it remains there for the rest of the trial. When this happens, the fluctuations late in the stimulus are unlikely to affect the decision, yielding a decaying PK characteristic of a “primacy” effect8,18,31,33,36. (2) In the Drift Diffusion Model with Reflecting bounds (DDMR) (Fig. 1b), the bounds are two vertical walls that set limits to the accumulated evidence; early stimulus fluctuations are largely forgotten once the decision variable bounces against one bound and hence the PK shows a “recency” effect18. (3) In the Perfect Integrator (PI) (Fig. 1c), there are no bounds, the stimulus is integrated uniformly across time yielding a flat PK17. Thus, each of these three canonical models performs a qualitatively distinct integration process by virtue of how the bounds are imposed. Moreover, the characteristic integration dynamics of each model is invariant to changes in the stimulus parameters. To illustrate this, we show how the PKs depended on the magnitude of the stimulus fluctuations, σS (Fig. 1). For very weak stimulus fluctuations, all three models are trivially equivalent because the bounds are never reached and hence the PKs are flat (Fig. 1d–f). As σS increases, in both the DDMA and the DDMR, the bounds are reached faster yielding an increase and a decrease of the PK slope, respectively (Fig. 1h). In these two models, the integration of evidence becomes more and more transient as σS increases, ultimately causing a decrease of the PK area (Fig. 1g). Including collapsing bounds in the DDMA did not modify the picture qualitatively, with the integration becoming more transient as the velocity of the collapsing bounds increased (Supplementary Fig. 1). The PK for the PI remains flat for all σS (zero PK slope, Fig. 1h) and its area increases monotonically (Fig. 1g). Thus, the dynamics of evidence accumulation are an invariant and distinct property of each model.

a–c Single-trial example traces of the decision variable x(t) for weak (σS = 0.03) and intermediate (σS = 0.28) stimulus fluctuations in the three canonical models. a The DDM with absorbing bounds integrates the stimulus until it reaches one of the absorbing bounds represented in the potential landscape as infinitely deep attractors (see inset in d). The slope of the potential landscape is the mean stimulus strength, in this case μ < 0. b The DDM with reflecting bounds integrates the stimulus linearly until a bound is reached when no more evidence can be accumulated in favor of the corresponding choice option (see inset in e). c The perfect integrator integrates the entire stimulus uniformly, corresponding to a diffusion process with a flat potential (see inset in f). In the three models, the choice is given by the sign of x(t) at stimulus offset. d–f Psychophysical Kernels (PK) for the three canonical models for increasing magnitude of the stimulus fluctuations (from left to right): σS = 0.03, 0.28, and 0.69. The PK measures the time-resolved impact of the stimulus fluctuations on choice (see Methods). g–h Normalized PK area and normalized PK slope as a function of σS for the three canonical models (see inset in g for color code). The area is normalized by the PK area of the perfect integrator with no internal noise (σi = 0) and hence measures the ability of each model to integrate the stimulus fluctuations. In all panels, internal noise was fixed at σI = 0.1 (see arrows in g and h) which was sufficiently small to prevent x(t) from reaching the bounds in the absence of a stimulus. Mean stimulus evidence was μ = 0 in all cases.

Neurobiological models show a variety of integration regimes

We next characterized the dynamics of evidence accumulation in the double well model (DWM), which can accurately describe the dynamics of a biophysical attractor network model19,28. The DWM exhibits winner-take-all attractor dynamics defined by the nonlinear potential φ(x):

The resulting energy landscape can exhibit two minima (i.e., attractor states) corresponding to the two possible choices (Fig. 2a, inset). The three terms of the potential, from left to right, capture (1) the impact of the net stimulus evidence μ which, as in the canonical models, tilts the potential towards the attractor associated with the correct choice; (2) the model’s internal categorization dynamics parameterized by the height of the barrier separating the two attractors (which scales with α2), and (3) bounds, also arising from the internal dynamics, that limit the range over which evidence is accumulated. We found that the DWM had a much richer dynamical repertoire as a function of stimulus fluctuations magnitude than the canonical models. Specifically, the attractors imposed the categorization dynamics, but these could be overcome by sufficiently strong stimulus fluctuations. Thus, for weak σS, the categorization dynamics dominated: when the system reached an attractor, it remained in this initial categorization until the end of the stimulus. In this regime, only early stimulus fluctuations occurring before reaching an attractor could influence the final choice, yielding a primacy PK19,23 (Fig. 2c, second line from the left). For strong σS, the initial categorization had no impact on the final choice because transitions between the attractors occurred readily. It was the fluctuations coming late in the trial which determined the final state of the system and hence the PK showed recency (Fig. 2c, orange). For moderate values of σS, there was an intermediate regime in which the PK was a mixture between primacy and recency, but not necessarily flat (Fig. 2c, third line from the left). We called this regime flexible categorization because it represented a balance between the internal categorization dynamics and the ability of the stimulus fluctuations to overcome their attraction (Fig. 2b). As a result of this balance, the stimulus fluctuations impacted the choice over the whole trial (PK slope = 0; Fig. 2e) because both initial fluctuations and later fluctuations causing transitions had a substantial impact on choice. Moreover, these fluctuations causing transitions were more temporally extended than those in the recency regime (Supplementary Fig. 2a). Thus, the area of the PK reached its maximum (maximum area = 0.82; Fig. 2f) implying that the integration of the stimulus fluctuations carried out by DWM was comparable to a PI (which has PK area equal 1). The same crossover from primacy to recency regimes, passing through the flexible categorization regime, could be achieved, at fixed σS, by varying the stimulus duration (Fig. 2d, g). This occurs because for a fixed magnitude of stimulus fluctuations, the rate of transitions was constant but the probability to observe a transition increased with the stimulus duration changing the shape of the PK accordingly (Fig. 2d). In sum, depending on the capacity of the stimulus to generate transitions between attractors, the DWM model could operate in the primacy, the recency, or the flexible categorization integration regime.

a, b Single-trial example traces of the decision variable for the DWM with weak (a, σS = 0.1) and intermediate (b, σS = 0.58) stimulus fluctuations σS. Transitions between attractors were only possible for sufficiently strong σS (insets). c PKs for increasing values of σS (from left to right, σS = 0.02, 0.1, 0.58, and 1). d PKs for increasing values of stimulus duration T (from left to right, T = 0.5, 1, and 2.5 with σS = 0.58). e, f Normalized PK slope and PK area as a function of σS. Colored dots indicate the examples shown in panel c. The area peaks at the flexible categorization and it vanishes for small σS because choice is then driven by internal noise. g, h Normalized PK slope and area as a function of T with σS = 0.58. As T increases, the DWM integrates a smaller fraction of the stimulus making the area decrease monotonically. Internal noise was σI = 0.1 in all panels (see arrows in panels e and f).

Decision accuracy in models of evidence integration

Given that the DWM changes its integration regime when σS is varied, we next investigated the impact of this manipulation on the decision accuracy. We set the internal noise to σI = 0 and computed the psychometric function P(μ,σS) showing the proportion of correct choices as a function of the mean stimulus evidence μ and the strength of stimulus fluctuations σS. For small fixed σS the section of this surface yielded a classic sigmoid-like psychometric curve P(μ) (Fig. 3a, dark brown curve). As σS increased, this curve became shallower simply because larger fluctuations implied a drop in the signal-to-noise ratio of the stimulus (Fig. 3a, red and orange curves). Unexpectedly, however, the decline in sensitivity of the curve P(μ) was non-monotonic (Fig. 3a), an effect which was best illustrated by plotting the less conventional psychometric curve P(σS) at fixed μ (Fig. 3a, b, black curve). To understand this non-monotonic dependence, we first defined two transition probabilities: the correcting transition probability pC was the probability to be in the correct attractor at the end of a trial, given that the first visited attractor was the error. The error-generating transition probability pE was the opposite, i.e., the probability to finish in the wrong attractor given that the correct one was visited first (see Methods). Using Kramers’ reaction-rate theory37 the transition probabilities could be analytically computed, and the accuracy P could be expressed as the probability to initially make a correct categorization and maintain it, plus the probability to make an initial error and reverse it:

where P0 was the probability of first visiting the correct attractor (Methods). When the fluctuations were negligible σS ≈ 0, the decision variable always rolled down towards the correct choice because the double well potential was tilted to the correct attractor (e.g., μ > 0), and hence P = 1 (Fig. 3bi). As σS started to increase, fluctuations early in the stimulus could cause the system to fall into the incorrect attractor but, because fluctuations were not sufficiently strong to generate transitions (pE ≈ pC ≈ 0), accuracy was P = P0 (Eq. 4) and decreased with σS towards chance (gray line in Fig. 3b). As the stimulus fluctuations grew stronger, the transitions between attractors became more likely but, because the barrier to escape from the incorrect attractor was smaller than the barrier to escape from the correct attractor, the two transition probabilities were very different. Specifically, Kramers’ theory shows that the ratio between pC and pE depends exponentially on the barrier height difference (see Methods). Thus, pC increased steeply with σS, even before pE reached non-negligible values (Fig. 3c) opening a window in which transitions were only correcting: accuracy became \(P \simeq P_0 + \left( {1 - P_0} \right)p_{\mathrm{C}}\) and it increased with σS (Fig. 3biii). The maximum difference between pC and pE coincided with the flexible categorization regime in which the PK slope was zero and the accuracy showed a local maximum (Fig. 3b–d). Finally, for strong σS, error transitions also became likely and the net effect of stimulus fluctuations was again deleterious, causing a decrease of P. In sum, it was the large difference in transition probabilities caused by the double well landscape which led to the non-monotonic dependence of the psychometric curve. Because the canonical models lacked attractor dynamics, the accuracy in all of them decayed monotonically with the stimulus fluctuations (Fig. 3g).

a Probability of a righward choice as a function of the mean stimulus evidence (μ) and the stimulus fluctuations (σS). The colored lines show classic psychometric curves, accuracy versus μ (for fixed σS = 0.07, 0.26, 0.46, and 0.90) whereas the black line shows the accuracy versus σS (for fixed μ = 0.15). b Accuracy (P) as a function of the stimulus fluctuations σS obtained from numerical simulations (dots) and theory (line, same as black line in a). Insets show the PK for three values of σS (marked with colored dots). The gray line shows the accuracy of the first visit attractor (P0). c Probability to make a correcting pC (green) or an error transition pE (black) and their difference pC − pE (gray). The local maximum in P coincides with the maximum difference between the two probabilities. Insets: sequence of regimes as transitions become more likely: (i) For negligible σS, the decision variable always evolves towards the correct attractor; (ii) as σs increases, the decision variable can visit the incorrect attractor but neither kind of transition is activated; (iii) for stronger σS, only the correcting transitions (green arrow) are activated; (iv) for strong σS, both types of transition are activated. d Normalized PK slope as a function of σS. The flexible categorization regime, reached when the index is close to zero, coincides with the local maximum in accuracy (a). e Accuracy versus σS for different stimulus durations T (see inset). The accuracy for any finite T shifts as σS increases between the probability to first visit the correct attractor P0 and the stationary accuracy P∞. f Accuracy versus σS for different magnitudes of the internal noise (see inset). g Accuracy versus σS for the three canonical models (see inset). The internal noise was σi = 0 in all panels except in f.

We next asked whether the non-monotonicity of the psychometric curve was robust to variation of other parameters such as the mean stimulus evidence μ, the stimulus duration T, and the internal noise σI. We found that the non-monotonicity was robustly obtained over a broad range of μ, ranging from small values just above zero to a critical value beyond which the curve became monotonically decreasing (Supplementary Fig. 3). Because the transition probabilities scale with the stimulus duration T, the psychometric curve P(σS) was strongly affected by changes in T (Fig. 3e). To understand this dependence, we rewrote the transitions probabilities pC and pE from Eq. 4 as a function of the transition rates and the stimulus duration (see Methods, Eqs. 17 and 18):

where k is the sum of the transition rates from both attractors and P∞ is the stationary accuracy (i.e., the limit of P when T→∞). As expected, the two psychometric curves P0 and P∞, which decreased monotonically with σS, delimited the region in which P existed: for weak σS, P followed the decay of the psychometric curve P0, whereas for strong σS it tracked the decay of the stationary accuracy P∞. The switching point occurred when the probability to observe a transition was substantial, i.e., when kT ~ 1. For longer stimulus durations, the activation of the transitions occurred for weaker σS and consequently the bump in accuracy was shifted towards the left also becoming more prominent (Fig. 3e, Methods). For very short T, the activation of the transitions occurred for such a large value of σS that the two curves P0 and P∞ have come too close and the psychometric P(σS) was then monotonically decreasing (Fig. 3e). Finally, when we set the internal noise to a nonzero value, it sets a minimal level of fluctuations below which no stimulus magnitude σS could go, effectively cropping the psychometric curve P(σS) from the left (Fig. 3f). Only when the internal noise was larger than a critical value the psychometric curve became monotonically decreasing (Supplementary Fig. 3, see Methods for the computation of the critical noise value). In sum, the non-monotonicity of the psychometric curve was a robust effect, being most prominent for values of the mean stimulus evidence μ yielding an intermediate accuracy (i.e., P ~ 0.75), long stimulus durations and weak internal noise.

Consistency in models of evidence integration

In order to identify further signatures of the nonlinear attractor dynamics that could be tested experimentally, we studied the choice consistency of the DWM. Choice consistency is defined as the probability that two presentations of the same exact stimulus, i.e., the same realization of the stimulus fluctuations, yield the same choice. In the absence of internal noise, the decision process in the model is deterministic and consistency is 1. In contrast, when the stimulus has no impact on the choice, the consistency is 0.5. We used the double-pass method, which presents each stimulus twice12,38,39, to explore how consistency in the DWM depended on σS and σI (Fig. 4). We only used μ = 0 stimuli with exactly zero integrated evidence in order to avoid the parsimonious increase of consistency due to larger deviations of the accumulated evidence from the mean (see Methods). As expected, consistency was close to 0.5 when σS was small compared to σI, and it increased with increasing σS (Fig. 4a). However, despite this general increase, we found a striking drop in consistency for a range of intermediate σS values. Thus, consistency could depend non-monotonically on the strength of stimulus fluctuations, a similar effect as observed for choice accuracy. To understand this effect, we studied the time-course of the decision variable x over many repetitions of a single stimulus, at different values of σS (Fig. 4d–h). For very small σS, consistency was 0.5 because the internal noise was the dominant factor making both choices equally likely (Fig. 4d). As σS grew, stimulus fluctuations could determine the first visited attractor but decision reversals were still not activated, yielding a high consistency (Fig. 4e). For larger σS, transitions occurred but only when internal noise and the stimulus fluctuations worked together to produce a large fluctuation (Fig. 4f). The necessary contribution of the internal noise, that varied from trial-to-trial, led to the decrease in consistency. Once σS was large enough to cause reversals on its own, consistency increased again (Fig. 4g). Thus, as with the non-monotonicity in the psychometric curve, it was the difference between two transition probabilities, the transition probability with internal noise versus the probability without internal noise, that was maximal when consistency decreased (Fig. 4b). Also as before, to observe the non-monotonicity in the consistency, σI had to be sufficiently small not to cause transitions on its own (Fig. 4a, b). Notice however that the non-monotonicity here was not caused by the asymmetry between correcting versus error transitions, as consistency was computed using μ = 0 stimuli (i.e., there was no correct choice). The effect was a result of the nonlinear attractor dynamics of the DWM and thus it could not occur in any of the canonical models (Fig. 4c).

a Average consistency versus stimulus fluctuations σS for different values of the internal noise σI (see inset in b). b Difference between the transition probabilities with (pR,L(σS,σI)) and without (pR,L(σS,σI = 0)) internal noise. The drop in consistency coincides with an increase of this difference revealing the σS-range in which transitions occurred because of the cooperation of internal and stimulus fluctuations. c Consistency versus σS for the canonical models. The consistency of the perfect integration is at chance level because we used stimuli with exactly zero integrated evidence (see Methods). d–g Temporal evolution of the decision variable probability distribution f(x,t) for an example stimulus in the different regimes of σS: for negligible σS the choice is driven by the internal noise and the consistency is very low (53.2%, d). For small σS, when the stimulus determines the first visited attractor but fluctuations are not strong enough to produce transitions, the consistency is very high (97.8%, e). For intermediate σS, the transitions can only occur when σI and σS work together to cause a large fluctuation. Because the internal noise has again impact on the choice, the consistency decreases (51.7%, f). For large σS, the stimulus fluctuations are strong enough to produce transitions by itself and the consistency is again very high (100%, g). h Consistency versus σS obtained just using the example stimulus shown in d–g (points mark the σS values shown in d–g). Mean stimulus evidence was μ = 0 in all panels.

Flexible categorization in a spiking network with attractor dynamics

Having shown that the DWM generates signatures of attractor dynamics which are qualitatively different from any canonical model, we then assessed whether these could be reproduced in a more biophysically realistic network model composed of leaky integrate-and-fire neurons (Methods). The network consisted of two populations of excitatory (E) neurons (NE = 1000 for each population), each of them selective to the evidence supporting one of the two possible choices, and a nonselective inhibitory population (NI = 500) (Fig. 5a). The network had sparse, random connectivity within each population (probability of connection between neurons was 0.1) and neurons were coupled through current-based synapses with exponential decay. The stimulus was modeled as two fluctuating currents, reflecting evidence for each of the two choice options and injected into the corresponding E population. The two currents were parametrized by their mean difference μ and their standard deviation σS (see Methods). In addition, all neurons in the network received independent stochastic synaptic inputs from an external population. As in previous attractor network models used for stimulus categorization, the two E populations competed through the inhibitory population19. Thus, upon presentation of an external stimulus, there were two stable solutions: one solution in which one E population fired at a high rate while the other fired at a low rate and vice versa (Fig. 5). Notice that in contrast with the DWM in which the noise was white (i.e., temporally uncorrelated), in this network the external noise was colored (stimulus was an Ornstein–Uhlenbeck process with τstim = 20 ms) and the internal fluctuations reflected the stochasticity of the spiking network dynamics which are strongly affected by the synaptic time scales. Similar to the DWM, we found a non-monotonic relation between the accuracy and the magnitude of the stimulus fluctuations σS provided the stimulus duration T was sufficiently long (Fig. 5b). Moreover, as σS increased the integration regimes of the network changed from primacy to recency, passing through the flexible categorization regime (Fig. 5c–f). In this regime, transitions between attractor states occurred when there were input fluctuations that extended over hundreds of milliseconds, indicating that the temporal integration of evidence continued even after one of the attractors was reached (Supplementary Fig. 2b). The crossover between primacy and recency regimes was also observed at constant σS when we varied the stimulus duration T (Fig. 5g). We went one step further in including biophysical detail and confirmed that a conductance-based spiking neural network model with explicit AMPA, GABA, and NMDA receptor dynamics19 showed qualitatively the same behavior (Supplementary Fig. 4). Thus, the signatures of attractor dynamics that we had identified did not depend on the simplifying assumptions of the DWM and could be replicated in an attractor network with more biophysically plausible parameters.

a Schematic of the spiking network consisting of two stimulus-selective populations (green and purple) made of excitatory neurons that compete through an untuned inhibitory population (white population). b Accuracy PC versus stimulus fluctuations σS obtained from simulations of the spiking network for three values of the stimulus duration T = 2, 4, and 6 s (see inset). c–e Single-trial examples showing spike rastergram from the two excitatory populations (1000 + 1000 neurons) (c), traces of the instantaneous population rates (count window 30 ms) (d) and of the input stimuli (e), for different values of stimulus fluctuations σS = 2 (left), 4.5 (middle), and 9 pA (right). Colored points in (b) indicate the σS used. f Psychophysical kernels obtained for each σS value. The mean stimulus input was μ = 0.015 and the stimulus duration T = 4 s. g Psychophysical kernels for σS = 5 pA and different stimulus duration T = 1, 3, and 5 s, from left to right.

Changes in PK with stimulus duration in human subjects unveiled the flexible categorization regime

We tested whether the DWM could parsimoniously account for the variations of the integration dynamics previously found in a perceptual categorization task as the stimulus duration was varied34. In the experiment, human subjects had to discriminate the brightness of visual stimuli of variable duration T = 1, 2, 3, or 5 s. Confirming previous analyzes34, the average PKs across subjects changed from primacy to recency with increasing stimulus durations (Fig. 6a). To assess whether these changes in the shape of the PKs could be captured by the DWM, we used the DWM to categorize the same stimuli (the exact same temporal stimulus fluctuations and number of trials; see Methods) that were presented to the human subjects (Fig. 6c–f). We found that the PKs for different stimulus durations obtained in the DWM were very similar to the experimental data (Fig. 6b). Importantly, these results were obtained with fixed model parameters for all stimulus durations suggesting that the variation in PK did not necessarily indicate a change of the integration mechanism of the model, as previously suggested34. Rather, fixed, but nonlinear attractor dynamics in the DWM parsimoniously accounted for the observed PK changes.

a Psychophysical kernels for different stimulus durations, obtained from human subjects performing a brightness discrimination task (N = 21)34. From left to right, stimulus duration was T = 1, 2, 3, and 5 s. b Psychophysical kernels obtained by fitting the DWM to categorize the very same stimuli presented to the human subjects (i.e., same temporal fluctuations of net evidence; see Methods). Lines represent the kernels obtained from pooling all data across subjects and the error bands represent s.e.m. c–f Example traces of the decision variable of the fitted DWM (c, e) and the stimulus (d, f) for 1 and 3 s trials. Notice that the stimulus fluctuations mimicked the visual stimulus which was made of time frames of 100 ms.

Stimulus integration across a memory period is consistent with flexible categorization dynamics

Finally, we tested the DWM in a task that requires evidence accumulation and working memory. We used published data from two studies carrying out a psychophysical experiment in which subjects had to categorize the motion direction of a random dot kinematogram35,40. Interleaved with the trials showing a single kinematogram (single pulse trials, duration 120 ms) there were also trials having two kinematograms separated by a temporal delay (two pulse trials). In these two pulse trials, subjects had to combine information from both pulses in order to categorize the average motion direction. The two pulses could have different motion coherence but they always had the same motion direction (Fig. 7a). Subjects were able to combine the evidence from the two pulses and their accuracy did not depend on the duration of the delay period for durations up to 1 s, meaning that they were able to maintain the evidence from the first pulse without memory loss. Overall, subjects gave slightly more weight to the second than the first pulse (Primacy-Recency Index = 0.22; see Methods). Qualitatively, the DWM could in principle capture this behavior because its underlying dynamics can solve the two parts of the task, the maintenance of information during the working memory period and the combination of the two pulses of evidence (Fig. 7b). The model would categorize the first pulse in one of the attractors, which would be stably maintained during the delay because the internal noise is insufficient to cause transitions. Finally, given the asymmetry in the DWM transition rates (Fig. 3c), the second pulse could reverse incorrect initial categorizations while minimizing the risk of erroneously reversing correct ones (Fig. 7b). To assess whether the DWM could indeed fit the data quantitatively, we computed the accuracy for each stimulus condition using Kramers’ transition rate theory and fitted the parameters using maximum likelihood estimation (solid lines, Fig. 7c; Methods). We found that the DWM could fit the accuracy across conditions quite accurately (Fig. 7c). Interestingly, the fitted DWM worked close to the flexible categorization regime, matching the slight recency effect coming out from the combination of the two pulses (Fig. 7d).

a Visual motion categorization experiment consisting of interleaved double pulse (top) and single pulse trials (bottom)35,40. On double pulse trials, the two motion pulses were separated by a variable delay (duration 0, 120, 360, or 1080 ms). Coherences were randomly selected from trial-to-trial. In two pulse trials, they could be different but the motion direction was always congruent (N = 9). b Traces of the decision variable of the DWM (black), the internal fluctuations (yellow), and the stimulus (orange) for an example double pulse trial. c Accuracy for single (squares) and two pulse trials (dots) versus the coherence of the second pulse observed in the data from35,40 (dots) and the values obtained from the fitted DWM (lines). Because accuracy in the experiment did not depend on delay length, dots show the average accuracy across all delays. Different colors represent different first pulse coherences (see inset). Symbols show mean across subjects and error bars show 95% confidence intervals. d Primacy-recency index (PRI) for the DWM as a function of the barrier height (c2). The black dot marks the PRI for the fitted parameter α* = 0.7. The horizontal line is the PRI computed from the psychophysical data (gray area 95% confidence interval). Inset: accuracy for two pulse stimuli in which coherence is larger in pulse 2 than in pulse 1 (i.e., coh2 > coh1) versus accuracy for the same pulses presented in the reverse order (i.e., coh1 > coh2). Consistent with the recency effect, accuracy is slightly better for coh2 > coh1 stimuli. e Accuracy as a function of the delay duration for DWM and for the Perfect Integrator. In the DWM, which used the fitted parameter α* and σI = 0.32 and σS = 0.40, the accuracy is independent of the delay up to 1 s. In contrast, for the same internal noise σI, the accuracy of the perfect integrator decreases continuously for all delays.

Because subjects’ accuracy did not depend on delay duration35,40, the model fitting could only determine the value of the sum of the stimulus and internal noises \(\sigma = \sqrt {\sigma _{\mathrm{I}}^{2} + \sigma _{\mathrm{S}}^{2}}\) and set an upper bound \(\sigma _{\mathrm{I}}^{max}\) for the internal noise: for any value \(\sigma _{\mathrm{I}}\le \sigma _{\mathrm{I}}^{max}\) the transitions during the delay were negligible (<1%) and the DWM yielded the same behavior (see Methods). Choosing the σI to be at the upper bound \(\sigma _{\mathrm{I}}^{max},\) yielded a constant accuracy for delays up to ~1 s. For longer delays, however, transitions during the delay became active causing forgetting and accuracy decrease (Fig. 7e) as has been shown in experiments using a broader range of delays41. In contrast, the perfector integrator did not show a range of delays over which the accuracy remained constant (Fig. 7e): the internal noise had a much larger impact on the maintenance of stimulus evidence so that, for any significant level of internal noise, the accuracy decreased continuously with delay duration. In total, our analysis shows that the DWM can quantitatively fit psychophysical data from a working memory task, and that longer delays could provide a qualitative test for the model.

Discussion

We have investigated the attractor model with winner-take-all nonlinear dynamics and we have found new, experimentally testable signatures that can distinguish it from the other models. First, the attractor model exhibits a continuous crossover from the primacy regime19,23 to the recency regime. Between these two regimes we found the new flexible categorization regime in which the integration of stimulus fluctuations was maximally extended over time (Fig. 2 and Supplementary Fig. 2). Second, in this regime a qualitative asymmetry between correcting and error transitions gave rise to a non-monotonic psychometric curve (Fig. 3). Third, the rapid activation of transitions between decision states with the stimulus fluctuations also caused an unexpected non-monotonic dependence of the stimulus consistency (Fig. 4a). Finally, we used two previous psychophysical experiments to show that the attractor model can quantitatively fit variations in PK profile with stimulus duration (Fig. 6) and fit categorization accuracy in a task with integration of evidence across memory periods (Fig. 7).

Recently, two studies have proposed alternative models that can explain the differences of PK time-courses found across subjects and experiments. In the first model, based on approximate Bayesian inference, the primacy effect produced by bottom-up versus top-down hierarchical dynamics, was modulated by the stimulus properties which could yield different PK time-courses, a prediction that was tested in a visual discrimination task42. The second study proposed a model that can produce different PK time-courses by adjusting the time scales of a divisive normalization mechanism, which yields primacy, and a leak mechanism, which promotes recency43. In addition, this model can also account for bump shaped PKs, a class of PK that was found together with primacy, recency, and flat PKs, in a study carried out using a large cohort of subjects (>100)44. In the attractor model, the differences in the PK found across subjects or fixed stimulus properties could be explained by individual differences in the shape of the potential. Specifically, differences in the height of the barrier between the two attractor states would generate a variety of PK time-courses (Fig. 7c) as the integration regime ultimately depends on the ratio between the total noise \(\left(\sigma _{\mathrm{S}}^2 + \sigma _{\mathrm{I}}^2\right)\) and the height of the barrier. A natural extension of our approach would be to assume that a time-varying process during the trial, e.g., an urgency signal45, can progressively modify the shape of the potential. In that case, the DWM with an urgency signal that changed the shape of the potential from a single well at stimulus onset into a double well at stimulus offset could readily reproduce the bump shaped PKs (not shown) recently reported44. In sum, the attractor model shows a large versatility generating the diversity of PK shapes reported in the literature8,10,12,15,16,17,44. Although several distinct models can account for the variety of PK shapes, they rely on a variety of neural mechanisms. Future electrophysiological or psychophysical experiments where the different models predict qualitatively different results will help distinguish between these possible mechanisms.

It has been previously shown that noise, from the stimulus or internal sources, can increase the accuracy of an attractor model with three stable attractors (i.e., with multistability): an undecided state and two decision states46,47. In this model, the decision variable starts in the undecided state and, if it does not escape from this state during the stimulus presentation, the decision is made randomly. Thus, the noise can allow the decision variable to escape from the undecided state and increase the accuracy. Here, we have studied the attractor model in the winner-take-all regime, i.e., without an undecided state, and we have found that it is the large difference between the rate of correcting and error-generating transitions that produces the increase in accuracy in the flexible categorization regime. This is conceptually very different from transitions between the undecided state to the decision states. The same mechanism presented here drives the classic stochastic resonance48 where a particle moving in a double well potential driven by a periodic signal necessitates of a suitable magnitude of noise for the system to follow the signal (i.e., escape from the well when it is no longer the global minimum). Similar to the effect described with the multistable attractor model46, the accuracy decreases to chance in the deterministic noiseless case (σ = 0). In contrast, the accuracy for the DWM is greatest for σ = 0 because the initial position of the decision variable (x0 = 0) belongs to the basin of attraction of the correct attractor and thus it always rolls down to the correct attractor. However, whether this bump in accuracy produced by the attractor model as a function of the stimulus fluctuations (σS) is a local or a global maximum, or if it exists at all, depends on internal parameters such as the internal noise (σI) or the height of the barrier. These internal parameters can be different for different subjects and thus, one should expect to find this non-monotonic psychometric curve only in a fraction of subjects. Indeed, we carried out a visuospatial binary categorization task in which the fluctuations of the evidence σS were varied systematically from trial-to-trial. Preliminary analysis shows that the majority of subjects display a psychometric curve P(σS) with a plateau followed by a decay as σS increased. A fraction of subjects exhibited however a non-monotonic dependence but the dependence of PK and other aspects of their behavior (e.g., idiosyncratic biases) on σS were not fully captured by the DWM dynamics. A future study will extend the DWM so that it can capture these data.

The key mechanism underlying the flexible categorization regime are the transitions between attractor states which, functionally, can be viewed as changes of mind5,49. Changes of mind have been previously inferred from sudden switches in the direction of the motor response5,49 but also from decision bound crossings of the decision variable read out from neuronal population recordings50,51,52,53. In reaction time tasks, an extension of the drift diffusion model can fit the modulation of the probability of observing a change of mind as a function of the mean stimulus strength5. In this model, a first crossing of the decision bound initializes the response that is reversed if the decision variable crosses the opposite bound before the motor response is completed. As in the DWM, this model predicts that correcting changes of mind are more likely than error changes of mind. However, this asymmetry does not imply a non-monotonic accuracy with the stimulus fluctuations in a fixed duration task. This is because in the linear DDM with changes of mind5, the correcting transition probability pC is not exponentially more likely than error transitions as in the DWM (Eq. 20). Thus, the benefit of having more correcting transitions as σS increases does not offset the cost of decreasing the signal-to-noise ratio (not shown). An attractor network has also been used previously to explain changes of mind during the motor response54. Our work extends this study in several ways, by characterizing the full spectrum of integration regimes in the attractor model and by showing qualitative experimentally testable signatures of decision state transitions (e.g., non-monotonicity in the accuracy and coherence versus σS). One interesting question is whether correcting changes of mind could generate similar nonlinear effects as those reported here (Fig. 3a, b) in tasks with n > 2 choices. A preliminary analysis using rate-based networks suggests that this is in fact the case (Supplementary Fig. 5). We simulated rate networks composed of n excitatory populations competing with each other via mutual inhibition and found that in the winner-take-all regime, strong stimulus fluctuations causing attractor transitions could have a beneficial effect and yield a non-monotonic psychometric curve P(σS) (Supplementary Fig. 5c). Thus, although a more detailed analysis of these multiple-choice networks is needed, these examples suggest that the asymmetry between correcting and error transitions underlying the raise in accuracy with σS, was a general mechanism that may be in play in tasks with more than two choices.

An important question in perceptual decision making is the extent to which subjects can integrate evidence during the stimulus presentation. It has been recently pointed out that differentiating between integrating and non integrating strategies may be more difficult than naively thought55. Here we evaluate the degree of evidence integration using the PK area. In the flexible categorization regime this area is maximum, and the DWM can integrate a large fraction of stimulus fluctuations (Fig. 2f). Indeed, we have shown that in this regime, the spiking network model, built of neural units with time-constants of 20 ms, could generate transitions by integrating fluctuations over hundreds of milliseconds (Supplementary Fig. 2b). Further work would be required to quantitatively characterize the emergence of this slow integration time-scale. The PK area however, is not a measure of accuracy, when accuracy is defined as the ability to discriminate the sign of the mean stimulus evidence, μ. Thus, the accuracy in the DWM is maximal for σS ≈ 0 (Fig. 3b) but the area is close to zero (Fig. 2f). This mismatch simply reflects that, in the absence of internal noise, the task does not require integration of the stimulus fluctuations. However, if we only considered stimuli with μ = 0 and we defined the stimulus category based on the sign of stimulus integral, the accuracy would be strongly correlated with the PK area and it would be maximal in the flexible categorization regime.

Finally, equipped with the theoretical results on the attractor model, we have revisited two psychophysical studies seeking for signatures of attractor dynamics. With the data from the first study34, we have tested a key prediction of the attractor models and have shown that the DWM can readily fit the crossover from primacy, to flexible categorization, to recency observed in the data as stimulus duration increases. This fit shows that the behavioral data in this task is consistent with the presence of transitions between attractor states during the perceptual categorization process (Fig. 6). We used psychophysical data from two other studies35,40, to show that in a regime close to the flexible categorization the DWM could fit the categorization accuracy as a function of stimulus strength for all memory periods (Fig. 7). Thus, the described asymmetry between correcting and error transitions allowed the DWM to combine evidence from the two pulses and yield a higher accuracy than a single pulse, just like subjects did (Fig. 7b, compare single vs two pulse trials using the same coherence, e.g., 6.4% versus 6.4 + 6.4%). Models that assume perfect integration of evidence can generally store a parametric value in short-term memory but they are susceptible to undergoing diffusion over time, causing a drop in memory precision as the delay increases56,57. In contrast, the fact that the accuracy did not decrease with delay duration suggests that the information stored in memory could be categorical instead of parametric58,59, a feature naturally captured by the DWM (Fig. 7d). Alternatively, it could reflect a parametric memory with negligible internal noise60. Interpreting neural recordings can also be non-conclusive as different areas can simultaneously represent stimulus information with different levels of categorization61. To overcome these shortcomings in understanding whether the stored information is categorical or parametric, we propose an experiment that combining electrophysiology with psychophysics can qualitatively distinguish between these two alternatives (see Supplementary Fig. 6). An alternative version of the DDMA model where the sensitivity to the second pulse was larger than to the first one could also account for the combination of the two pulses40. This feature captured the slight recency effect found in the data, but it left unanswered the key question of why the subjects did not use their maximum sensitivity during the first pulse. In total, our findings provide evidence that an attractor model, working in the flexible categorization regime, can capture aspects of the data that were previously viewed as incompatible with its dynamics, and propose a series of testable predictions that may further shed light onto the brain dynamics during sensory evidence integration.

Methods

Model simulations

For all simulations, we solve the diffusion Eq. 2 using the Euler method:

with ∆t = τ/40. The time constant \(\tau\) of the DWM was chosen to be 200 ms to represent the effective integration time constant that emerges from the dynamics of a network19.

We summarized the parameters used in each figure in Table 1 (Supplementary Information).

In Fig. 4, we use stimuli with exactly zero integrated evidence, \({\int} {S\left( t \right)dt = 0}\). For each stimulus i, we first created a stream of normal random variables yi(t). Then we z-score y and we multiplied by σS:

After this transformation, the mean and standard deviation of Si are exactly 0 and σS respectively.

Psychophysical kernel

We measure the impact of stimulus fluctuations during the course of the trial on the eventual decision by means of the so-called PK. Put simply, given a fixed mean signal, some stimulus realizations may favor a rightward choice (say a positive decision variable) and others a leftward one. If this is the case, and we sort the stimuli over many trials by decision, we will see a clear separation which can be quantified via a ROC analysis. Mathematically, for each trial i, we subtract the mean evidence (μi) of each trial si(t) = μi + σSξi to avoid that the distributions of stimuli that produce left and right choices are trivially separated by their mean evidence:

Thus \(\widehat s_i\left( t \right) = \sigma _{\mathrm{s}}\xi _{\mathrm{i}}\) are simply the stimulus fluctuations. Then, for each time t, we compute the probability distribution function of the stimuli that produce a right (\(f\left( {\widehat s_{\mathrm{R}}\left( t \right)} \right)\)) or left (\(f\left( {\widehat s_{\mathrm{L}}\left( t \right)} \right)\)) choice. The PK is the temporal evolution of the area under the ROC curve between these two distributions

Normalized PK area and slope

In order to quantify the magnitude and the shape of a PK, we defined two measures, the PK area and the PK slope:

1) The normalized PK area is a measure of the overall impact of stimulus fluctuations on the upcoming decision, it ranges from 0 (no impact) to 1 (the stimulus fluctuations are perfectly integrated to make a choice). It is defined as

where T is the stimulus duration. NPKA is the PK area normalized by the PK area of a PI in the absence of internal noise (σi = 0), i.e., an ideal observer.

2) The normalized PK slope is the slope of a linear regression of the PK, normalized between −1 (decaying PK, primacy) to +1 (increasing PK, recency). Because we wanted the PK slope to quantify the shape of the PK rather than its magnitude (which is captured by the PK area), we first normalized the PK to have unit area,

where T is the stimulus duration. We then fit the NPK with a linear function of time,

where β1 is the PK slope and \(k = \frac{1}{{2 \cdot var\left( t \right)}}\) is a factor that normalizes the PK slope to the interval (−1, +1).

Accuracy for the DWM

To compute the accuracy for the DWM, we assume that the time spent in the unstable region is much shorter than the time spent in one of the attractors. This assumption allows us to treat the system as a Continuous Markov Chain (CMC) with only two possible states correct and error. The first step is to compute the probability of first visiting the correct attractor which will be used as the initial state of the CMC62

where φ is the potential in Eq. 3, xC and xE are the x values of the correct and error attractors whereas x0 = 0 is the initial position of x. The integrals of P0 can be computed assuming that the term x4 is very small for values of \(x_0 \simeq 0\):

The second step is to compute the correcting and error transition rates37,62

where xU is the x position at the unstable state. These are the transition rates of a Continuous Markov Chain with only two states: correct and incorrect. The probability of making a correcting and error-generating transition during a trial are63:

where k = kC + kE, T is the stimulus duration and \(P_\infty = \frac{{k_{\mathrm{C}}}}{{k_{\mathrm{C}} + k_{\mathrm{E}}}}\)is the probability of the stationary state being the correct one (T→∞). Finally, the probability of being in the correct attractor given the model and stimulus parameters is

The probability of correct is the probability to first visit the correct attractor and remain in it (P0(1−pE)) plus the probability to first visit the error attractor and correct the initial decision ((1−P0)pC). To be more quantitative, we can compute the ratio between the probability of a correcting (Eq. 17) and an error-generating transition (Eq. 18):

For small values of the mean signal \(\mu < < 1\), we can rewrite the ratio between the correcting and error-generating transitions as a function of the potential parameters. To this aim we compute the fixed points of order \(\vartheta \left( {\varepsilon ^2} \right)\) using \(\mu = {\it{\varepsilon }}\overline \mu\) where \(\overline \mu\) is a parameter of order 1 and x = x0 + εx1:

where xU is the x position of the unstable state (note that xU = 0 when μ = 0) and xC(xE) is the position of the correct (error) attractor. Using these fixed points, the ratio between the correcting and error-generating transitions is

Which shows that the ratio between correcting transitions and error-generating ones increases exponentially with the mean stimulus (μ) as long as stimulus fluctuations are not too large. These probabilities are illustrated in Fig. 3c, pC increases steeply as a function of stimulus fluctuations even before pE reaches non-negligible values and for large stimulus fluctuations both probabilities tend to 0.5.

To find the maximum of the accuracy, we derive Eq. 19 respect to σ:

where we rewrite Eq. 19 as a function of pC and P0. From this equation, it can be shown (see Supplementary Information) that the local maximum in accuracy is

and the critical value of μ above which the accuracy decreases monotonically with the stimulus fluctuations is

Spiking network

Network model

In Fig. 5 we consider a network of randomly connected current-based integrate-and-fire neurons, similar to28. The conductance-based all-to-all connected network shown in Supplementary Fig. 4 was exactly the original network model presented in ref. 19. The current-based network consists of two populations of excitatory neurons (A and B), both of which are recurrently coupled between them and to a population of inhibitory interneurons (I). We study the case in which the system is near a steady bifurcation to a winner-take-all state. It is in the vicinity of the bifurcation that the dynamics of the network can be captured in a one-dimensional amplitude equation which describes the slow evolution along the critical manifold28. The evolution of the membrane potential \(V_i^X\left( t \right)\) from the i-th neuron in population X is given by:

where the synaptic input voltages of the form IXY indicate interactions from neurons in population Y to neurons in population X, while external synaptic inputs are given by IXext. The synaptic inputs are sums over all postsynaptic potentials (PSPs), modeled as exponential functions with a delay. The synaptic inputs take the form

The dynamics of excitatory and inhibitory synapses are described by

After the presynaptic neuron j fires a spike at time \(t_k^{XY}\), the corresponding dynamic variable is incremented by one at \(t_k^{XY} + \delta _k^Y\), that is after a delay \(\delta _k^Y\).

External synapses have instantaneous dynamics

i.e., a presynaptic action potential from neuron j of the external population at time \(t_{k,j}^{Xext}\) results in an instantaneous jump of the external synaptic input variable. A spike is emitted whenever the voltage of a cell from an excitatory (inhibitory) population crosses a value Θ, after which it is reset to a reset potential Er.

We consider the case of sparse random connectivity for which, on average, each neuron from population X receives a total of CXY synapses from population Y. The pairwise probability of connection is thus \({\it{\epsilon }}_{XY} = C_{XY}/N_Y\), where NA = NB= NE and NI are the number of neurons in the respective populations. For nonzero synapses we choose \(J_{ij}^{AA} = J_{ij}^{BB} = J_{EE}\), \(J_{ij}^{IA} = J_{ij}^{IB} = J_{IE}\) and \(J_{ij}^{AI} = J_{ij}^{BI} = J_{EI}\).

The stimulus input current is modeled similar to23, with the exact same stimulus input being injected to each neuron in each of the two excitatory populations. The stimulus input onto each of the excitatory populations A and B is given by

where the first term describes the mean stimulus input onto each population and the second term the temporal modulations of the stimulus with standard deviation σstim. The term μ parametrizes the mean difference of the two stimulus inputs and it captures the amount of net stimulus evidence favoring one choice over the other (i.e., μ = 0 represents an ambiguous stimulus with zero mean sensory evidence). Finally, zA(t) and zB(t) are independent realizations of an Ornstein–Uhlenbeck process, defined by \(\tau _{stim}\frac{{dz}}{{dt}} = - z + \sqrt {2\tau _{stim}} \xi \left( t \right)\), where ξ(t) is Gaussian white noise (mean 0, variance dt).

Simulation details

The network model was implemented in Python 3 using the Brian 2 simulator version 2.364. We used the Euler integration method with a time step of 0.1 ms. We simulated fixed duration trials of varying stimulus duration. Stimulus presentation was preceded by a 500 ms interval to prevent transient effects due to initial conditions. The choice outcome of the network was determined by the neural population with a higher population firing rate over the last 100 ms of the stimulus period. Results for a given stimulus condition (σS and T) are based on 5000 trials using different realizations of the network connectivity, random initial conditions as well as different realizations of the external background inputs into each circuit. The value of all the parameters can be found in Table 2 (Supplementary Information)

Psychophysical data and model fitting

In Fig. 6, we used data from experiments 1 and 4 from34 with a total of N = 21 humans subjects (N = 13 in experiment 1 and N = 8 in experiment 4). The data can be accessed here: https://doi.org/10.1371/journal.pcbi.1004667. The stimuli consisted of two brightness-fluctuating round disks. In each stimulus frame (duration 100 ms), the brightness level of each disk was updated from one of two generative Gaussian distributions that had the same variance but different mean: either one distribution had a high mean value and the second a low value or vice versa. At the end of the stimulus, the subjects had to report the disk with a higher overall brightness (i.e., which disc corresponded to the generative distribution with higher mean). Incorrect responses were followed by an auditory feedback. Trials were separated into five equal length segments, in 80% of the trials, a congruent or incongruent pulse of evidence was presented at a random segment. This increase or decrease of evidence was corrected in the rest of the segments and as a consequence the stimuli were anticorrelated. In experiment 1 stimuli with 1, 2, or 3 s duration were presented in blocks of 60 trials whereas in experiment 4, the stimulus duration was 5 s. We computed the PK using the procedure described above (see section Psychophysical kernel) but first computing the difference in brightness of the two disks. We also subtracted the mean difference in order to have a one-dimensional stimulus trace with zero mean. Namely

where \(S_{\mathrm{L}}^i\left( t \right)\) is the brightness of the t-th frame of the left disk during the i-th trial and \(\mu _{\mathrm{L}}^i\)is the mean of the generative Gaussian distribution for the left disc in the i-th trial. We computed the PKs standard error of the mean using bootstrap with 1000 repetitions.

To compute the PK of the DWM we simulated Eq. 6 using stimuli with the exact same temporal fluctuations in evidence than the stimuli presented to the subjects. We modeled it by updating μi(t) from Eq. 3 with the difference in brightness at each time between the right and left disk:

Note that in this framework the stimulus fluctuations were set to zero σS = 0 because σS was captured inside μi(t). The DWM parameters (α = −0.8, σI = 0.3, and τ = 200 ms) were tuned to account for the change from primacy to recency with the stimulus duration.

Primacy-recency index for the two pulses trials

In Fig. 7, we define the primacy-recency index

where β1 and β2 are the coefficients of a logistic regression with the coherence of the first and second pulse as predictors:

Similar to the Normalized PK slope, the primacy-recency index ranges from −1 (primacy) to 1 (recency).

DWM fitting

In Fig. 7, we use data from two studies performing the same experiments35,40. We extract the accuracy of the subjects directly from the paper figures (with GraphClick, a software to extract data from graphs) and the number of trials from the methods of the papers. We pool the data from the two experiment and we compute the mean accuracy in each condition i as

where Ni is the number of trials in condition i, the data with superindex K and T were extracted from35 and40 respectively. The 95% confidence interval of Pi is:

In these experiments, the human subjects had to discriminate between left and right motion direction of a random dots stimulus. The experimenters interleaved trials with one and two pulses of 120 ms. For single pulse trials the possible coherence levels were 0, 3.2, 6.4, 12.8, 25.6, and 51.2%. For double pulse trials, the pulses were separated by a delay of 0, 120, 360, or 1080 ms and the coherences were randomly chosen from 3.2, 6.4, and 12.8% (nine different coherence sequences). In both papers, they reported that the subjects’ accuracy in double pulses trials was independent of the delay. Thus we assume that, in the DWM, the internal noise was too small to drive transitions during the delay and we pool the data across delays to compute the accuracy for each coherence sequence. We fit the model by maximizing the log-likelihood (Nelder–Mead algorithm):

where NC,i and NE,i are the number of correct and error trials for each coherence sequence i whereas Pi is the accuracy for sequence i predicted by the DWM.

For single pulse trials, we computed Pi as

where P0, pC, and pE were computed using Eqs. 14, 17, and 18 whereas the super index indicates the pulse number. Note that we are assuming that the time spent for the decision variable in the unstable state is short compared with the pulse duration. With this assumption, the decision variable starts in the correct attractor with probability P0. Similarly for double pulse trials the probability of correct is:

The potential and the diffusion equation can be written as

where μ is a linear scaling of the coherence to x units (μ = kcoh) and σ represents the two sources of noise, the internal noise and the stimulus fluctuations \(\sigma = \sqrt {\sigma _{\mathrm{I}} + \sigma _{\mathrm{S}}}\). The two sources of noise cannot be fitted separately because the only difference between them is that the internal noise is also activated during the delay (Fig. 7a). But internal noise does not have any impact during the delay. Thus it is impossible to distinguish σI in the range \(\left( {0,\sigma _{\mathrm{I}}^{max}} \right)\) where \(\sigma _{\mathrm{I}}^{max}\)is the maximum σI without transitions during the delay. For this reason, we assume that there are no transitions during the delay and we only fit the total noise σ. The parameters that maximize Eq. 42 and their 95% confidence interval are k* = 0.012 ± 0.0011, α* = 0.70 ± 0.05, σ* = 0.52 ± 0.05, and τ* = 3.3 ± 0.5. To compute the confidence intervals, we assume that the likelihood function around the best-fit parameters is a multi-dimensional Gaussian. Then the confidence intervals are two times the diagonal of the inverse of the Hessian matrix17,65. The Hessian matrix is the matrix of second derivatives and we compute it numerically using the finite difference method.

Although we cannot fit the internal and the stimulus sources of noise separately, we can study the range of internal noise \(\left( {0,\sigma _{\mathrm{I}}^{max}} \right)\) that produces a negligible number of transitions (<1%) during the delay (up to 1 s) and thus is compatible with the psychophysical data. For the parameters that maximize the likelihood this range is (0, 0.32), indicating that the DWM is robust to perturbations during the delay even when the magnitude of the internal noise represents a substantial part of the total noise \(\left( {\sigma _{\mathrm{I}}^{max}/\sigma _{\mathrm{S}}= 0.8} \right)\)(Fig. 7d). We also compute the accuracy of the PI as a function of the delay (Fig. 7d). To be able to compare both models, we adjust the scaling factor of the evidence to match subjects’ accuracy for the shortest delay (μPI = 0.44k×coh where k is the scaling of the DWM), and we use the parameters τ, σS, and σi that maximize the DWM.

Model for n-choice decision making

To model a categorization task with n = 3 choices (Supplementary Fig. 5) we simulated a system of standard nonlinear coupled rate equations (see e.g., Equation (38) in66):

where ξi is a Gaussian white noise process with amplitude σ for i = 1,2,3 and amplitude σI for i = I, and with transfer function ϕ(x) = 0 for x < 0, ϕ(x) = x2 for 0 ≤ x ≤ 1 and \(\phi \left( x \right) = 2\sqrt {x - 3/4}\) for x > 1. The n = 4 case is a simple extension of these equations (the general system of rate equations for n > 2 can be found in66). The parameters used for the simulations were s = 0.694, c = g = \(\sqrt 5\), τ = 20 ms, τI = 10 ms, II = 0, and σI = 0. For n = 3 the inputs were taken as I1 = I + 2∆I/3, I2 = I − ∆I/3, I3 = I − ∆I/3, while for n = 4 they were I1 = I + 3∆I/4, I2 = I − ∆I/4, I3 = I − ∆I/4, I4 = I − ∆I/4, with I = 2.25 and ∆I = 0.03*s. For the top panels in Supplementary Fig. 5 the values of the noise strength where σ = 0.18 and σ = 0.13 for n = 3 and 4, respectively. The accuracy was calculated as the fraction of trials (N = 10,000) in which the highest firing rate at the end of the trial was r1.

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

Data shown in Fig. 6 can be accessed here: https://doi.org/10.1371/journal.pcbi.1004667.

The data shown in Fig. 7 was extracted directly from the manuscripts35,40 using GraphClick.

Code availability

The codes to simulate the DWM and canonical models and generate the figures of the paper are available at https://bitbucket.org/delaRochaLab/flexible-categorization.

The code and analysis scripts for the spiking neural network simulations are available at https://github.com/wimmerlab/flexcat-spiking.

References

Ratcliff, R. A theory of memory retrieval. Psychological Rev. 85, 59–108 (1978).

Palmer, J., Huk, A. C. & Shadlen, M. N. The effect of stimulus strength on the speed and accuracy of a perceptual decision. J. Vis. 5, 376–404 (2005).

Bogacz, R., Hu, P. T., Holmes, P. J. & Cohen, J. D. Do humans produce the speed–accuracy trade-off that maximizes reward rate? Q. J. Exp. Psychol. 63, 863–891 (2010).

Urai, A. E., de Gee, J. W., Tsetsos, K. & Donner, T. H. Choice history biases subsequent evidence accumulation. Elife. 8, e46331 (2019).

Resulaj, A., Kiani, R., Wolpert, D. M. & Shadlen, M. N. Changes of mind in decision-making. Nature 461, 263–266 (2009).

Kiani, R., Corthell, L. & Shadlen, M. N. Choice certainty is informed by both evidence and decision time. Neuron 84, 1329–1342 (2014).

Pardo-Vazquez, J. L. et al. The mechanistic foundation of Weber’s law. Nat. Neurosci. 22, 1493–1502 (2019).

Kiani, R., Hanks, T. D. & Shadlen, M. N. Bounded integration in parietal cortex underlies decisions even when viewing duration is dictated by the environment. J. Neurosci. 28, 3017–3029 (2008).

Mazurek, M. E., Roitman, J. D., Ditterich, J. & Shadlen, M. N. A role for neural integrators in perceptual decision making. Cereb. Cortex 13, 1257–1269 (2003).

Zylberberg, A., Barttfeld, P. & Sigman, M. The construction of confidence in a perceptual decision. Front. Integr. Neurosci. 6, 79 (2012).

Yates, J. L., Park, I. M., Katz, L. N., Pillow, J. W. & Huk, A. C. Functional dissection of signal and noise in MT and LIP during decision-making. Nat. Neurosci. 20, 1285–1292 (2017).

Nienborg, H. & Cumming, B. G. Decision-related activity in sensory neurons reflects more than a neuron’s causal effect. Nature 459, 89–92 (2009).

Huk, A. C. & Shadlen, M. N. Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J. Neurosci. 25, 10420–10436 (2005).

Odoemene, O., Pisupati, S., Nguyen, H. & Churchland, A. K. Visual evidence accumulation guides decision-making in unrestrained mice. J. Neurosci. 38, 10143–10155 (2018).

Cheadle, S. et al. Adaptive gain control during human perceptual choice. Neuron 81, 1429–1441 (2014).

Wyart, V., Myers, N. E. & Summerfield, C. Neural mechanisms of human perceptual choice under focused and divided attention. J. Neurosci. 35, 3485–3498 (2015).

Brunton, B. W., Botvinick, M. M. & Brody, C. D. Rats and humans can optimally accumulate evidence for decision-making. Science 340, 95–98 (2013).

Zhang, J., Bogacz, R. & Holmes, P. A comparison of bounded diffusion models for choice in time controlled tasks. J. Math. Psychol. 53, 231–241 (2009).

Wang, X.-J. Probabilistic decision making by slow reverberation in cortical circuits. Neuron 36, 955–968 (2002).

Wong, K.-F. & Wang, X.-J. A recurrent network mechanism of time integration in perceptual decisions. J. Neurosci. 26, 1314–1328 (2006).

Lo, C.-C. & Wang, X.-J. Cortico-basal ganglia circuit mechanism for a decision threshold in reaction time tasks. Nat. Neurosci. 9, 956–963 (2006).

Fusi, S., Asaad, W. F., Miller, E. K. & Wang, X.-J. A neural circuit model of flexible sensorimotor mapping: learning and forgetting on multiple timescales. Neuron 54, 319–333 (2007).

Wimmer, K. et al. Sensory integration dynamics in a hierarchical network explains choice probabilities in cortical area MT. Nat. Commun. 6, 6177 (2015).

Engel, T. A., Chaisangmongkon, W., Freedman, D. J. & Wang, X.-J. Choice-correlated activity fluctuations underlie learning of neuronal category representation. Nat. Commun. 6, 6454 (2015).

Wilming, N., Murphy, P. R., Meyniel, F. & Donner, T. H. Large-scale dynamics of perceptual decision information across human cortex. Nat. Commun. 11, 5109 (2020).

Jaramillo, J., Mejias, J. F. & Wang, X.-J. Engagement of pulvino-cortical feedforward and feedback pathways in cognitive computations. Neuron 101, 321–336.e9 (2019).

Bonaiuto, J. J., Berker, Ade & Bestmann, S. Response repetition biases in human perceptual decisions are explained by activity decay in competitive attractor models. Elife. 5, e20047 (2016).

Roxin, A. & Ledberg, A. Neurobiological models of two-choice decision making can be reduced to a one-dimensional nonlinear diffusion equation. PLoS Comput. Biol. 4, e1000046 (2008).

Forstmann, B. U., Ratcliff, R. & Wagenmakers, E.-J. Sequential sampling models in cognitive neuroscience: advantages, applications, and extensions. Annu. Rev. Psychol. 67, 641–666 (2016).

Gold, J. I. & Shadlen, M. N. The neural basis of decision making. Annu. Rev. Neurosci. 30, 535–574 (2007).

Bogacz, R., Brown, E., Moehlis, J., Holmes, P. & Cohen, J. D. The physics of optimal decision making: a formal analysis of models of performance in two-alternative forced-choice tasks. Psychol. Rev. 113, 700–765 (2006).

Miller, P. & Katz, D. B. Accuracy and response-time distributions for decision-making: linear perfect integrators versus nonlinear attractor-based neural circuits. J. Comput. Neurosci. 35, 261–294 (2013).

Kawaguchi, K. et al. Differentiating between models of perceptual decision making using pupil size inferred confidence. J. Neurosci. 38, 8874–8888 (2018).

Bronfman, Z. Z., Brezis, N. & Usher, M. Non-monotonic temporal-weighting indicates a dynamically modulated evidence-integration mechanism. PLoS Comput. Biol. 12, e1004667 (2016).

Kiani, R., Churchland, A. K. & Shadlen, M. N. Integration of direction cues is invariant to the temporal gap between them. J. Neurosci. 33, 16483–16489 (2013).

Tsetsos, K., Gao, J., McClelland, J. L. & Usher, M. Using time-varying evidence to test models of decision dynamics: bounded diffusion vs. the leaky competing accumulator model. Front. Neurosci. 6, 79 (2012).

Kramers, H. A. Brownian motion in a field of force and the diffusion model of chemical reactions. Physica 7, 284–304 (1940).

Neri, P. & Levi, D. M. Receptive versus perceptive fields from the reverse-correlation viewpoint. Vis. Res. 46, 2465–2474 (2006).

Ratcliff, R., Voskuilen, C. & McKoon, G. Internal and external sources of variability in perceptual decision-making. Psychol. Rev. 125, 33–46 (2018).

Tohidi-Moghaddam, M., Zabbah, S., Olianezhad, F. & Ebrahimpour, R. Sequence-dependent sensitivity explains the accuracy of decisions when cues are separated with a gap. Atten. Percept. Psychophys. 81, 2745–2754 (2019).

Melcher, D., Crespi, S., Bruno, A. & Morrone, M. C. The role of attention in central and peripheral motion integration. Vis. Res. 44, 1367–1374 (2004).

Lange, R. D., Chattoraj, A., Beck, J. M., Yates, J. L. & Haefner, R. M. A confirmation bias in perceptual decision-making due to hierarchical approximate inference. Preprint at bioRxiv https://doi.org/10.1101/440321.

Keung, W., Hagen, T. A. & Wilson, R. C. A divisive model of evidence accumulation explains uneven weighting of evidence over time. Nat. Commun. 11, 2160 (2020).

Keung, W., Hagen, T. A. & Wilson, R. C. Regulation of evidence accumulation by pupil-linked arousal processes. Nat. Hum. Behav. 3, 636–645 (2019).

Eckhoff, P., Wong-Lin, K. F. & Holmes, P. Optimality and robustness of a biophysical decision-making model under norepinephrine modulation. J. Neurosci. 29, 4301–4311 (2009).

Miller, P. & Katz, D. B. Stochastic transitions between neural states in taste processing and decision-making. J. Neurosci. 30, 2559–2570 (2010).

Deco, G., Rolls, E. T. & Romo, R. Stochastic dynamics as a principle of brain function. Prog. Neurobiol. 88, 1–16 (2009).

Gammaitoni, L., Hänggi, P., Jung, P. & Marchesoni, F. Stochastic resonance. Rev. Mod. Phys. 70, 223–287 (1998).

van den Berg, R. et al. A common mechanism underlies changes of mind about decisions and confidence. Elife. 5, e12192 (2016).

Kiani, R., Cueva, C. J., Reppas, J. B. & Newsome, W. T. Dynamics of neural population responses in prefrontal cortex indicate changes of mind on single trials. Curr. Biol. 24, 1542–1547 (2014).