Abstract

In an evacuating crowd of social agents, the environment strongly affects the agents' behavior and decisions. When facing an uncertain environment, agents' behavior and decisions depend heavily on how they perceive that environment. Cooperation between agents and their perception of the environment may therefore coexist and interact during evacuation. Here we establish a mechanism to analyze the coevolution between the cooperation of agents and the perception of environment. Agents occupy a regular square lattice with periodic boundaries, and two payoff matrices describe the games played between neighbors in safe and dangerous environments, respectively. Each agent adjusts its perception by interacting with neighboring agents. When the environment is generally considered dangerous, the fraction of cooperators remains high even when the temptation b is very large. When all agents regard the environment as safe, the fraction of cooperators decreases as b increases.


Introduction

Cooperation is readily observed in nature and in human society. For example, ants share food and information, badgers and coyotes hunt together, and humans work together. Cooperation can give a group great power and earn its members more reward than working alone. However, according to Darwin's theory of natural selection, cooperation conflicts with individual selfishness. Therefore, understanding the emergence and evolution of cooperative behavior remains a challenging task.

A pioneering step toward understanding how cooperative behavior evolves was the establishment of evolutionary game theory1,2,3,4,5, which provides a theoretical framework for addressing the conflict between cooperation and selfishness. Over the past decades, the prisoner's dilemma (PD) game6 has been regarded as a paradigm for expressing human relations in society and analyzing cooperation. In each round of a PD game, two players interact, and each can choose to cooperate or defect; their payoffs depend on both choices. The reward for mutual cooperation is R, and the punishment for mutual defection is P. If one player cooperates and the other defects, the cooperator receives the sucker's payoff S and the defector receives the temptation to defect T. In the PD game, T > R > P > S. Hence defection maximizes an individual player's payoff whatever the opponent does. But if all players defect, the total reward is very small. Conversely, cooperation increases the total reward, although it is not the optimal choice for an individual player.
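As a minimal illustration of the payoff ordering just described, the Python sketch below uses Axelrod's classic values T = 5, R = 3, P = 1, S = 0. These values are chosen here only for illustration and are not the values used in this report. The sketch checks that defection is the better reply to either move, while mutual cooperation yields the largest joint payoff. The second property also requires 2R > T + S, which holds for these values.

```python
# Illustrative PD payoffs with T > R > P > S (Axelrod's classic values; any
# values with this ordering and 2R > T + S behave the same way here).
T, R, P, S = 5, 3, 1, 0

def payoff(me: str, other: str) -> int:
    """Payoff to a player choosing `me` ('C' or 'D') against `other`."""
    table = {("C", "C"): R, ("C", "D"): S, ("D", "C"): T, ("D", "D"): P}
    return table[(me, other)]

# Defection is the better reply whatever the opponent does (T > R and P > S)...
defection_dominates = all(payoff("D", o) > payoff("C", o) for o in "CD")

# ...yet mutual cooperation gives the largest total payoff of the pair.
totals = {(a, b): payoff(a, b) + payoff(b, a) for a in "CD" for b in "CD"}
best_pair = max(totals, key=totals.get)

print(defection_dominates, best_pair, totals[best_pair])  # True ('C', 'C') 6
```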

Spatial structure can also affect the evolution of cooperative behavior. In 1992, Nowak and May showed that structured populations with nearest-neighbor interactions can promote cooperation, whereas well-mixed populations lead to defection7. Subsequently, several works studied the PD on networks such as small-world8,9 and scale-free10,11,12 networks. Besides spatial complex networks, many studies have proposed other mechanisms that promote the evolution of cooperation. For example, Nowak reviewed five rules for the evolution of cooperation (namely kin selection, direct reciprocity, indirect reciprocity, network reciprocity, and group selection)13. Additionally, evolutionary games with coevolutionary rules, initiated by Zimmermann et al.14,15, offer a new way to promote cooperation in social dilemmas. Coevolutionary rules may better reflect realistic situations, in which strategies evolve over time while other factors, such as links16,17,18,19, size20,21, mobility22,23,24, and age25,26, are updated at the same time and in turn affect the evolution of strategies.

Recently, coevolutionary multigames with different rules and structures have been discussed. Motivated by the fact that different players can perceive the same social dilemma differently, Wang et al.27 studied evolutionary multigames in structured populations. Chen and Wang28 adopted a stochastic learning updating rule to investigate the evolution of cooperation in the PD game on small-world networks with different payoff aspiration levels. Wu and Rong29 studied the evolution of cooperation in spatial PD games with and without extortion by adopting an aspiration-driven strategy updating rule. Traulsen and Claussen30 analyzed cooperation based on similarity between players. Szolnoki and Perc31 introduced coevolutionary success-driven multigames in structured populations.

Besides the factors mentioned above, the environment is another factor that strongly affects cooperative behavior. Humans are always embedded in some environment, and even the same person may behave and decide differently in different environments. The impact of the environment on people therefore cannot be ignored in games. Some studies suggest that the environment can have a positive impact on the evolution of cooperation in the PD32,33,34,35. However, these studies considered only objective environmental factors, such as the links and mobility mentioned above. We want to know whether people's subjective perception of the environment affects the evolution of cooperation. If an individual thinks that the current environment is dangerous, will he or she still play a PD game?

In realistic situations, agents also differ in their ability to perceive the environment. This heterogeneity may lead to different perceptions of the same environment: in the same scene, some agents consider the current environment relatively safe, while others consider it dangerous. As a result, different agents adopt different strategies and behave differently in a game. In turn, interactions between neighbors and their behavior can also affect their perceptions. This suggests that there may be some kind of coevolution between environmental perception and cooperative behavior. If such coevolution exists, how does it proceed?

In this report, we consider this kind of coevolution. Based on evolutionary game theory, we establish a mechanism for the coevolution of perception and cooperative behavior, in which agents simultaneously update their strategies and perceptions by learning from more successful neighbors. Using this mechanism, we study cooperative behavior on a regular square lattice with periodic boundary conditions. First, we place the population on the lattice. Based on their current strategies and perceptions of the environment, agents choose a payoff matrix and calculate their utilities. Then, with a certain probability, each agent updates its strategy and perception by learning from a randomly chosen neighbor that is more successful. Finally, using Monte Carlo simulations, we record the fraction of cooperators and the fraction of agents who consider the environment safe. We find that when the environment is considered dangerous, the fraction of cooperators remains high.

Results

To observe cooperation and perception in the population, we record the fraction of cooperators (c) and the fraction of safe agents (s), that is, agents who consider the environment safe. As described in the Methods section, agents whose perception (θ) is less than the threshold (θth) consider the current environment safe; otherwise, they consider it dangerous.

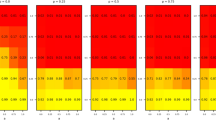

To begin with, we examine the effect of the threshold on the fraction of cooperators. Figure 1 shows how the fraction of cooperators c varies with θth for different values of the temptation parameter b. For each curve, c stays at 1 and then gradually decreases to a stable value. This stable value decreases as b increases, which is consistent with the traditional case7. Thus, when the current environment is considered safe, people tend to mind their own business and do not care about others, which can be regarded as a typical PD game. Conversely, when the current environment is considered dangerous, a person may feel that his or her strength is limited and that little can be gained alone, so more people choose to cooperate and overcome the difficulties together.

Second, Figure 2 plots s against θth. For b = 1, the two payoff matrices are very similar, so the game between agents is almost unaffected by their perceptions. When the threshold is small, all agents consider the environment dangerous, which may promote cooperation, consistent with Figure 1. When b is close to 1 (for example, b = 1.05), s varies with θth more like the b = 1 case than it does for b = 1.1 and b = 1.15, apart from a sudden rise at θth = 0.63. This abrupt rise also appears for b = 1.1 and b = 1.15. Because the game at b = 1 is almost unaffected by perception, the system is insensitive to the threshold. For b > 1, by contrast, the system is sensitive to the threshold and has a critical point at θth = 0.63, where s increases abruptly. Figure 2 also shows that where s first rises from 0 (θth = 0.30), the smaller b is, the more s increases. For b = 1, s grows almost linearly with θth, implying that the system as a whole depends little on environmental perception. When θth is small, s is approximately 0: almost all agents agree that the environment is dangerous and assimilate those who consider it safe. The closer b is to 1, the smaller the difference between danger and safety, so θth has little effect on the system, and thus we observe monotonic behavior. When b is large (for example, b = 1.2 and b = 1.25), the temptation to defect leads more agents to defect, and even for moderate thresholds (0.5–0.6) agents who consider the environment safe form the majority. However, we observe that s ≈ 0 at b = 1.1 and b = 1.15. Therefore, the agents' behavior does influence their perceptions.

Figure 3 shows the time evolution of c and s in a single run with b = 1.2. It also shows that the larger θth is, the smaller c and the larger s become. This figure reveals the negative feedback mechanism in the network16: c decreases during the first 100 steps and then increases. Initially, cooperators and defectors are equally numerous, and in this PD game agents defect to obtain higher payoffs. After about 100 steps, defectors are so numerous that defection can no longer earn more. At this point, cooperators and defectors receive the same payoff when playing against a defector (P = S = 0), whereas mutual cooperation yields R = 1, so the fraction of cooperators gradually increases and finally stabilizes. When θth is moderate, we also observe that s decreases after an initial increase, as in Figure 2. For agents who consider the environment dangerous, the best choice is to cooperate, whereas the others choose to defect. Hence, the greater θth is, the more c decreases at the beginning. When b = 1.2 and θth ≥ 0.71, all agents consider the environment safe. This reduces to the traditional case, in which c finally settles at a low level.

Like Figure 3, Figure 4 also illustrates the negative feedback mechanism in the network. From left to right, the panels show Monte Carlo steps 0, 100, 1000 and 60000. From top to bottom, the thresholds are 0.67 and 0.69. Agents considering the environment safe (s) and agents considering it dangerous (d) are randomly distributed on the lattice. By step 100, the number of d agents has clearly decreased. By step 1000, the yellow area has expanded again. Finally, by step 60000, the network has reached a stable state. At θth = 0.69, no d agents remain, implying that all agents consider the current environment safe. At θth = 0.67, s and d agents coexist. As Figure 4 shows, s and d agents are dispersed, with no obvious clusters of either type. This enlarges the interface between s and d agents, so agents can switch between the two states, and d agents are never surrounded and eliminated by s agents.

To check the robustness of the results, we also ran the simulations on square lattices with degree 4 and degree 8. Figures 5 and 6 show the results for four neighbors. They are consistent with Figures 1 and 2, which demonstrates the robustness of the mechanism.
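The two neighborhoods compared here can be sketched as follows. This is a minimal Python illustration, not the authors' code. The names MOORE, VON_NEUMANN, neighbors and utility are hypothetical, strategies are encoded as 1 (C) and 0 (D), and each agent is assumed to score its games with its own payoff matrix. That matrix is chosen by the rule stated in the Methods: θx > θth is read as safe and uses temptation b0; otherwise the agent uses b1.

```python
# Neighborhood offsets on a square lattice: degree 8 (Moore) and degree 4 (von Neumann).
MOORE = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
VON_NEUMANN = [(-1, 0), (1, 0), (0, -1), (0, 1)]

def neighbors(i, j, L, offsets=MOORE):
    """Neighbors of site (i, j) on an L x L lattice with periodic boundaries."""
    return [((i + di) % L, (j + dj) % L) for di, dj in offsets]

def utility(i, j, strategy, theta, theta_th, b0, b1, offsets=MOORE):
    """Total payoff U of agent (i, j) from one game with each neighbor.

    strategy[i][j] is 1 (C) or 0 (D); R = 1 and S = P = 0 in both games.
    Assumption: the focal agent scores with its own matrix, picked by
    theta > theta_th (read as 'safe', temptation b0), else temptation b1.
    """
    L = len(strategy)
    T = b0 if theta[i][j] > theta_th else b1
    me = strategy[i][j]
    total = 0.0
    for ni, nj in neighbors(i, j, L, offsets):
        other = strategy[ni][nj]
        if me == 1:
            total += 1.0 if other == 1 else 0.0   # R or S
        else:
            total += T if other == 1 else 0.0     # T or P
    return total

# Usage: a lone defector among cooperators on a 5 x 5 torus, all reading 'safe'.
L = 5
strategy = [[1] * L for _ in range(L)]
strategy[2][2] = 0
theta = [[0.9] * L for _ in range(L)]
print(utility(2, 2, strategy, theta, 0.5, 1.2, 0.9))               # 8 * b0, about 9.6
print(utility(2, 2, strategy, theta, 0.5, 1.2, 0.9, VON_NEUMANN))  # 4 * b0, about 4.8
```

Working with explicit offset lists keeps the degree of the lattice a single argument, so the same payoff code serves both the degree-8 and degree-4 runs.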

Discussion

In this report, we study the coevolution of environmental perception and cooperative behavior, especially its impact on the fraction of cooperators and the fraction of agents who consider the environment safe. We find that the fraction of cooperators increases greatly when the environment is considered dangerous. An agent's perception of the environment affects the strategy it adopts, and in turn, the agent's strategy and payoff also affect its perception. Such a coevolutionary mechanism could be more realistic. We also discuss the negative feedback mechanism16 in our model, which explains the evolution of cooperative behavior. However, the idealized coevolution mechanism we built cannot reveal the essential relationship between perception and behavior. Moreover, all agents are assumed to follow the same rules in the simulation, which does not reflect the diversity of individuals within the crowd.

We believe that it is also necessary to study the role of individual subjective factors in the evolution of cooperation. In the literature, many works focus mainly on objective factors such as links, age, mobility and network structure. However, subjective perception of the environment is also a vital factor that cannot be ignored in crowd evacuation. An individual's subjective feelings differ across circumstances, and so may his or her decisions. In addition, even in the same environment, different individuals do not feel exactly the same. Paying attention to individuals' subjective feelings helps us better understand their motivation to cooperate. When an individual makes a decision, the environment is one of the most important factors affecting it. Individuals may be persuaded by their neighbors in order to obtain greater payoffs, or they may choose their own strategies based on the urgency of the current environment. Therefore, studying the impact of environmental perception on cooperation may help reveal how cooperation evolves.

We plan to improve this work in the future. In this report, the cognition level of a safe environment is fixed in each run; that is, all agents share the same threshold for perceiving the environment as safe. However, a common threshold cannot reflect heterogeneity arising from agents' knowledge or experience. The threshold could therefore be assigned by some rule, for example drawn at random from a binary, uniform or Gaussian distribution. More generally, the threshold can also be represented by an interval \([{\underline{\theta }}_{th},\,{\overline{\theta }}_{th}]\). If a neighbor's perception is not in agent x's threshold interval, agent x will not cooperate with this neighbor36. This is in line with the hypothesis of kin selection: when choosing partners, individuals prefer similar individuals as much as possible so that their own genes are passed on. It is also consistent with the bounded confidence models of opinion dynamics37,38.
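The heterogeneous thresholds and the interval rule proposed above could be prototyped along the following lines. This Python sketch is purely illustrative. The functions sample_threshold and will_cooperate_with are hypothetical, as is every parameter value: the two binary levels, the uniform range, and the Gaussian mean and spread. None of these are part of the present model.

```python
import random

def sample_threshold(kind, rng, low=0.3, high=0.7, mu=0.5, sigma=0.1):
    """Draw one agent's threshold; all parameter values here are hypothetical."""
    if kind == "binary":
        return rng.choice([low, high])                    # one of two levels
    if kind == "uniform":
        return rng.uniform(low, high)
    if kind == "gauss":
        return min(1.0, max(0.0, rng.gauss(mu, sigma)))  # clipped to [0, 1]
    raise ValueError(f"unknown distribution: {kind}")

def will_cooperate_with(theta_neighbor, lower, upper):
    """Interval rule: x cooperates with y only if y's perception lies in x's interval."""
    return lower <= theta_neighbor <= upper

rng = random.Random(7)
thresholds = [sample_threshold("gauss", rng) for _ in range(5)]
print(thresholds)
print(will_cooperate_with(0.55, 0.4, 0.6), will_cooperate_with(0.8, 0.4, 0.6))  # True False
```

Clipping the Gaussian draw keeps every threshold inside the perception range [0, 1], so it remains comparable with θx.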

Note that agents cooperate with others because they can obtain the reward for mutual cooperation. However, some agents may cooperate without reciprocity36, helping and donating to others even though they gain nothing; such behavior is called altruistic. Altruistic behavior does exist in nature: for example, a male mantis may be eaten by the female after mating. In human society, people may also behave altruistically because of their educational background, moral standards, love and so on. Since reciprocal cooperation and altruism coexist in evacuation, we will consider both in follow-up work. Moreover, not every individual in the game chooses to cooperate or defect; some cannot make up their minds. At the beginning of the game, not all individuals immediately choose reciprocity, altruism or defection, and they may not know what to choose. We call this a zero status: the agent waits to see what happens. An individual with zero status may join the game at any time, and individuals may also withdraw from the game at any time because of diminishing payoffs. This assumption is more realistic than assuming that everyone takes part in the game.

In the Methods section, the environmental perception is updated with the Fermi function, and the strategy is updated with a linear rule. Both rules are widely accepted, and the Fermi function, which stems from discrete choice models, has a certain rationale. Update rules are very important for the model, however, and whether other reasonable update rules could optimize the system and make the model more realistic remains a question for future research.

Research on the evolution of cooperation addresses not only a fundamental problem but also one of practical significance. For example, crowd evacuation has long been an important aspect of public safety. During evacuation, the environment changes faster and becomes more urgent, so its impact on people becomes more pronounced. In addition, the behavior of the evacuating crowd is an important factor affecting evacuation efficiency. Understanding how this behavior evolves is therefore key to solving the evacuation problem, and research on the impact of environmental perception on cooperative behavior can guide its solution.

To conclude, an agent's perception of the environment is an important attribute that affects its behavior and may explain how agents' behavior evolves in evacuation. We hope to investigate environmental perception further in future work.

Methods

Assume that each agent can perceive the environment quantitatively. Let θx (0 ≤ θx ≤ 1) be agent x's quantitative perception of the environment. To study the effect of the environment, we assume that all agents share the same cognition level of a safe environment, namely a constant threshold θth > 0. If agent x's perception satisfies θx > θth, it considers the environment safe; otherwise, it considers the environment dangerous. Note that the smaller θth is, the more dangerous the current environment is considered by agents. Two questions then arise naturally: (1) Can each agent change its perception value when interacting with neighboring agents? (2) How do changes in perception values affect each agent's behavior?

We address these questions by introducing different games for safe and dangerous environments. In a safe environment, agents mostly act on their own and need little help, so Nowak's weak PD game7 is adopted: the temptation to defect is T = b0 > 1, the reward for mutual cooperation is R = 1, and the sucker's payoff S and the punishment for mutual defection P both equal 0. In a dangerous environment, we assume that agents who consider the environment dangerous tend to seek help, so cooperation increases their probability of escape. Hence we set T = b1 < 1, R = 1 and S = P = 0, which defines a harmony game (HG).
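A minimal Python sketch of the two games follows. It assumes strategies are encoded as 1 (C) and 0 (D), and temptation and payoff are hypothetical helper names. The sketch selects the temptation from an agent's perception using the rule θx > θth ('safe') stated above. It then checks that defection is the better reply to a cooperator in the weak PD, while cooperation is the better reply in the HG.

```python
def temptation(theta, theta_th, b0, b1):
    """Hypothetical helper: T for an agent with perception theta, using the rule
    theta > theta_th -> 'safe' (weak PD, T = b0), otherwise 'dangerous' (HG, T = b1)."""
    return b0 if theta > theta_th else b1

def payoff(me, other, T):
    """Payoff to `me` against `other` (1 = C, 0 = D) with R = 1 and S = P = 0."""
    if me == 1:
        return 1.0 if other == 1 else 0.0   # R if both cooperate, else S
    return T if other == 1 else 0.0         # T against a cooperator, else P

b0, b1 = 1.2, 0.9   # b1 = 0.9 as in the simulations; b0 = 1.2 is one of the b values studied
T_safe = temptation(0.8, 0.5, b0, b1)
T_danger = temptation(0.2, 0.5, b0, b1)
# Weak PD: against a cooperator, defecting pays more (T = b0 > R = 1).
print(payoff(0, 1, T_safe) > payoff(1, 1, T_safe))      # True
# Harmony game: against a cooperator, cooperating pays more (R = 1 > T = b1).
print(payoff(1, 1, T_danger) > payoff(0, 1, T_danger))  # True
```

Against a defector, both strategies earn 0 in either game (S = P = 0). The two games therefore differ only in how a defector fares against a cooperator.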

Agents are placed on an L × L regular square lattice with a Moore neighborhood (eight neighbors) and periodic boundary conditions. In both safe and dangerous environments, each agent's perception of the environment and its strategy in the corresponding game are updated through interactions with its neighbors. Specifically, agent x selects its payoff matrix according to its perception of the environment and calculates its total utility Ux by playing the game with all of its neighbors. Then a neighbor y of x is selected at random, and if Uy > Ux, x updates its perception by
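
\[
\theta_x(k+1)=\begin{cases}
\theta_x(k)+\delta, & \text{if } \theta_x(k)>\theta_{th} \text{ and } \theta_y(k)>\theta_{th},\\
\theta_x(k)-\delta, & \text{if } \theta_x(k)<\theta_{th} \text{ and } \theta_y(k)<\theta_{th},\\
\theta_y(k) \text{ with probability } \dfrac{1}{1+\exp\left[(\Delta_x-\Delta_y)/\mathit{tt}\right]}, \text{ else } \theta_x(k), & \text{otherwise},
\end{cases}
\tag{1}
\]
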
where δ ∈ [0, 1] is the learning rate, Δx = |θx − θth| and Δy = |θy − θth|. According to the first and second equations of Eq. (1), if the perceptions of x and y are both greater than the threshold, both agents consider the current environment safe, and θx increases by a small step δ. If θx and θy are both less than the threshold, both agents consider the environment dangerous, and θx decreases by δ. Under this tag-based mechanism36, a pair of agents with the same cognition strengthen their perceptions. The perception is kept within [0, 1]: θx(k + 1) is set to 1 if θx(k + 1) ≥ 1 and to 0 if θx(k + 1) ≤ 0. If the two perceptions are opposite, that is, one agent thinks the environment is safe and the other thinks it is dangerous, x copies the perception of y with a certain probability. The noise of this Fermi dynamics is denoted tt in Eq. (1) and equals the maximum distance between θ and θth. In this case, we compute the distances Δx and Δy of the two perceptions from the threshold and take their difference. Because |Δx − Δy| never exceeds tt, the argument of the exponential lies between −1 and 1. Thus an agent whose cognition differs from another's may change its mind.

The third equation of Eq. (1) uses the Fermi function39 with noise tt to calculate the copy probability. Under this rule, the exponential term is determined by the difference between Δx and Δy, the distances of the perceptions of agents x and y from the threshold θth. The probability that x learns from y is therefore governed mainly by this difference. If Δx > Δy, the probability is less than 0.5, so x is not easily persuaded by y. If Δx < Δy, the probability of x learning from y exceeds 0.5, and if Δx = Δy, it is exactly 0.5.
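The perception update of Eq. (1) can be sketched in Python as below. This is an illustrative reading of the rules described above, not the authors' implementation. The function names are hypothetical. The noise tt is assumed to be max(θth, 1 − θth), which is our interpretation of "the maximum distance between θ and θth" for perceptions in [0, 1].

```python
import math
import random

def copy_probability(theta_x, theta_y, theta_th):
    """Fermi probability that x adopts y's perception when they disagree."""
    dx, dy = abs(theta_x - theta_th), abs(theta_y - theta_th)
    tt = max(theta_th, 1.0 - theta_th)  # assumed: largest possible |theta - theta_th| on [0, 1]
    return 1.0 / (1.0 + math.exp((dx - dy) / tt))

def update_perception(theta_x, theta_y, theta_th, delta, rng=random):
    """One update of x's perception after meeting a more successful neighbor y."""
    if theta_x > theta_th and theta_y > theta_th:
        new = theta_x + delta               # same side (above threshold): reinforce
    elif theta_x < theta_th and theta_y < theta_th:
        new = theta_x - delta               # same side (below threshold): reinforce
    elif rng.random() < copy_probability(theta_x, theta_y, theta_th):
        new = theta_y                       # opposite sides: copy y's perception
    else:
        new = theta_x                       # opposite sides: keep own perception
    return min(1.0, max(0.0, new))          # keep theta in [0, 1]

print(update_perception(0.80, 0.90, 0.5, 0.001))  # about 0.801
print(copy_probability(0.6, 0.1, 0.5))             # > 0.5: x is near the threshold, y is far from it
```

In this sketch, agents on the same side of θth drift away from it by δ. Disagreeing agents resolve the conflict in favor of whichever perception lies farther from the threshold, more often than not.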

Finally, agent x updates its strategy by comparing its utility with that of y. If Ux > Uy, x keeps its own strategy. If Uy > Ux, x copies the strategy of y with a certain probability16:

where sx and sy denote the strategies of agents x and y, respectively.
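The exact form of the linear strategy-update rule is not given in the text above, so the following Python sketch only assumes one common linear form. When Uy > Ux, x imitates y with probability (Uy − Ux)/D, where the hypothetical normalizer D = k·max(b0, 1) is the largest possible payoff gap on a lattice of degree k. Treat both the function update_strategy and this choice of D as assumptions.

```python
import random

def update_strategy(s_x, s_y, U_x, U_y, k, b0, rng=random):
    """Strategy update of x given a randomly chosen neighbor y (1 = C, 0 = D).

    Hypothetical linear rule: if U_y > U_x, copy y with probability
    (U_y - U_x) / D, where D = k * max(b0, 1) bounds the payoff gap on a
    degree-k lattice with R = 1, S = P = 0 and temptations b1 < 1 and b0.
    """
    if U_y <= U_x:
        return s_x                          # x is at least as successful: keep
    D = k * max(b0, 1.0)
    w = (U_y - U_x) / D                     # grows linearly with the payoff gap
    return s_y if rng.random() < w else s_x

# Usage: a payoff gap of half the maximum should lead to imitation about half the time.
rng = random.Random(3)
copies = sum(update_strategy(1, 0, 0.0, 4.8, 8, 1.2, rng) == 0 for _ in range(10000))
print(copies / 10000)
```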

The simulations are carried out within the Monte Carlo framework. Initially, agents are divided equally into cooperators and defectors, and environmental perceptions are assigned at random, sampled uniformly from [0, 1]. An agent's perception determines its payoff matrix and is affected by its more successful neighbors. We use asynchronous updating: at each time step, an agent x is selected at random, and its strategy and perception are updated simultaneously according to the above rules. Each run lasts 61,000 Monte Carlo steps. To reduce the number of parameters, only θth and b0 are varied; we set L = 100, b1 = 0.9 and the learning rate δ = 0.001.
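The initialization and the quantities recorded in the simulations can be sketched in Python as follows. The helpers initialize, fractions and pick_agent are hypothetical. Here s is computed with the rule θx > θth ('safe') stated earlier in this section. Perceptions are drawn with Python's random.random(), which samples [0, 1).

```python
import random

def initialize(L, rng):
    """Random initial state: exactly half cooperators (1) and half defectors (0),
    with each perception drawn uniformly at random."""
    n = L * L
    flat = [1] * (n // 2) + [0] * (n - n // 2)
    rng.shuffle(flat)                                   # random placement on the lattice
    strategy = [flat[r * L:(r + 1) * L] for r in range(L)]
    theta = [[rng.random() for _ in range(L)] for _ in range(L)]
    return strategy, theta

def fractions(strategy, theta, theta_th):
    """Return (c, s): fraction of cooperators and of agents with theta > theta_th,
    i.e. agents reading the environment as safe under the rule stated in the Methods."""
    n = sum(len(row) for row in strategy)
    c = sum(sum(row) for row in strategy) / n
    s = sum(t > theta_th for row in theta for t in row) / n
    return c, s

def pick_agent(L, rng):
    """Asynchronous updating: pick one lattice site uniformly at random."""
    return rng.randrange(L), rng.randrange(L)

rng = random.Random(2018)
strategy, theta = initialize(100, rng)   # L = 100 as in the simulations
c, s = fractions(strategy, theta, theta_th=0.6)
print(c, round(s, 2))                      # c is exactly 0.5; s is close to 1 - 0.6
```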

By varying the cognition level θth, we observe how the fraction of cooperators and the fraction of agents who consider the environment safe change. To reduce fluctuations, each simulation is repeated 10 times and the final results are averaged.

References

Maynard Smith, J. & Price, G. R. The logic of animal conflict. Nature 246, 15–18 (1973).

Maynard Smith, J. Evolution and the Theory of Games. (Cambridge University Press, 1982).

Weibull, J. W. Evolutionary Game Theory. (MIT Press, 1995).

Gintis, H. Game Theory Evolving. (Princeton University Press, 2000).

Nowak, M. A. Evolutionary Dynamics. (Harvard University Press, 2006).

Axelrod, R. & Hamilton, W. D. The evolution of cooperation. Science 211, 1390–1396 (1981).

Nowak, M. A. & May, R. M. Evolutionary games and spatial chaos. Nature 359, 826–829 (1992).

Watts, D. J. & Strogatz, S. H. Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).

Santos, F. C., Rodrigues, J. & Pacheco, J. M. Epidemic spreading and cooperation dynamics on homogeneous small-world networks. Phys. Rev. E 72, 056128 (2005).

Wu, Z. X., Guan, J. Y., Xu, X. J. & Wang, Y. H. Evolutionary prisoner’s dilemma game on Barabási-Albert scale-free networks. Physica A 379, 672–680 (2007).

Santos, F. C., Pacheco, J. M. & Lenaerts, T. Evolutionary dynamics of social dilemmas in structured heterogeneous populations. Proc. Natl. Acad. Sci. USA 103, 3490–3494 (2006).

Li, X., Wu, Y., Rong, Z., Zhang, Z. & Zhou, S. The prisoner’s dilemma in structured scale-free networks. J. Phys. A: Math. Theor. 42, 245002 (2009).

Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

Perc, M. & Szolnoki, A. Coevolutionary games: a mini review. BioSystems 99, 109–125 (2010).

Zimmermann, M. G., Eguíluz, V. M. & San Miguel, M. Coevolution of dynamical states and interactions in dynamic networks. Phys. Rev. E 69, 065102(R) (2004).

Huang, K., Zheng, X., Li, Z. & Yang, Y. Understanding cooperative behavior based on the coevolution of game strategy and link weight. Sci. Rep. 5, 14783 (2015).

Pacheco, J. M., Traulsen, A. & Nowak, M. A. Active linking in evolutionary games. J. Theor. Biol. 243, 437–443 (2006).

Santos, F. C. & Pacheco, J. M. Cooperation prevails when individuals adjust their social ties. PLoS Comput. Biol. 2, 1284–1290 (2006).

Pacheco, J. M., Traulsen, A., Ohtsuki, H. & Nowak, M. A. Repeated games and direct reciprocity under active linking. J. Theor. Biol. 250, 723–731 (2008).

Poncela, J., Gómez-Gardeñes, J., Floría, L. M., Sánchez, A. & Moreno, Y. Complex cooperative networks from evolutionary preferential attachment. PLoS ONE 3, e2449 (2008).

Poncela, J., Gómez-Gardeñes, J., Traulsen, A. & Moreno, Y. Evolutionary game dynamics in a growing structured population. New J. Phys. 11, 083031 (2009).

Helbing, D. & Yu, W. Migration as a mechanism to promote cooperation. Adv. Complex Syst. 11, 641–652 (2008).

Helbing, D. & Yu, W. The outbreak of cooperation among success-driven individuals under noisy conditions. Proc. Natl. Acad. Sci. USA 106, 3680–3685 (2009).

Majeski, S. J., Linden, G., Linden, C. & Spitzer, A. Agent mobility and the evolution of cooperative communities. Complexity 5, 16–24 (1999).

Szolnoki, A., Perc, M., Szabó, G. & Stark, H.-U. Impact of aging on the evolution of cooperation in the spatial prisoner’s dilemma game. Phys. Rev. E 80, 021901 (2009).

McNamara, J. M., Barta, Z., Fromhage, L. & Houston, A. I. The coevolution of choosiness and cooperation. Nature 451, 189–192 (2008).

Wang, Z., Szolnoki, A. & Perc, M. Different perceptions of social dilemmas: Evolutionary multigames in structured populations. Phys. Rev. E 90, 032813 (2014).

Chen, X. & Wang, L. Promotion of cooperation induced by appropriate payoff aspirations in a small-world networked game. Phys. Rev. E 77, 017103 (2008).

Wu, Z. X. & Rong, Z. Boosting cooperation by involving extortion in spatial prisoner’s dilemma games. Phys. Rev. E 90, 062102 (2014).

Traulsen, A. & Claussen, J. C. Similarity-based cooperation and spatial segregation. Phys. Rev. E 70, 046128 (2004).

Szolnoki, A. & Perc, M. Coevolutionary success-driven multigames. Europhys. Lett. 108, 28004 (2014).

Guo, H. et al. Environment promotes the evolution of cooperation in spatial voluntary prisoner’s dilemma game. Appl. Math. Comput. 315, 47–53 (2017).

Perc, M. et al. Statistical physics of human cooperation. Phys. Rep. 687, 1–51 (2017).

Jin, J. H., Shen, C., Chu, C. & Shi, L. Incorporating dominant environment into individual fitness promotes cooperation in the spatial prisoner’s dilemma game. Chaos Solitons Fractals 96, 70–75 (2017).

Perc, M. Chaos promotes cooperation in the spatial prisoner’s dilemma game. Europhys. Lett. 75, 841 (2006).

Riolo, R. L., Cohen, M. D. & Axelrod, R. Evolution of cooperation without reciprocity. Nature 414, 441–443 (2001).

Deffuant, G., Neau, D. & Amblard, F. Mixing beliefs among interacting agents. Adv. Complex Syst. 3, 87–98 (2000).

Hegselmann, R. & Krause, U. Truth and cognitive division of labour: First steps towards a computer aided social epistemology. J. Artif. Soc. Soc. Simul. 9, 10 (2006).

Szabó, G. & Tőke, C. Evolutionary prisoner’s dilemma game on a square lattice. Phys. Rev. E 58, 69–73 (1998).

Acknowledgements

This work was supported by the National Key R&D Program of China (Grant Nos. 2018YFC0809300, 2016YFC0801300 and 2016YFC0801200) and NSFC project under No. 61473164.

Author information


Contributions

Z.D., M.C., X.Z. and Y.C. designed and performed the research as well as wrote the article.


Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dong, Z., Chen, M., Cheng, Y. et al. Coevolution of Environmental Perception and Cooperative Behavior in Evacuation Crowd. Sci Rep 8, 16311 (2018). https://doi.org/10.1038/s41598-018-33798-w
